
We already know that the Large Hadron Collider (LHC) will be the biggest, most expensive physics experiment ever carried out by mankind. Colliding relativistic particles at previously unimaginable energies (up to the 14 TeV mark by the end of the decade) will generate millions of particles, both known and as yet undiscovered, that must be tracked and characterized by huge particle detectors. This historic experiment will require a massive data collection and storage effort, rewriting the rules of data handling. Every five seconds, LHC collisions will generate the equivalent of a DVD’s worth of data, a production rate of roughly one gigabyte per second. To put this into perspective, an average household computer with a very good connection may be able to download data at one or two megabytes per second (if you are very lucky! I get 500 kilobytes per second). So LHC engineers have designed a new kind of data handling method that can store and distribute petabytes (millions of gigabytes) of data to LHC collaborators worldwide (without anyone getting old and grey whilst waiting for a download).
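For anyone who wants to check those numbers, here is a quick back-of-the-envelope sketch in Python. It assumes that “a DVD’s worth” means a single-layer DVD of 4.7 GB and uses decimal units throughout (1 GB = 10^9 bytes); the household download rates are the ones quoted above.

```python
# Back-of-the-envelope data-rate arithmetic, in decimal (SI) units.
# Assumption: "a DVD's worth" means one single-layer DVD of 4.7 GB.
DVD_BYTES = 4.7e9
SECONDS_PER_DVD = 5
GIGABYTE = 1e9
PETABYTE = 1e15

# LHC output in bytes per second: one DVD every five seconds.
lhc_rate = DVD_BYTES / SECONDS_PER_DVD

# Household download rates quoted in the text, in bytes per second.
home_rates = {"very good (2 MB/s)": 2e6, "mine (500 kB/s)": 500e3}

print(f"LHC output: {lhc_rate / GIGABYTE:.2f} GB/s")
for label, rate in home_rates.items():
    # Seconds a home user needs to download ONE second of LHC output.
    print(f"{label}: {lhc_rate / rate:,.0f} s per second of LHC data")
print(f"1 PB = {PETABYTE / GIGABYTE:,.0f} GB")
```

At 500 kB/s, that works out to about 1,880 seconds (roughly half an hour) of downloading for every second of LHC running.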

In 1990, the European Organization for Nuclear Research (CERN) revolutionized the way we live. The previous year, Tim Berners-Lee, a CERN physicist, had written a proposal for electronic information management. He put forward the idea that information could be transferred easily over the Internet using something called “hypertext.” As time went on, Berners-Lee and collaborator Robert Cailliau, a systems engineer also at CERN, pieced together a single information network to help CERN scientists collaborate and share information from their personal computers without having to save it on cumbersome storage devices. Hypertext enabled users to browse and share text via web pages using hyperlinks. Berners-Lee then went on to create a browser-editor and soon realised this new form of communication could be shared by vast numbers of people. By May 1990, the CERN scientists were calling this new collaborative network the World Wide Web. In fact, CERN was responsible for the world’s first website, http://info.cern.ch/, and an early example of what this site looked like can be found via the World Wide Web Consortium website.

So CERN is no stranger to managing data over the Internet, but the brand new LHC will require special treatment. As highlighted by David Bader, executive director of high performance computing at the Georgia Institute of Technology, the bandwidth currently available over the Internet is a huge bottleneck, making other forms of data sharing more attractive. “If I look at the LHC and what it’s doing for the future, the one thing that the Web hasn’t been able to do is manage a phenomenal wealth of data,” he said. In other words, it can be easier to save large datasets on terabyte hard drives and send them to collaborators by post. Although CERN addressed the collaborative side of data sharing with the World Wide Web, the data the LHC will generate would easily overload the limited bandwidth available today.
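Bader’s point is easy to check with a little arithmetic. This Python sketch uses decimal units and assumes a connection running flat out with no protocol overhead (both simplifications), at the household rates mentioned earlier.

```python
# Time to push a dataset through a home connection, ignoring protocol
# overhead and assuming the link runs at full speed the whole time.
SECONDS_PER_DAY = 86_400
TERABYTE = 1e12  # bytes, decimal units


def transfer_days(size_bytes: float, rate_bytes_per_s: float) -> float:
    """Days needed to move size_bytes at a sustained rate_bytes_per_s."""
    return size_bytes / rate_bytes_per_s / SECONDS_PER_DAY


for label, rate in [("2 MB/s", 2e6), ("500 kB/s", 500e3)]:
    print(f"1 TB at {label}: {transfer_days(TERABYTE, rate):.1f} days")
```

Roughly six days at 2 MB/s and more than three weeks at 500 kB/s for a single terabyte, which is why posting a hard drive can be the quicker option.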

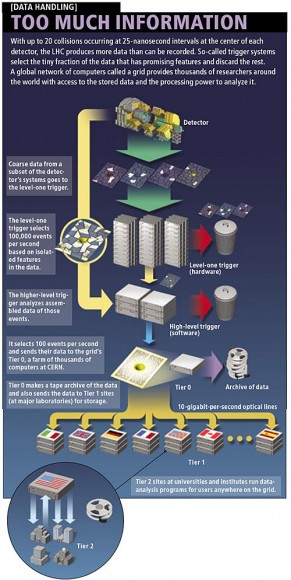

This is why the LHC Computing Grid was designed. The grid handles the LHC’s vast data production in tiers. The first, Tier 0, is located on-site at CERN near Geneva, Switzerland. Tier 0 consists of a huge parallel computer network containing 100,000 advanced CPUs set up to immediately store and manage the raw data (the 1s and 0s of binary code) pumped out by the LHC. It is worth noting at this point that not all particle collisions will be detected by the sensors; only a very small fraction can be captured. Even so, that comparatively small number of detected particles still translates into a huge output.

Tier 0 distributes portions of this output by blasting it through dedicated 10 gigabit-per-second fibre-optic lines to 11 Tier 1 sites across North America, Asia and Europe. This allows collaborators such as the scientists at Brookhaven National Laboratory in New York, home of the Relativistic Heavy Ion Collider (RHIC), to analyse data from the ALICE experiment, comparing results from the LHC’s lead-ion collisions with their own heavy-ion collision results.
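A quick sanity check on those fibre links, as a Python sketch. It assumes each of the 11 Tier 1 sites has its own dedicated 10 Gbit/s line, ignores protocol overhead, and reuses the roughly 1 GB/s output figure from earlier.

```python
BITS_PER_BYTE = 8
LINK_GBIT_PER_S = 10       # one dedicated fibre line, from the text
TIER1_SITES = 11           # number of Tier 1 sites, from the text
LHC_OUTPUT_GB_PER_S = 1.0  # approximate raw output rate, from the text

# Convert gigabits to gigabytes: 10 Gbit/s -> 1.25 GB/s per line.
link_gb_per_s = LINK_GBIT_PER_S / BITS_PER_BYTE

# Assumption: one line per Tier 1 site, all running in parallel.
aggregate_gb_per_s = link_gb_per_s * TIER1_SITES

print(f"One line:  {link_gb_per_s:.2f} GB/s "
      f"({link_gb_per_s / LHC_OUTPUT_GB_PER_S:.2f}x the LHC output)")
print(f"All lines: {aggregate_gb_per_s:.2f} GB/s")
```

On paper, then, a single line could carry the whole raw stream, although in practice each Tier 1 site receives only a portion of it.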

From the Tier 1 sites, datasets are packaged and sent to 140 Tier 2 computer networks located at universities, laboratories and private companies around the world. It is at this point that scientists will have access to the datasets and can convert the raw binary code into usable information about particle energies and trajectories.
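The tier structure is essentially a tree. Here is a toy Python model of it using the site counts from the article (one Tier 0, 11 Tier 1, 140 Tier 2). The site names are made up, and spreading Tier 2 sites round-robin across the Tier 1 sites is purely an assumption for illustration; the article does not say how they are actually assigned.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Site:
    name: str
    tier: int
    children: list["Site"] = field(default_factory=list)


def build_grid(n_tier1: int = 11, n_tier2: int = 140) -> Site:
    """Build a toy three-tier tree (hypothetical site names).

    Assumption: Tier 2 sites are attached round-robin to Tier 1 sites.
    """
    tier0 = Site("Tier0-CERN", 0)
    tier0.children = [Site(f"Tier1-{i}", 1) for i in range(n_tier1)]
    for j in range(n_tier2):
        parent = tier0.children[j % n_tier1]
        parent.children.append(Site(f"Tier2-{j}", 2))
    return tier0


def count_by_tier(root: Site) -> dict[int, int]:
    """Walk the tree depth-first and count the sites at each tier."""
    counts: dict[int, int] = {}
    stack = [root]
    while stack:
        site = stack.pop()
        counts[site.tier] = counts.get(site.tier, 0) + 1
        stack.extend(site.children)
    return counts


def path_to(root: Site, target: str) -> Optional[list[str]]:
    """Route data would take from Tier 0 down to the named site."""
    if root.name == target:
        return [root.name]
    for child in root.children:
        sub = path_to(child, target)
        if sub is not None:
            return [root.name] + sub
    return None


grid = build_grid()
print(count_by_tier(grid))
print(path_to(grid, "Tier2-25"))
```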

The tier system is all well and good, but it wouldn’t work without highly efficient software known as “middleware.” When trying to access data, a user may want information that is spread across petabytes of data on different servers in different formats. An open-source middleware platform called Globus will have the huge responsibility of gathering the required information seamlessly, as if it were already sitting on the researcher’s computer.
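To give a feel for what such middleware does, here is a deliberately simplified Python sketch. It is not Globus and uses no Globus API: the catalogue, the site names and the `toy_fetch` function are all hypothetical stand-ins. It shows the core idea described above: look up where each piece of a dataset lives, fetch it from whichever replica is reachable, and hand the researcher one local result.

```python
from typing import Callable

# Hypothetical types: a catalogue maps logical file names to the sites that
# hold a replica, and a fetcher downloads one file from one site.
Catalog = dict[str, list[str]]
Fetcher = Callable[[str, str], bytes]  # (site, logical_name) -> contents


def files_in(dataset: str, catalog: Catalog) -> list[str]:
    """Logical file names belonging to a dataset (simple prefix match)."""
    return sorted(name for name in catalog if name.startswith(dataset + "/"))


def read_dataset(dataset: str, catalog: Catalog, fetch: Fetcher,
                 preferred: tuple[str, ...] = ()) -> bytes:
    """Gather every part of a dataset into one local byte string.

    Preferred sites are tried first; if a site is unreachable, the next
    replica is used, so the caller never needs to know where data lived.
    """
    chunks = []
    for name in files_in(dataset, catalog):
        # sorted() is stable: preferred sites first, then catalogue order.
        sites = sorted(catalog[name], key=lambda s: s not in preferred)
        for site in sites:
            try:
                chunks.append(fetch(site, name))
                break
            except OSError:
                continue  # replica unreachable: try the next site
        else:
            raise FileNotFoundError(f"no reachable replica for {name}")
    return b"".join(chunks)


# Toy in-memory "grid" for demonstration; all names are made up.
CATALOG: Catalog = {
    "run42/part0": ["T1-A", "T2-7"],
    "run42/part1": ["T1-B"],
    "run42/part2": ["T2-3"],
}
STORE = {
    ("T1-A", "run42/part0"): b"AAA",
    ("T2-7", "run42/part0"): b"AAA",
    ("T1-B", "run42/part1"): b"BBB",
    ("T2-3", "run42/part2"): b"CCC",
}
DOWN = {"T1-A"}  # pretend this site is offline


def toy_fetch(site: str, name: str) -> bytes:
    if site in DOWN:
        raise ConnectionError(f"{site} is unreachable")
    return STORE[(site, name)]


print(read_dataset("run42", CATALOG, toy_fetch, preferred=("T1-A",)))
```

Even though the preferred site `T1-A` is offline, the sketch quietly falls back to the copy at `T2-7`, which is the kind of transparency the article attributes to middleware.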

It is this combination of the tier system, fast connections and ingenious software that could be expanded beyond the LHC project. In a world where everything is becoming “on demand,” this kind of technology could make the Internet transparent to the end user. There would be instant access to everything from data produced by experiments on the other side of the planet to high-definition movies, with no waiting for the download progress bar. Much like Berners-Lee’s invention of HTML, the LHC Computing Grid may revolutionize how we use the Internet.

Sources: Scientific American, CERN

Certainly a lot going on in the world of technology.

When the Large Hadron Collider ramps up to full power, who knows what nasty critters it will unleash, not to mention black holes, the God particle, and the 5th dimension.

Then we have quantum computing, and the internet supposedly ending in 2012.

So much happening, it’s no wonder we simple humans struggle to stay on top of things.

I wonder if in 15 years we will look back at even “sexy” sites full of Flash and JavaScript and deem them as primitive as the text-only page from 1992…

So with a gigabit network at home, I and other geeks are only 10 times slower than the LHC Tier 1 network. And 10 Gbps is just the next step in our networking. CERN isn’t as far ahead as I had thought. Well, they have the LHC and stuff. But still. :)

Don’t want to appear maths-stupid, but can someone tell me: if events occur every 20/25 ns, what percentage of them is recorded if the recording rate is 100,000 per second?

BTW, my three teenage math students are not home at the moment, so it would be great if someone could reply with an answer so that I can ask them and see what they come up with.

Personal email reply if you wish dencot1@optusnet .com.au

I don’t want to hear any myths about CERN creating black holes and exotic stuff like that, because they are myths… The Earth gets bombarded with particles of much higher energy than CERN will achieve… and nothing happens but an extensive air shower… Cosmic rays were also the first means we had of observing high-energy physics…

Here is another tidbit for all those who are under the delusion that CERN will destroy the Earth… CERN’s charge current can’t even power a light bulb in your house… not even one of those nice “green” CFLs…

“The LHC will enable us to study in detail what nature is doing all around us,” said CERN Director General Robert Aymar. “The LHC is safe, and any suggestion that it might present a risk is pure fiction.” That is from the CERN website… and I am a physicist, so I am not taking this naively.

Who wants me to make another rap song about this?

A black hole in France might be considered “the coolest science mistake ever.” But rather than Red Lectroids from the 8th Dimension coming through the LHC, let’s think for a moment about something far more frightening: the real negatives of this “super-network.” The first group to co-opt this technology will be the world’s governments, to monitor all of our emails. If we accept that, then we are simply promoting a truly invasive Big Brother as the price of faster downloads of cheap entertainment. So what very un-free future are we building for ourselves in our blind drive for convenience? Likewise, just as airplanes were used as missiles, the internet can easily be turned against itself, and it is just waiting for opportunities. The faster the network, the more devastating and widespread the damage it can cause. The internet is quickly becoming our “greatest and blindest vulnerability ever.” Beware of getting too infatuated with or dependent on this “advance,” and resist letting ourselves become a hive society.

I suspect that several theoretical particles will eventually be identified. Also, I can’t help believing that any particle, regardless of how small it may be, can be broken down into smaller and smaller pieces. Which raises the question: is there a theoretical finite minimum size for atomic particles? If so, how small might the mass number be?

Quoting Kate McAlpine (September 5th, 2008 at 8:24 am):

“who wants me to make another rap song about this?”

>>> Me.

The article seems obfuscated. It talks about the LHC-specific distribution architecture and how large its pipes are, but fails to discuss the managing application (Globus) and the new protocol it claims would “revolutionize” the Internet. It doesn’t even give any possible real-world applications of, or parallels to, its distribution architecture.

So many words, so little substance.

IT MIGHT REVOLUTIONIZE THE INTERNET IF IT DOESN’T KILL US ALL FIRST

IT’S MORE LIKELY TO REVOLUTIONIZE A BLACK HOLE SWALLOWING US ALL UP

Just kidding, of course.

“Don’t want to appear maths-stupid, but can someone tell me: if events occur every 20/25 ns, what percentage of them is recorded if the recording rate is 100,000 per second?

BTW, my three teenage math students are not home at the moment, so it would be great if someone could reply with an answer so that I can ask them and see what they come up with.”

I believe that would be… only 0.25% of the data being created actually gets recorded.

25 ns = 0.000000025 of a second

1 second / 0.000000025 = 40,000,000 times a second

Data is produced 40 million times a second. If we can only record it 100,000 times a second, that’s a ratio of 400 to 1, which is 0.25%. 🙂

Anyone verify?
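For anyone who wants to verify the arithmetic, here is a short Python check. Like the reply above, it assumes one event per bunch crossing and takes 100,000 per second as the recording rate given in the question; it also covers the 20 ns case from the original question.

```python
NS_PER_SECOND = 1e9


def recorded_fraction(spacing_ns: float, recorded_per_s: float) -> float:
    """Fraction of events recorded, assuming one event every spacing_ns."""
    produced_per_s = NS_PER_SECOND / spacing_ns
    return recorded_per_s / produced_per_s


for spacing in (25, 20):
    f = recorded_fraction(spacing, 100_000)
    print(f"{spacing} ns: 1 in {1 / f:,.0f} recorded = {f:.2%}")
```

So 25 ns gives 1 in 400 (0.25%) and 20 ns gives 1 in 500 (0.20%). As a later reply points out, the 100,000 figure in the article is a CPU count rather than a recording rate, so this only checks the arithmetic of the question as posed.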

To Likes Math Problems:

Yikes bro lol, I wish I could do that.

But yeah, I don’t think Earth will be doomed by this thing. I mean, come on: for starters, we’ve been through this once with the RHIC; secondly, there are solar beams and shit like that way, way more powerful than what we are using… sooooo yeah: 1. We’ve got experience. 2. The universe has already done it.

Likes Math Problem: the math is sound, but the question posed isn’t.

Where does it say that we can record 100,000 times a second? It says 100,000 “advanced CPUs.”

Also, what happens every 25ns is a collision. What’s to say every collision would be only 1 data point and not several ?

Finally, from what I understand from this article and others I’ve seen on the topic, I think the bottleneck here is more about how many we can DETECT than how many we can RECORD, or even process for that matter.

If I got one of these – could I eventually rule the world?

There are still risks of a black hole… It is possible that cosmic rays move so quickly as not to slow down enough in a collision to make one. They might, however, under these conditions. I personally don’t think it will happen, but we would be stupid to assume it couldn’t. Before exploding the first atomic weapon, scientists speculated whether we would die of an endless radiation well or the ignition of our atmosphere. For these CBHs to exist, 8 points must be taken into consideration. The first is the alleged disproof of black hole evaporation. The other 7 points can be found here: http://www.lhcfacts.org/ . Note, I don’t share the fear of this author; even if it all goes bad, we won’t have more than 50 months to live, it would probably go from all right to all dead pretty quickly, and we would be fine until the end, unless they are stupid enough to tell us it’s happening and not let us die in blissful ignorance…