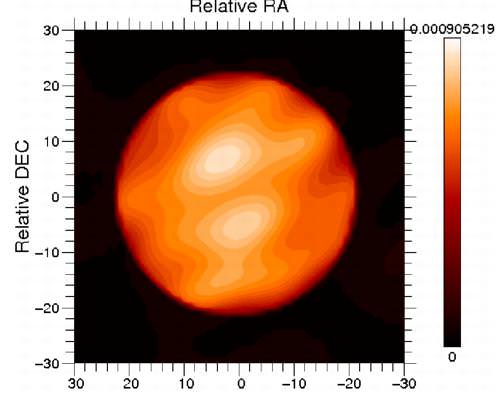

Caption: The surface of Betelgeuse in the near infrared at a wavelength of 1.64 microns, obtained with the IOTA interferometer (Arizona). The image has been reconstructed with two different algorithms, which yield the same details at a scale of 9 milliarcseconds (mas). The star's diameter is about 45 milliarcseconds. Credit: Copyright 2010 Haubois / Perrin (LESIA, Observatoire de Paris)

An international team of astronomers has obtained an unprecedented image of the surface of the red supergiant Betelgeuse, in the constellation Orion. The image reveals the presence of two giant bright spots, which cover a large fraction of the surface. Their size is equivalent to the Earth-Sun distance. This observation provides the first strong and direct indication of the presence of the convection phenomenon, transport of heat by moving matter, in a star other than the Sun. This result provides a better understanding of the structure and evolution of supergiants.

Betelgeuse is a red supergiant located in the constellation of Orion, and is quite different from our Sun. First, it is a huge star. If it were at the center of our Solar System, it would extend to the orbit of Jupiter. At 600 times larger than our Sun, it radiates approximately 100,000 times more energy. Additionally, at an age of only a few million years, Betelgeuse is already nearing the end of its life and is doomed to explode soon as a supernova. When it does, the supernova should be easily seen from Earth, even in broad daylight.

But we now know Betelgeuse has some similarities to the Sun, as it also has spots on its surface. The surface has bright and dark spots, which are actually hot and cold regions of the star. The spots appear due to convection, i.e., the transport of heat by currents of matter. This phenomenon can be observed every day in boiling water. On the surface of the Sun, these spots are rather well known and visible. However, this is not at all the case for other stars, and in particular for supergiants. The size, physical characteristics, and lifetime of these dynamical structures remain unknown.

Betelgeuse is a good target for interferometry because its size and brightness make it easier to observe. Using the three telescopes of the Infrared Optical Telescope Array (IOTA) interferometer on Mount Hopkins in Arizona (since removed) simultaneously, the astronomers, working with the Paris Observatory (LESIA), were able to obtain numerous high-precision measurements. These made it possible to reconstruct an image of the star's surface using two algorithms and computer programs.

Two different algorithms gave the same image. One was created by Eric Thiebaut from the Astronomical Research Center of Lyon (CRAL) and the other was developed by Laurent Mugnier and Serge Meimon from ONERA. The final image reveals the star's surface in unprecedented detail. Two bright spots clearly show up near the center of the star.

The analysis of the brightness of the spots shows a variation of 500 degrees compared to the average temperature of the star (3,600 Kelvin). The larger of the two structures has a dimension equivalent to a quarter of the star's diameter (or one and a half times the Earth-Sun distance). This marks a clear difference from the Sun, where the convection cells are much finer and barely reach 1/20th of the solar radius (a few Earth radii). These characteristics are compatible with the idea of luminous spots produced by convection. These results constitute a first strong and direct indication of the presence of convection on the surface of a star other than the Sun.
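The figures quoted above can be checked with a little arithmetic. The sketch below makes two assumptions that are not stated in the article: that the spots radiate like ideal blackbodies, and that the small-angle relation (size in AU = angle in arcseconds × distance in parsecs) applies. The 45 mas diameter comes from the image caption; the function names are purely illustrative.

```python
import math

# Physical constants in SI units.
H = 6.62607015e-34    # Planck constant, J s
C = 2.99792458e8      # speed of light, m/s
K_B = 1.380649e-23    # Boltzmann constant, J/K

def implied_distance_pc(size_au, angle_mas):
    """Distance (parsecs) at which an angle in mas spans size_au (small-angle relation)."""
    return size_au / (angle_mas / 1000.0)

def planck_ratio(wavelength_m, t_hot, t_cool):
    """Ratio of blackbody intensity at t_hot to that at t_cool, at one wavelength."""
    x = H * C / (wavelength_m * K_B)
    return math.expm1(x / t_cool) / math.expm1(x / t_hot)

# A quarter of the 45 mas stellar diameter, said to equal 1.5 Earth-Sun distances.
spot_angle_mas = 45.0 / 4.0
distance_pc = implied_distance_pc(1.5, spot_angle_mas)

# A spot 500 K hotter than the 3,600 K average, observed at 1.64 microns.
contrast = planck_ratio(1.64e-6, 3600.0 + 500.0, 3600.0)

print(f"distance implied by the quoted sizes: {distance_pc:.0f} pc")
print(f"blackbody brightness ratio of a hot spot: {contrast:.2f}")
```

Under these assumptions, the quoted sizes imply a distance of roughly 133 parsecs, and a spot 500 K hotter than its surroundings would appear about 1.4 times brighter at this wavelength. Both numbers are inferences from the article's figures, not values reported by the authors.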

Convection could play an important role in explaining the mass-loss phenomenon and the gigantic plume of gas that is expelled from Betelgeuse. The latter was discovered by a team led by Pierre Kervella from Paris Observatory (read our article about this discovery). Convection cells are potentially at the origin of the hot gas ejections.

The astronomers say this new discovery provides new insights into supergiant stars, opening up a new field of research.

Sources: Abstract: arXiv. Paper: “Imaging the spotty surface of Betelgeuse in the H band,” 2009, A&A, 508, 923. Paris Observatory

wow.

Looking briefly at the paper, if I understand correctly, there’s a lot of a-priori information that underlies the Interferometer data. This doesn’t make it any less “wow”, and the caption under the image is very clear, but this is not quite an “image” as much as a reconstructed model.

An “image” implies that the amount of information present is comparable to the resolution of the image, whereas here there’s a lot less information – the star is shown as round only because the model is round – the instrument can’t tell that.

Proof that global warming is caused by sunspots on Beetlegeuse! ROFL.

This is so cool. I am curious whether they can do even better.

@CrazyEddieBlogger, but if 2 different techniques create the same image that does mean that it is very likely to be correct.

To test if the star is round they will have to do many experiments. A star will likely wobble if it is not a perfect sphere.

Er, how do we measure the amount of information in these two cases so that we can compare them?

An image can most often be compressed without losing much information. (And the remainder is a true measure of the information contained in the image, btw. Dunno how to measure information contained in resolution though, assuming there is any apart from the information in a numerical estimate.)

Similarly a 2D image has lost some information, as it is “a compression” of the 3D image. It is also a model of the true 3D image that we can’t see from our unique viewpoint. (As we lack the ability to surround and capture all image information.)

Perspective and color (a fact for both true and false color images by way of our eye construction), and the way we reconstruct the 3D image from it, are yet other models. So we already accept models as images, it seems to me.

Colloquially an image is a(ny) visual representation.

All these issues make me think that Nancy didn’t do anything wrong, and perhaps did it precisely, by referring to visual representations of models as images.

well, looking at the article, you can see what the source information is. There are several localized intensity points, and from that they did a best fit. The amount of information (or degrees of freedom, loosely applied) is small, so the more information you put into the model, the nicer picture you get…

They even tried fitting different numbers of hot spots to the data, to see which correlates best. They assumed a round star with a dark limb – perfectly safe, especially since there are other observations of this star. If we already know its diameter, and if it helps interpret the data – it’s perfectly ok.

Also, the human brain works by visualizing. I get a much bigger kick out of looking at the nice visual than at the interferometer data… call me crazy…

All I said, though, is that if you don’t remember that this image is just a reconstruction of several data points, overlaid on a model, then you’d be tempted to think that it implies things that it really doesn’t. For example – the sharpness of the edges of the white spots might be more a function of the model than of the data. (I said “might” – I didn’t understand too well how rich the data set is).

The fact that two techniques arrive at the same picture is only a sanity check, or a checksum – if the two techniques used the same model, they should paint the same picture.

If you took a bunch of measurements of a star and a planet, and then rendered a model based on that information, you wouldn’t call it an “image”, right? It would be a graphical representation of a data set.

I’m only splitting hairs. I would apply the word “image” to a graphical (bitmap) representation of a dataset where the amount of information present in the bitmap is comparable to the amount of information present in the dataset. Sort of like what you get when you take a picture, even if you enhance it and tinker with it.

One detail: interferometry is a technique for gathering information from which an image is reconstructed. The result is in no way a normal image, just as radio telescopes do not produce a real visual image snapped with a camera.

Is the mechanism that is believed to drive sunspots akin to the mechanism that powers the Red Spot on Jupiter?

To make an interferometer work you have to reconstruct the image by Fourier transform methods.

LC
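As a rough illustration of the Fourier relationship mentioned above: an interferometer samples the Fourier transform of the brightness distribution on the sky, one value per telescope baseline. The sketch below assumes the star is a uniformly bright disk of 45 mas (the diameter quoted in the image caption) observed at 1.64 microns. The uniform-disk model, the baseline values, and the function names are illustrative assumptions, not the model or data used by the authors.

```python
import math

MAS_TO_RAD = math.pi / (180.0 * 3600.0 * 1000.0)  # milliarcseconds to radians

def bessel_j1(x, steps=2000):
    """Bessel function J1 via its integral form, using the midpoint rule."""
    h = math.pi / steps
    total = 0.0
    for i in range(steps):
        tau = (i + 0.5) * h
        total += math.cos(tau - x * math.sin(tau))
    return total * h / math.pi

def disk_visibility(baseline_m, diameter_mas, wavelength_m):
    """Fourier amplitude of a uniform disk at one baseline: 2*J1(x)/x."""
    x = math.pi * diameter_mas * MAS_TO_RAD * baseline_m / wavelength_m
    if x == 0.0:
        return 1.0  # limit of 2*J1(x)/x as x goes to zero
    return 2.0 * bessel_j1(x) / x

# Hypothetical baselines in metres, chosen only to show the trend.
for baseline in (0.0, 3.0, 6.0, 9.17, 12.0):
    v = disk_visibility(baseline, 45.0, 1.64e-6)
    print(f"baseline {baseline:5.2f} m -> visibility {v:+.3f}")
```

Each baseline yields a single Fourier component, so with only a handful of baselines the image has to be reconstructed from a sparse set of such samples, which is why a model or prior is needed to fill the gaps.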

CrazyEddie,

The two different algorithms are used to turn the interferometric data into a simulated picture. In this case a near-infrared picture. Similar to how your brain turns light wave data it receives from your eyes into a white light picture.

Unfortunately, the interferometric data collected from this project didn’t contain enough near-infrared information to complete the picture. Therefore, to create a picture they used data from an a priori image of Betelgeuse (a hypothetical simulated image of the star in the near-infrared, of which they have quite a few different ones to choose from) to assist in filling in the missing data, which is then plugged into the algorithm.

Hypothetically this doesn’t affect the actual data, since in effect it is just an underlay with the original data placed on top.

The two algorithms then take all the data, and each one turns it into what it believes the object will look like in the near-infrared.

How they choose the a priori image is a whole lecture in itself. In short, when the data is originally received, they run it through quite a few algorithms to “clean up” the data, for instance to compensate for any dust, scatter, etc., and also to “make adjustments” to the data. For example data from the center of the star is going to be brighter and more direct than data from the edge because the object is spherical. If it was a flat surface, all the data would hit directly at the receiver in pretty much the same manner.

When finished… they pick the a priori image which best matches and is best suited to plug into the image algorithms.

@Aodhhan

” For example data from the center of the star is going to be brighter and more direct than data from the edge because the object is spherical. If it was a flat surface, all the data would hit directly at the receiver in pretty much the same manner.”

Wooo something sounds fishy here!

First, it is a star; it emits light over a 360×180 angle, with no bright spot in the center. It is not a planet that reflects light.


Second it lies 640 light years away. This is basically flat viewed from this distance.

@ CEB:

Well, I’m splitting hairs as well.

As far as images go, apparently we can’t agree on what constitutes an image or its information.

I’ve already mentioned that an image for me is “a graphical representation of a data set”, though not any representation. But a fairly photo-realistic rendering, i.e. ray tracing.

And I’m fairly certain that most people would accept a compressed image as an image after rendering. Whereupon of course the decompressed bitmap information amount is comparable to the original amount.

It is the compression that shows how much information the image has however. It is a practical realization of Kolmogorov information (which is the minimal information or maximal compression, impossible to extract from an algorithm).

As for the degree of freedom as information, it can be under certain circumstances. Similarly to Kolmogorov information, the number of parameters (roughly, degrees of freedom) of a model can be the remainder when we have agreed on the model (compression algorithm).

So I see what you mean there now, and I’ll have to agree on that point.
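The idea of using compression as a practical measure of information can be tried directly. The sketch below is only an illustration: zlib is a stand-in for an ideal compressor (its output size is merely an upper bound on the Kolmogorov information), and the two 64×64 test “images” are made up for the purpose, not taken from the Betelgeuse data.

```python
import random
import zlib

SIZE = 64  # width and height of the synthetic test images, in pixels

def disk_image(size, radius):
    """A bright disk on a dark background: few parameters, low information."""
    c = size // 2
    return bytes(
        255 if (x - c) ** 2 + (y - c) ** 2 < radius ** 2 else 0
        for y in range(size)
        for x in range(size)
    )

def noise_image(size, seed=0):
    """Independent random pixels: nearly incompressible, high information."""
    rng = random.Random(seed)
    return bytes(rng.getrandbits(8) for _ in range(size * size))

def compressed_size(data):
    """Length in bytes after zlib compression at the highest level."""
    return len(zlib.compress(data, 9))

smooth_size = compressed_size(disk_image(SIZE, 20))
noise_size = compressed_size(noise_image(SIZE))

print(f"raw size of each image: {SIZE * SIZE} bytes")
print(f"model-like disk compresses to {smooth_size} bytes")
print(f"random noise compresses to {noise_size} bytes")
```

Both bitmaps occupy the same number of pixels, yet the disk, which is fully described by a centre and a radius, shrinks to a small fraction of the size of the noise. This is the sense in which a rendering of a few-parameter model carries less information than its pixel count suggests.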

I’m not going to go into the full lecture.

If you send waves towards a sphere you do not get the same information back from the edges of the sphere that you do from center.

Also, energy originating from the planet doesn’t come directly at the Earth the same from the “sides” of the star in the same manner as the energy directed right at us.

The fact it is 10 feet away or 10,000 LY away doesn’t change this fact. If heat only came from the left or right side of the star, you wouldn’t feel much of it. Yet, if it was on the side you stood in front of.. you would feel a lot.

Get it?

…probably not.

Correction:

…energy originating from the STAR doesn’t come directly at Earth from its sides in the same manner as the side facing/directed right at us.

Oh Olaf…

One more quick little fact I should let you in on. Space is a near perfect vacuum. Which means light waves… no matter what part of the spectrum… don’t scatter as they do in Earth’s atmosphere.

Finally, pretty much all objects outside of perhaps a black hole REFLECT light.

From time to time there are comments on astronomy websites about something being a, say, “real” image or not — I hope you get the point. Sometimes people say that everything which is not “pure” electromagnetic data — or even something which is not in the visible part of the spectrum — is not so valuable.

We should be aware that our brain does not perceive unfiltered optical data. On the way from our eyes to the brain and between our synapses, data is, as far as I know, partly frequency encoded — electromagnetic pulses with certain intervals between them — and partly chemically encoded. On top of that, there is a lot of computing going on in our eyes and in our brain.

So, if we look at some picture or movie computed from a mathematical model of physical reality — does this extra step matter? Not for me. It’s fascinating.

@Aodhhan

“If you send waves towards a sphere you do not get the same information back from the edges of the sphere that you do from center.

Also, energy originating from the planet doesn’t come directly at the Earth the same from the “sides” of the star in the same manner as the energy directed right at us.”

Aodhhan, as usual you do not make any sense.

This is pseudo-talk. The only difference between light emitted from the side and from the front is that it needs a few microseconds longer to reach Earth, no matter the distance in light years.

Light originating from the side or the front all started in the same direction towards Earth, with some very small bending because of the gravitational influence of the star.

Olaf…

I hate to say it, but you are wrong. Please do some experimenting and research.

Aodhhan:

Err… try telling that to those individuals who have had their retinas damaged as a result of staring at a near total eclipse of the Sun.

Olaf is right.

@ Aodhhan

Olaf is right.

We might ponder how it is that the sizes of stars were known before we ever imaged them. There is a quantum mechanical process called the Hanbury Brown-Twiss (HBT) effect, sometimes called photon bunching. If two photons from two different points on a source reach a homodyne detector they may enter into a quantum entanglement with some interference pattern. This means a photon which leaves the limb of Betelgeuse and one which leaves the center are placed into a quantum entanglement throughout the path of the two photons. The detection of the two photons acts as a superselection of state entanglements into the past. This has a relationship to the Wheeler Delay Experiment (WDE), where the choice of detection observables “now” selects the quantum configuration into the past. This is an odd development, and one of those strange results of quantum mechanics. The detection of these photons is used to calculate the size of stars, and was used long before we had any ability to image them.

There are Wikipedia articles on HBT and WDE, so I leave it up to the reader to look deeper into these topics. This relies upon quantum physics, which requires considerable background discussion or study to understand fully.

The detection of photons from the limb of a sphere and the leading center is changed little if the sphere is replaced by a disk. In either case the photons are de-correlated from each other, so the small time delay has no consequence. If the two photons enter into an HBT entanglement then they are correlated, but the WDE quantum logic also renders the time delay inconsequential. So long as the object you are detecting is sufficiently constant in its configuration over the small time difference between photons leaving the limb and the center of the star this small time delay difference is of no consequence.

LC

@ Lawrence B. Crowell,

Thanks for that information, I’ll look them up in Wikipedia…