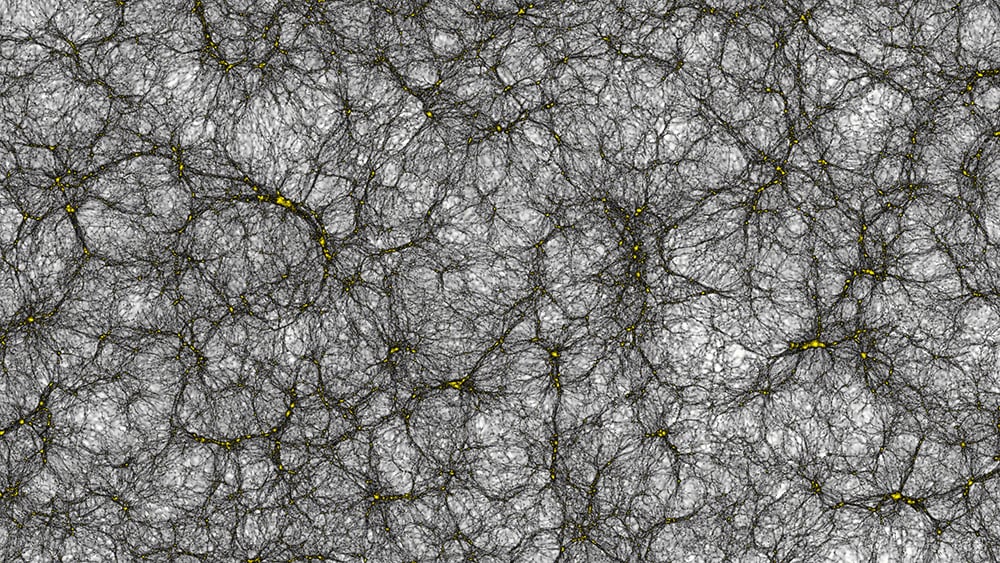

Understanding the Universe and how it has evolved over the course of billions of years is a rather daunting task. On the one hand, it involves painstakingly looking billions of light years into deep space (and thus, billions of years back in time) to see how its large-scale structure changed over time. On the other, massive amounts of computing power are needed to simulate what it should look like (based on known physics) and to see whether the two match up.

That is what a team of astrophysicists from the University of Zurich (UZH) did using the "Piz Daint" supercomputer. With this sophisticated machine, they simulated the formation of our entire Universe and produced a catalog of about 25 billion virtual galaxies. This catalog will be used to calibrate the experiments aboard the ESA's Euclid mission, which is set to launch in 2020 and will spend six years probing the Universe for the sake of investigating dark matter.

The team's work was detailed in a study that appeared recently in the journal Computational Astrophysics and Cosmology. Led by Douglas Potter, the team spent the past three years developing an optimized code to describe (with unprecedented accuracy) the dynamics of dark matter as well as the formation of large-scale structures in the Universe.

The code, known as PKDGRAV3, was specifically designed to make optimal use of the available memory and processing power of modern supercomputing architectures. After being executed on the "Piz Daint" supercomputer - located at the Swiss National Supercomputing Centre (CSCS) - for a period of only 80 hours, it managed to generate a virtual Universe of two trillion macro-particles, from which a catalog of 25 billion virtual galaxies was extracted.

Intrinsic to their calculations was the way in which dark matter fluid would have evolved under its own gravity, thus leading to the formation of small concentrations known as "dark matter halos". It is within these halos - a theoretical component that is thought to extend well beyond the visible extent of a galaxy - that galaxies like the Milky Way are believed to have formed.
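To give a rough feel for what "evolving under its own gravity" means in practice, here is a toy sketch in Python. It is not the team's method: it sums the pull between every pair of particles directly and ignores cosmic expansion, whereas PKDGRAV3 relies on far more sophisticated techniques to handle trillions of particles. The function names, the particle count, the softening length and the units (G = 1) are all illustrative assumptions.

```python
import numpy as np

def accelerations(pos, mass, softening=0.05, G=1.0):
    """Gravitational acceleration on each particle by direct pairwise summation."""
    # diff[i, j] is the vector pointing from particle i to particle j
    diff = pos[None, :, :] - pos[:, None, :]
    # Softened squared distances keep close encounters from blowing up
    dist2 = (diff ** 2).sum(axis=-1) + softening ** 2
    inv_d3 = dist2 ** -1.5
    np.fill_diagonal(inv_d3, 0.0)  # a particle exerts no force on itself
    return G * (diff * (mass[None, :, None] * inv_d3[:, :, None])).sum(axis=1)

def leapfrog(pos, vel, mass, dt, steps):
    """Advance the particles with a kick-drift-kick leapfrog scheme."""
    pos, vel = pos.copy(), vel.copy()
    acc = accelerations(pos, mass)
    for _ in range(steps):
        vel += 0.5 * dt * acc           # half kick
        pos += dt * vel                 # drift
        acc = accelerations(pos, mass)  # forces at the new positions
        vel += 0.5 * dt * acc           # half kick
    return pos, vel

# Hypothetical setup: a cold, initially static cloud of equal-mass particles
rng = np.random.default_rng(0)
n = 200
pos0 = rng.uniform(-1.0, 1.0, size=(n, 3))
vel0 = np.zeros((n, 3))
mass = np.full(n, 1.0 / n)

pos1, vel1 = leapfrog(pos0, vel0, mass, dt=0.01, steps=100)

# Compare the cloud's root-mean-square radius before and after
print(np.sqrt((pos0 ** 2).sum(axis=1).mean()),
      np.sqrt((pos1 ** 2).sum(axis=1).mean()))
```

Because every particle is compared with every other, the cost of this approach grows with the square of the particle count, which is one reason a two-trillion-particle run calls for specialized code and a supercomputer.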

Naturally, modeling this presented quite the challenge. It required not only a precise calculation of how the structure of dark matter evolves, but also a consideration of how this would influence every other part of the Universe. As Joachim Stadel, a professor with the Center for Theoretical Astrophysics and Cosmology at UZH and a co-author on the paper, told Universe Today via email:

Artist's impression of the Euclid probe, which is set to launch in 2020. Credit: ESA

Thanks to the high precision of their calculations, the team was able to turn out a catalog that met the requirements of the European Space Agency's Euclid mission, whose main objective is to explore the "dark universe". This kind of research is essential to understanding the Universe on the largest of scales, mainly because the vast majority of the Universe is dark.

Between the 23% of the Universe which is made up of dark matter and the 72% that consists of dark energy, only one-twentieth of the Universe is actually made up of matter that we can see with normal instruments (a.k.a. "luminous" or baryonic matter). Despite being proposed during the 1960s and 1990s respectively, dark matter and dark energy remain two of the greatest cosmological mysteries.

Although their existence is required for our current cosmological models to work, it has only ever been inferred through indirect observation. This is precisely what the Euclid mission will do over the course of its six-year run, capturing light from billions of galaxies and measuring it for subtle distortions caused by the presence of mass in the foreground.

Much in the same way that background light can be distorted by the presence of a gravitational field between it and the observer (i.e. a time-honored test for General Relativity), the presence of dark matter will exert a gravitational influence on the light. As Stadel explained, their simulated Universe will play an important role in the Euclid mission - providing a framework that will be used during and after the mission.

Diagram showing the Lambda-CDM universe, from the Big Bang to the current era. Credit: Alex Mittelmann/Coldcreation

"In order to forecast how well the current components will be able to make a given measurement, a Universe populated with galaxies as close as possible to the real observed Universe must be created," he said. "This 'mock' catalogue of galaxies is what was generated from the simulation and will be now used in this way. However, in the future when Euclid begins taking data, we will also need to use simulations like this to solve the inverse problem. We will then need to be able to take the observed Universe and determine the fundamental parameters of cosmology; a connection which currently can only be made at a sufficient precision by large simulations like the one we have just performed. This is a second important aspect of how such simulation work [and] is central to the Euclid mission."

From the Euclid data, researchers hope not only to obtain new information on the nature of dark matter, but also to discover new physics that goes beyond the Standard Model of particle physics - e.g. a modified version of general relativity or a new type of particle. As Stadel explained, the best outcome for the mission would be one in which the results do not conform to expectations.

"While it will certainly make the most accurate measurements of fundamental cosmological parameters (such as the amount of dark matter and energy in the Universe) far more exciting would be to measure something that conflicts or, at the very least, is in tension with the current 'standard lambda cold dark matter' (LCDM) model," he said. "One of the biggest questions is whether the so called 'dark energy' of this model is actually a form of energy, or whether it is more correctly described by a modification to Einstein's general theory of relativity. While we may just begin to scratch the surface of such questions, they are very important and have the potential to change physics at a very fundamental level."

In the future, Stadel and his colleagues hope to run simulations of cosmic evolution that take into account both dark matter and dark energy. Someday, these exotic aspects of nature could form the pillars of a new cosmology, one which reaches beyond the physics of the Standard Model. In the meantime, astrophysicists from around the world will likely be waiting for the first batch of results from the Euclid mission with bated breath.

Euclid is one of several missions that are engaged in the hunt for dark matter and the study of how it shaped our Universe. Others include the Alpha Magnetic Spectrometer (AMS-02) experiment aboard the ISS, the ESO's Kilo Degree Survey (KiDS), and CERN's Large Hadron Collider. With luck, these experiments will reveal pieces to the cosmological puzzle that have remained elusive for decades.

Further Reading: UZH, Computational Astrophysics and Cosmology

Universe Today
