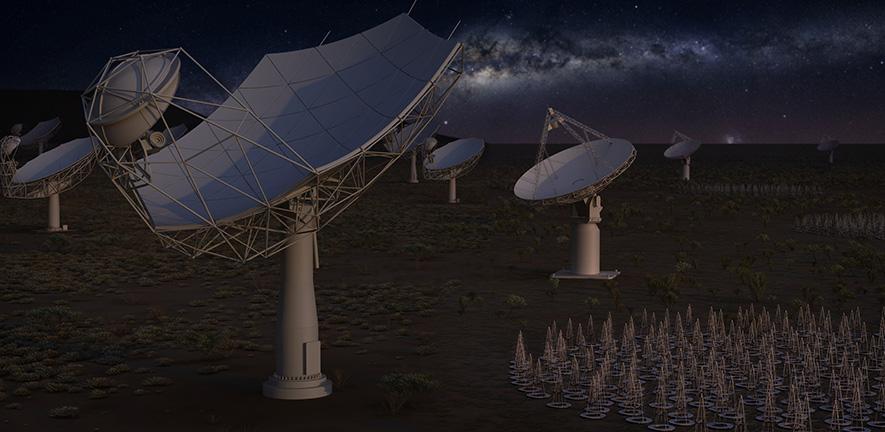

When complete, the Square Kilometer Array (SKA) will be the largest radio telescope array in the world. The result of decades of work involving 40 institutions in 11 countries, the SKA will allow astronomers to monitor the sky in unprecedented detail and survey it much faster than any existing system.

An array this large will inevitably gather enormous volumes of data on a regular basis. To sort through all of it, the “brain” of this massive array will consist of two supercomputers. Recently, the SKA’s Science Data Processor (SDP) consortium concluded its engineering design work on this computing system.

The SDP consortium, led by the University of Cambridge, is tasked with designing the second stage of data processing, which will handle all the digitized astronomical signals collected by the telescope’s receivers. Like the SKA’s radio telescopes, which are split between South Africa and Australia, the SDP itself will consist of two supercomputers: one in Cape Town, South Africa, and one in Perth, Australia.

As Maurizio Miccolis, SDP’s Project Manager for the SKA Organization, said in a recent University of Cambridge press release:

“It’s been a real pleasure to work with such an international team of experts, from radio astronomy but also the High-Performance Computing industry. We’ve worked with almost every SKA country to make this happen, which goes to show how hard what we’re trying to do is.”

The role of the consortium was to design the hardware, software, and algorithms needed to create a computing platform capable of processing science data from the Central Signal Processor (CSP) and turning them into data packages that will be stored in a global network of regional centers. To do this, the SDP will need to be able to process raw information and move it through data-reduction pipelines with incredible speed and capacity.

The SDP’s challenge is not only the sheer quantity of data it will handle but also the need to differentiate between usable data and noise. The consortium’s own estimates give a sense of that quantity:

“We estimate SDP’s total compute power to be around 250 PFlops – that’s 25% faster than IBM’s Summit, the current fastest supercomputer in the world. In total, up to 600 petabytes of data will be distributed around the world every year from SDP – enough to fill more than a million average laptops.”
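The figures in this quote can be sanity-checked with a few lines of arithmetic. The sketch below is a back-of-envelope check, not part of the SDP design; it assumes decimal SI prefixes (1 PB = 10^15 bytes), a 365-day year, and reads “more than a million laptops” as exactly one million. The variable names are illustrative.

```python
# Back-of-envelope checks on the quoted SDP figures.
# Assumptions (not from the source): decimal SI prefixes (1 PB = 10**15 bytes),
# a 365-day year, and "more than a million laptops" read as exactly 1,000,000.

SDP_PFLOPS = 250            # quoted SDP compute estimate
SPEEDUP_VS_SUMMIT = 1.25    # "25% faster than IBM's Summit"
PB_PER_YEAR = 600           # "up to 600 petabytes ... every year"
LAPTOPS = 1_000_000         # "more than a million average laptops"

SECONDS_PER_YEAR = 365 * 24 * 3600
BYTES_PER_PB = 10**15

# Summit baseline implied by the 25% claim
implied_summit_pflops = SDP_PFLOPS / SPEEDUP_VS_SUMMIT

# Average outbound data rate implied by 600 PB per year
avg_rate_gbytes_per_s = PB_PER_YEAR * BYTES_PER_PB / SECONDS_PER_YEAR / 1e9
avg_rate_gbits_per_s = avg_rate_gbytes_per_s * 8  # 1 byte = 8 bits

# Storage per laptop implied by the "million laptops" comparison
gb_per_laptop = PB_PER_YEAR * BYTES_PER_PB / LAPTOPS / 1e9

print(f"Implied Summit peak: {implied_summit_pflops:.0f} PFlops")
print(f"Average rate: {avg_rate_gbytes_per_s:.1f} GB/s ({avg_rate_gbits_per_s:.0f} Gb/s)")
print(f"Per laptop: {gb_per_laptop:.0f} GB")
```

Under those assumptions, the 25% claim implies a Summit baseline of about 200 PFlops, and 600 PB a year works out to an average of roughly 19 GB/s (about 152 Gb/s, since a byte is eight bits), or at most about 600 GB per laptop. This is only an annual average; it says nothing about peak transfer rates.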

To separate signal from noise, the SDP will be equipped with specialized machine-learning algorithms that will allow it to identify and remove man-made radio frequency interference (RFI) and other sources of “background noise” from the data. Its use of advances in AI and information sharing is one of the main reasons the SKA will be a game-changer.
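To illustrate the general idea of flagging and removing RFI, here is a minimal sketch. It does not use machine learning and is not the SDP’s method, which the source does not describe; it swaps in a classical robust-statistics test, median absolute deviation (MAD) thresholding, applied to a hypothetical list of per-channel power readings. The helper names `flag_rfi` and `remove_flagged` and the default threshold of 5 are assumptions chosen for illustration.

```python
from statistics import median


def flag_rfi(samples, threshold=5.0):
    """Flag samples that look like RFI using a median/MAD outlier test.

    Illustrative only (hypothetical helper, not SDP code): a sample is flagged
    when it exceeds the median by more than `threshold` robust standard
    deviations. The robust standard deviation is estimated as 1.4826 * MAD,
    which approximates the standard deviation for Gaussian noise. Only excess
    power is flagged, since interference adds power to the signal.
    """
    med = median(samples)
    mad = median([abs(x - med) for x in samples])
    sigma = 1.4826 * mad
    # No division by sigma, so a zero MAD (mostly identical samples) is safe:
    # in that case anything strictly above the median is flagged.
    return [(x - med) > threshold * sigma for x in samples]


def remove_flagged(samples, flags):
    """Return only the samples that were not flagged."""
    return [x for x, bad in zip(samples, flags) if not bad]


# Hypothetical power readings: mostly noise around 1.0, one strong spike.
spectrum = [1.0, 1.1, 0.9, 1.05, 0.95, 12.0, 1.02, 0.98]
flags = flag_rfi(spectrum)
print([i for i, bad in enumerate(flags) if bad])  # [5]
print(remove_flagged(spectrum, flags))
```

Using the median and MAD rather than the mean and standard deviation keeps a single strong spike from inflating the threshold and masking itself. Real RFI mitigation typically works on two-dimensional time-frequency data and is considerably more sophisticated than this one-dimensional test.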

The success of the SKA’s robust computing regime will also lead to applications in other fields. Today, high-performance computing plays an increasingly vital role in industries and disciplines that depend on cutting-edge modeling and simulation. These include climate research, weather prediction and analysis, medical research, astronomy, and cosmology, among many others.

According to Professor Paul Alexander, the Consortium Lead from Cambridge’s Cavendish Laboratory, this latest milestone depended on the international collaboration behind the SKA:

“I’d like to thank everyone involved in the consortium for their hard work over the years. Designing this supercomputer wouldn’t have been possible without such an international collaboration behind it.”

Further Reading: University of Cambridge
