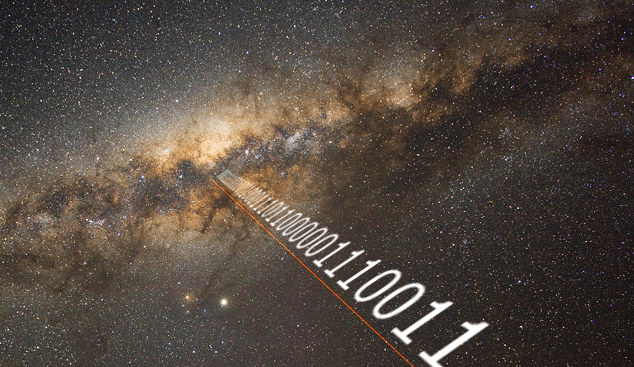

Since the beginning of the Digital Age (ca. the 1970s), theoretical physicists have speculated about the possible connection between information and the physical Universe. Considering that all matter is made up of information that describes the state of a quantum system (aka. quantum information), and genetic information is coded in our DNA, it's not farfetched at all to think that physical reality can be expressed in terms of data.

This has led to many thought experiments and paradoxes, where researchers have attempted to estimate the information capacity of the cosmos. In a recent study, Dr. Melvin M. Vopson - a Mathematician and Senior Lecturer at Portsmouth University - offered new estimates of how much information is encoded in all the baryonic matter (aka. ordinary or "luminous" matter) in the Universe.

The study that describes his research findings recently appeared in the scientific journal AIP Advances, a publication maintained by the American Institute of Physics (AIP). While previous estimates have been made about the quantity of encoded information in the Universe, Vopson's is the first to rely on Information Theory (IT) - a field of study that deals with the transmission, processing, extraction, and utilization of information.

This novel approach allowed him to address the questions arising from IT, namely: "Why is there information stored in the universe and where is it?" and "How much information is stored in the universe?" As Vopson explained in a recent AIP press release:

“The information capacity of the universe has been a topic of debate for over half a century. There have been various attempts to estimate the information content of the universe, but in this paper, I describe a unique approach that additionally postulates how much information could be compressed into a single elementary particle.”

While similar research has investigated the possibility that information is physical and can be measured, the precise physical significance of this relationship has remained elusive. Hoping to resolve this question, Vopson relied on the work of famed mathematician, electrical engineer, and cryptographer Claude Shannon - called the "Father of the Digital Age" because of his pioneering work in Information Theory.

Shannon defined his method for quantifying information in a 1948 paper titled "A Mathematical Theory of Communication," which resulted in the adoption of the "bit" (a term Shannon credited to statistician John W. Tukey) as a unit of measurement. This is not the first time that Vopson has delved into IT and physically encoded data. Previously, he addressed how the physical nature of information can be extrapolated to produce estimates of the mass of data itself.
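To make Shannon's measure concrete, here is a minimal sketch of his entropy formula, H = -Σ p·log2(p), which gives the average information per outcome in bits. The function name and the coin examples are illustrative assumptions, not taken from Vopson's paper.

```python
import math


def shannon_entropy_bits(probabilities):
    """Return the Shannon entropy H = -sum(p * log2(p)) in bits."""
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Outcomes with zero probability contribute nothing to the sum.
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


# Illustrative inputs (assumptions): a fair coin and a heavily biased coin.
fair_coin = shannon_entropy_bits([0.5, 0.5])
biased_coin = shannon_entropy_bits([0.9, 0.1])
print(f"fair coin: {fair_coin:.3f} bits, biased coin: {biased_coin:.3f} bits")
```

A fair coin flip carries exactly one bit, while a predictable (biased) one carries less. That is the sense in which a physical system with more equally likely states can be said to hold more information.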

This was described in his 2019 paper, "The mass-energy-information equivalence principle," which extends Einstein's theories about the interrelationship of matter and energy to data itself. Consistent with IT, Vopson's study was based on the principle that information is physical and that all physical systems can register information. He concluded that the mass of an individual bit of information at room temperature (300 K) is 3.19 × 10⁻³⁸ kg (about 7.03 × 10⁻³⁸ lbs).
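The room-temperature figure can be reproduced with a short calculation. The sketch below assumes the relation m = k_B·T·ln(2)/c², which combines Landauer's principle (the minimum energy associated with one bit) with E = mc²; this is my reading of how the 2019 paper arrives at the number, and the function and constant names are hypothetical.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23      # Boltzmann constant (exact SI value)
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # speed of light (exact SI value)
LB_PER_KG = 2.20462262                # pounds per kilogram


def bit_mass_kg(temperature_k):
    """Mass equivalent of one bit, assuming m = k_B * T * ln(2) / c^2."""
    landauer_energy_j = BOLTZMANN_J_PER_K * temperature_k * math.log(2)
    return landauer_energy_j / SPEED_OF_LIGHT_M_PER_S**2


mass_kg = bit_mass_kg(300.0)  # room temperature, as quoted in the article
mass_lb = mass_kg * LB_PER_KG
print(f"{mass_kg:.3e} kg ({mass_lb:.3e} lb)")
```

Under these assumptions the result is roughly 3.19 × 10⁻³⁸ kg per bit, and it scales linearly with temperature, so a colder system would carry a smaller mass per bit.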

Taking Shannon's method further, Vopson determined that every elementary particle in the observable Universe has the equivalent of 1.509 bits of encoded information. "It is the first time this approach has been taken in measuring the information content of the universe, and it provides a clear numerical prediction," he said. "Even if not entirely accurate, the numerical prediction offers a potential avenue toward experimental testing."

First, Vopson employed the well-known Eddington number, which refers to the total number of protons in the observable Universe (current estimates place that at 10⁸⁰). From this, Vopson derived a formula to obtain the number of all elementary particles in the cosmos. He then adjusted his estimates for how much each particle would contain based on the temperature of observable matter (stars, planets, interstellar medium, etc.).

From this, Vopson calculated that the overall amount of encoded information is equivalent to 6 × 10⁸⁰ bits. To put that in computational terms, this many bits is equivalent to roughly 7.5 × 10⁵⁸ zettabytes (at 8 bits per byte and 10²¹ bytes per zettabyte), or 75 octodecillion zettabytes. Compare that to the amount of data that was produced worldwide during the year 2020 - 64.2 zettabytes. Needless to say, that's a difference that can only be described as "astronomical."
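The unit conversion is easy to check. The sketch below assumes 8-bit bytes and the decimal (SI) zettabyte of 10²¹ bytes, neither of which is spelled out in the source, and uses exact integer arithmetic so that no precision is lost on numbers this large. The variable names are illustrative.

```python
TOTAL_BITS = 6 * 10**80        # Vopson's estimate, as quoted in the article
BITS_PER_BYTE = 8              # assumption: 8-bit bytes
BYTES_PER_ZETTABYTE = 10**21   # assumption: decimal (SI) zettabyte
DATA_2020_ZB = 64.2            # worldwide data produced in 2020, per the article

# Integer division is exact here because 6 * 10**80 is divisible by 8 * 10**21.
total_bytes = TOTAL_BITS // BITS_PER_BYTE
total_zb = total_bytes // BYTES_PER_ZETTABYTE

# How many "2020s worth" of data the estimate corresponds to.
ratio = total_zb / DATA_2020_ZB
print(f"{total_zb:.2e} ZB, about {ratio:.2e} times the data produced in 2020")
```

With these assumptions the total comes to about 7.5 × 10⁵⁸ zettabytes, on the order of 10⁵⁷ times the world's 2020 data output.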

These results build on previous studies by Vopson, who has postulated that information is the fifth state of matter (alongside solid, liquid, gas, and plasma) and that Dark Matter itself could be information. They are also consistent with a lot of research conducted in recent years, all of which has attempted to shed light on how information and the laws of physics interact.

This includes the question of how information escapes a black hole, known as the "Black Hole Information Paradox," which arises from the fact that black holes emit radiation. This means that black holes lose mass over time and may not preserve the information of infalling matter (as previously believed). Both ideas are attributed to Stephen Hawking, who first described the radiation that now bears his name: "Hawking Radiation."

This also touches on the holographic principle, a tenet of string theory and quantum gravity which speculates that physical reality arises from information, much as a hologram arises from a projector. A more radical interpretation of this is known as Simulation Theory, which posits that the entire Universe is a giant computer simulation, perhaps created by a highly advanced species to keep us all contained (generally known as the "Planetarium Hypothesis").

As expected, this theory does present some problems, like how antimatter and neutrinos fit into the equation. It also makes certain assumptions about how information is transferred and stored in our Universe to obtain concrete values. Nevertheless, it offers a very innovative and entirely new means for estimating the information content of the Universe, from elementary particles to visible matter as a whole.

Coupled with Vopson's theories about information constituting the fifth state of matter (or Dark Matter itself), this research offers a foundation that future studies can build upon, test, and falsify. What's more, the long-term implications of this research include a possible explanation for quantum gravity and resolutions to various paradoxes.

Further Reading: AIP, AIP Advances

Universe Today
