Supernovae are incredibly common in the universe. Based on observations of isotopes such as aluminum-26, we know that a supernova occurs on average about every fifty years in the Milky Way alone. A supernova can outshine a galaxy, so you wouldn't want your habitable planet to be a few light years away when it goes off. Fortunately, most supernovae have occurred very far away from Earth, so we haven't had to concern ourselves with wearing sunscreen at night. But it does raise an interesting question. When it comes to supernovae, how close is too close? As a recent study shows, the answer depends on the type of supernova.

There is geological evidence that supernovae have occurred quite close to Earth in the past. The isotope iron-60 has a half-life of just 2.6 million years, and it has been found in ocean floor sediment laid down about 2 million years ago. It has also been found in Antarctic ice cores and lunar regolith, suggesting a supernova event around that time. Samples of Earth's crust point to another supernova event around 8 million years ago. Both of these would likely have occurred within a few hundred light years of Earth, perhaps as close as 65 light years. Neither of these supernovae seems to have triggered a planet-wide mass extinction, so you might think any supernova more distant than 100 light years is harmless.
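To see why iron-60 is such a useful marker, here is a quick back-of-the-envelope sketch using only the half-life quoted above. The function name and the choice of ages are illustrative assumptions, and the calculation ignores everything except simple radioactive decay.

```python
# Half-life of iron-60 in millions of years, as quoted in the article.
FE60_HALF_LIFE_MYR = 2.6


def fraction_remaining(age_myr, half_life_myr=FE60_HALF_LIFE_MYR):
    """Fraction of an isotope still present after age_myr million years."""
    return 0.5 ** (age_myr / half_life_myr)


# Ages of the two deposits mentioned in the article.
for age in (2.0, 8.0):
    print(f"{age:.0f} Myr: {fraction_remaining(age):.1%} of the iron-60 remains")
```

Under these assumptions, a bit more than half of the iron-60 deposited 2 million years ago is still around, and roughly an eighth of anything deposited 8 million years ago. That is enough to detect, but short-lived enough that it can't be left over from Earth's formation.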

This new study suggests otherwise. Earlier studies focused on two dangerous phases of a supernova: the flash of the initial explosion, which reaches a planet at the speed of light, and the stream of energized particles that can strike the planet hundreds or thousands of years later. Both of these tend to have weak effects over hundreds of light years. A nearby supernova might outshine the Moon for a time, which would affect the nocturnal patterns of some creatures, but it wouldn't trigger mass extinctions. Likewise, our atmosphere is a good barrier to cosmic rays, so a temporary burst of them is relatively harmless. But this study looked specifically at the X-ray light emitted by some supernovae, and this is where things can get worse.

X-rays are particularly good at disrupting things like ozone. A strong beam of X-rays from a supernova could strip the ozone layer from a planet like Earth, leaving it open to ultraviolet radiation from its own star. The ultraviolet light could trigger the creation of a smog layer of nitrogen dioxide, which would lead to acid rain and a wide-scale de-greening of the planet.

So how dangerous a supernova is depends not only on its proximity to a habitable planet but also on the level of X-rays it generates. The team looked at the X-ray spectra of nearly three dozen supernovae observed over the last 45 years and calculated the lethal distance for each of them. The most harmless was the well-known supernova SN 1987A, which would only be dangerous to a planet within a light year or so. The most potentially deadly was a supernova named SN 2006jd, which could kill a habitable planet from up to 160 light years away.
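To get a feel for how much X-ray output separates those two extremes, here is a rough sketch based on the inverse-square law. It assumes the X-rays spread out evenly in all directions, that nothing absorbs them along the way, and that a planet is in trouble once it receives more than some fixed critical dose per unit area. The article doesn't spell out the study's actual model, so the function names and the threshold parameter here are illustrative only.

```python
import math


def lethal_distance(xray_energy, critical_fluence):
    """Distance at which evenly spread X-ray energy thins out to the critical dose.

    Assumes fluence = energy / (4 * pi * distance**2). Units must be consistent,
    for example joules and joules per square metre, giving metres.
    """
    return math.sqrt(xray_energy / (4.0 * math.pi * critical_fluence))


def energy_ratio(distance_a, distance_b):
    """X-ray energy ratio implied by two lethal distances at the same threshold."""
    return (distance_a / distance_b) ** 2


# Lethal distances quoted in the article: about 160 light years versus about 1.
print(energy_ratio(160.0, 1.0))
```

Because the dose falls off with the square of the distance, a lethal range 160 times larger implies roughly 25,000 times more X-ray energy under these simplified assumptions.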

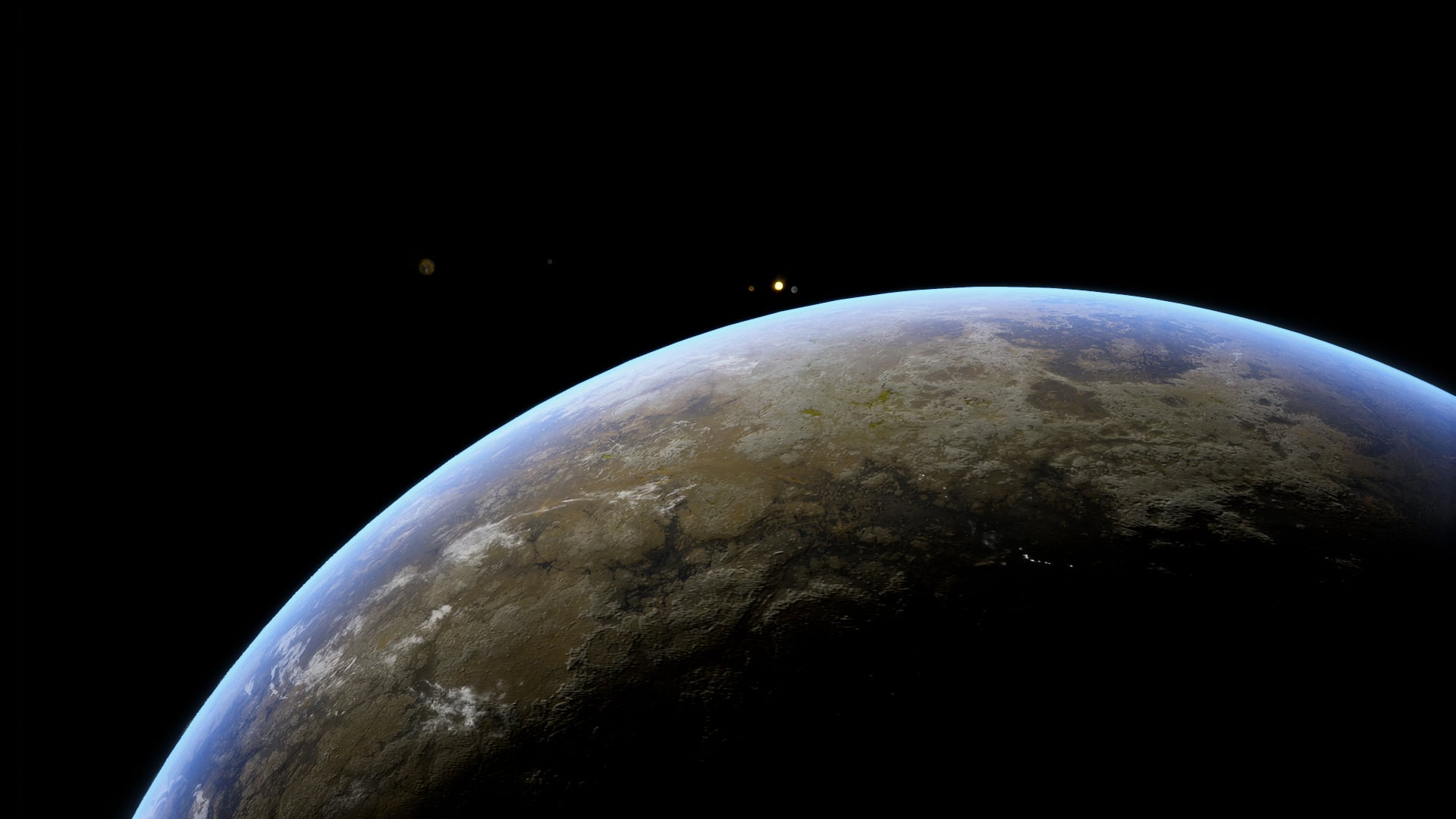

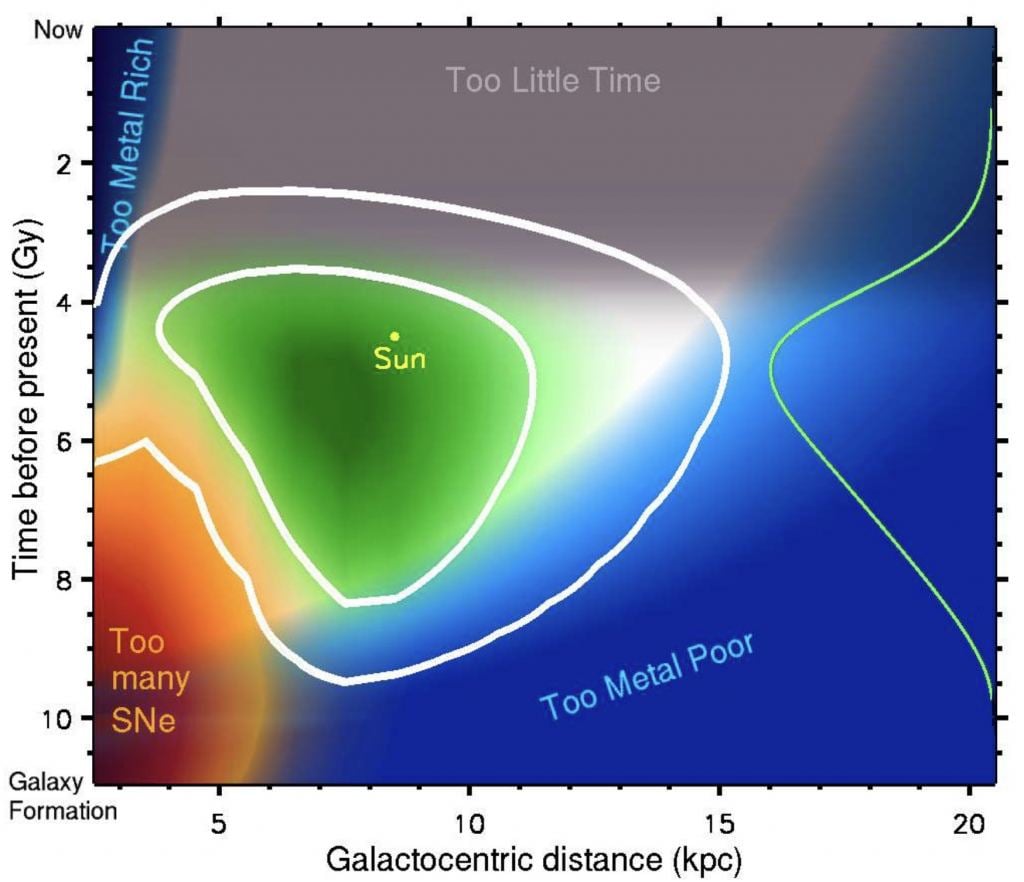

To be clear, there is no nearby star that poses a potential threat to Earth, not even Betelgeuse. But this study helps us better define where habitable planets might survive in our galaxy. Just as a habitable planet can't be too close to its star, a planetary system can't be too close to areas where supernovae are most common, such as the center of our galaxy.

Reference: Brunton, Ian R., et al. "X-Ray-luminous Supernovae: Threats to Terrestrial Biospheres." *The Astrophysical Journal* 947.2 (2023): 42.

Reference: Lineweaver, Charles H., Yeshe Fenner, and Brad K. Gibson. "The galactic habitable zone and the age distribution of complex life in the Milky Way." *Science* 303.5654 (2004): 59-62.

Universe Today
