The name “dark energy” is just a placeholder for the force, whatever it is, that is causing the expansion of the Universe to accelerate. But astronomers are perhaps getting closer to understanding this force. New Hubble Space Telescope observations of Cepheid variable stars have refined the measurement of the Universe’s present expansion rate to a precision where the error is smaller than five percent. The new value for the expansion rate, known as the Hubble constant, or H0 (after Edwin Hubble, who first measured the expansion of the universe nearly a century ago), is 74.2 kilometers per second per megaparsec, with an uncertainty of ±3.6 km/sec/megaparsec. The results agree closely with an earlier measurement gleaned from Hubble of 72 ± 8 km/sec/megaparsec, but are now more than twice as precise.
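The precision figures above follow from simple ratios. A minimal Python sketch, assuming the quoted ± values are symmetric 1-sigma uncertainties in km/s/Mpc:

```python
# Quoted Hubble constant measurements in km/s/Mpc: (central value, uncertainty).
# Treating the +/- values as symmetric 1-sigma errors is an assumption.
NEW_H0 = (74.2, 3.6)
OLD_H0 = (72.0, 8.0)


def fractional_error(value, sigma):
    """Return the uncertainty as a fraction of the central value."""
    return sigma / value


new_frac = fractional_error(*NEW_H0)  # roughly 0.049, just under five percent
old_frac = fractional_error(*OLD_H0)  # roughly 0.111, about eleven percent
improvement = old_frac / new_frac     # roughly 2.3, "more than twice as precise"

print(f"new: {new_frac:.1%}  old: {old_frac:.1%}  improvement: {improvement:.2f}x")
```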

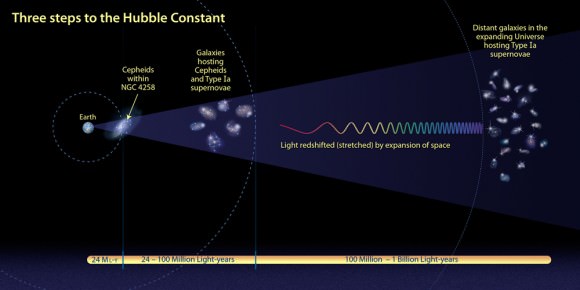

The Hubble measurement, conducted by the SHOES (Supernova H0 for the Equation of State) Team and led by Adam Riess, of the Space Telescope Science Institute and the Johns Hopkins University, uses a number of refinements to streamline and strengthen the construction of a cosmic “distance ladder,” a billion light-years in length, that astronomers use to determine the universe’s expansion rate.

Hubble observations of the pulsating Cepheid variables in a nearby cosmic mile marker, the galaxy NGC 4258, and in the host galaxies of recent supernovae, directly link these distance indicators. The use of Hubble to bridge these rungs in the ladder eliminated the systematic errors that are almost unavoidably introduced by comparing measurements from different telescopes.

Riess explains the new technique: “It’s like measuring a building with a long tape measure instead of moving a yardstick end over end. You avoid compounding the little errors you make every time you move the yardstick. The higher the building, the greater the error.”
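Riess’s analogy is about how errors accumulate. A toy Python model, assuming each move of the yardstick adds an independent random error of the same size (all numbers are hypothetical), shows the accumulated error growing with the height of the building while a single tape reading does not:

```python
import math


def yardstick_error(height_m, stick_m, sigma_per_move_m):
    """Accumulated random error from laying a short stick end over end.

    Toy model: each placement adds an independent error of sigma_per_move_m,
    so the errors add in quadrature and grow as the square root of the
    number of placements.
    """
    moves = math.ceil(height_m / stick_m)
    return sigma_per_move_m * math.sqrt(moves)


def tape_error(sigma_tape_m):
    """A single reading of a long tape: one error that never compounds."""
    return sigma_tape_m


# Hypothetical numbers: a 1 m stick, 2 mm error per placement or per tape reading.
for height in (10.0, 100.0, 1000.0):
    print(f"{height:>6.0f} m  stick: {yardstick_error(height, 1.0, 0.002):.3f} m"
          f"  tape: {tape_error(0.002):.3f} m")
```

If every placement were instead biased by the same amount, the error would grow linearly with the number of placements rather than as its square root; that case is closer in spirit to the cross-telescope systematic errors the article says were eliminated.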

Lucas Macri, professor of physics and astronomy at Texas A&M, and a significant contributor to the results, said, “Cepheids are the backbone of the distance ladder because their pulsation periods, which are easily observed, correlate directly with their luminosities. Another refinement of our ladder is the fact that we have observed the Cepheids in the near-infrared parts of the electromagnetic spectrum where these variable stars are better distance indicators than at optical wavelengths.”
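Macri’s point can be made concrete: the pulsation period fixes the star’s intrinsic brightness (absolute magnitude M), the telescope measures its apparent magnitude m, and the difference gives the distance through the distance modulus. A minimal Python sketch, assuming a linear period-luminosity relation with illustrative coefficients (the slope and zero point below are placeholders, not the team’s near-infrared calibration) and ignoring dust extinction:

```python
import math

# Illustrative period-luminosity (Leavitt law) relation of the form
#   M = SLOPE * (log10(P / 1 day) - 1) + ZERO_POINT
# These coefficients are placeholders for the sketch, NOT the near-infrared
# calibration used by the SHOES team.
SLOPE = -2.43
ZERO_POINT = -4.05


def absolute_magnitude(period_days):
    """Absolute magnitude predicted by the assumed period-luminosity relation."""
    return SLOPE * (math.log10(period_days) - 1.0) + ZERO_POINT


def distance_parsecs(apparent_mag, period_days):
    """Invert the distance modulus m - M = 5*log10(d / 10 pc); dust is ignored."""
    mu = apparent_mag - absolute_magnitude(period_days)
    return 10.0 ** (mu / 5.0 + 1.0)


# Hypothetical Cepheid: 10-day period, mean apparent magnitude 25.95.
d = distance_parsecs(25.95, 10.0)
print(f"distance ~ {d / 1e6:.1f} Mpc")
```

With a negative slope, longer-period Cepheids are assigned brighter (more negative) absolute magnitudes.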

This new, more precise value of the Hubble constant was used to test and constrain the properties of dark energy, the form of energy that produces a repulsive force in space, which is causing the expansion rate of the universe to accelerate.

By bracketing the expansion history of the universe between today and when the universe was only approximately 380,000 years old, the astronomers were able to place limits on the nature of the dark energy that is causing the expansion to speed up. (The measurement for the far, early universe is derived from fluctuations in the cosmic microwave background, as resolved by NASA’s Wilkinson Microwave Anisotropy Probe, WMAP, in 2003.)

Their result is consistent with the simplest interpretation of dark energy: that it is mathematically equivalent to Albert Einstein’s hypothesized cosmological constant, introduced in 1917 to push on the fabric of space and prevent the universe from collapsing under the pull of gravity. (Einstein, however, removed the constant once the expansion of the universe was discovered by Edwin Hubble.)

“If you put in a box all the ways that dark energy might differ from the cosmological constant, that box would now be three times smaller,” says Riess. “That’s progress, but we still have a long way to go to pin down the nature of dark energy.”
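One way to see what “bracketing the expansion history” means is the Friedmann expansion rate for a flat universe containing matter and dark energy with a constant equation-of-state parameter w. A cosmological constant corresponds to w = −1; other values stand in for the alternatives in Riess’s “box”. This Python sketch assumes flatness, neglects radiation, and uses a matter density of 0.3 purely for illustration; it is not the SHOES team’s analysis.

```python
import math


def hubble_rate(z, h0=74.2, omega_m=0.3, w=-1.0):
    """Expansion rate H(z) in km/s/Mpc for a flat matter + dark-energy universe.

    Assumptions: spatial flatness, radiation neglected, constant equation of
    state w (w = -1 is the cosmological constant), and an illustrative
    matter density omega_m = 0.3 that is not taken from the article.
    """
    omega_de = 1.0 - omega_m
    matter = omega_m * (1.0 + z) ** 3
    dark_energy = omega_de * (1.0 + z) ** (3.0 * (1.0 + w))
    return h0 * math.sqrt(matter + dark_energy)


for w in (-1.1, -1.0, -0.9):
    rates = [hubble_rate(z, w=w) for z in (0.0, 0.5, 1.0, 2.0)]
    print(f"w = {w:+.1f}: " + ", ".join(f"{r:6.1f}" for r in rates))
```

All three histories share the same present-day value, so a precise H0 pins down one end; the differences between them at higher redshift are what comparisons with early-universe data such as WMAP can exploit.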

Though the cosmological constant was conceived of long ago, observational evidence for dark energy didn’t come along until 11 years ago, when two studies, one led by Riess and Brian Schmidt of Mount Stromlo Observatory, and the other by Saul Perlmutter of Lawrence Berkeley National Laboratory, discovered dark energy independently, in part with Hubble observations. Since then astronomers have been pursuing observations to better characterize dark energy.

Riess’s approach to narrowing alternative explanations for dark energy—whether it is a static cosmological constant or a dynamical field (like the repulsive force that drove inflation after the big bang)—is to further refine measurements of the universe’s expansion history.

Before Hubble was launched in 1990, the estimates of the Hubble constant varied by a factor of two. In the late 1990s the Hubble Space Telescope Key Project on the Extragalactic Distance Scale refined the value of the Hubble constant to an error of only about ten percent. This was accomplished by observing Cepheid variables at optical wavelengths out to greater distances than obtained previously and comparing those to similar measurements from ground-based telescopes.

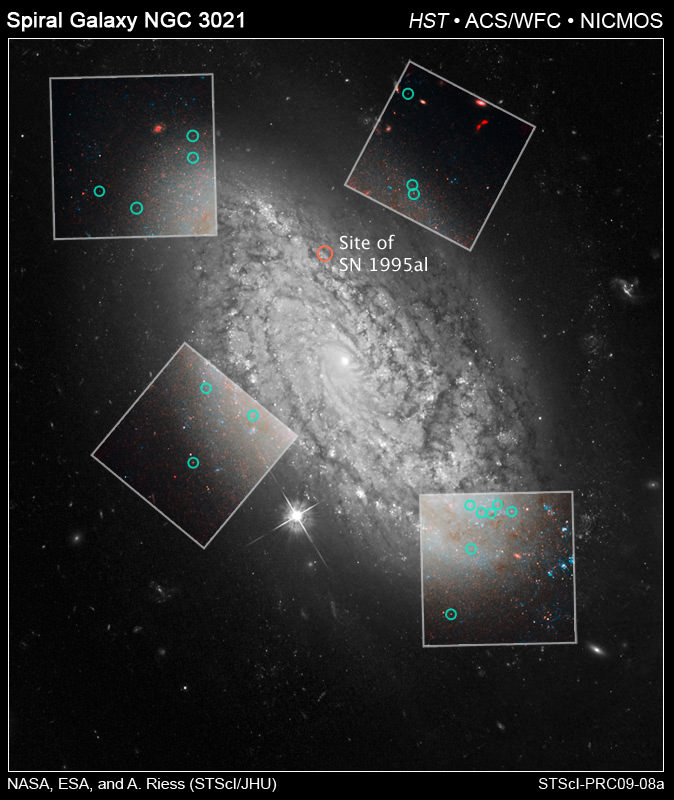

The SHOES team used Hubble’s Near Infrared Camera and Multi-Object Spectrometer (NICMOS) and the Advanced Camera for Surveys (ACS) to observe 240 Cepheid variable stars across seven galaxies. One of these galaxies was NGC 4258, whose distance was very accurately determined through observations with radio telescopes. The other six galaxies recently hosted Type Ia supernovae that are reliable distance indicators for even farther measurements in the universe. Type Ia supernovae all explode with nearly the same amount of energy and therefore have almost the same intrinsic brightness.

By observing Cepheids with very similar properties at near-infrared wavelengths in all seven galaxies, and using the same telescope and instrument, the team was able to more precisely calibrate the luminosity of supernovae. With Hubble’s powerful capabilities, the team was able to sidestep some of the shakiest rungs along the previous distance ladder involving uncertainties in the behavior of Cepheids.
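The calibration chain described above can be sketched in three steps: use Cepheid distances to the host galaxies to find the supernovae’s shared absolute magnitude, use that to get the distance of a far-away supernova, then divide its recession velocity by that distance. All numbers below are hypothetical, chosen so the arithmetic is easy to follow; real analyses fit many hosts and supernovae with full error models.

```python
import math


def distance_modulus(d_mpc):
    """mu = m - M = 5*log10(d / 10 pc), with d given in megaparsecs."""
    return 5.0 * math.log10(d_mpc * 1e6 / 10.0)


def calibrate_sn_absolute_mag(hosts):
    """Mean Type Ia peak absolute magnitude from hosts with Cepheid distances.

    hosts: list of (cepheid_distance_mpc, sn_peak_apparent_mag) pairs.
    """
    mags = [m - distance_modulus(d) for d, m in hosts]
    return sum(mags) / len(mags)


def sn_distance_mpc(apparent_mag, abs_mag):
    """Invert the distance modulus, returning megaparsecs."""
    return 10.0 ** ((apparent_mag - abs_mag) / 5.0 + 1.0) / 1e6


def hubble_constant(velocity_km_s, distance_mpc):
    """H0 = v / d in km/s/Mpc."""
    return velocity_km_s / distance_mpc


# Hypothetical inputs: an assumed SN Ia peak magnitude and six Cepheid host distances.
ASSUMED_TRUE_M = -19.3
hosts = [(d, ASSUMED_TRUE_M + distance_modulus(d)) for d in (15.0, 18.0, 20.0, 24.0, 28.0, 32.0)]
sn_abs_mag = calibrate_sn_absolute_mag(hosts)

# A distant supernova at the magnitude it would have at 400 Mpc, receding at 29,680 km/s.
far_distance = sn_distance_mpc(ASSUMED_TRUE_M + distance_modulus(400.0), sn_abs_mag)
h0 = hubble_constant(29_680.0, far_distance)
print(f"M = {sn_abs_mag:.2f}, d = {far_distance:.1f} Mpc, H0 = {h0:.1f} km/s/Mpc")
```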

Riess would eventually like to see the Hubble constant refined to a value with an error of no more than one percent, to put even tighter constraints on possible explanations of dark energy.

fascinating!

… and I like the way you say the name is just a placeholder. There are a lot of people who say “modern astronomy” has “invented” all kinds of mysterious “dark stuff”… and I’m spending a lot of time trying to explain to people that nobody has invented anything… but if you want to talk about something, you need a word for it. Something less longwinded than saying “we don’t know what it is, but you know what I’m talking about…”

Nice journalism!

@ Feenix: Keen (and accurate) post. Great job, Nancy, with this complicated, but crucial, aspect of the universe we live in. Only 11 years since its discovery, we are just at the beginning of the learning curve with regards to Dark Energy, but progress is being made!

“Cepheid Calibrations of Modern Type Ia Supernovae: Implications for the Hubble Constant” is the title of the related arXiv preprint; abstract (and link to PDF) here:

http://arxiv.org/abs/0905.0697

A companion paper outlining Dark Energy implications of a refined Hubble Constant entitled “A Redetermination of the Hubble Constant with the Hubble Space Telescope from a Differential Distance Ladder” can be found here: http://hubblesite.org/pubinfo/pdf/2009/08/pdf.pdf

I find it just crazy that they are always looking for the waves of dark energy but never the particles. I mean, isn’t it a law that every force has to have particles? Maybe I haven’t heard any of the news about dark energy particles, but do they think that CERN’s Large Hadron Collider (LHC) will find any?

If Cepheids are more accurately measured in the infrared, does that mean that James Webb and Herschel will help strengthen that step of the ladder?

@Sili: yes, big time!

@h2owaves: by force I think you’re referring to electromagnetism, for which the photon is the force carrier, the weak force, for which the W and Z bosons are the force carriers, and the strong force, in which gluons play that carrier role.

Trouble is that gravity is not a force! (where’s the ‘jaw dropping’ smilie?). At least it isn’t if General Relativity (GR) is an accurate description of it; gravity is geometry in GR.

Now GR and the theories which describe electromagnetism and the weak and the strong forces are mutually incompatible, at a very deep level, and when physical conditions get extreme enough that incompatibility is so severe that we have no way to describe what goes on (this is called the Planck regime).

IF – and it’s only an if – gravity turns out to be a force like the electro-weak (electromagnetism and the weak force have been unified) or the strong, then there will be a force carrier particle, call it the graviton, and it will have certain properties that we can name now.

It gets weirder.

It may turn out that dark energy is just an aspect of gravity, the cosmological constant (the Greek letter capital lambda in the Einstein Field Equations); so IF gravity turns out to be ‘just another force’ and IF dark energy is an aspect of gravity, THEN there will be a particle for dark energy!

And no, the LHC is extremely unlikely to find any gravitons, because the physical conditions it will create are just far, far, far, far too wimpy (gazillions of times too tame compared to the Planck regime).

@ Feenixx:

Feenixx states: “… and I like the way you say the name is just a placeholder. There are a lot of people who say “modern astronomy” has “invented” all kinds of mysterious “dark stuff”… and I’m spending a lot of time trying to explain to people that nobody has invented anything… but if you want to talk about something, you need a word for it. Something less longwinded than saying “we don’t know what it is, but you know what I’m talking about…”

At one level I agree with you and the article: “dark” energy is a placeholder.

“we don’t know what it is…”

But it goes deeper than that, not only doesn’t “modern” astronomy “know what it is”, but it also doesn’t know if there is anything at all.

It could be that “dark” energy is a placeholder for keeping the “Hubble Law” from being falsified.

The term “dark” energy was derived because the Universe and its large structures aren’t behaving according to the “Hubble Law”.

Of course, the assumption is that the “Hubble Law” is a valid description of the supposed expansionary dynamics of the Universe, as dictated by the “big bang”, so if the visible Universe isn’t behaving according to the theory there must be something invisible acting on the Universe that can’t be detected. The preceding is the “Standard Model” interpretation of “modern” astronomy.

But there are three possible alternative explanations:

One, there is no invisible “dark” energy at all and the Hubble theory is wrong. Two, the theory could be right, but another known Fundamental Force, say electromagnetism, is acting on the Universe and has not been considered. Three, the theory is wrong and another known Fundamental Force, say electromagnetism, is acting on the Universe.

Of course, since the “big bang” theory is right and it depends on the “Hubble Law” for validity, the “Hubble Law” must also be right; therefore, there must be invisible “dark” energy that can’t be detected.

So-called “modern” astronomy has pegged all its understanding of the Universe on the assumption and belief that its basic principles of analysis & interpretation of the observed & measured evidence are a correct reflection of reality.

So-called “modern” astronomy is in the uncomfortable position of insisting its theories are right, so there must be an invisible and unknown force labeled as “dark” energy.

In other words, “modern” astronomy insists its map does reflect the territory.

The problem is that the territory is not cooperating.

Would “modern” astronomy ever consider the possibility that its basic theories of the Universe are wrong?

Not yet, anyway, and the search goes on for “dark” energy.

Hubble’s law doesn’t get falsified; the cause of Hubble’s law does.

Hubble’s law is an EMPIRICALLY DERIVED EQUATION, derived by plotting the apparent distance to galaxies against their apparent recessional speeds as indicated by redshifting (so, in essence, Hubble’s law is simply the relationship between redshift and apparent distance).

@Anaconda: there are so many misunderstandings (etc) in this comment that I hardly know where to begin!

Not to worry; over the next series of comments, by me, I’ll address them one by one (well, some of them anyway).

@ Nereid:

Nereid states: “@Anaconda: there are so many misunderstandings (etc) in this comment that I hardly know where to begin!”

Sorry, Nereid, no misunderstandings at all. It is simply a chain of logic.

Or are you going to stand here and say, “No, it’s not possible, the ‘Hubble Law’ has to be right”?

Or are you saying, “The ‘Hubble Law’ can’t be falsified”?

Any scientific theory that can’t be falsified, isn’t a scientific theory.

Nereid, are you saying there has to be “dark” energy?

What are you saying?

Your fiat statements don’t carry any water.

@ Trippy:

Trippy states: “Hubble’s law doesn’t get falsified; the cause of Hubble’s law does.”

That sounds mighty close to, “the “Hubble Law” must be right, any observation that contradicts it must be wrong.”

Trippy states: “Hubble’s law is an EMPIRICALLY DERIVED EQUATION, derived by plotting the apparent distance to galaxies against their apparent recessional speeds as indicated by redshifting.”

“apparent distance”

“apparent recessional speeds”

As determined by assumptions as to what ‘redshift’ means.

‘Redshift’ may not correspond to speed and distance at all as Halton Arp has theorized.

This is exactly my point: Basic principles of analysis & interpretation may not be correct.

If you can’t allow for the possibility that they are false, in essence, they are non-falsifiable.

Now, all may be well and good and the “Hubble Law” is correct. But the “Hubble Law” is a principle of interpretation & analysis not the thing, itself.

The map is not the territory, the map is a construct in the mind of Man.

Definition of a fool:

Someone who knows that they are doing something dumb yet chooses to do so anyway.

As Trippy has noted, dark energy can’t ‘falsify’ Hubble’s law (I prefer ‘the Hubble relationship’), because the Hubble relationship is simply a plot of z (redshift) against distance; the placeholder (dark energy) refers to a shorthand for a difference between the Hubble relationship and z-distance relationships derived from CDM cosmological models.

Wrong; Lambda, the cosmological constant, had been in General Relativity-based cosmological models for a long time, but until Riess et al. and Perlmutter et al.’s 1998 and 1999 (respectively) papers, its value was thought to be zero.

I do not know – off the top of my head – who in the astronomy/astrophysics/cosmology community first used the term itself (“dark energy”), but I think it was before the 1998 Riess et al paper (I’ll check though), so, logically, it can’t have been created to account for known deviations of the Hubble relationship from CDM-based models, and as neither the SDSS nor the 2dF large-scale structure results were published before 1998, logically, it can’t have been created to apply to those observations either.

(to be continued)

At the overview level, you have it backwards; the Hubble relationship is an observational result that can be accounted for in GR-based cosmological models.

The observational data are primary, and so the first thing you look for is unknown systematics in that data. Then you consider as many hypotheses that could give rise to a ‘dark energy’ signal in your data as you can, and you test them.

This is what both Riess et al. and Perlmutter et al. did in their papers. A great many astronomers tested all their alternatives, and many more, over the following years.

And many others looked for ways to test the idea that something like a cosmological constant (in the EFE) could be found using completely different kinds of observational data, and such signals were found.

At its most basic level, you have a theory and you seek to test it in as many ways as you can.

Perhaps the most confusing thing in this part of what you wrote, Anaconda, is “there must be something invisible acting on the Universe that can’t be detected”; if it is invisible, it can’t be seen; if it can’t be detected, then it has no influence on the Universe! As I’m pretty sure you did not mean that, perhaps you could say what it is you meant to write?

As I hope you realise, by now, this is meaningless, not least because there is no such thing as “the Hubble theory”.

Yes, this is a logical alternative.

However, the large-scale (cosmological) impact of electromagnetism – as a form of energy – could not account for the observational results, not least because it would manifest itself as a deceleration, the opposite of the observed acceleration.

So this whole class of alternatives has indeed been considered, and already found to be inconsistent with the relevant observations.

This too is a logical alternative.

However no one (that I know of) has put forward any models based on such an alternative, models which can even loosely account for the Hubble relationship, the CMB, the observed large-scale structure, and the observed abundances of light nuclides. And the lack of such alternatives is not for want of trying; after all minds as brilliant as Alfvén and Hoyle certainly tried (very hard), but failed. For avoidance of doubt, I do not mean that Alfvén or Hoyle tried to account for the ‘dark energy’ observations (they obviously didn’t!), but that neither produced alternatives which accounted for the much more limited datasets of the 1970s, 1980s and (early) 1990s.

@Anaconda: the rest of your May 7th, 2009 at 2:20 pm comment either repeats the misunderstandings or confusions I’ve already addressed or builds on them.

Turning to your next comment (May 7th, 2009 at 3:45 pm), starting at the end this time.

The ‘apparent recessional speed’ is, to use your term, an inference; the Hubble relationship (or Hubble law) is z (redshift) vs distance.

Now z is, I think, a direct observation, to use your term (or, if you prefer, your use of the word), so I doubt that you have any problems with this (please correct me if I’m wrong).

And what comes out of GR-based cosmological models is z (interpretation not required).

That leaves distance.

Yes of course the estimated distances to galaxies (and the stars in them, and supernovae, and …) may be wrong, for a very, very large number of possible reasons.

But here’s the thing Anaconda: by the late 1930s, and certainly by the early 1950s, a very great deal of very good astronomical research had established that IF these distance estimates were wrong, they were wrong systematically, by a constant factor (within the understood errors and uncertainties). And that possibility has been long built in to cosmology; check out the meaning of h.
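The constant-factor point is why cosmologists write H0 = 100 h km/s/Mpc and often quote distances in units of h⁻¹ Mpc. A minimal Python sketch, assuming the low-redshift form of the Hubble relation (d = cz/H0) and a hypothetical galaxy at z = 0.01, shows that changing h from 0.5 (H0 = 50) to 0.742 (H0 = 74.2) rescales the inferred distance by one overall factor:

```python
C_KM_S = 299_792.458  # speed of light in km/s


def low_z_distance_mpc(z, h):
    """Distance from Hubble's law d = c*z / H0 with H0 = 100*h km/s/Mpc.

    Only sensible at small redshift, where v ~ c*z.
    """
    return C_KM_S * z / (100.0 * h)


z = 0.01  # hypothetical galaxy redshift
for h in (0.50, 0.55, 0.742):
    print(f"h = {h:<5}  d = {low_z_distance_mpc(z, h):5.1f} Mpc")

# Quoting distances in units of h^-1 Mpc removes the unknown overall factor.
print(f"d = {low_z_distance_mpc(z, 1.0):.1f} h^-1 Mpc")
```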

I was rather surprised to read this, Anaconda, given the many, many, many comments by you about the primacy of observation and the need for theory to be tested, measured, etc.

Surely the question you should have asked is “what is the observational evidence that Arp’s theories are right?”

And the answer to that question is, today, there is no such evidence (of course, this is an extreme summary, but as Arp’s theories, as far as I know, require ALL quasars, LINERs, Seyferts, etc. to be ‘local’, demonstrating that JUST ONE such object is unquestionably not at all local is fatal).

And you are right, they may indeed not be correct.

But which astronomer, astrophysicist, etc would disagree?

How do they pick out a few Cepheids from all those millions of stars in that galaxy?

And why won’t Word Press let me logout?

As I think I have now convincingly shown, it (your comment) contains some errors of fact, and some errors in the assumptions.

Thus, in logic, if the premises are incorrect, the conclusions have, at best, an indeterminate status (and, in most cases, are simply wrong).

I trust that, by now, you know what I am saying; if you still don’t, please ask for clarification.

Huh?

My comment contains two paragraphs, only one of which you quoted.

The second is (I’m putting it in bold this time, in the hope that you don’t miss it): “Not to worry; over the next series of comments, by me, I’ll address them one by one (well, some of them anyway).”

Which, I hope you’ll agree, I have done.

@opencluster: by their variability.

Cepheids have very characteristic light curves (= a plot of brightness vs time), so if you take lots of images of the same part of a galaxy, over many days, you can pick out all the variables (by ‘blinking’ the images, or, today, with software), and the light curves of the variables tell you which are Cepheids (and which are other kinds of variables).
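The blink-and-compare procedure described above can be sketched in two stages: flag stars whose brightness scatters much more than the measurement errors allow, then try many trial periods and keep the one that folds the light curve into the tightest shape. The period search here is a crude phase-dispersion method swapped in for illustration, not necessarily what any survey pipeline uses. The light curves are synthetic and hypothetical (real Cepheid light curves are asymmetric rather than sinusoidal), and the 3.0 variability cut and ten phase bins are arbitrary choices.

```python
import math
import random


def is_variable(mags, errors, threshold=3.0):
    """Flag a star whose scatter is much larger than its photometric errors.

    Uses the reduced chi-square about the mean magnitude; the threshold of
    3.0 is an arbitrary illustrative cut, not a survey standard.
    """
    mean = sum(mags) / len(mags)
    chi2 = sum(((m - mean) / e) ** 2 for m, e in zip(mags, errors))
    return chi2 / (len(mags) - 1) > threshold


def fold_scatter(times, mags, period, n_bins=10):
    """Total within-bin squared deviation after folding at a trial period."""
    bins = [[] for _ in range(n_bins)]
    for t, m in zip(times, mags):
        phase = (t / period) % 1.0
        bins[min(int(phase * n_bins), n_bins - 1)].append(m)
    total = 0.0
    for b in bins:
        if len(b) > 1:
            mean = sum(b) / len(b)
            total += sum((x - mean) ** 2 for x in b)
    return total


def best_period(times, mags, trial_periods):
    """Trial period whose folded light curve is tightest (crude phase dispersion)."""
    return min(trial_periods, key=lambda p: fold_scatter(times, mags, p))


# Synthetic, hypothetical data: 200 epochs over 300 days of a star pulsing
# with a 12.3-day period, next to a star of constant brightness.
rng = random.Random(42)
times = sorted(rng.uniform(0.0, 300.0) for _ in range(200))
pulsing = [20.0 + 0.4 * math.sin(2 * math.pi * t / 12.3) for t in times]
steady = [20.0] * len(times)
errors = [0.05] * len(times)

trials = [5.0 + 0.01 * i for i in range(1500)]  # about 5 to 20 days
print(is_variable(pulsing, errors), is_variable(steady, errors))
print(f"best period: {best_period(times, pulsing, trials):.2f} days")
```

Folding at a multiple of the true period still gives a fairly orderly curve, so in practice the trial range and the shape of the folded light curve both need checking.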

Anaconda Says:

May 7th, 2009 at 3:45 pm

Yep – of course it could all be wrong. But then we would have to confront a ramification of this of far more depth than I think you or I could possibly understand – that all of the successes of modern cosmology with all of its interconnectedness – and there have been many – have just arrived at the answers they have by pure chance. They just happen to explain these things that they explain and fit with the vast majority of observations simply by pure coincidence. And you want us to believe this is the case without so much as putting forward a viable alternative? I don’t mean something vague like ‘it could be some other force’; it must be concrete. What does the alternate theory predict for the isotopic abundances in the universe? Does it explain the CMB and the anisotropies it contains? If redshift is not an indicator of universal expansion and hence distance, then what specific mechanism generates it? Why are more highly redshifted galaxies smaller in angular size and why do they appear distinctly less developed than low redshift galaxies if not because of distance? How do you account for the metallicity of such galaxies and their gaseous content?

There are literally millions of questions that the current paradigm addresses and addresses well. There are also many that it does a poor job of, so we tinker to see if we can make it fit. We don’t throw the baby out with the bath water at the first sign of difficulty with a theory though, or nothing would ever get done – scientists usually don’t just shout Eureka! and magically come up with a full theory that agrees perfectly with every observation ever devised…

Dark energy and dark matter are working ideas that will be tested and tested rigorously. The truth will emerge – an incorrect theory will be impossible to fit to all of the facts – the very principle of science. We all know that we haven’t got nearly all of this correct yet, so of course there is room for backtracks and false alleys of exploration. Time will tell.

Amass a dormitory (or two) of math (or other types of) savants, and set them to work on this problem.

Give’em something really useful to do, besides answering fantastic division questions on TV.

Simply allow them to listen and watch Physicists lecture and perhaps even talk about this stuff.

No mistreatment, just serious assistance for them, and exposure to something that they and others could greatly benefit from.

What’s to lose?

@Triskelion: that’s what already happens, in universities all over the world, isn’t it?

the math savants are called ‘grad students’ 😉

I think that when we observe and measure certain areas of the cosmos, we may find deflating areas of space in some places and inflating areas of space in others.

The big bang caused compression waves traveling in space.

Between the waves it appears to be inflating and in the denser parts of the waves it may appear to be deflating or perhaps neither one.

I dis-believe that no space existed before.

I can’t make myself believe in nothing.

Thus, there was something.

The trick is to try to observe it.

It is too bad this thread is getting buried by PU stuff.

Dark energy is a placeholder. What is commonly thought is that the cosmological constant is due to the quantum vacuum state. The Einstein field equation

$$R_{ab} - \tfrac{1}{2}R\,g_{ab} = \kappa T_{ab}$$

will produce the cosmological constant term with

$$\kappa T_{ab} = \Lambda\,g_{ab}$$

if $T_{ab} = (e + p)\,U_aU_b - p\,g_{ab}$ (signature $+,-,-,-$) with $p = -e$, so that $T_{ab} = e\,g_{ab}$ and $\Lambda = \kappa e$,

for $e$ the density of the zero-point energy. There are some funny things about this idea. The universe with a cosmological constant has Ricci curvature equal to the metric times a constant,

$$R_{ab} = \left(\Lambda + \tfrac{R}{2}\right)g_{ab},$$

which is a vacuum solution (no source) and the curvature is purely geometric. So the meaning of putting the quantum vacuum in for a source is not entirely clear.
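The constant in that statement can be made explicit by taking the trace. Assuming four spacetime dimensions ($g^{ab}g_{ab} = 4$) and the same sign convention as the field equation above, contracting $R_{ab} - \tfrac{1}{2}R\,g_{ab} = \Lambda\,g_{ab}$ with $g^{ab}$ gives

$$
R - 2R = 4\Lambda \quad\Longrightarrow\quad R = -4\Lambda,
\qquad
R_{ab} = \left(\Lambda + \tfrac{R}{2}\right) g_{ab} = -\Lambda\, g_{ab}.
$$

The overall sign in front of $\Lambda$ depends on the metric signature and curvature conventions, so other texts may write $R_{ab} = \Lambda\,g_{ab}$ for the same vacuum solution.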

The existence of dark energy may well challenge our concepts of both spacetime and what is meant by a vacuum state in quantum mechanics.

Lawrence B. Crowell

Or redshift might be quantized as a function of composition/energy level.

http://www.ias.ac.in/jarch/jaa/8/241-255.pdf

solrey,

This old Arp paper is so antiquated. I mean, galaxies having radial velocities >1000 km/sec have their own motions, and not only the expansion velocity. What about the rest of the universe, eh? (That doesn’t count, I suppose?) Hubble expansion at ~50 to 55? I thought it was closer to 75 – must be an illusion, perhaps?

Nonsense, mate.

Did you read the article before making this post?

The distance derived from measuring Cepheid variables HAS NOTHING TO DO WITH REDSHIFT.

Cepheid variables vary, hence the name.

The period of that variability depends on how bright the star REALLY is.

We can look at, and measure how bright it LOOKS.

This tells us how far away the galaxy hosting the Cepheid variable is. NO ASSUMPTIONS ABOUT REDSHIFT REQUIRED.

We can then measure the red shift of the SAME GALAXY.

If we do this for many galaxies, we can see if there is a relationship between DISTANCE and RED SHIFT, without ACTUALLY making any assumptions about the nature of redshift (in fact, this is precisely what Edwin Hubble did).
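The procedure just described amounts to a straight-line fit. A minimal Python sketch, assuming the low-redshift approximation v ≈ cz and using hypothetical galaxies whose distances stand in for Cepheid measurements, recovers H0 as the slope of velocity against distance:

```python
C_KM_S = 299_792.458  # speed of light in km/s


def fit_hubble_constant(distances_mpc, redshifts):
    """Least-squares slope of v = c*z against distance, with no intercept.

    Uses the low-redshift approximation v ~ c*z; the distances come from an
    independent method (e.g. Cepheids), so no redshift model enters them.
    """
    velocities = [C_KM_S * z for z in redshifts]
    numerator = sum(d * v for d, v in zip(distances_mpc, velocities))
    denominator = sum(d * d for d in distances_mpc)
    return numerator / denominator


# Hypothetical galaxies: distances in Mpc and redshifts generated from H0 = 74.2.
distances = [5.0, 10.0, 16.0, 25.0, 40.0]
redshifts = [74.2 * d / C_KM_S for d in distances]
print(f"fitted H0 = {fit_hubble_constant(distances, redshifts):.1f} km/s/Mpc")
```

Forcing the line through the origin reflects the form of Hubble’s law (zero distance, zero recession velocity); with real data, the galaxies’ own peculiar motions of a few hundred km/s scatter nearby points off the line.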

Maybe I’m just having a bad day, but I can’t actually extract anything meaningful or relevant out of that.

AFAIK it was Michael Turner in a paper titled:

“Prospects for probing the dark energy via supernova distance measurements”

(available on Arxiv)

abs/astro-ph/9808133

Which, AFAIK, predates the Riess et al. paper.

Alternative explanations of redshift have failed to measure up. Solrey is a shill for dead physics. I am not sure why people do this. It is not only wrong, but it is boring as well.

Lawrence B. Crowell

Lawrence B. Crowell says:

“Alternative explanations of redshift have failed to measure up.”

I’m not a professional mathematician or physicist, just an enthusiastic amateur. Doing actual quantitative numerical calculations takes me ages… but I DID check up (some years ago) on “intrinsic redshift” as proposed by Arp.

Now, somebody please correct me if I’m wrong – like I said, I don’t really do this kind of math – but: “intrinsic redshift” seems to imply either:

… a different mass for elementary particles in the redshifted Galaxy (lower, I reckon, but I cannot be certain)

… or else:

time passing at a different (slower??) rate.

My view of how things work is based on the validity of the same physics throughout my Universe.

Arp may have “emeritus” status, but I disrespectfully stick out my neck and say “he cannot be right on this subject!”. Intrinsic redshift looks like an outright twisted notion to me – not even “pseudoscience” (which can be fun), but twisted.

@Feenixx: your critical thinking skills are working just fine! 🙂

Arp teamed up with Narlikar to develop what is called the Variable Mass Hypothesis (VMH) which is, at this level, the first of your “imply”‘s; so, well done.

And, IIRC, Narlikar tied the VMH to a version of the Steady State Cosmology theory which he worked on with Fred Hoyle.

Arp, Geoffrey Burbidge, and others wrote quite a few papers which set out to show that astronomical observations are consistent with the VMH, but as I said earlier (and also elsewhere) none of them has been able to show consistency with all the relevant observations (or even a reasonable subset of them), so it’s now only an interesting chapter in the history of astronomy and cosmology (take note solrey).

However, it’s fun to think of what the VMH means. For example, if you could, somehow, take a few grams of copper, sodium, magnesium, barium, etc from one of these high-z objects, bring them instantly here, make fireworks from them, and then set them off, you’d get quite a surprise! (can you work out what you’d see?)

And this gives another way to test the idea: under the VMH, at least as it applies to galaxies and quasars, particles gain mass rather slowly (after they were created in the nucleus of the active galaxy parent – remember this is tied to Steady State Cosmology), reaching values that we find in our labs today only after hundreds of millions or billions of years.

Which is more than enough time for a high-energy cosmic ray to travel from there to here, especially when you include time dilation.

So we should find that very high energy cosmic rays have at least a component with unusual masses (because at least some of these UHECRs originate in those ‘young’ active galaxies and quasars): protons with masses of ~1 × 10⁻²⁷ kg, for example (vs 1.67 × 10⁻²⁷ kg).

Guess what? No such protons (or iron nuclei, or …) have been found.

I met Narlikar at a conference last decade. He is a curious individual who insists on promoting the Hoyle-Narlikar idea of a propagator-based universe, or a modified Feynman-Wheeler absorber type theory. So he toils to keep producing ever more rococo modifications of this. The whole idea is basically wrong, or there is no evidence lending any support for the idea.

The variable mass idea has gone through various modifications. The initial idea was that the universe did expand, but did so by continuously creating matter. So the conserved quantity in cosmology is not mass-energy, but its density. The idea flopped for a number of reasons. It has problems with quantum theory, and secondly it did not reproduce the CMB properly. So then the idea was to have particles adjust their mass with time. This leads to a host of problems. And as pointed out if this happens with the proper time of the particle then we should find cosmic rays with different masses.

I have no problem with working up alternative theories. A lot of this came about in the 1960s as a way of trying to find failure points in the big bang. This is acceptable science. It was reasonable to ask whether the observed expansion and CMB could be understood by completely alternative means. Of course that was then. These alternatives have failed, and to continue to uphold them is to embrace pseudo-science or just outright crackpottery. A more extreme example is seen with geocentrism, which has a small cadre of upholders out there.

I do not know why people want to put themselves in this position. There is a certain epiphany that comes from admitting one’s own error. I am wrong, or we are wrong is an honest and I think mentally healthy move to make when confronted with the facts as they develop against you. Yet for some reason there is a growing trend of people holding onto outdated theories, creationism, conspiracy theories and the like. This is a regrettable aspect of the last several decades.

I might also say that the internet is a mixed blessing. It is a part of killing serious journalism. Most online journalism is just a recycling of serious journalism, or worse nonsense. When it comes to popularizations of science a similar trend appears to be developing. Free energy, cold fusion, plasma cosmology and other very “alt-sci” websites exist which compete with real science for attention. Along with this are websites devoted to creationism (www.answersingenesis.com) and sites meant to give disinformation on environmental and energy issues. This is particularly the case with global warming. So the internet is serving less to bring about a flowering of thought and honest ideas, and instead is creating a smokescreen or cacophony of disinformation.

In looking for the origin of the term ‘dark energy’, I found the Wiki piece illuminating: “The term “dark energy” was coined by Michael Turner in 1998.[20]”. See the Wiki entry for ‘dark energy’, then check out the ‘History’ section here: http://en.wikipedia.org/wiki/Dark_energy . Their reference 20 links to two 1998 papers with Turner (and different co-authors) and shows exactly how the term was used in both. @ Lawrence B. Crowell, many thanks for your in-depth look at specific areas of dark energy physics and the impact research into these areas could have in revolutionizing our understanding of matter and energy. As Astrofiend has pointed out elsewhere, we seem to be at a point where a great number of theories concerning DE and DM are being presented, and, following historical precedent, some major paradigm shift may soon appear that totally reframes our understanding of physics. This is a good thing, and I look forward to seeing our understanding of DE and DM grow. Thanks again for wading through the PU stuff to give us a good quantitative look at specific areas of DE theory and its implications and impact for future research.

@Jon Hanford: thanks for the link, and inputs.

I’ve not found anything earlier than the Huterer and Turner astro-ph preprint (which Trippy also noted, earlier).

However, I agree with whoever wrote the Wikipedia article that the way Huterer and Turner use the term strongly suggests that it was already in use (i.e. that they did not coin it, at least not in that preprint). Of course, that’s the normal way neologisms are born: they appear first in spoken language and are later written down, with usage in the formal register (I think that’s what linguists call it) appearing last.

I had to look up Narlikar, and I’m surprised I had never heard of him. Having stumbled on it unexpectedly (or rather, having had it pointed out to me), I can’t quite see the link between his hypothetical “variable mass” and Arp’s “intrinsic redshift”, but I’ll look into it. To me, it isn’t a waste of time; I actually find scientific fringe speculations quite entertaining and mentally stimulating.

My own attempts at unraveling “intrinsic redshift” arrived at different masses for the same particles in different places in the Universe, while he seems to propose different masses at different times…

ah, well… I could have missed something. Even some PhDs seem to miss things, sometimes 😉

@Feenixx: the link is that, in the Arp-Narlikar version of the VMH (IIRC, which I may not), new matter is created in the nuclei of active galaxies (the “parent”) and, by some unspecified mechanism, blasted out at relativistic speeds.

At creation, the matter, which comprises at least H and He (I’m not sure about the metals), has zero mass, but, again by some unspecified mechanism, it gains mass as it ages, and also slows down.

That is why quasars are arrayed in lines across active galactic nuclei, with the ones closest to the “parent” having the highest redshifts; the distance from the parent is a measure of time (statistically speaking), according to Arp and G. Burbidge.

Now, today, this may seem quite ridiculous, given the results of large, careful surveys such as SDSS, but you must remember that until at least the early 1970s (and perhaps even the late 1970s or early 1980s) the systematics of quasar (QSO, blazar, etc.) discovery were poorly understood.

One intriguing factoid: very early it was discovered that some quasars (they were all radio sources back then) had ‘fuzz’ around them (this may have happened within a year of quasars being discovered), which today we call the quasar’s host galaxy (not to be confused with Arp’s “parent” galaxy!). Interestingly, spectra of some of the host galaxies were reported as early as 1977/8-1982, and were shown to have the same redshift as the quasar itself, as well as an SED which resembled that of normal, local galaxies with similar morphologies. From where we stand today, I’d’ve said that was pretty much conclusive evidence that the Arp-Narlikar VMH had no legs, but as a few minutes with ADS will show, it was not (for some, anyway).

@ Feenixx:

Have you hung out in the BAUT Forum’s Against the Mainstream (ATM) section (Fraser referred to it in his Crackdown blog)?

Or the JREF Forum’s Science, Mathematics, Medicine, and Technology section?

Of course very few of the “fringe speculations” you will find in either place would merit the qualifier “scientific”, even with the most generous of interpretations! 🙂

If you’re into more serious stuff – with the criterion for ‘serious’ being something like at least a dozen papers published in relevant, peer-reviewed journals – you’ll have to look very hard, because unless the fringe speculation has, or had, a fan club outside the relevant scientific community (astronomers, say), very few people outside that community will even know such speculations exist, and finding them in the journals would be the work of Hercules (though not of Sisyphus).

Of course, what is today fringe may have once been a serious alternative, or even mainstream (think of the aether, for example).

It might be fun to draw up a list of failed (scientific) ideas in astronomy, cosmology, and space science, over the last ~80 years (i.e. since the quantum revolution had taken firm hold, and the Great Debate was over), starting with those which gained traction (or at least serious coverage) in the popular press; obviously, Steady State cosmology would be at, or near, the top of such a list.

Out of curiosity: where would MOND fit, in your view, wrt being alternative/fringe/etc? And what about the various extensions which incorporate relativity?

[quote]So the internet is serving less to bring about a flowering of thought and honest ideas, and instead is creating a smokescreen or cacophony of disinformation[/quote]

The internet doesn’t create the cacophony. It has always been there. Individuals have always been responsible for filtering out the noise from the signal for themselves. But thanks for being part of the signal rather than part of the noise :))

Nereid wonders:

I haven’t looked much at MOND – it strikes me as reasonably consistent, but also as quite vague on some points, with more (currently untestable) assumptions than dark matter, and hence more open to “abuse”. I’m not certain whether I’d call it “fringe” or “alternative”, or perhaps just “in need of more time”. My attitude here is: if calculating the way galaxies rotate according to MOND and by invoking Dark Matter leads to the same result, then I’ll regard both as different valid ways of exploring the same phenomenon. I have a completely agnostic attitude on what Dark Matter actually “is”.

Apart from that: following Newton’s ancient findings and nothing else, unmodified, seems to work a treat… like pre-programming a spacecraft to fly past Enceladus at 20 times the speed of a combat plane with 25 km to spare… or to land on an asteroid not much bigger than a large mountain.
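The rotation-curve comparison between Newton and MOND can be sketched numerically. This is a minimal illustration, not anything proposed by the commenters: it treats a galaxy as a single point mass of 10^11 solar masses (a hypothetical value), assumes the commonly quoted MOND acceleration scale a0 ≈ 1.2 × 10^-10 m/s², and uses the so-called "simple" interpolating function μ(x) = x/(1+x). Real rotation-curve fits use the measured distribution of stars and gas, so treat the numbers as illustrative only.

```python
import math

G = 6.674e-11     # gravitational constant, m^3 kg^-1 s^-2
A0 = 1.2e-10      # assumed MOND acceleration scale, m s^-2
M_SUN = 1.989e30  # solar mass, kg
KPC = 3.086e19    # one kiloparsec, m


def newtonian_accel(mass_kg, r_m):
    """Newtonian acceleration at radius r from a point mass."""
    return G * mass_kg / r_m**2


def mond_accel(mass_kg, r_m, a0=A0):
    """MOND acceleration with the (assumed) simple interpolating function.

    Solving a * mu(a/a0) = g_N with mu(x) = x/(1+x) gives the quadratic
    a^2 - g_N*a - g_N*a0 = 0, whose positive root is returned here.
    """
    g_n = newtonian_accel(mass_kg, r_m)
    return 0.5 * g_n + math.sqrt(0.25 * g_n**2 + g_n * a0)


def circular_speed(accel, r_m):
    """Speed of a circular orbit, v = sqrt(a * r), in m/s."""
    return math.sqrt(accel * r_m)


if __name__ == "__main__":
    mass = 1e11 * M_SUN  # hypothetical galaxy mass, treated as a point
    for r_kpc in (5, 10, 20, 40, 80):
        r = r_kpc * KPC
        v_newton = circular_speed(newtonian_accel(mass, r), r) / 1e3
        v_mond = circular_speed(mond_accel(mass, r), r) / 1e3
        print(f"{r_kpc:>3} kpc  Newton {v_newton:6.1f} km/s  MOND {v_mond:6.1f} km/s")
```

In the Newtonian column the speed keeps falling off as 1/√r; in the MOND column it levels off near (G M a0)^(1/4), the flat rotation curve that dark matter halos are otherwise invoked to explain. Close to the centre, where accelerations are far above a0, the two agree.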

Those “against the mainstream” forums, ah, well, I used to read them, but after a while they become sort of “always the same-ish”. For pure entertainment value, I like conjectures where the proponent attempts to apply principles of physics (often cracked fringe theories) to subjects such as sociology, psychology and various historical studies.

@ Feenix: Ever see any papers on Hydro-Gravitational-Dynamics (HGD)? I’ve come across a few papers by Carl Gibson & Rudolph Schild elaborating on this strange theory. You can read an overview paper by Gibson with refs to previous papers here: http://arxiv.org/ftp/arxiv/papers/0803/0803.4288.pdf . This short, illustrated paper appears to have been presented at a symposium-workshop in St. Petersburg, Russia in 2008. As Nereid commented earlier, most of the refs are to Gibson’s and Schild’s earlier work, and only a few have been published in a relevant journal. Having only read the linked paper and the papers on VV 29 and Stephan’s Quintet, I can’t comment on the body of their work, but I think you and others might find this theory oddly entertaining 🙂