The very small wavelength of gamma ray light offers the potential to gain high resolution data about very fine detail – perhaps even detail about the quantum substructure of a vacuum – or in other words, the granularity of empty space.

Quantum physics suggests that a vacuum is anything but empty, with virtual particles regularly popping in and out of existence within Planck instants of time. The proposed particle nature of gravity also requires graviton particles to mediate gravitational interactions. So, to support a theory of quantum gravity we should expect to find evidence of a degree of granularity in the substructure of space-time.

There is a lot of current interest in finding evidence of Lorentz invariance violations. Lorentz invariance is a fundamental principle of relativity theory which (amongst other things) requires that the speed of light in a vacuum should always be constant.

Light is slowed when it passes through materials that have a refractive index – like glass or water. However, we don’t expect such properties to be exhibited by a vacuum – except, according to quantum theory, at exceedingly tiny Planck units of scale.

So theoretically, we might expect a light source that broadcasts across all wavelengths – that is, all energy levels – to have the very high energy, very short wavelength portion of its spectrum affected by the vacuum substructure – while the rest of its spectrum isn’t so affected.

There are at least philosophical problems with assigning a structural composition to the vacuum of space, since it then becomes a background reference frame – similar to the hypothetical luminiferous ether which Einstein dismissed the need for by establishing general relativity.

Nonetheless, theorists hope to unify the current schism between large scale general relativity and small scale quantum physics by establishing an evidence-based theory of quantum gravity. It may be that small scale Lorentz invariance violations will be found to exist, but that such violations will become irrelevant at large scales – perhaps as a result of quantum decoherence.

Quantum decoherence might permit the large scale universe to remain consistent with general relativity, but still be explainable by a unifying quantum gravity theory.

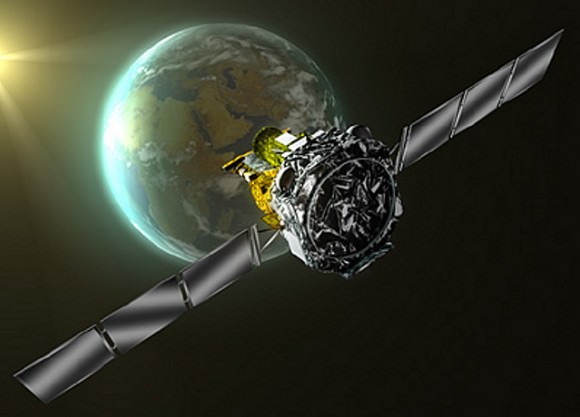

On 19 December 2004, the space-based INTEGRAL gamma ray observatory detected Gamma Ray Burst GRB 041219A, one of the brightest such bursts on record. The radiative output of the gamma ray burst showed indications of polarisation – and we can be confident that any quantum level effects were emphasised by the fact that the burst occurred in a different galaxy and the light from it has travelled through more than 300 million light years of vacuum to reach us.

Whatever polarisation can be attributed to the substructure of the vacuum would only be visible in the gamma ray portion of the light spectrum – and it was found that the difference between polarisation of the gamma ray wavelengths and the rest of the spectrum was… well, undetectable.

The authors of a recent paper on the INTEGRAL data claim it achieved resolution down to Planck scales, being 10^{-35} metres. Indeed, INTEGRAL’s observations constrain the possibility of any quantum granularity down to a level of 10^{-48} metres or smaller.

Elvis might not have left the building, but the authors claim that this finding should have a major impact on current theoretical options for a quantum gravity theory – sending quite a few theorists back to the drawing board.

Further reading: Laurent et al. Constraints on Lorentz Invariance Violation using INTEGRAL/IBIS observations of GRB041219A.

At the ninth paragraph, there’s a missing preposition “of” in the second line: “… Gamma Ray Burst GRB 041219A, one [of] the brightest such bursts on record.”

Check – thanks

What does your statement have to do with anything on this forum? NOBODY CARES. People post from their phones and don’t have time to make everything perfect, don’t you get it? Once again, Ivan3man, what a douche.

I know the difference between formal and informal writing; therefore, I do not nitpick on any person’s comments!

Steve,

Bravo on the statement “There are at least philosophical problems with assigning a structural composition to the vacuum of space, since it then becomes a background reference frame – similar to the hypothetical luminiferous ether which Einstein dismissed the need for by establishing general relativity.” This is exactly the difficulty we face with this problem. This ended up being a bit long, with parts pieced in from other communications. However, this result and the results from the FERMI spacecraft 2-1/2 years ago are extremely important.

I was wondering if this topic would make its way here.

This subject has consumed a lot of discussion time on my part this past couple of weeks. In part I have worked for over 20 years on an idea that quantum mechanics and general relativity are on the level of category theory of mathematics the same thing. They are equivalent, but have an appearance according to a type of projection on varieties that makes them appear different.

Everything is now up for “grabs.” A lot of theory is now probably worth little more than paper for recycling. There is no information pertaining to such vacuum physics: It does not exist. This has to be taken seriously, and the implications are troublesome for many in the theoretical world. These data are the Michelson-Morley experimental results of our age. Physics contains thousands of people out there, many with decent positions at universities, who have worked up theories that are now little more than crap.

We seem to have a problem with this matter of the “vacuum.” A quantum vacuum is computed with the ZPE and this has generated lots of attention. Supersymmetry eliminates the vacuum in an interesting way with a graded Lie algebra which assigns to each fermion and boson a corresponding boson and fermion partner respectively. In the unbroken phase SUSY then cancels out the negative fermion vacuum with a positive boson vacuum. There was always something attractive about this, for it appeared to be a fundamental way of eliminating the vacuum physics. However, if you break supersymmetry the vacuum energy is increased and you have a vacuum of positive energy, including with SUGRA. However, we have no experimental evidence for this vacuum.

In 2008 the results of the Fermi spacecraft were released. The time of arrival for gamma-rays and radio waves was the same over a distance of 10 billion light years. It was back then that I began having lots of doubts about these fluctuation ideas with the metric. 10 billion light years is 10^{27}cm. A change in the speed of light would mean that quantum fluctuations of the metric introduce a dispersion, which acts as a sort of mass. The wave equation for radiation is then Proca-like, ∂^a∂_aA - m^2A = 0, which translates to a dispersion term in a Green’s function 1/(k^2 - m^2). The metric fluctuations which induce this are δL/L ~ L_p/L ~ 10^{-33}/10^{-4} ~= 10^{-29}, or a fluctuation induced mass of 10^{-29}M_p ~ 1eV. The dispersion-induced mass shifts k to k + δk, and the area probed is then δx = 1/δk ~ 10^2L_p. However, the time of arrival had no spread, and so the fluctuation scale probed is close to the Planck length. So these results have to be taken into account as well. Y. Jack Ng’s holographic fluctuation results are also in question. This predicts δL = L_p(L/L_p)^{1/3} and the numbers predicted are 10^{-33}cm(10^{60})^{1/3} = 10^{-13}cm, and clearly no fluctuations on that scale were found.
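
The holographic estimate quoted above is easy to check numerically. The following is only a sketch that reuses the comment's own rounded inputs (L_p ≈ 10^{-33} cm and a path length of 10^{27} cm); the function name is made up for illustration.

```python
# Rounded inputs taken from the comment above (assumptions, not measured values).
L_PLANCK_CM = 1e-33   # Planck length, in cm
PATH_CM = 1e27        # light-travel distance quoted in the comment, in cm

def holographic_fluctuation(path_cm, l_planck_cm=L_PLANCK_CM):
    """Ng-style estimate: delta_L = L_p * (L / L_p)**(1/3)."""
    return l_planck_cm * (path_cm / l_planck_cm) ** (1.0 / 3.0)

ratio = PATH_CM / L_PLANCK_CM                # about 1e60
delta_l = holographic_fluctuation(PATH_CM)   # about 1e-13 cm
print(f"L / L_p ~ {ratio:.0e}")
print(f"delta_L ~ {delta_l:.0e} cm")
```

With these inputs the predicted fluctuation comes out near 10^{-13} cm, which is the scale the comment says was not seen.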

The problem as I have seen over the last couple of years, in particular since FERMI gave similar results, is we are over counting the number of degrees of freedom (DoF) in the universe. The quantum vacuum has become sort of the aether space of our modern age. We may remember back then that it was presumed there was a “fluid” which inhabited space that was the medium electromagnetic radiation propagated on. There was then an implicit degree of freedom density, if we think of this fluid as made of particles of some type. None of this worked, and in the end the solution came down to 6 parameters of a symmetry group called the Lorentz group: 3 for rotations and 3 for boosts. None of this aether nonsense was needed. We are facing a similar shift in our perspectives on physics. Huge vacuum energies, with lots of modes and DoF, must be replaced by some simpler principle. Remember that the inflationary vacuum is enormous, up to 10^{110} times the current value estimated based on the small cosmological constant, and we still have this problem of 123 orders of magnitude with vacuum physics.

The correspondence (worked by Duff, Dahanayake, Borsten, Rubens) between SLOCC groups for 3-partite entanglements and the STU moduli for 1/2-1/4-1/8 supersymmetric BPS black holes and between 4-partite entanglements and extremal black holes appears to suggest some element of this path. The actual spacetime physics is completely classical. The spacetime physics has underlying quantum physics, but this is not entirely known as yet. The standard 3-partite entanglement group decomposes into C^3/SL(2,C)^3, which has an STU spacetime moduli equivalency in R^3/SL(2,R)^3. The spacetime physics that is classical indicates a Copenhagen-like correspondence, and I think that underlying quantum physics for gravity involves SL(2,H) symmetries, where H is the quaternions. Quaternions are pairs of complex numbers, which is a dual system of observables associated with the entanglement group with SL(2,C). The direct measurement of these observables involves the classical realization, which is what we call spacetime physics.

What this duality is exactly is tough to ferret out, but I think this involves a duality with respect to the Borel group representation of the AdS completion with discrete symmetries. These Borel groups define both the Heisenberg group and the parabolic group for null geodesic congruences (lightcones, horizons etc.). The AdS_n in a near horizon condition decomposes into AdS_2xS^{n-2}, and the AdS_2 has an interesting duality between soliton physics and fermion condensate physics. It may in the end be that the number of degrees of freedom in the universe is then reduced to a very small number for low dimensional spaces. The approach by Duff et al and these results with Borel subgroups I am working on are aspects of how quantum mechanics and gravitation are categorically equivalent. If this is the case, then what we expect to come from

LC

Actually, that was on May 10, 2009, when Fermi’s LAT detected from a 2.1-second gamma-ray burst – designated GRB 090510 – two gamma-ray photons, possessing energies differing by a million times, which arrived just nine-tenths of a second apart after travelling 7.3 billion light-years. Universe Today featured an article, “Einstein Still Rules, Says Fermi Telescope Team”, on that subject, back on October 28, 2009.
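
To see why that arrival-time result is so constraining, here is a rough sketch of the implied limit on any energy-dependent difference in photon speed. It assumes the whole 0.9 second delay built up in transit and treats the 7.3 billion light-years as a simple light-travel time, ignoring cosmological expansion; the function name is hypothetical.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # Julian year, in seconds

def fractional_speed_difference(delay_s, distance_ly):
    """Upper bound on |delta v| / c if the whole delay arose in transit."""
    travel_time_s = distance_ly * SECONDS_PER_YEAR  # light takes 1 year per light-year
    return delay_s / travel_time_s

bound = fractional_speed_difference(delay_s=0.9, distance_ly=7.3e9)
print(f"|delta v| / c < ~{bound:.1e}")
```

Under these assumptions the two photons travelled at the same speed to within a few parts in 10^{18}.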

Indeed so, I had the year off by one. LC

Fascinating stuff. Coming from a different background, I was interested in this recent article:

http://www.electronicsweekly.com/Articles/2011/05/16/51054/graphene-could-reveal-the-grain-of-space-time.htm

and elsewhere

http://graphenetimes.com/2011/03/spin-and-the-honeycomb-lattice-lessons-from-graphene/

It originates from the paper: Spin and the Honeycomb Lattice: Lessons from Graphene [Phys. Rev. Lett. 106, 116803 (2011)]

or from arxiv

http://arxiv.org/abs/1003.3715

There are connections between graphene and the M2-brane, or at least there are similar properties. The AdS_4 spacetime which contains a black hole is perturbed near the horizon of the black hole into AdS_2xS^2. The AdS_2 has hyperbolic dynamics, which when perturbed by a Higgs-like field becomes a soliton. Zamolodchikov demonstrated how this is isomorphic to a fermion theory with a quartic potential. It is similar to superconductivity.

The graininess might be removed, but this needs to sit in an E_8 group theory. The 8 dimensional space may be tessellated by the Gosset polytope of the E_8 roots. One of the curious things about E_8 is that the space of roots is isomorphic to the algebra, which has a continuous structure. In this case the mass spectra on the 2 dimensional space is the (8,1) of the E_8 in the Zamolodchikov s = 1/2 conformal theory.

LC

I’m fascinated by the way you are comparing this problem to the problem of the electromagnetic medium. The realizations that dispelled the problematic need for the ether were radical to the point of being difficult for some to accept at that time – I can imagine the reality of quantum gravity will be equally paradigm-shattering.

The question being of course if there is such a thing as “quantum gravity”.

The ether was unnecessary. So is quantum gravity since we already know how to quantize gravity. It isn’t renormalizable, but I don’t think there is any requirement that an effective theory must be renormalizable, and the idea of an effective theory (such as general relativity) actually speaks against it.

There should be an underlying, quantized, theory that supplants gravity, but that can be much larger/different, say derived from cosmology in the way inflation is. Inflation has the necessary local degrees of freedom (its potential value, with fluctuations) to have variation in the same way gravity has.

Whether you want to call such a theory “quantized gravity” or “quantized cosmology” I don’t know. But if it is much more than gravity, say string theory, why not let it be called what it is? Conversely, to say “quantum gravity” at this stage of the game is premature, a sign of immature imagination, and potentially harmful to investigation.

Oh wow… that is very … deep. I am stuck in classical physics, you see. I have a very hard time with the spooky quantum world of probabilities and uncertainty and whatnot 🙂 thanks for the reply.

Wonderful article Mr Nerlich! The mystery of the universe has its own beauty and for me this is revealed by your simple explanations of humanity’s wonderful science methods. Being not schooled to a high degree on physics and math theory, I really appreciate it when people with higher knowledge such as yourself can distill things into more easily digestible chunks for me.

Also great commentary by LC. I am going to bookmark this page now and do some serious googling on some of the terms here when I get time. Wow!

What I don’t quite get is why anybody thinks that space should polarize light waves? If photons are excitations of the space-time grains, then they should be vibrating at all random directions, the space-time grains won’t stop certain directions of vibrations, they will be part of the vibrations themselves. It’s like expecting water to polarize water.

Water does polarize light. Any surface polarises light.

Water polarises light, but it doesn’t polarise other water. This is the point I’m making. Light is polarised by other substances, not by itself.

I understand exactly what you’re saying, though I haven’t got the information to clarify your question. In other words, it’s not you… you’re making perfect sense. I think others aren’t reading your statements carefully enough before they reply.

lcrowell already gave you the answer above:

“Photons are excitons of the QED vacuum. They are not excitons of spacetime quanta. Excitons of spacetime quanta would be gravitons.”

I think you misunderstand the described physics.

The GRB signal is already (highly) polarized, see the paper, from physics of supernova/jet formation that have been discussed here many times. What is observed is how much a difference in polarization is seen after photons have been traveling cosmological distances.

No one is claiming that photons are excitations of “the space-time grains” but of EM fields that live in a possibly granular spacetime. Hence you get the replies that point out that most anything external to the fields can polarize them further, water, surfaces, crystals, magnetic fields. And in principle, but as it seems not in practice, the vacuum.

The energy of a photon is E = ħω and the momentum is p = ħk = h/λ. A dispersion relationship is ω = ω(k), which is a linear dispersion if ω = kc. However, if there is a dispersion relationship that is due to spacetime fluctuations there is a dispersion

ω^2 = (kc)^2 ± Ak^3/M_p, A = constant

which has two solutions for ω, a birefringent property of quantum “foamy” spacetime. This splitting is what involves the polarization of EM photons.

It is in a way similar to the reflection of light off the surface of water. Certain crystals such as sapphire have this property. The splitting off of different dispersions induces a polarization splitting of light. These results give strong bounds on the occurrence of this, which tends to rule out a lot of grainy ideas about spacetime.
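
The size of the splitting between the two solutions can be sketched numerically. This is an illustration only: it works in natural units (c = ħ = 1), takes A = 1 as an assumed value, uses a rounded Planck energy, and the function name is hypothetical.

```python
import math

M_PLANCK_GEV = 1.22e19  # Planck energy in GeV (rounded)

def mode_splitting(k_gev, a=1.0, m_planck_gev=M_PLANCK_GEV):
    """Return omega_plus - omega_minus in GeV, natural units (c = hbar = 1).

    Uses omega_pm**2 = k**2 +/- a * k**3 / M_p. The difference is computed as
    (omega_plus**2 - omega_minus**2) / (omega_plus + omega_minus) so the tiny
    correction is not lost to floating-point rounding.
    """
    shift = a * k_gev**3 / m_planck_gev
    omega_plus = math.sqrt(k_gev**2 + shift)
    omega_minus = math.sqrt(k_gev**2 - shift)
    return 2.0 * shift / (omega_plus + omega_minus)

k = 1e-3  # a 1 MeV gamma-ray photon, expressed in GeV
split = mode_splitting(k)
print(f"fractional splitting ~ {split / k:.1e}")
```

The fractional splitting is roughly A·k/M_p, which is minuscule for any single photon; the effect only becomes testable because it would accumulate over cosmological distances.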

LC

You misunderstand my point here. I’m not saying whether or not water can polarise light, I’m saying whether or not a substance can polarise itself. Light is excitations of space-time quanta, and light itself is made from space-time quanta. Can a substance polarise itself? Can light polarise light, or can water polarise water waves?

Photons are excitons of the QED vacuum. They are not excitons of spacetime quanta. Excitons of spacetime quanta would be gravitons.

I suspect there is a confusion between the vacuum as a source of curvature and curvature itself. The Einstein field equation G_{ab} = (8πG/c^4)T_{ab} has on the left hand side curvature stuff and on the right hand side mass-energy source stuff. The electromagnetic field defines the T_{ab}, which determines the curvature stuff G_{ab} on the left. Now of course the coupling constant 8πG/c^4 is very small, so it takes a huge density of photons to generate curvature. On the other hand curvature can easily change the path that photons take.

The spacetime curvature these observations are attempting to measure is due to quantum fluctuations on a very small scale. I will post a rather long discussion on this for those who are geeky enough to go through the mathematics, which is pretty basic actually. I will discuss the FERMI result more than these. The polarization effect is a bit subtle.

LC

At question is whether and/or how much the vacuum is quantized. If it is quantized like the electron “orbits” and nucleus “shells”, light will incur a phase shift depending on wavelength and interaction, whether it is water of water or not. Light does interact with itself anyway. Light is assumed to interact with the oscillations of the space-time grains even if your ideas hold true. Your first sentence is the best question.

The photon is quantized as a harmonic oscillator. A harmonic oscillator is a spring or pendulum with a small amplitude. The quantized version involves discrete energy levels E = ħω(n + 1/2), separated by equal steps of ħω. The frequency ω is determined by the spring constant, and n just counts the photons generated with that frequency. The quantization of the Maxwell equations of electromagnetism works out to be a harmonic oscillator. If n = 0 there is still a residual energy left, ħω/2, which is called the zero point energy. To show this involves solving the Schrödinger equation for a system with a potential energy V = kx^2/2 and working through Hermite polynomials, stuff one gets in an undergraduate quantum mechanics class. That zero point energy term can be removed by a little trick with quantum operators called normal ordering. However, if this system is coupled to another particle field, then so is this vacuum, and one can’t so easily eliminate the coupling of this other system to this vacuum. Something called the Lamb shift is a consequence of this. This also leads to the whole matter of renormalization.
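
As a small numerical illustration of those energy levels, the sketch below evaluates E = ħω(n + 1/2) for a single mode. The frequency (roughly green light) is an arbitrary choice for illustration, and the function name is hypothetical.

```python
import math

HBAR_J_S = 1.054571817e-34  # reduced Planck constant, J*s
EV_IN_J = 1.602176634e-19   # one electronvolt in joules

def mode_energy_ev(n, frequency_hz):
    """Energy E_n = hbar * omega * (n + 1/2) of a mode holding n photons, in eV."""
    omega = 2.0 * math.pi * frequency_hz  # angular frequency
    return HBAR_J_S * omega * (n + 0.5) / EV_IN_J

f_green = 5.5e14  # Hz, roughly green light (illustrative choice)
zero_point = mode_energy_ev(0, f_green)
step = mode_energy_ev(1, f_green) - zero_point
print(f"zero-point energy: {zero_point:.2f} eV, level spacing: {step:.2f} eV")
```

The zero-point energy is always exactly half of the level spacing, whatever frequency is chosen.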

LC

It is hard to come up with an exact analogy, but here’s a water-based approximation.

Suppose it is raining. The air contains lots of water droplets, but you can still see objects in the distance. Sometimes, a raindrop is in your line of sight, so you lose a bit of contrast, but the focus is still the same. However, your short-wave radio reception may go to pieces, because the radio wavelength is a good match for the spacing between the raindrops, and light isn’t. Long-wave radio may be too large to be affected one way or the other.

Empty space may not be quite empty. It should be possible for a particle and an antiparticle to suddenly appear, and then annihilate again, paying back the energy they ‘borrowed’ to come into existence in the first place. This may give a slight texture to ‘empty’ space. If we found radiation with a wavelength that roughly matched the characteristic length of the texture of empty space, then we might expect that radiation to be deviated or delayed slightly. Radio waves, microwaves, visible light and X-rays all arrive at the same times. This research continues the search up to some enormous energies and tiny wavelengths, and still finds no significant effect. There may still be quantum granularity, but it will be really, really tiny, or the effects are really small.

Why do we think there is any quantum granularity in the first place? That’s a bit harder to explain.

This heuristic is commonly invoked, and if there were dispersion for EM radiation things might be a bit like this. However, these particle heuristics of virtual particles, electron-positron pairs popping in and out of the vacuum and so forth are approximations which correspond to radiative correction terms. These results suggest that quantum uncertainty is something more fundamental than this sort of heuristic.

LC

While I haven’t read quantum field theory I think radiative correction terms is a fancier way to say that virtual particles are already incorporated in the corresponding QFT. Indeed, they are the embodiment of interaction (as exchange of virtual particles).

They appear as real when you accelerate (Unruh effect, Hawking radiation). And they appear as bona fide particles after pair production (of strong fields, say).

The Unruh effect of the vacuum should incorporate all fields, even gravitation of flat space. I think “quantum uncertainty is something more fundamental than this sort of heuristic” is one good way of putting it, granularity is something over and beyond what you would naively expect.

This comment has nothing to do with this actual article, instead it is a question that has been intriguing me. The Question: If all energy emitted into the universe is stretched by the expansion of this universe, what do gamma rays start out as? If they reach us from billions of light years away and still possess such high energy/frequency, what type of energy did the gamma ray start with?

Please see reply to Guest above.

They start out as high energy gamma rays. There is no higher qualitative class, since they all behave the same. (Sufficiently high energy to interact with atomic nuclei.)

Those observations are fairly backyard cosmological, though. Low z (0.02) means low stretch. The results depend on timing (Fermi) and polarization (Integral) differences over long distances.

Your question is similar to the question above. This can be computed. The Hubble relationship is v = Hd, where H = 70km/sec/Mpc. This GRB is 300 million light years away, and 3.26 light years make a parsec. Hence this GRB is at 9.2×10^7pc = 92Mpc. Now multiply this by H and you get v = 6440km/sec. This is equal to v = .0215c, for c the speed of light. The z-factor is v/c, and the wavelength of any photon which escapes this GRB is stretched out by a factor of 1.02. This is not a whole lot. The GRB detected by the FERMI spacecraft was at 7.3 billion light years, or d = 2239Mpc. Multiplication by H gives 1.57×10^5km/sec, or about half the speed of light. The z factor is then ~ .5 and the wavelength of light is expanded by a factor of about 1.5.
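
The arithmetic above can be reproduced with a short script. It is a sketch of the same linear approximation z ≈ v/c with v = Hd, using the rounded constants from this comment; that approximation is only rough by the time z approaches 0.5, and the function name is made up for illustration.

```python
H0_KM_S_MPC = 70.0        # Hubble constant used in the comment
C_KM_S = 299_792.458      # speed of light
LY_PER_PARSEC = 3.26      # rounded conversion used in the comment

def low_z_stretch(distance_ly):
    """Return (distance in Mpc, recession speed in km/s, z ~ v/c)."""
    d_mpc = distance_ly / LY_PER_PARSEC / 1e6
    v = H0_KM_S_MPC * d_mpc
    return d_mpc, v, v / C_KM_S

for name, d_ly in [("GRB 041219A", 3.0e8), ("GRB 090510", 7.3e9)]:
    d_mpc, v, z = low_z_stretch(d_ly)
    print(f"{name}: d = {d_mpc:.0f} Mpc, v = {v:.3g} km/s, z ~ {z:.3f}, stretch ~ {1 + z:.2f}")
```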

LC

This comment has really nothing to do with this actual article. It is more of a question I would like to see answered. Question: If all light/energy emitted into the universe gets stretched by the expansion of said universe, what do gamma rays start out as at their source? If we are receiving these rays at such high energy frequencies, what is higher than gamma rays?

Stronger Gamma Rays.

Or in more detail:

Gamma rays form a VERY LARGE band. This band begins at a frequency of about 10^18 or 10^19 Hz. But we detect gamma rays with frequencies as high as 10^27 Hz or even higher.
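
To get a feel for how wide that band is, the frequencies can be converted to photon energies with E = hν. A minimal sketch, with a hypothetical function name:

```python
PLANCK_EV_S = 4.135667696e-15  # Planck constant h in eV*s

def photon_energy_ev(frequency_hz):
    """Photon energy E = h * nu, in electronvolts."""
    return PLANCK_EV_S * frequency_hz

for nu in (1e18, 1e19, 1e27):
    print(f"{nu:.0e} Hz -> {photon_energy_ev(nu):.2e} eV")
```

The band therefore runs from a few thousand electronvolts at the low end up to trillions of electronvolts at 10^27 Hz.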

However, there is also a distance limit on such high frequencies. The farther the source the more background light (the combined light of the stars and galaxies, not the CMB in this case) is between the source and us. And this light causes some of the very high energetic gamma ray photons to “annihilate” with the background light into electron-positron-pairs. This reduces the amount of very high energetic photons we can detect. So the highest energetic photons come from sources nearby actually, where redshift is not so important.

Great article and comments, and of course LC expands this in all the great directions. I too was wondering if this result would make it here.

The result is provocative if not robust. It relies on symmetries, or “softly broken” symmetries, such as supersymmetry to suppress vacuum birefringence factors of dimension 3.

If that symmetry is supersymmetry, the dimension 5 result should be supplanted with a dimension 6 result that is nowhere near the earlier constraint Fermi puts. [“Constraints on Lorentz Invariance Violation using INTEGRAL/IBIS observations of GRB041219A”, p 4.]

“We showed that, although astrophysical constraints are not yet really constraining, they are getting closer to the relevant regime (ξ of order 1 or smaller).”

Fortunately supersymmetry seems to be pushed out of relevance by WIMP searches both on Earth and as astronomical pair production events, as well as direct LHC experiments. AFAIU soon predicting supersymmetry particles mass would engender more finetuning than the purported symmetry would solve.

So it is promising and building on the Fermi results that pushed observations into the Planck sector for the first time. As the paper puts it: “written in the same way as we did in this work, this limit was ξ < 0.8”. And there was one Fermi observation of < 0.01 Planck length that was just shy of 3 sigma.

As I understand it the theoretical physics community treats the results as interesting but arguable. But the observations, such as they are, add up.

As for the physics, there really isn't anything that suggests that spacetime itself should be granular on any scale. Special relativity predicts the converse. The Planck scale is connected to particle physics of fields, not directly spacetime physics.

As such, general relativity is perfectly quantizable as an effective field theory and predicts gravitons and no more fully as expected. (The problem is that the quantized theory breaks down at higher energies, it is fundamentally non-renormalizable.)

Quantum gravity ideas such as loop quantum gravity show the problems with the ideas. As Nerlich notes they break special relativity. They break Lorentz invariance. They break lightcone gauge invariance. (Hence the "philosophical problem" has a very real physics alternate problem.) They have no lowest energy gap. And they don't admit harmonic oscillators so that one can derive dynamics.

If we go to ideas such as string theory structures, they have none of these problems. The Planck scale is simply a cutoff that leads into a dual physics model. The structure does not show up as granularity but as continuity. Showing that in this case it is possible to have the cake and eat it too.

The dimension 5 refers to the Kaluza-Klein dimension for electrodynamics. This has issues with supersymmetry, for Lorentz breaking is more commensurate with dimension 6. However, in dimension 5 if Lorentz symmetry fails at the Planck scale, for whatever rational or religious reasons, there is (almost) no reason why the following higher-dimension term (dimension-5 operator) in the Lagrangian coming from the Planckian physics shouldn’t occur in the effective action:

L = (ξ/M_p) n^a F_{ab} F^{bc} n^d ∂_e(n^e F_{bc})

This term is gauge-invariant and rotationally symmetric and it is suppressed by the right Planck energy scale where the Lorentz violation, picking a preferred unit 4-vector “n”, comes from by the assumption. The dimension 6 theory is similar, but with an additional internal index. Dimension 6 is such that Lorentz violations can be made to work with supersymmetry.

All of these theories give a dispersion term

ω^2 = (kc)^2 ± 2(ξ/M_p)k^{d-2}

where d = dimension. Generically, in whatever theory you are working in, the two solutions for the frequency amount to a birefringence, which has polarization effects. These observations of polarized light in the gamma ray burst found the polarization plane wasn’t rotating, which allowed them to impose a remarkable upper bound on the dimensionless coefficient ξ: ξ < 1.1×10^{-14}. In the natural Planck units, the Lorentz violation linked to the chirality-dependence is smaller than one part per 100 trillion.

I am placing here a general derivation of how a dispersion can arise from quantum fluctuations of spacetime. This covers more the results of the FERMI spacecraft, where I derive Torbjorn Larrson’s result on the limit below the Planck scale.

I know the space shuttle has all the “spacenews” right now, but this is really more of a fundamental nature.

LC

As I noted in my previous comment, the authors themselves were unable to “impose” that constraint, but had to stop at the remark that such constraints are getting closer.

You extend, but that doesn’t change the conclusion that it is the dimension 6 term that is rigorous as of yet. Instead you summarize their conclusion to say the opposite of what they claim.

Or at least, how I read it. If you don’t believe I quoted correctly, read the paper.

For myself I am cautiously optimistic, as I noted these tests do add up and one is already pushing the Planck scale even if this didn’t do so rigorously.* I’m all for chucking the unnecessary, wildly counterphysical (well, counter relativity/gauge theory) idea that quantum effects show up as fluctuation of spacetime metric.

That last sentence of yours seems misplaced in the context of my comments.

———

* Most natural string vacua are non-supersymmetric. Since the cosmological constant is so low, we sample a very little volume of string landscape. On these grounds I make the easy bet that our vacuum is most likely non-supersymmetric, whether string theory is a fact or not.

So I think the authors made the correct decision in not going overboard with this, despite what articles such as this would like to do. And in any case they should point out the tentative nature of this result, and they did.

Supersymmetry is the one thing which can unify internal and external symmetries. There is something called the Coleman-Mandula theorem which demonstrates that without graded symmetries such unifications are not possible. It would be very odd if supersymmetry turned out to be completely absent from reality.

Now in our low energy world supersymmetry is broken. How it is broken is rather interesting. The inflationary universe had a huge vacuum energy, but also an enormous negative pressure. So the cosmological constant Λ was determined as

Λg^{ab} = (8πG/c^4)(ρg^{ab} + pU^aU^b),

and the total free energy dF = dE – dQ = 0. Supersymmetry loves this situation and is in the unbroken phase. However, with reheating there is a symmetry breaking of the state, which also breaks supersymmetry. The total 1 = Ω_t = Ω_{de} + Ω_m + Ω_r for dark energy, matter (including dark matter) and radiation means that some of the energy has gone into matter and radiation, which is compensated for in the vacuum density. Then supersymmetry is broken.

Now again in all of this is mention of vacuum energy density and so forth. This is the stuff which gets us into trouble. These ideas are probably heuristics that do not capture the foundations of this problem. From an information theory perspective we might want to ponder what it means for a vacuum state to have any quantum information (qubit) content.

I have this idea that the universe consists only of one of each type of particle. The occurrence of multiple copies of an elementary particle, such as an electron, is due to entanglements across event horizons, such as the cosmological horizon or Bousso bounding surfaces. So an electron here is the same as the one there. In fact there is only one of each type of elementary particle. In this perspective there is really no vacuum state. The vacuum state is only a place holder for the uncertainty of an elementary particle appearing with some particular configuration variable set in a horizon entanglement. This is a bit odd to think about.

LC

If there is anything in the article I would nitpick, apart from the mentioned physics realization of a “philosophical problem”, it would be this:

I would amend that to say that we don’t expect the vacuum to show dispersion, additional differential slowing, because EM theory revolves precisely around combining E & M through a finite interaction speed. (As Einstein realized.)

Hence the EM vacuum has permeability and permittivity precisely as any other medium, and that sets the “slowed light” speed.

Light is everpresent. Something makes it behave the way it does, a medium. “Electromagnetic” fabric….which on one hand can not be granular because the dimension which it rests in is similar to bose einstein condensates….Overlaying positions…but it is granular in the way that it emerges into this dimension as a point matrix of tiny black/white holes in which each one contains all information of all others. So when we see light ‘travel’ it is not traveling continuously…it is cycling in and out of each of these vortexes and reappearing as information until it is located by an interaction/relationship. Just theorizing outloud………

J. Eve

I am sorry, but I can’t make sense of the general idea of this. My response will be partial:

“Light … a medium.”

Relativity predicts precisely that there is no medium for light, no ether. It works.

“dimension … similar to bose einstein condensates … positions.”

Again: relativity predicts precisely that there can be no preferred frame such as a condensate would constitute. It works.

“each one contains all information of all others”

Again and again: relativity predicts precisely locality. It works.

“reappearing as information”

Information is relative to a system and a chosen measure, say Shannon information used to analyze message channels. Nothing physical “is” information*, it is a measure or a measure value, depending on context.

Need I say it … it is information theory, and, hey: it works!

“theorizing outloud”

Theories make testable predictions. It works: =D

I didn’t see predictions here. Can you point to this missing piece?

———-

* Sometimes people identify degrees of freedom with information, say quantum variables. Then of course they can be said to exist as observables.

The graininess of spacetime tested here is due to quantum fluctuations. If I invoke the Heisenberg uncertainty principle ΔEΔt = ħ and use E = mc^2 there is then a fluctuation of mass given by Δm = ħ/(Δt c^2). The uncertainty in time is an uncertainty in position, Δt = Δr/c, so that Δm = ħ/(Δr c), and a metric Δg_{00} = 1 - 2Għ/(rΔr c^3), where the metric radius r >> Δr.

Now let the length uncertainty be Δr ~ L_p = sqrt{Għ/c^3}, and we substitute this into the metric uncertainty

Δg_{00} = 1 - 2Għ/(rL_p c^3) ~ 1 - 2L_p/r.
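As a quick numerical check of that substitution, here is a minimal Python sketch. The rounded SI constants and the function names are illustrative assumptions, not part of the comment above. It works with the deviation of g_{00} from 1 rather than g_{00} itself, since the deviation is far below floating-point precision when subtracted from 1.

```python
import math

# Rounded SI values (assumed here for illustration)
G = 6.674e-11     # gravitational constant, m^3 kg^-1 s^-2
HBAR = 1.055e-34  # reduced Planck constant, J s
C = 2.998e8       # speed of light, m/s


def planck_length():
    """L_p = sqrt(G*hbar/c^3), in metres."""
    return math.sqrt(G * HBAR / C**3)


def metric_deviation(r, delta_r):
    """The term 2*G*hbar/(r*delta_r*c^3) subtracted from 1 in g_00."""
    return 2.0 * G * HBAR / (r * delta_r * C**3)


def metric_deviation_compact(r):
    """The same term after setting delta_r = L_p, i.e. 2*L_p/r."""
    return 2.0 * planck_length() / r


if __name__ == "__main__":
    r = 1.0e-15  # a hypothetical probe length in metres, with r >> L_p
    print(planck_length())
    print(metric_deviation(r, planck_length()), metric_deviation_compact(r))
```

Both printed deviations should agree, which is just the statement that Għ/c^3 = L_p^2.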

This is a nice compact result. Now let the radius term be the probe length, which is given by the wavelength of the different radiation r = λ, and we assume λ >> L_p. The approximate metric for radiation of a certain wavelength is then

ds^2 = c^2Δg_{00}dt^2 - dx^2 - dy^2 - dz^2

= c^2(1 - 2L_p/λ)dt^2 - dx^2 - dy^2 - dz^2.

For EM radiation ds = 0 and for radiation propagating along one of the directions we have

dx/dt = c sqrt{1 - 2L_p/λ}.

This predicts then a wavelength dependency on the speed of light

c’ = c sqrt{1 - 2L_p/λ}

So if radiation travels a distance D = c’T the time of travel is T = D/(c sqrt{1 - 2L_p/λ}) and I use the binomial theorem for λ >> L_p

T ~ (D/c)(1 + L_p/λ).

So this is the effect that quantum fluctuations, or really a naïve theory of such fluctuations, should have on radiation. Clearly very short wavelength radiation is slowed down.
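The binomial step can be checked numerically. The sketch below is only an illustration under the same naive model; the function names are made up for this example. It compares the exact fractional delay 1/sqrt(1 - 2x) - 1 with the first-order value x, where x = L_p/λ. It uses log1p and expm1 so the result stays accurate even when x is far smaller than floating-point epsilon.

```python
import math


def fractional_delay_exact(x):
    """(1 - 2x)^(-1/2) - 1, evaluated stably for very small x = L_p/lambda."""
    return math.expm1(-0.5 * math.log1p(-2.0 * x))


def fractional_delay_first_order(x):
    """Leading binomial term: the extra travel time is (D/c) * x."""
    return x


if __name__ == "__main__":
    for x in (1e-3, 1e-6, 2.7e-17):
        exact = fractional_delay_exact(x)
        approx = fractional_delay_first_order(x)
        print(x, exact, exact - approx)
```

The difference between the two shrinks like x^2, so for λ >> L_p the first-order formula is as good as exact.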

The FERMI spacecraft detected gamma rays of 33 GeV and much radiation at the bottom of the bandwidth at about 10^3 eV from a Gamma Ray Burst event, GRB 090510, 7.3 billion light years out. We can use these to estimate the time of arrival for the two forms of radiation. L_p/λ = E_γ/E_p ≈ 33 GeV/1.2×10^{18} GeV = 2.7×10^{-17}. For the softer gamma ray this is E_γ/E_p ≈ 10^{-24}. Input this into our formula for the change in speed of light and we get

T – T’ = (D/c)(2.7×10^{-17} – 10^{-24})

≈ (7×10^{25} m / 3×10^8 m/sec) × 2.7×10^{-17} ≈ 6.3 sec.

The GRB event observed had a time spread of 2 seconds, and the two photons with a 10^6 spread in energy arrived at the detector within 0.8 seconds. Given the error margins and so forth this puts some pretty tight constraints on the role of such fluctuations on physics.
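The arithmetic can be reproduced with a few lines of Python. This is a rough sketch under the same naive model: it ignores cosmological expansion, and the function name and rounded constants are assumptions made for illustration. The Planck energy is passed in as a parameter because the predicted delay scales inversely with it. The estimate above uses 1.2×10^{18} GeV, while the commonly tabulated Planck energy is about 1.22×10^{19} GeV.

```python
LIGHT_YEAR_M = 9.461e15  # metres per light year (rounded)
C = 2.998e8              # speed of light, m/s


def arrival_delay(distance_ly, e_high_gev, e_low_gev, e_planck_gev):
    """Delay T - T' = (D/c) * (E_high - E_low) / E_p, in seconds."""
    travel_time = distance_ly * LIGHT_YEAR_M / C
    return travel_time * (e_high_gev - e_low_gev) / e_planck_gev


if __name__ == "__main__":
    # Inputs as quoted in the comment: 7.3 Gly, 33 GeV versus 10^3 eV
    quoted = arrival_delay(7.3e9, 33.0, 1.0e-6, 1.2e18)
    # Same inputs with the commonly tabulated Planck energy
    tabulated = arrival_delay(7.3e9, 33.0, 1.0e-6, 1.22e19)
    print(quoted, tabulated)
```

With the quoted inputs the delay comes out at several seconds. With the tabulated Planck energy it is about ten times smaller, so the choice of energy scale matters when comparing against the observed arrival window.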

This type of spacetime fluctuation, one that violates Lorentz invariance on a small scale, is basically ruled out. With heterotic string theory E_8 → SU(3)×E_6, which gives a twistor type of theory. Twistor theory does not invoke this sort of energy dependency on light cone structure. Rather the uncertainty is in a null congruency, but where the null rays in the bundle, such as a null plane, all have the same spacetime direction.

Cheers LC

The energy of a photon is E = ħω and the momentum is p = ħk = h/λ. A dispersion relationship is ω = ω(k), which is a linear dispersion if ω = kc. However, if there is a dispersion relationship that is due to spacetime fluctuations there is a dispersion

ω^2 = (kc)^2 +/- Ak^3/M_p, A = constant

which has two solutions for ω, which is a birefringent property of quantum “foamy” spacetime. This splitting is what involves the polarization of EM photons.

It is in a way similar to the reflection of light off the surface of water. Certain crystals such as sapphire have this property. The splitting off of different dispersions induces a polarization splitting of light. These results give strong bounds on the occurrence of this, which tends to rule out a lot of grainy ideas about spacetime.
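To make the two branches concrete, here is a small Python sketch of the cubic dispersion relation. It assumes natural units (c = ħ = 1, with k and M_p measured in the same energy units) and A = 1 by default. These choices, and the function names, are assumptions for illustration rather than anything stated above.

```python
import math


def omega_branches(k, a=1.0, m_p=1.0):
    """Both roots of omega^2 = k^2 +/- a*k^3/m_p (natural units, c = 1)."""
    correction = a * k**3 / m_p
    return math.sqrt(k**2 + correction), math.sqrt(k**2 - correction)


def branch_splitting(k, a=1.0, m_p=1.0):
    """Frequency difference between the two polarization branches."""
    plus, minus = omega_branches(k, a, m_p)
    return plus - minus


if __name__ == "__main__":
    for k in (1e-1, 1e-2, 1e-3):  # wavenumber in units of m_p
        print(k, omega_branches(k), branch_splitting(k))
```

For k << M_p the splitting is close to Ak^2/M_p, so it grows quadratically with photon energy. This is why high-energy polarized gamma rays give the tightest bounds on the coefficient.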

LC

Ah, thanks!

I wasn’t aware that one could tie specifically quantum gravity mass scale to these results, I was relying on the fact that the timing of these traveling photons prohibits any metric fluctuations to be Planck scale regardless of probe size.

As a matter of fact you prompted me to find this recent summary of results of a coauthor of the concurrent Nature letter of Abdo et al. More methods and so more results:

Confidence    M_QG lower limit (Mplanck)
----------    --------------------------
very high     1.19
high          3.42
medium        5.12
low           10.0
low           102

lag analysis:
very high     1.22

“Very high” confidence is 99 %

Thanks for the paper reference. These results push the quantum gravity fluctuation scale into the trans-Planckian domain.

LC

Thank you for this really great article, and thanks to you guys who wrote interesting comments on this post.

That’s a really nice article. I want to say thank you for it, and I want to give thanks to those people who wrote very interesting comments on this post.

In some agreement with what Jennifer Eve has said (I would agree totally if I knew for sure that she is thinking what I think she is), I wonder if the interaction being sought cannot be expected to reveal itself in this space. Also, I conjecture that any action would be immediately countered by a counter action. In addition, the vacuum between here and there is not an unmolested one, since it has been traveled through by a great leveling bulldozer: the expansion of energy and mass.

I have read the posts a couple of times to get the math and degree of freedom remarks correct. The math is not coming through well on my box, but I can fill in the incorrect characters. I am not a superstring researcher or string man. I just wonder if the experiment was looking for ghosts with dark shades on. Hate to see man years of work reduced to crap. It may have some function or use.