
Cosmologists tend not to get all that excited about the universe being 74% dark energy and 26% conventional energy and matter (albeit most of the matter is dark and mysterious as well). Instead they get excited about the fact that the density of dark energy is of the same order of magnitude as that more conventional remainder.

After all, it is quite conceivable that the density of dark energy might be ten, one hundred or even one thousand times more (or less) than the remainder. But nope, it seems it’s about three times as much – which is less than ten and more than one, meaning that the two parts are of the same order of magnitude. And given the various uncertainties and error bars involved, you might even say the density of dark energy and of the more conventional remainder are roughly equivalent. This is what is known as the cosmic coincidence.
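The "same order of magnitude" claim is simple arithmetic, and it is also worth seeing why it only holds for a while. Below is a minimal Python sketch. It assumes a flat universe and dark energy that behaves as a cosmological constant (fixed density). It treats the whole 26% remainder as matter that dilutes as the cube of the scale factor a, with a = 1 today, since radiation is negligible now. The names are illustrative and do not come from Lineweaver and Egan.

```python
import math

# Present-day fractions from the article's 74% / 26% split (assumed flat universe).
OMEGA_DE = 0.74    # dark energy, treated as a cosmological constant
OMEGA_REST = 0.26  # everything else, treated as matter diluting as a**-3


def de_to_rest_ratio(a: float) -> float:
    """Dark-energy density divided by the remainder at scale factor a (a = 1 today)."""
    # The dark-energy density stays fixed while the matter density falls as a**-3,
    # so the ratio grows as a**3.
    return (OMEGA_DE / OMEGA_REST) * a**3


today = de_to_rest_ratio(1.0)
print(f"ratio today: {today:.2f} (log10 = {math.log10(today):.2f})")

# Scale factors between which the two densities stay within a factor of 10 of each other.
a_low = (0.1 * OMEGA_REST / OMEGA_DE) ** (1 / 3)
a_high = (10 * OMEGA_REST / OMEGA_DE) ** (1 / 3)
print(f"same order of magnitude for {a_low:.2f} < a < {a_high:.2f}")
```

Run as-is, it should report a ratio of about 2.85 today (log10 ≈ 0.45). It also gives a window of roughly 0.33 < a < 1.52 in which the two densities stay within a factor of ten. That window runs from when the universe was about a third of its present linear size until it has grown by about half again.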

To a cosmologist, particularly a philosophically-inclined cosmologist, this coincidence is intriguing and raises all sorts of ideas about why it is so. However, Lineweaver and Egan suggest this is actually the natural experience of any intelligent beings/observers across the universe, since their evolution will always roughly align with the point in time at which the cosmic coincidence is achieved.

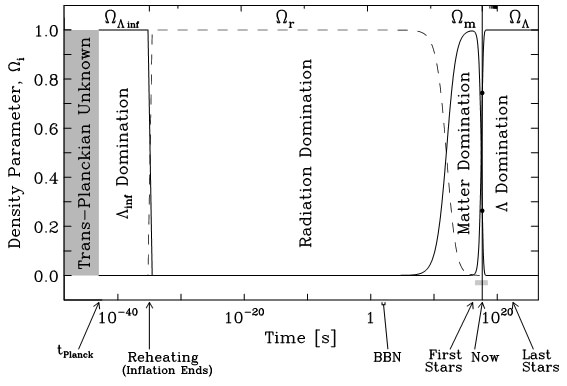

A current view of the universe describes its development through the following steps:

• Inflationary era – a huge whoomp of volume growth driven by something or other. This is a very quick era lasting from 10⁻³⁵ to 10⁻³² of the first second after the Big Bang.

• Radiation dominated era – the universe continues expanding, but at a less furious rate. Its contents cool as their density declines. Hadrons begin to condense out of the hot quark-gluon soup while dark matter forms out of whatever it forms out of – all steadily adding matter to the universe, although radiation still dominates. This era lasts for maybe 50,000 years.

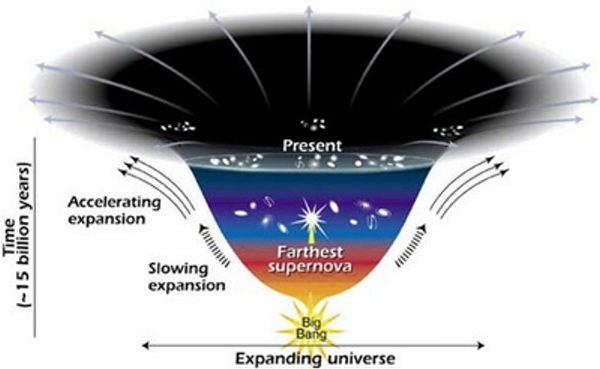

• Matter dominated era – this era begins when the density of matter exceeds the density of radiation and continues through to the release of the cosmic microwave background radiation at 380,000 years, when the first atoms formed – and then continues on for a further 5 billion years. Throughout this era, the energy/matter density of the whole universe continues to gravitationally restrain the rate of expansion of the universe, even though expansion does continue.

• Cosmological constant dominated era – from 5 billion years to now (13.7 billion) and presumably for all of hereafter, the energy/matter density of the universe is so diluted that it begins losing its capacity to restrain the expansion of the universe – which hence accelerates. Empty voids of space grow ever larger between local clusters of gravitationally-concentrated matter.
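The succession of eras above falls out of how quickly each component thins out as space expands. For an equation of state w, density scales as a^(-3(1+w)). So radiation (w = 1/3) falls as a⁻⁴, matter (w = 0) as a⁻³, and a cosmological constant (w = -1) not at all.

The rough sketch below rests on several assumptions:
- a flat universe with the article's 74/26 split;
- an assumed radiation fraction of 8.4×10⁻⁵ for photons plus neutrinos, a commonly quoted order of magnitude rather than a figure from the article.

The inflationary era is not modelled. Converting the crossover redshifts to cosmic time would need an integration of the Friedmann equation that is not attempted here.

```python
# Assumed density parameters today (a = 1) for a flat universe. OMEGA_M and OMEGA_L
# follow the article's 26% / 74% split; OMEGA_R (photons plus neutrinos) is an
# illustrative value, not a figure from the article.
OMEGA_R = 8.4e-5
OMEGA_M = 0.26
OMEGA_L = 0.74

# (density parameter today, equation-of-state parameter w) for each component.
COMPONENTS = {
    "radiation": (OMEGA_R, 1 / 3),
    "matter": (OMEGA_M, 0.0),
    "dark energy": (OMEGA_L, -1.0),
}


def density(name: str, a: float) -> float:
    """Density (in units of today's critical density) at scale factor a."""
    omega, w = COMPONENTS[name]
    return omega * a ** (-3 * (1 + w))


def dominant(a: float) -> str:
    """Name of the component with the largest density at scale factor a."""
    return max(COMPONENTS, key=lambda name: density(name, a))


# Closed-form crossovers: radiation = matter where a = OMEGA_R / OMEGA_M,
# matter = dark energy where a = (OMEGA_M / OMEGA_L) ** (1 / 3).
a_rm = OMEGA_R / OMEGA_M
a_ml = (OMEGA_M / OMEGA_L) ** (1 / 3)
print(f"radiation -> matter:   a = {a_rm:.2e}, z = {1 / a_rm - 1:.0f}")
print(f"matter -> dark energy: a = {a_ml:.2f}, z = {1 / a_ml - 1:.2f}")

for a in (1e-5, 1e-2, 1.0):
    print(f"a = {a:g}: {dominant(a)} dominates")
```

With these inputs it puts the radiation-to-matter handover at z ≈ 3100 and the matter-to-dark-energy handover at z ≈ 0.42 (a ≈ 0.71).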

And here we are. Lineweaver and Egan propose that it is unlikely that any intelligent life could have evolved in the universe much earlier than now (give or take a couple of billion years) since you need to progressively cycle through the star formation and destruction of Population III, II and then I stars to fill the universe with sufficient ‘metals’ to allow planets with evolutionary ecosystems to develop.

So any intelligent observer in this universe is likely to find the same data that underlie the phenomenon we call the cosmic coincidence. Whether any aliens would call their finding a ‘coincidence’ may depend upon what mathematical model they have developed to describe the cosmos. It’s unlikely to be the same one we are currently running with – full of baffling ‘dark’ components, notably a mysterious energy that behaves nothing like energy.

It might be enough for them to note that their observations have been taken at a time when the universe’s contents no longer have sufficient density to restrain the universe’s inherent tendency to expand – and so it expands at a steadily increasing rate.
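The idea of contents "no longer" dense enough to restrain expansion can be made quantitative with the deceleration parameter q. It is positive while expansion slows and negative once it speeds up. The sketch below assumes a flat universe of matter plus a cosmological constant, with radiation ignored and the article's 74/26 split. In that case q = (ρ_m/2 - ρ_Λ)/(ρ_m + ρ_Λ).

```python
OMEGA_M = 0.26  # matter today (assumed, from the article's 26%)
OMEGA_L = 0.74  # cosmological constant (assumed, from the article's 74%)


def deceleration(a: float) -> float:
    """Deceleration parameter q at scale factor a (a = 1 today), radiation ignored.

    q > 0: expansion slowing down; q < 0: expansion speeding up.
    """
    rho_m = OMEGA_M * a**-3  # matter dilutes as a**-3; OMEGA_L stays fixed
    return (rho_m / 2 - OMEGA_L) / (rho_m + OMEGA_L)


# Acceleration begins where q = 0, i.e. where OMEGA_M * a**-3 = 2 * OMEGA_L.
a_acc = (OMEGA_M / (2 * OMEGA_L)) ** (1 / 3)
print(f"q today: {deceleration(1.0):.2f}")
print(f"acceleration began at a = {a_acc:.2f} (z = {1 / a_acc - 1:.2f})")
```

This gives q ≈ -0.61 today. It puts the switch from deceleration to acceleration at a ≈ 0.56 (z ≈ 0.79), when the matter density had fallen to twice the dark-energy density.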

Further reading: Lineweaver and Egan. The Cosmic Coincidence as a Temporal Selection Effect Produced by the Age Distribution of Terrestrial Planets in the Universe (subsequently published in Astrophysical Journal 2007, Vol 671, 853.)

Yo Steve, at the third paragraph, in the second line, there should be a comma after “However” when you mean “nevertheless” or “in spite of that”; otherwise, without the comma, it means “in whatever manner” or “regardless of how”.

Was more intending the contrary usage – like “But,…”

Nevertheless, in whatever manner, I agree the comma is appropriate – so thanks, regardless of how and in spite of that.

Since we’re doing corrections:

“• Inflationary era – a huge whoomp of volume growth driven by something or other. This is a very quick era lasting from 10⁻³⁵ to 10⁻³² of the first second after the Big Bang.”

I believe the above sentence has no units on the small numbers. I understand perfectly what’s being said, but when it’s written like this it’s just “number to number of the first second”.

Wikipedia (a handy lexicon for all things) uses the wording: “Between 10⁻³⁵ seconds and 10⁻³² seconds after the Big Bang”. Not sure if this is better or worse, but it is wordier.

The ASCII text needs to be formed as 10^-35 and 10^-32, or as 1e-35 and 1e-32, correct?

As you say, Alaksandu, some folks might actually read these figures as ‘between 10 to 35 or 10 to 32 seconds’ for that duration of time. The caret (^) or lowercase e indicates an exponent, of course.
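One subtlety with the e form is that the number in front of the e is a mantissa that multiplies the power of ten. So the era boundaries are 1e-35 and 1e-32, while 10e-35 would mean 10 × 10^-35 = 10^-34. Here is a quick check in Python, whose float syntax follows the same E-notation convention as most programming languages:

```python
from decimal import Decimal

# In E-notation the number before the "e" is a mantissa multiplying a power of ten,
# so "10e-35" is 10 x 10^-35 = 10^-34, one order of magnitude off.
print(float("10e-35") == float("1e-34"))      # True
print(float("10e-35") == float("1e-35"))      # False

# Exact decimal comparison shows the same thing without any floating-point rounding.
print(Decimal("10e-35") == Decimal("1e-34"))  # True

# Formatting with a one-digit mantissa gives the intended era boundaries.
print(f"{1e-35:.0e} to {1e-32:.0e} s")        # 1e-35 to 1e-32 s
```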

A few references on notation follow, along with some resources for those wishing to use the extended 256-character ASCII set or UTF-8 Unicode.

http://en.wikipedia.org/wiki/Scientific_notation

http://en.wikipedia.org/wiki/Mathematical_notation

http://en.wikipedia.org/wiki/Abuse_of_notation

http://www.asciitable.com/

http://www.theasciicode.com.ar/

http://www.jimprice.com/jim-asc.shtml

Adding to all the confusion, we could just place a negative sign and a radix point and type out all those zeros, all 35 and 32 of them, each time, grin.
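For the record, here is what typing out all those zeros looks like. The sketch uses Python's decimal module so nothing gets rounded. Counting the leading zero before the radix point, 10^-35 has 35 zeros and 10^-32 has 32. Being positive, neither value needs a minus sign.

```python
from decimal import Decimal

# Write each boundary of the inflationary era as a plain decimal, with no exponent.
for exponent in (-35, -32):
    value = Decimal(1).scaleb(exponent)  # exactly 10**exponent, no rounding
    digits = f"{value:f}"                # fixed-point notation
    print(f"10^{exponent} = {digits} ({digits.count('0')} zeros)")
```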

The standard for ASCII-text-only workarounds is an old, reliable friend to most of us from the early days of individual computing, i.e., hitting bang paths out on the old PDPs to send routed emails just the way we wanted them routed.

Mary

We were debating the original article text which has -32 in superscript, but thanks for the ASCII translation.

Of course, the anthropic principle's predictions are strongest in the area of the anthropic environment.

But it is also most predictive when applied to multiverses:

“1. Prediction: Most of the entropy production in the causal diamond should take place around the time t_burn ~ Λ^{-1/2}; more precisely, the prediction is t_burn ≈ t_edge ≈ .23 t_Λ, which is about 3.5 Gyr for our value of the cosmological constant, or 10^{60.3} in Planck units.” [Bousso et al, arxiv 1001.1155v2]
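The two forms of the prediction in the quote can be cross-checked by converting Planck units to years. This small sketch assumes a Planck time of 5.391 × 10^-44 s and a Julian year of 365.25 days. The 0.23 factor is taken from the quote.

```python
PLANCK_TIME_S = 5.391e-44     # Planck time in seconds (rounded, assumed)
SECONDS_PER_GYR = 3.15576e16  # one billion Julian years (365.25 days each)

t_burn_planck = 10 ** 60.3    # the quoted prediction, in Planck times
t_burn_gyr = t_burn_planck * PLANCK_TIME_S / SECONDS_PER_GYR
print(f"t_burn ≈ {t_burn_gyr:.1f} Gyr")

# The quote also gives t_burn ≈ 0.23 t_Λ, so the implied t_Λ is:
t_lambda_gyr = t_burn_gyr / 0.23
print(f"t_Λ ≈ {t_lambda_gyr:.1f} Gyr")
```

This gives t_burn ≈ 3.4 Gyr, in line with the quoted "about 3.5 Gyr", and an implied t_Λ of roughly 15 Gyr.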

In other words, maximizing entropy (an environmental variant of the anthropic principle) means maximizing dust, which means maximizing stars and planets, which in turn means maximizing observers.

So we miss a large bit of the power in these predictive theories if we constrain them to our own universe only.

Much of this entropy is generated through the formation and growth of black holes. It is also the time of galaxy formation and coalescence. These tend to correspond to lots of star formation.

LC

Self-refuting text is self-refuting.

The reason dark energy is considered an energy is that it behaves like energy in the general relativity of standard cosmology.

Beyond that, it appears to behave like a cosmological constant, and the current best hypothesis is the natural one: that it is vacuum energy.

I would say dark energy behaves like energy twice over right now, which makes the text even more baffling. =D

I was thinking of mathematical models with respect to the GR field equations – e.g. the FLRW metric. It’s hard to believe that everyone would independently arrive at exactly this same model – although I imagine the general relationship between mass/energy density and curved space-time might be universally understood.

For example, if aliens had figured out the nature of dark matter before we did – that might change their cosmological model significantly.

Dark energy doesn’t fit with the 3 laws of thermodynamics (e.g. it appears out of nowhere and works at 100% efficiency). I agree that there’s something going on there, I’m just not convinced it’s energy (but happy to be proven wrong).

Isn’t vacuum energy as the cosmological constant “the worst theoretical prediction in the history of physics”?

http://en.wikipedia.org/wiki/Cosmological_constant

The problem of apparent violations of the first and second laws of thermodynamics can be looked at this way. The de Sitter configuration with w = -1 has a negative pressure, so that ρ + p/c² = 0. From a first law of thermodynamics perspective we then have

dQ = dW + dU,

where ?dW = ?V and the gas law substitutes for the internal energy dU = TdS and we use the adiabatic condition dQ = 0. This argument with the gas law invokes an effective temperature defined by the quantum noise with

Ut/? = E/kT.

The pV = nkT gives

nk?T = sum_k??(k)/2?T,

This recovers the ρ + p/c² = 0 result, which is the w = -1 equation of state. The observational data support this result. This argument equates the quantum noise of the vacuum with a thermodynamically equivalent entropy. There is still the question of where the mass-energy of the remaining 28% of the universe comes from. A Tolman type of argument might be made from this point.
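The classical part of this bookkeeping can be checked numerically. With dQ = 0, the first law reduces to dE = -p dV for the energy E = ρV in a comoving volume. The sketch works in units with c = 1 and assumes a fluid with constant w whose density scales as a^(-3(1+w)). It checks only this classical relation, not the quantum-noise temperature argument above.

```python
# Units with c = 1; the comoving volume is V = a**3, with scale factor a = 1 today.
RHO0 = 1.0  # density today, arbitrary units (assumed)


def rho(a: float, w: float) -> float:
    """Density of a fluid with constant equation of state w, scaling as a**(-3(1 + w))."""
    return RHO0 * a ** (-3 * (1 + w))


def first_law_residual(a: float, w: float, da: float = 1e-6) -> float:
    """Central-difference estimate of dE + p dV; zero means adiabatic (dQ = 0)."""

    def energy(x: float) -> float:
        return rho(x, w) * x**3  # energy in a comoving volume

    d_energy = energy(a + da) - energy(a - da)
    d_volume = (a + da) ** 3 - (a - da) ** 3
    pressure = w * rho(a, w)  # p = w * rho (c = 1)
    return d_energy + pressure * d_volume


for w in (1 / 3, 0.0, -1.0):
    growth = rho(2.0, w) / rho(1.0, w)
    print(f"w = {w:+.2f}: rho(a=2)/rho(a=1) = {growth:.4f}, "
          f"dE + p dV = {first_law_residual(1.0, w):.1e}")
```

For w = -1 the density ratio comes out as exactly 1, and the residual stays at zero (to rounding) in all three cases. The energy in a comoving volume grows as a³, and because p is negative, that growth is exactly matched by the work term -p dV.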

The worst theoretical prediction involves the argument that quantum Planck-scale fluctuations have a curvature R ~ 1/L_p^2 ≈ 10^{65}cm^{-2}. This is a huge curvature. The cosmological constant we actually observe is 10^{-56}cm^{-2} (or something on that order); this is then some 120 orders of magnitude off. This is of course enormous. If one were to scale up the vacuum energy to a cut-off value of E_planck with ρ ≃ ∫E dE, where the upper bound on the integral is E_planck, you get these funny results. This is a bit of a naïve calculation in some ways.
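The size of the mismatch is a one-line calculation. This sketch assumes L_p ≈ 1.616 × 10^-33 cm and an observed Λ ≈ 1.1 × 10^-56 cm^-2 (about 1.1 × 10^-52 m^-2). Both are rounded inputs, not values from the comment.

```python
import math

PLANCK_LENGTH_CM = 1.616e-33  # Planck length in cm (rounded, assumed)
LAMBDA_OBS_CM2 = 1.1e-56      # observed cosmological constant in cm^-2 (assumed)

planck_curvature = 1 / PLANCK_LENGTH_CM**2  # R ~ 1/L_p^2
orders = math.log10(planck_curvature / LAMBDA_OBS_CM2)
print(f"1/L_p^2 ≈ {planck_curvature:.1e} cm^-2")
print(f"mismatch ≈ 10^{orders:.1f}")
```

It gives 1/L_p² ≈ 3.8 × 10^65 cm^-2 and a ratio of about 10^121.5, the familiar "120 orders of magnitude".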

The inability to detect dispersion from distant photon sources such as galaxies and quasars indicates that there are no spacetime fluctuations that do this. In fact there are no fluctuations down to the scale of 10^{-48}cm. This is far lower than the Planck length. Quantum gravity is then fundamentally different from what most of the physics community has thought. I think that these fluctuations are inherent in event horizons, and correspond to qubit entanglements on these horizons.

LC

Careful. That last claim, on the scale of fluctuations, is only valid if supersymmetry is valid.

The boundary physics, such as with event horizons, are supersymmetries. The reasons for this are difficult to get into in detail. Supersymmetry almost must exist. The question is the energy scale at which it occurs. Supersymmetry by itself tells us nothing about this. Early work on supersymmetry involved light super-pairs, which failed to materialize. So far the LHC is silent on the issue as well. We might just be faced with the ugly prospect that it does not materialize until sqrt{s} > 10^3TeV or something. This means we may only detect supersymmetry if we work hard on doing particle physics with cosmic rays.
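To put √s > 10^3 TeV in cosmic-ray terms, consider a proton striking a proton at rest. Then s = 2m_p² + 2m_pE (with c = 1), so the required beam energy grows with the square of √s. This sketch assumes a proton rest energy of 938.272 MeV and includes the LHC's 14 TeV design energy for comparison.

```python
PROTON_MASS_EV = 938.272e6  # proton rest energy in eV (assumed)


def fixed_target_energy(sqrt_s_ev: float, target_mass_ev: float = PROTON_MASS_EV) -> float:
    """Total energy (eV) a proton needs, striking a proton at rest, to reach a
    centre-of-mass energy sqrt_s_ev. Uses s = 2 m^2 + 2 m E with c = 1."""
    s = sqrt_s_ev**2
    return (s - 2 * target_mass_ev**2) / (2 * target_mass_ev)


for sqrt_s_tev in (14, 1e3):
    energy = fixed_target_energy(sqrt_s_tev * 1e12)
    print(f"sqrt(s) = {sqrt_s_tev:g} TeV -> cosmic-ray proton energy ≈ {energy:.1e} eV")
```

It gives about 10^17 eV for 14 TeV and about 5 × 10^20 eV for 10^3 TeV. The most energetic cosmic rays on record are roughly of order 10^20 eV, so the higher figure sits at the very edge of what nature supplies.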

I have thought we might do particle physics by looking at cosmic ray tracks in ice layers on jovian moons. Then of course that is not easy. It would require some imaging techniques of a sophisticated nature.

LC

I think we agree that cultural effects would play (and in our case have played; see the WWII work on nuclear technology) into the order of different predictions.

But when we get to a self-consistent cosmology, standard cosmology is the first one. It is a bottleneck that every civilization would pass through. (Possibly “jumping” the bottleneck if they get more pertinent observations tied to it at once, say from particle physics.)

As for dark energy (DE) we have had this discussion before. DE doesn’t break thermodynamics, or it wouldn’t be part of standard cosmology or even general relativity.

One way of looking at it that we did last time is that DE is part of spacetime expansion, and if spacetime expansion is acceptable physics, DE is as well. And spacetime expansion plays nice with thermodynamics in standard cosmology, since energy of local flat spacetime is zero.

You can look at DE as the effect that makes up for a deficit so spacetime will *not* break thermodynamics, in the naive model. (It is more complicated than that, of course.)

The last part has nothing to do with conceptual problems of DE in standard cosmology but with the general problem of finetuning. And anthropic models show that finetuning is not an unsolvable problem in cosmology. (Instead, finetuning would be expected if you had multiverses and observers!)

But FWIW I read somewhere a physicist claim that if you sum up the finetuning in the Standard Model of particles, it is in the neighborhood of 10^-100 too. Unfortunately I have never been able to find the source to that.

Agree we have discussed previously – and agree that dark energy and accelerating spacetime expansion are kind of the same thing. My broken record point being why can’t we just call it accelerating spacetime expansion then.

Raphael Bousso wrote a paper last year where he used so-called “large numbers” to argue for why this is the case. We do appear to live in a “sweet spot” in the evolution of the universe. We are close enough to the origin to detect it, at least as far back as the CMB (and I think we can detect further back), and yet at the same time at a point where the exponential expansion of the universe into a de Sitter vacuum is apparent.

This has similarities to what Plato called the initial and final cause. In the case of cosmology these are different vacuum configurations. The initial one is what led to inflationary expansion in the first 10^{-35} to 10^{-30} seconds. The final one is the decay of the de Sitter vacuum by quantum tunneling (similar to black hole decay) as time goes to infinity. It is interesting that we do exist at a time where we can potentially get data on the initial vacuum state, and our observations can lead to an inference on the final vacuum state.

LC

Yo Lawrence, is this Raphael Bousso’s paper, “A geometric solution to the coincidence problem, and the size of the landscape as the origin of hierarchy”, that you’re referring to?

This is the paper. It is hard to know what this is really telling us. The whole thing could be coincidental. Stars will be around for about a trillion years, so I could imagine biology on planets then and maybe intelligent life. The universe they will see will be markedly different – colder and darker.

LC

There are two papers there; I presume it’s the first one that we are talking about?

To be honest I have seen this second paper as well. Bousso has a sort of doomsday argument as well.

LC

This is Bousso’s later work where the causal patch measure replaces the causal diamond measure. I am not familiar with it, but they write:

“Less general derivations have been proposed for small subsets of the landscape (vacua that differ from ours only in a few parameters) [13, 14], or under the assumption that observers arise in proportion to the entropy produced [15, 16]. A direct antecedent [16] of our arguments employed the causal diamond, a measure that is somewhat less well-defined than the causal patch, and which suppresses the subtle role played by curvature in the present analysis.”

Ref 16 is the paper I linked to earlier.

AFAIU, in the paper they claim that the causal patch surpasses the causal diamond in that it incorporates the effects of cosmological curvature. Hence they see curvature as a more important factor.

If the universe closes in on itself rapidly or otherwise causes problems, we would have no late observers. And that is what they predicted in the old paper: observers would live in universes with little curvature.

Otherwise I don’t see much difference, observers are proportional to matter here too, which reverts back to selection over entropy (dust, aka stars and planets).

It would be difficult to imagine a scenario in which the intelligent observer did not find themselves living in an era capable of supporting their existence.

Has the Copernican principle been replaced by the ‘Protagoras principle’, as if it would take a man* to measure all things? And let’s not get into the Copenhagen interpretation of quantum physics here, as it is different. Anthropic landscape? Is this more metaphysics than science, as these hypotheses cannot ever be falsified?

* NOTE: Alien = man, even if ‘aliens’ could very well be something other than just carbon-based lifeforms living close to STP conditions.

UNIQUE PARAMETERS

— James Ph. Kotsybar

There is only one answer to creation.

Though we don’t nearly understand it yet,

there’s but one elegant variation

emerging from initial values set

that even allows molecules to be,

much less achieve complexity of life,

or suns to burn their planets distantly

with not too much but with the needed strife.

It’s easy to view things anthropically —

nothing explained, just looked at in reverse —

or declare we see things myopically —

anomalies blind to our multiverse.

Whether we’re destined or a doubtful freak,

as far as we know so far, we’re unique.