
The recent news of neutrinos moving faster than light might have got everyone thinking about warp drive and all that, but really there is no need to imagine something that can move faster than 300,000 kilometres a second.

Light speed, or 300,000 kilometres a second, might seem like a speed limit, but this is just an example of 3 + 1 thinking – where we still haven’t got our heads around the concept of four dimensional space-time and hence we think in terms of space having three dimensions and think of time as something different.

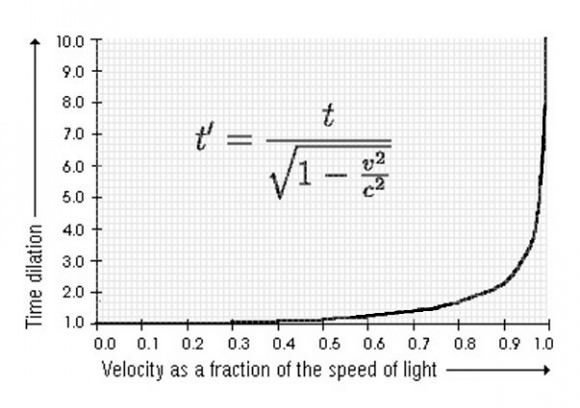

For example, while it seems to us that it takes a light beam 4.3 years to go from Earth to the Alpha Centauri system, if you were to hop on a spacecraft going at 99.999 per cent of the speed of light you would get there in a matter of days, hours or even minutes – depending on just how many .99s you add on to that proportion of light speed.

This is because, as you keep pumping the accelerator of your imaginary star drive system, time dilation will become increasingly more pronounced and you will keep getting to your destination that much quicker. With enough .999s you could cross the universe within your lifetime – even though someone you left behind would still only see you moving away at a tiny bit less than 300,000 kilometres a second. So, what might seem like a speed limit at first glance isn’t really a limit at all.
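To put rough numbers on this, here is a minimal sketch, assuming a trip at constant speed (no speeding up or slowing down) over the 4.3 light years mentioned above. The function names and the `eps` parameter (the shortfall 1 - v/c) are invented for the illustration; working with the shortfall rather than v/c itself avoids rounding trouble when many 9s are added.

```python
import math

def lorentz_factor_from_shortfall(eps):
    """Lorentz factor for a speed v = (1 - eps) * c.

    eps is a hypothetical parameter for this sketch: the fraction by which
    the speed falls short of light speed.
    """
    if not 0.0 < eps < 1.0:
        raise ValueError("eps must be strictly between 0 and 1")
    # 1 - (v/c)^2 = 1 - (1 - eps)^2 = eps * (2 - eps)
    return 1.0 / math.sqrt(eps * (2.0 - eps))

def traveller_time_years(distance_ly, eps):
    """Years elapsed on board for a constant-speed trip of distance_ly light years."""
    earth_time = distance_ly / (1.0 - eps)  # trip duration in the Earth frame, years
    return earth_time / lorentz_factor_from_shortfall(eps)

# Each step adds three more 9s to the fraction of light speed.
for eps in (1e-5, 1e-8, 1e-11):
    days = traveller_time_years(4.3, eps) * 365.25
    print(f"v = (1 - {eps:g}) c: about {days:.3g} days on board")
```

Under these assumptions the on-board time drops from about a week to a few hours to a few minutes, while the Earth-frame duration stays a little over 4.3 years.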

To try and comprehend the four dimensional perspective on this, consider that it’s impossible to move across any distance without also moving through time. For example, walking a kilometre might take thirty minutes – but if you run, it might only take fifteen minutes.

Speed is just a measure of how long it takes you to reach a distant point. Relativity physics lets you pick any destination you like in the universe – and with the right technology you can reduce your travel time to that destination to any extent you like – as long as your travel time stays above zero.

That is the only limit the universe really imposes on us – and it’s as much about logic and causality as it is about physics. You can travel through space-time in various ways to reduce your travel time between points A and B – and you can do this up until you almost move between those points instantaneously. But you can’t do it faster than instantaneously because you would arrive at B before you had even left A.

If you could do that, it would create impossible causality problems – for example you might decide not to depart from point A, even though you’d already reached point B. The idea is both illogical and a breach of the laws of thermodynamics, since the universe would suddenly contain two of you.

So, you can’t move faster than light – not because of anything special about light, but because you can’t move faster than instantaneously between distant points. Light essentially does move instantaneously, as does gravity and perhaps other phenomena that we are yet to discover – but we will never discover anything that moves faster than instantaneously, as the idea makes no sense.

We mass-laden beings experience duration when moving between distant points – and so we are able to also measure how long it takes an instantaneous signal to move between distant points, even though we could never hope to attain such a state of motion ourselves.

We are stuck on the idea that 300,000 kilometres a second is a speed limit, because we intuitively believe that time runs at a constant universal rate. However, we have proven in many different experimental tests that time clearly does not run at a constant rate between different frames of reference. So with the right technology, you can sit in your star-drive spacecraft and make a quick cup of tea while eons pass by outside. It’s not about speed, it’s about reducing your personal travel time between two distant points.
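As a rough illustration of how far personal travel time can shrink, here is a sketch that assumes a hypothetical star drive holding a steady 1 g of acceleration from a standing start, with no slowing down at the far end. The function name and the choice of 1 g are assumptions for the example; the formula used is the standard special-relativity result for constant proper acceleration, tau = (c/a) arcosh(1 + a d / c^2).

```python
import math

C = 299_792_458.0         # speed of light, m/s
G = 9.80665               # standard gravity, m/s^2 (assumed drive acceleration)
YEAR = 365.25 * 86_400.0  # Julian year, s
LIGHT_YEAR = C * YEAR     # metres

def onboard_years(distance_ly, accel=G):
    """On-board years to cover distance_ly from rest at constant proper acceleration.

    Flyby only: the ship is still accelerating when it passes the destination.
    """
    d = distance_ly * LIGHT_YEAR
    return (C / accel) * math.acosh(1.0 + accel * d / C**2) / YEAR

# Alpha Centauri, roughly the width of the galaxy, and a cosmological distance.
for d in (4.3, 1e5, 1e10):
    print(f"{d:g} light years: about {onboard_years(d):.1f} years on board")
```

In this idealised case the on-board time grows only logarithmically with distance, which is why cosmological distances come out at a few decades of ship time. The energy needed to keep the drive running is ignored entirely.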

As Woody Allen once said: Time is nature’s way of keeping everything from happening at once. Space-time is nature’s way of keeping everything from happening in the same place at once.

Funny, i was explaining this to my girlfriend just the other day. Exactly this.

This is rather simple in principle. Particles have an intrinsic invariant mass. For particles traveling at light speed it is zero; say, photons.*

Added to that relativity tells us energy has mass and vice versa. Since photons have an energy decided by their frequency, they have relativistic (extrinsic, “variant”) mass. That is the mass that gives photons their momentum.

————

The devil in the details is in asking where invariant mass comes from. In atoms, much of it comes from quark binding energies. (See above why this is mass.)

The remainder comes mainly, in the standard model of particles, from the Higgs particle, the one the LHC at CERN is trying to hunt down.

But everything that has energy contributes. Whether you call it extrinsic or intrinsic depends on the scale and the boundaries of your system.

Or think of it in terms of momentum – give a particle more energy and its momentum increases, whether or not it has rest mass.

Particles with a rest mass develop more momentum as their velocity increases (p=mv), while photons develop more momentum as their frequency increases (E=hν) – i.e. a gamma ray photon packs a lot more punch than a radio photon, even though they both move at SOL.

Protons in the LHC are nearly travelling at SOL, but you can still substantially increase their momentum energy (which is presumably a product of increasing relativistic mass, since v hardly changes).
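A small sketch of both points, assuming illustrative frequencies for the two photons and beam energies of 3.5 and 7 TeV for the protons (none of these figures come from the comment above). It uses p = hf/c for photons and E^2 = (pc)^2 + (mc^2)^2 for protons; the function names are made up for the example.

```python
import math

H = 6.62607015e-34          # Planck constant, J s
C = 299_792_458.0           # speed of light, m/s
PROTON_REST_GEV = 0.938272  # proton rest energy, GeV

def photon_momentum(frequency_hz):
    """Photon momentum in kg m/s: p = E / c = h f / c."""
    return H * frequency_hz / C

def proton_state(total_energy_gev):
    """Return (gamma, 1 - v/c, p*c in GeV) for a proton with the given total energy."""
    ratio = PROTON_REST_GEV / total_energy_gev  # equals 1 / gamma
    root = math.sqrt(1.0 - ratio**2)            # equals v / c
    shortfall = ratio**2 / (1.0 + root)         # 1 - v/c, computed without cancellation
    pc = total_energy_gev * root                # from E^2 = (pc)^2 + (m c^2)^2
    return 1.0 / ratio, shortfall, pc

# Radio photon (~100 MHz) versus gamma ray photon (~1e20 Hz): same speed, very different punch.
print(photon_momentum(1e20) / photon_momentum(1e8))

# Doubling the beam energy doubles the momentum while v/c barely moves.
for energy in (3500.0, 7000.0):
    gamma, shortfall, pc = proton_state(energy)
    print(f"E = {energy:g} GeV: gamma = {gamma:.0f}, 1 - v/c = {shortfall:.2e}, pc = {pc:.3f} GeV")
```

The speed shortfall changes only in the eighth decimal place, so almost all of the extra energy shows up as momentum rather than velocity.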

I never looked at light that way before. Now the speed of light and time somehow strangely make more sense to me. Light being “instantaneous” (from its perspective in space time) is very interesting. Thanks for this insight!

Wow. That is a hell of a good explanation. I shall remember it!

Let me grouch/nitpick: It is a good perspective since it is relativistic. However, it doesn’t remove the very tangible speed limit.

For example, it makes it expensive to travel and impossible to have extended markets of massed goods. (You can still have a, slow, interstellar information market.)

For a good novel about this very concept, check out Poul Anderson’s “Tau Zero”. Essentially it’s the movie “Speed” in space. Engines break and the only thing that can be done is continue to accelerate. Great article, thanks!

The old causality argument is getting hackneyed and tiresome, and it’s incorrect.

What we are talking about is the propagation delay of a signal. Relativity and our current understanding of the universe make the speed of light a maximum. The faster your reference frame is traveling, the less time passes for you, but the speed of light always stays the same and can be experimentally verified whatever velocity that reference frame is traveling at.

However, if you were to posit a superluminal signal, this would simply reduce the real propagation delay between two points to less than that of light traveling between the points. You could reduce this propagation time to near zero, but never to less than zero. So the fact that we haven’t found a superluminal signal yet doesn’t mean we never will on the grounds that it might somehow violate causality. A signal can’t propagate from an event that hasn’t yet happened, but could hypothetically propagate at any velocity (however unlikely).

That neutrinos could exceed c may be possible, but I would bet on subtle experimental error or a weird quantum timing issue.

I think you are just saying there might be new physics to be discovered – yes, this is likely.

Causality is key to relativity physics, although there are other physics paradigms which seek to step around it. For e.g., we already have quantum entanglement which allows a faster than SOL signal. But, whether or not it’s a valid interpretation of reality, it’s not relativity physics.

My understanding was that no information actually passed between entangled particles, and thus no FTL “signal” was happening. Is this incorrect?

(I still can’t understand explanations of WTF is happening with entangled particles (or even why it happens at all), so I could be totally off base.)

Causality is a relativistic phenomenon. So unless we push the light speed limit up for all objects, a phenomenon where signals go faster can indeed violate causality.

Moreover, it would mean in the simplest model that physics would be different in different frames.

If you are thinking of violating the order of phenomena, it is “violated” by relativity, that is the very point of it. Different frames will appear to order phenomena differently, to keep physics the same.
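The frame dependence can be checked with the Lorentz transformation itself. The sketch below works in units where c = 1 and compares a signal at 0.9c with a hypothetical one at 6c, both viewed from a frame moving at 0.5c; the speeds and function names are assumptions chosen for illustration.

```python
import math

def boosted_time_gap(dt, dx, v):
    """Time separation of two events as seen from a frame moving at velocity v (c = 1).

    Lorentz transformation: dt' = gamma * (dt - v * dx).
    """
    if not -1.0 < v < 1.0:
        raise ValueError("frame velocity must satisfy |v| < c")
    gamma = 1.0 / math.sqrt(1.0 - v * v)
    return gamma * (dt - v * dx)

def arrival_gap(signal_speed, v, dt=1.0):
    """Emission-to-arrival time gap in the moving frame for a signal of the given speed."""
    return boosted_time_gap(dt, signal_speed * dt, v)

print(arrival_gap(0.9, 0.5))  # positive: arrival follows emission in both frames
print(arrival_gap(6.0, 0.5))  # negative: the moving observer sees arrival before emission
```

For any signal slower than light the gap stays positive in every frame. Once the product of signal speed and frame speed exceeds c^2, some observers see the effect before the cause, which is the ordering problem described above.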

I am wondering if the calculation error would be something as simple as a bug in the maths instruction set of the processor.

This would be absolutely awesome, hahahaha. I didn’t even consider the possibility that “FTL neutrinos” could have resulted from a CPU instruction set error.

I’d assume that they manually checked over the results… but that would be a lot of work. Maybe too much for a human (or group of) to handle

Dark matter is hypothesized to be non-baryonic matter. Does that mean that without specific mass it can violate this causality limitation imposed on baryonic matter? (aka our FTL neutrinos)

Dark energy is hypothesized to be immune from the effects of the physical universe, only interacting with gravity.

So ‘hypothetically’; a dark energy drive powering a dark matter ship could travel FTL? The only problem is that the astronaut would have to be dead to travel in it. 🙂

Which does raise some interesting conjecture regarding sentience beyond the baryonic existence we currently inhabit. The limitations of causality may be the reason why we will never be able to detect or understand the universe from a Baryonic mass encumbered perspective.

Just as we cannot know if sentience traverses the gap between a baryonic and non-baryonic existence. In a way, the hypothesized existence of dark matter / energy is a hopeful sign that some kind of ordered reality ‘can’ exist beyond our physical death. If it does it could be a reality free of causality.

Just musing……

Dark matter generates gravitational influence – i.e. interacts with space-time in the same way that baryonic matter does. So FTL properties seem unlikely.

Dark energy is pure place-marker – little value in hypothesising anything about it.

You lost me with the last three paragraphs 🙂

@ Olaf,

Yes Magic, sort of like dark energy / matter, until someone pins down what exactly it is, it might as well be magic. I might add that science is not immune to conjecture of a Philosophical nature. Perhaps this site isn’t the place for it, but it was a lighthearted muse on my part.

@ Spectrum7Prism

Yes exactly, it’s not science but my particular interest is this imaginary (boundary) that can’t be crossed by anything baryonic. If there is a drop of validity in the idea, the implication is that we can never understand the nature of dark matter/ energy from this side of the fence. Personally I’m all for a more rational explanation.

@ Steve Nerlich: Some ‘Hypothesized’ Dark matter is proposed to be baryonic, however the vast majority is believed to be nonbaryonic and composed of, quote: “nonbaryonic dark matter includes neutrinos, and possibly hypothetical entities such as axions, or supersymmetric particles”

http://en.wikipedia.org/wiki/Dark_matter

While dark matter may interact gravitationally with baryonic matter the speed of Gravity, like the speed of a neutrino is defined by ‘c’ in General Relativity.

(actually defined as the speed of a Gravitational Wave in GR; that has never been observed or measured)

However: as neutrinos have ‘provisionally’ been observed to break the Cosmic speed limit I thought it was a valid muse. Until this observation is disproved or verified, your conjecture that ‘causality’ makes the idea of FTL nonsensical is an interesting point to make.

Causality, a ‘philosophical notion’, is something humans have been arguing about for eons. What you are implying is a ‘deterministic’ viewpoint, which fits well with theories such as General Relativity. I just wanted to throw a spanner in there by pointing out a scenario in relation to dark matter / energy that might not adhere to such a viewpoint. If Neutrinos are capable of FTL then deterministic causality may also be inadequate to the task of explaining the universe as we observe it.

http://en.wikipedia.org/wiki/Causality

http://en.wikipedia.org/wiki/Determinism

I think I understood your address. And from it, rewrote my little piece.

Given time and technological advancements, comprehensible explanations may well appear, and come within reach. But if it is what I have suspected: manifestations of an unseen force that has profound, and powerful ( obviously ) effects within the material universe, then physical man may never be able to fully grasp it, lay firm hold upon it. How far does his understanding go, in what he “sees” in the nuclear sphere?

I did not mean to imply that he should not try ( else, we would still be in the Dark Ages ).

Happy with your qualification that dark matter may include some baryonic component (albeit small). However, it is not correct that GR defines neutrino speed as c – they have a small component of mass and hence should be sub-c (albeit the ones in the Gran Sasso experiment probably were moving at 99.99etc c).

Reading through interesting postings, from an article I barely understood, your thoughts caught my eye ( last two paragraphs ). We are under “limitations” as physical beings in space, and confined within the 4th dimension of time, ours being “clocked” by the Earth-Moon-Sun dial reference-frame.

But, outside its realm, man, tied-down to the space-time fabric, as he is, may not be capable of comprehending the nature of forces that lie beyond the reality of his 4-plane existence, but dramatically evident within it. What could be described as an alternate reality: a separate “ordered” existence, with intelligent life of a different nature, even on a higher-plane, separate from, but not unrelated to, our 4-dimensional domain.

On the Cosmic scale, there are the “Dark Matter”–“Dark Energy” forces, invisible in nature, yet detectable and measurable through motions of matter and properties of space. Then, through the pioneering science on the microcosmic scale, a window is opened to the startling, phantom-like properties evident in the subatomic realm ( quantum physics ).

Is there an interface–at both frontiers–between two? Where the physical laws, and energy-matter effects in the time and space of one, phases out from, or into a different, alternate, but intimately related reality? Is one the shadow of the other ( like that of a marble pillar, which is far more substantial than the shadow )?

Through instrumentation, man’s inquisitive, exploring hand may, just now, have come within reach, come within range, of the two interfacing planes? Both, in the fantastical microcosmic world, and on the awesome level of the Universe. He can explain, to an extent, effects and properties. But what is “Dark Energy”? What is the nature of “Dark Matter”? We know what it is doing, but what is it? From where does it come?

— In a way, the hypothesized existence of dark matter / energy is a hopeful sign that some kind of ordered reality ‘can’ exist beyond our physical death.–

You are basically thinking about magic things.

And no, dark matter does not translate into some mystical being in a mystical place after death.

I think you confuse baryon properties with mass-energy. Relativistically all particles have mass, and real particles have real mass. That is why we call particles “matter”: they have real existence and are particulate so can build matter objects.

Dark matter (DM) is modeled as real particles (real mass, non-ftl) in standard cosmology, and that is the only thing that fits structure formation. Now on all scales from the cosmological down to spiral galaxies such as ours, a great result presented just the other week!

And added to that DM has been observed numerous times in gravitational lensing behaving non-ftl. It is definitely not ftl.

Dark energy is not immune to the universe but a part of the standard cosmology. It makes cosmological expansion go faster, and through backreaction (yes, through gravity) expansion affects it in principle – it is “kept” constant and not diluted.

In the simplest models dark energy is vacuum energy, and vacuum energy has many other interactions. (Static and dynamical Casimir effect, Unruh & Hawking radiation, Lamb shift, et cetera.)

It most definitely is! Science is empirical in nature, and famously philosophy is “just so” story telling that can’t be adjudicated by empirical means. Show me the data, that is a scientific finding that has in any way been a result of, or even helped by, philosophy.

That philosophy is exactly opposite of science methods is the very _point_ of it. It is like its spin off theology in that sense, worthless for observation and prediction, useful for obfuscation, harmful for science at worst.

I don’t like how you talk about philosophy. It is a good friend. You don’t even start empirical data without philosophy. When you discover it, you should be happy, I don’t see how it can be viewed as a negative thing unless it is applied wrongly.

@ Torbjörn Larsson

Causality; is a ‘philosophical notion’. Deterministic causality IS the ‘Philosophy’ that underpins science. I’m not selling an alternative, simply stating a fact.

Mr Steve Nerlich cites ‘causality’ in his excellent article. I think its fair to comment on it.

I very much disagree with you that science is immune from Philosophical conjecture. Science is based on a Philosophical precept and I think its important that this fact is stated. Humanity has been arguing about this since we could think, and will likely keep doing so for as long as we exist.

Deterministic Causality is simply the flavor of the times.

I think perhaps you are defending the Deterministic Philosophy of science, and confusing Philosophy with the subjective end viewpoints of (other) philosophical conjectures. Which might not agree with your world view. That does not invalidate Philosophy, it simply places you in one interpretation. Of which there are many.

http://en.wikipedia.org/wiki/Determinism

en.wikipedia.org/wiki/Causality

It should be noted that many (scientific) minds have argued against a Deterministic Philosophy in science. Read it up here:

http://en.wikipedia.org/wiki/Indeterminism

Re apparent confusion between mass and Baryonic matter; well I’m no expert I will admit. Mass–energy equivalence is all well and fine unless some FTL particles happen to be observed. 🙂

Where will you stand IF its proven that Neutrinos have traveled FTL? I do not wish to attack GR, just curious where this line of reasoning might go.

Great Discussion BTW. 🙂

Not that it matters much, but the idea of cause and effect predates philosophy.

It is built into our biology: even very young children know when objects that pass behind screens disappear and shouldn’t. (Googleable.) It was likely verbalized long before Greek philosophy got started.

What matters here is that it was found, out of the blue, that relativity clarified a preexisting notion of causality so that it could be predicted and observed.

Before that there was no reason to ponder these things, and indeed few did that. As evidence, if they had looked closely, they would have found “effects predating cause”. (Relativistic effects between different observers reordering observed events of other frames.)

And relativistic causality replaced the then de facto philosophical notions in science, because they were found to be not applicable.

As for the rest, my previous comment covers that. You haven’t come up with an example where philosophy even helped. In fact it would have been confusing, if only people had checked what they thought were self evident. (Going back to the harm of injecting philosophy into reality.) So observably philosophy is not part of science.

You are on a fishing expedition to see if you can connect me to philosophy, but that is sorely mistaken. I am very explicitly claiming that there is no use of philosophy in science.

An example of a (silly) philosophical question: If a tree falls in the forest and there is no one around to see it, do the other trees make fun of it?

If they are cruel?

“Hey, I heard a crash behind my back. For a while, it had me stumped!”

Meantime, in the real world of history of science and philosophy, Newtonian physics was specifically and self-consciously a derivative of Cartesian philosophy (mathematical physics having been, like, invented by Descartes on the basis of his interpretation of matter as extended substance) and both Relativity and Quantum Physics were self-consciously and explicitly derived from Neo-Kantian philosophy.

Sure, you can’t disentangle the history of philosophy from the history of science before empiricism took off. That period is called “natural philosophy” for a reason.

I am talking about actual modern science naturally! If we go back and disentangle cartesian coordinates et cetera, we see that nothing of philosophy remains but has been replaced by predictive theory. (Which is why classical mechanics is invalidated and we have new kinds of mechanics.) So it didn’t help empirically, but was a hindrance for a theoretic formulation.

As for that modern period, if there was a smidgen of what, Kant?, in relativity and quantum mechanics nothing survived into peer review AFAIK. So much for “self-consciously and explicitly”.

This is as silly as religious people claiming that science is based on religion. Obviously neither of these things is relevant for the science of today, look at the peer reviewed papers that make actual advancement on fact or theory.

Light is not instantaneous … it takes time to move from one place to another.

I was hoping for some insight from this article but there was none.

Think frames of reference. Sure it takes time from your FoR – but from a photon’s FoR, no time.

Do not confuse what the observer sees and what the photon experiences.

Light from the sun gets here in about 8.3 minutes observed from Earth, but the photon itself experiences it as no time passed.

Photons don’t experience anything.

*headdesk* That was obviously meant figuratively, not literally. Don’t be purposefully obstinate. It’s annoying.

How about this then: “if there were a zero mass incorporeal indestructible human moving at exactly the speed of light, from their reference frame they would appear to arrive at their destination in exactly zero time”.

Dr. Hugh Everett would simply say that you can kill your grandfather and still exist since when you went back in time you entered a new universe. Killing your grandfather there would prevent any possibility of a duplicate of yourself from coming into existence there. With regards to the multiple universe theory for quantum mechanics, I have always wondered how there is enough room for all of these universes to exist. At some point wouldn’t they bump into each other? It would also make God’s job harder since how is he to judge your essence when one of you may have invented a vaccine that saved millions of lives while another became mad and released a vaccine that took millions of lives, unless God too is subject to the laws of quantum mechanics and there is one for each universe.

There’s an article on multiple universes (and their habit of bumping into each other) here: http://www.universetoday.com/87684/astronomy-without-a-telescope-bubblology/

Sorry, can’t help with the god issue.

This is about science not god!

But you have to assume non-standard physics to make that happen (time travel) in the first place. Many world theory (MWT) is as easily perverted by that as other quantum theories.

You have to distinguish many worlds from many universes (multiverses).

MWT has the worlds decohere so they can’t interact (“bump into each other”). That is the whole point of it!

Multiverses can interact. For example, bubble universes can “bump into each other”. Nerlich had another excellent article on that a few weeks back.

Then you start into mythology and magic, which is quite contrary to science. Science works because that stuff doesn’t. I suggest you take it elsewhere.

Suppose an alien spacecraft somehow zoomed through our solar system at six times the speed of light (from our perspective).

Would we be able to observe it? Would we even know it was there?

Guessing you didn’t read the article huh 🙂

It could move through at 99.999etc% of SOL – and if it did we should see it (unless it has a cloaking device)

6 times the speed of light is impossible.

It was a rhetorical question. 😉

A thought experiment: Cosmic consciousness is the idea that the universe exists as an interconnected network of consciousness, with each conscious being linked to every other, becoming one consciousness that knows no physical limitation and permeates all space and time. Faster than light travel then becomes an instantaneous given. Would that this consciousness wish to inhabit a physical body anywhere in the universe.. thinking it would make it so. Perhaps if we only listened a bit more closely to the inner spark which connects us to infinity… we could roam the stars at will? Bicameral chimera or hallucinations aside, why then do we choose to hide? ~*~

Getting a bit left field there.

Thought is still a physical process. In our case, the electrochemical transmission is not all that fast. There’s some interesting research (a recent SciAm article) around just how big you can make a brain (at least an Earth brain). Whales and elephants are probably at the edge of individual coherence because of the distance/time lag that a thought has to travel across their heads.

You can connect to infinite much more effectively with radio – which does at least move at SOL.

There you go being ‘reality centric’ again…. ~@; )

Whereas, for my thought experiment, I am assuming there are ‘other’ realities.

Consider the microbe Toxoplasma gondii, as found in cat poo. When rats eat cat excrement containing this microbe, it enters their system and has the ability to actually change rat behavior – making them lose their quite natural fear of cats.. which then causes them to be more easily captured and consumed, by cats. Trick!

“The Toxoplasma parasite invades cells and forms a space called a vacuole. Inside this specialized vacuole, called a parasitophorous vacuole, the parasite forms bradyzoites, which are the slowly replicating versions of the parasite. The vacuoles containing the reproductive bradyzoites form cysts mainly in the tissues of the muscles and brain. Since the parasites are inside cells, they are safe from the host’s immune system, which does not respond to the cysts.” (A Wiki-up)

Very clever considering that this is a ‘simple’ microbe… without a ‘brain’. Yet as in the above definition, would still contain life’s universal ‘spark’ and thereby have access to infinite knowledge… or ‘infinite’ from the perspective of a microbe anyway?

Exponentially, our complex behaviors might appear ‘microbial’ to that limitless higher ‘cosmic consciousness’…. which might exist/permeate (in) every reality/dimension.

Should we truly wish to ‘go to the stars’, I posit that this may be the only way~

The type of behaviour that you examine in your post (bacteria ==> rat ==> cat ==> bacteria (or A leads to B leads to C leads to A)) is easily explained by standard evolutionary theory. In fact, I’d go so far as to say that systems like the bacteria/rat/cat symbiosis you describe *must* eventually occur in any given ecosystem if darwinian evolution is correct.

There is no need to create new physics to explain something that is already explained. What you did there was the equivalent of saying “hyperspace must exist because bread rises!”. No, that’s not how it works. You don’t get to create a new, incredibly complex explanation to explain something that’s already fully and completely explained.

“…something that’s already fully and completely explained.” I will take as a reductionist assumption. From the perspective of a ‘cosmic consciousness’, could not evolution itself be considered a form of ‘space/time travel’?

There is a lot of intelligence in evolutionary systems, but it is well known that it is functional “lessons learned” by a non-intelligent evolutionary mechanism. Dawkins describes it thusly:

“This is analogous to the definition of information with which we began: information is what enables the narrowing down from prior uncertainty (the initial range of possibilities) to later certainty (the “successful” choice among the prior probabilities). According to this analogy, natural selection is by definition a process whereby information is fed into the gene pool of the next generation.

If natural selection feeds information into gene pools, what is the information about? It is about how to survive. Strictly it is about how to survive and reproduce, in the conditions that prevailed when previous generations were alive.”

Famously none of that learned information rests in the evolutionary process itself, which can be cartooned as “(hereditary) variation and selection”. Information in the genes has two sources: mutational noise that increases Kolmogorov information (KI), and Shannon information (SI) (the one described above) channeled into the genome by bayesian learning and decreasing the KI.

That SI is bayesian learning follows from Dawkins description. An a priori set of alleles (through variation) is run through the differential reproduction process of evolution and an a posteriori set of alleles result.

And environmental information is, to a gene, all other genes that it coevolves with, in the same and in different organisms it interacts with. It is shared and contingent happenstance interactions that contribute information, and there is no prior access. Also, local environmental description and interaction is very definitely finite.

Again, you try to insert magic where there is none to find, by ‘cold reading’ as it were.

Which is a pity, because the reality is so fantastic. Who knew that a virus “knows more about the human body” than we and our bodies do (since it takes advantage of us)? And all because that little fluff of encapsulated material is capable of simple chemical genetic function?

Realism is built into science, observed as constrained reaction on constrained action: action-reaction of classical mechanics, observation-observables of quantum mechanics. This concept is tested as valid beyond reasonable doubt.

One (or many) dualist or magic consciousness would be unlikely to have any of that. But more to the point it is less parsimonious so can’t compete with realism in the first place.

Some of the most high energy cosmic rays are at $E = 10^{10}$ GeV. This is much higher energy than the LHC, which pushes protons to $E = 10^4$ GeV or about 10 TeV. The mass of a proton is about 1 GeV. The relativistic gamma factor, $\gamma = 1/\sqrt{1 - (v/c)^2}$, is then computed as the energy of a particle divided by its mass, $\gamma = E/m$. For protons this is easy, and with the LHC $\gamma = E/m = 10^4$. With the highest energy cosmic ray $\gamma = 10^{10}$. The time compression on the frame of such a moving particle, or its proper time divided by our coordinate time, is the reciprocal of this, so $\tau/t = 1/\gamma$. This means a cosmic ray at the highest energy will on its frame cross the region of the universe out to z = 1 in only about 1.4 years, whereas on our clock it has been 13.7 billion years.
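The arithmetic here is short enough to check directly. This sketch takes the figures quoted in the comment at face value (a proton mass of about 1 GeV, energies of 10^4 and 10^10 GeV, and 13.7 billion years on our clock); the function names are invented for the example.

```python
PROTON_REST_GEV = 1.0  # "about 1 GeV" as quoted above; more precisely about 0.938 GeV

def gamma_from_energy(total_energy_gev, rest_energy_gev=PROTON_REST_GEV):
    """Lorentz factor from total energy: gamma = E / (m c^2)."""
    return total_energy_gev / rest_energy_gev

def proper_time(coordinate_time, gamma):
    """Time elapsed on the particle's own clock: tau = t / gamma."""
    return coordinate_time / gamma

for label, energy_gev in (("LHC proton", 1e4), ("cosmic ray", 1e10)):
    g = gamma_from_energy(energy_gev)
    years = proper_time(13.7e9, g)
    print(f"{label}: gamma = {g:.0e}, 13.7 billion years becomes {years:.3g} years")
```

With a gamma factor of 10^10, 13.7 billion years of coordinate time corresponds to roughly 1.4 years on the particle's clock.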

A distance in spacetime is $d^2 = (ct)^2 - x^2 - y^2 - z^2$, where this change in sign between the time and space variables means that spacetime has a Lorentzian metric with signature $[+,-,-,-]$. When this spacetime distance is zero these correspond to null lines or directions. The spacetime embeds its own projective space defined by light cones. The projective Lorentzian manifold is then defined at every point by the blow up of a point, or a light cone. This projective space and its null lines are then invariant in all possible frames. So there is then no special frame of reference for this structure, even if there are massive particles in the spacetime. There is a fibration between the light cone and the spacetime, which locally is the Lorentz group. Formally this is the same structure as the Fubini-Study metric and the Hilbert space C^n, which constructs the Berry phase for a quantum system.
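The frame invariance of the interval and of null lines can be checked numerically. This is a minimal sketch, assuming a boost along the x axis and treating the quantity quoted above as the squared interval; the events and function names are made up for the example.

```python
import math

def interval_squared(ct, x, y, z):
    """Squared spacetime interval with signature (+, -, -, -)."""
    return ct**2 - x**2 - y**2 - z**2

def boost_x(ct, x, y, z, beta):
    """Lorentz boost along x by velocity beta = v/c."""
    gamma = 1.0 / math.sqrt(1.0 - beta**2)
    return (gamma * (ct - beta * x), gamma * (x - beta * ct), y, z)

timelike = (5.0, 3.0, 1.0, 2.0)    # squared interval 25 - 9 - 1 - 4 = 11
null_event = (5.0, 3.0, 4.0, 0.0)  # squared interval 25 - 9 - 16 = 0, on the light cone

for event in (timelike, null_event):
    before = interval_squared(*event)
    after = interval_squared(*boost_x(*event, beta=0.6))
    print(before, after)
```

The coordinates change under the boost but the squared interval does not, and an event on the light cone stays on the light cone. That is the sense in which null lines single out no special frame.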

Quantum mechanics has a projective structure which is a complex realization of the projective structure (light cones etc) in relativity. The black hole complementarity is a pairing of such structures. Black hole complementarity is the relationship between quantum observables as measured by a stationary observer outside the black hole and an observer falling into a black hole. So in this black hole complementarity a quantum system has a description according to a pair of complex state variables. This pairing of complex variables constructs quaternions, and a pairing of quantum quaternion state variables constructs octonions.

I think that quantum mechanics and general relativity have a categorically equivalent structure. Quantum mechanics has been shown by various theorems to be noncontextual and to forbid signalling by nonlocal entanglements (faster than light signalling). The noncontextuality of hidden variables and the no-signalling theorem in QM then have a correspondence with no causality violating propagation of information in relativity.

LC

The article ignores the level of energy required to attain .999+ of light speed. Is it rational to believe we will command the energy of a supernova for star ship propulsion? Regarding the FTL neutrinos, I would remind the writer that when it comes to mass the neutrino is as close to nothing as you can get and still be something. Maybe the Lorentz transformation is something that is possible for atomic or sub-atomic particles, but for humans and their interstellar conveyances it may be beyond the bounds of practicality.

A MATTER OF PERSPECTIVE (from a philosophical point of view;-))

Hi! Great article. Great discussion.

Actually Prof. Heinz Haber elaborated on this 50 years ago. The question of Space in terms of size (how could multiple universes fit…) is simply a matter of perspective!

In my view it is semantically wrong to purport the idea of universes existing side-by-side, but rather UNIVERSES WITHIN UNIVERSES, i.e. any atom may well contain universes. this idea, to me, is logical! Don’t we all at some point in life remember a building or apartment as much smaller when seeing it as a grown-up vs. when a child…? SMILE

“Light essentially does move instantaneously, as does gravity”

You should’ve been more clear with what you meant when you said this. I was about to start explaining exactly why that conclusion is wrong before I realized you meant it in terms of the frame of reference of the photon or graviton (assuming it exists).

Steve, your article makes me a little angry.

“if you were to hop on a spacecraft going at 99.999 per cent of the speed of light you would get”

No, you were *not* to “hop” on this spacecraft, because in reality physics does not make it possible (see e.g. what the commenter Starcastle2011 said). Contrary to what you say, the fact of traveling time staying above zero is *not* the only limit the universe really imposes on us. And by the way, the spacecraft mentioned would smack you to pieces, and you will be dead — that’s another limit the universe *really* imposes on us.

“Light essentially does move instantaneously, as does gravity and …”

Sorry, but this is complete nonsense. Light and gravity — generally all four fundamental forces of nature — do *not* move instantaneously but some time is needed (using the word “essentially” doesn’t make it better).

“We are stuck on the idea that 300,000 kilometres a second is a speed limit, because we intuitively believe that time runs at a constant universal rate.”

No. As far as I’m concerned, I do not believe anything about the running of time intuitively. At university I *learned* physics, and I did an experiment by which I measured the speed of light, and I understand, using my conscious mind, how it works. As you know, the result of the experiment would be the same, when “going at 99.999 per cent of the speed of light”.

“So with the right technology, you can …”

“Can”? The “right” technology — pipedreams. And, “going at 99.999 per cent of the speed of light” in a spacecraft you will *know* that for the stars outside things are a little bit different. In order to arrive at a galaxy far, far away, you still have to travel millions of lightyears, and some stars will explode while you travel.

Well, now at Universe Today, a respected astronomy and space related website, in an article someone said “light moves instantaneously”. This is fodder for each and every lunatic out there.

Ever heard of the term Gedankenexperiment (thought experiment)?

And yes, from the perspective of the photon, it moves instantaneously. That we clock it at 300,000 km/s doesn’t matter at all to it.

You are not a big one for thought experiments.

Again think frames of reference… Within light’s frame of reference, it moves instantaneously. It is a mistake to think your FoR is the absolute FoR.

From light’s frame of reference, it doesn’t move at all. The universe moves around it. Also, if it moved instantaneously, then everything that happened to the beam of light during its motion would be happening simultaneously, which would mean that effects and causes were happening at the same time, which would seem to be a violation of causality. Or am I missing something?

It made me think about what kind of life it would be if you could travel at the speed of light. It’s like a borderline case in this article. Still breaking causality. If you wanted to visit some very distant world, with the light speed you would be instant, and you also don’t want time to pass on the Earth. You would be instantly there and instantly back, and that goes against causality, you would and wouldn’t visit the distant world. If you wanted to stay there for a week, you wouldn’t achieve the instant travel.

Hi, … if ‘ we ‘ were to limit ourselves to the speed-of-light even just inside our solar system, … it would take approximately eight minutes to reach the sun. Let alone the nearest sun, … 4+ years away. There is nothing ‘ instantaneous ‘ about that.

Everything has an acceleration curve attached. Try trillionths of a nano-second ???

Mr. Nerlich… Thank you once again for encouraging so much creative thought. You DO seem to have a ‘knack’ for that! Truly a gifting which continually ‘knocks on Heaven’s doors’… Anybody Ommmm? LOL!

Some of the comments here connect with many worlds interpretation of QM. There are also some comments about consciousness. Of course this takes us a bit afar from the relativity question at hand. However, as I indicated earlier today quantum mechanics and relativity have some categorical equivalency.

I have been reading David Foster Wallace’s essay on fatalism. DF Wallace wrote “Infinite Jest,” which is a pretty dense novel, and he was clearly a genius, who committed suicide 3 years ago. Wallace’s essay critiques Taylor’s argument for fatalism. Taylor’s argument centers around the decision on whether to order a battle. If the order O is given then the battle B happens. So it is if O then B, which means that O is the sufficient condition for B and B is the necessary condition for O. The same holds for not-O and not-B. The conditions B or not-B occur later in time. B or not-B is the necessary condition for O or not-O, and both of these conditions obey the excluded middle, O/not-O = U, and the AND condition is O/not-O = null. There is then a retro-diction argument that it is in one’s power to do O or not-O, but then if B occurs, since it is a necessary condition that O is true, then O/not-O is false. So I do not really have that power to make the choice. Hence fatalism is concluded.

The question is then whether fatalism is objective or not. In the quantum mechanical setting we have the two interpretations, Bohr’s complementarity world view, or the Everett-Deutsch Many World Interpretation. Quantum mechanics actually tells us nothing about whether one is true or false. There is a reason for this, for these outcomes which we observe subjectively depend upon the context of the measurement which selects the eigenstate of measurement. Quantum mechanics by Bell’s theorem and even more with the Kochen-Specker theorem tells us that quantum mechanics has no contextual hidden variables. As a result there is no procedure by which one can compute some context sensitive aspect of a quantum state measurement. If one accepts the many worlds perspective then the universe is objectively fatalistic. If one accepts Bohr’s Copenhagen interpretation (complementarity between quantum and classical worlds) then by the Schrodinger cat (or Wigner’s friend) argument it appears that the act of an observation is what ultimately determines a quantum outcome. In that case the universe is not objectively fatalistic. It is the existence of a conscious state which is ultimately involved with conferring an ontological meaning to the universe.

So am I with Bohr or with Everett & Deutsch? If you ask me on Monday, Wednesday and Friday I might say Bohr, and then Tuesday, Thursday and Saturday I will go with Everett and Deutsch. Sundays I go with football. That is a tough question, and I suspect it is not answerable. The reason is that this delves into the issue of ensembles of quantum measurements and aspects of computability and undecidability; the theorems of Gödel, Turing and Chaitin rear their heads here.

I will say that a paper I just got published involves Lie algebras for n-partite entanglements and the moduli groups for black holes. The two have compact subgroup representations that are products of SL(2,C) for quantum entanglements and products of SL(2,R) for black hole moduli. The two are, within a 2 to 1 map, isomorphic (equivalent), by some mathematics between algebraic systems over complex and real numbers. What this indicates is that general relativity and quantum mechanics are really equivalent structures, and general relativity is classical and quantum mechanics is, well, quantum. This strongly speaks for Bohr’s perspective on this matter! Hence this weighs on the side against fatalism.

However, we still have a flip side to this. It not only comes from Everett and Deutsch, but from Bohr! During the Solvay conference of 1930 Bohr and Einstein had a huge debate over quantum mechanics. It makes for fascinating reading to see how these two intellectual titans sparred. Einstein made an argument for beating the Heisenberg uncertainty principle by considering the weight of a body which emits a quantum. I will not go through this in detail here, for this is already too long. Bohr then found the problem with this and won the day. What I recently found is that Bohr’s argument in the context of a black hole leads to an uncertainty principle Δr Δt = Għ/c^4, where we can get into the matter of a “time operator” on some discrete measure zero set. The radial distance to the black hole and time are complementary observables, and this uncertainty principle pertains to the fluctuation of a black hole horizon. This again brings about a funny aspect to time. In this setting time is an operator, and it has no efficacious aspect to the world. This is the many worlds perspective and voila, we have objective fatalism.

Then to throw more strangeness into the mix, the two perspectives are in fact dual. There is no decidable method for saying one is true and the other false. This seems to go with Wheeler’s statement that the logical complement of a great truth is also a great truth. Indeed the relationship between the algebras with complex and real numbers is a two state system Z_2, similar to spin up/down.

This then takes me to a more metaphysical comparison of many worlds and Bohr. In the many worlds perspective there is an objective sort of fatalism. In the Bohr interpretation there is a cut off in the chain of observation due to the action of a conscious being. In the Bohr perspective the universe does have a strange dual nature, which might ultimately on one side involve a cosmic consciousness that is dual to the objective world.

LC

I think it should be pointed out that Deutsch himself doesn’t rely on a fixed block universe. (Cf “The fabric of reality”.) He points to ways that the many world theory avoids that fate [sic!].

Anyway, as the comment hints, this revolves around the question of consciousness. If you ask biologists like Jerry Coyne, they point out that biology isn’t dualistic, and that there is no “free will” in the dualistic sense in the biological machinery. The area is often hijacked by the “free will” choice of the religious to entertain soulism duality, hence pushing this type of “free will”.

My own take is that there are two or three levels of separation between a clockwork block universe and us.

– I don’t think much of Deutsch’s interpretation of MWT there, that he avoids a block universe, but it is certainly possible. I am less sure about testability.

– The classical sector shows that deterministic chaos exists, and that effectively punctures any models of prediction and retrodiction in all cases. Neither the universe nor we have the local infinite resources to resolve real numbers to infinite precision.

– And the biological machinery of our bodies is sufficiently complex that we can, in fact have to, attribute the effective model of free will choice to describe its actions.

So while I understand the idea of putting two quantum theories against each other in the absence of testing, I don’t think it affects the question at large.

It looks funny since it is funny, or at least it is uncertainty in a larger sense: the complementary pair time and energy aren’t outcomes of wavefunction uncertainty. They are outcomes of observing state evolution. So it isn’t actually time that is described as having uncertainty but the lifetime of a state.

This goes back to the question whether spacetime metric really fluctuates, or the system of quantum states inhabiting it.

I go with the tentative supernova timing results that imply the former doesn’t happen, while we have plenty of observations validating the latter. According to the energy-time uncertainty that is precisely what we should expect and no more.

Btw, I am curious, why would one define time as an operator over a measure zero set? (Or do you simply mean to say that a measure zero set measure, well, zero? I have problems interpreting that point.) That would introduce new physics, I would think, and maybe that is the (exciting) point.

The issue of a time operator was something W. Pauli worked out around 1933. Classical mechanics in Hamiltonian formalism is based around the idea of conjugate variables. These are usually position and momentum variables. In Hamiltonian mechanics there is the Poisson bracket structure {q, p} = 1, which is shorthand for (∂q/∂q)(∂p/∂p) – (∂p/∂q)(∂q/∂p) = 1 – 0. In quantum mechanics this translates to the commutator [q, p] = iħ, which is identified with the Heisenberg uncertainty principle Δq Δp = ħ. For wave mechanics there is the Fourier transform relationship Δω Δt = 1, where the energy of a quantum wave, given by E = ħω, gives the additional Heisenberg uncertainty relationship between energy and time ΔE Δt = ħ. However, there is no classical or Hamiltonian mechanics correspondence with this relationship. Yet we have this interest in making any distinction between space and time nonexistent. So this begs for some idea of there being a time operator.

The idea of a time operator is that there exist states |t> such that ψ(t) = <t|ψ>, which describes the probability of a particle occurring at a time t according to p[ψ(t)] = |ψ(t)|^2. The ket |t> is related to the energy ket |E> by the standard Fourier relationship <t|E> = N^{-1/2}e^{iEt/ħ}, for N a normalization constant. It is then possible to demonstrate that

<t|t’> = sum_i <t|E_i><E_i|t’> = N^{-1} sum_i e^{iE_i(t – t’)/ħ},

by a completeness sum, unit matrix = 1 = sum_i |E_i><E_i|. The time operator T then acts on |t> as

T|t> = iħ sum_{j≠k}(E_j – E_k)^{-1}|E_j><E_k|t> = iħN^{-1/2} sum_{j≠k}(E_j – E_k)^{-1}|E_j>e^{-iE_kt/ħ}

The sum over the index k is replaced by an integral

T|t> => iħN^{-1/2} sum_j ∫dE (E_j – E)^{-1}e^{-iEt/ħ}|E_j>

The integral is performed on the complex plane so the Cauchy integral formula results in

T|t> = -2πħN^{-1/2} sum_j e^{-iE_jt/ħ}|E_j>

This is just a Fourier transform of the |E_j> and thus returns T|t> = t|t>. This time operator clearly satisfies [T, H] = iħ, on a subset of the Hilbert space of states {|ψ>} such that sum_j <E_j|ψ> = 0. The odd thing about this operator is it describes the evolution on a discrete set. The inner product <t|E_j> = e^{iE_jt/ħ} can be used on the state |ψ> = sum_j a_j|E_j>, and the inner product ψ = <t|ψ> = sum_j a_j e^{iE_jt/ħ} is the wave function. This set however is a measure zero set. The energy eigenvalues are bounded below which means that π^{-1}∫_0^∞ log|ψ(t)| < ∞. This means that the commutator [T, H] = iħ holds on a measure zero (measure ε) set.
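The claim that [T, H] = iħ holds only on states whose energy amplitudes sum to zero can be checked on a small toy system. The sketch below is an illustration under loud assumptions: ħ = 1, a made-up four-level non-degenerate spectrum, T taken as the matrix iħ/(E_j – E_k) in the energy basis with the j = k terms dropped, and H diagonal. None of the function names come from the comment.

```python
HBAR = 1.0  # assumption: units with hbar = 1


def time_operator(energies):
    """Matrix of T in the energy basis: i*hbar/(E_j - E_k), zero on the diagonal."""
    n = len(energies)
    return [
        [0j if j == k else 1j * HBAR / (energies[j] - energies[k]) for k in range(n)]
        for j in range(n)
    ]


def matvec(matrix, vector):
    """Plain matrix-vector product for nested lists."""
    return [sum(row[k] * vector[k] for k in range(len(vector))) for row in matrix]


def commutator_on_state(energies, psi):
    """Return [T, H] psi for H = diag(energies)."""
    t_op = time_operator(energies)
    t_h_psi = matvec(t_op, [e * a for e, a in zip(energies, psi)])
    h_t_psi = [e * a for e, a in zip(energies, matvec(t_op, psi))]
    return [x - y for x, y in zip(t_h_psi, h_t_psi)]


energies = [0.5, 1.3, 2.9, 4.0]        # hypothetical spectrum
psi_zero_sum = [1.0, -2.0, 0.5, 0.5]   # amplitudes sum to zero
psi_generic = [1.0, 1.0, 1.0, 1.0]     # amplitudes do not sum to zero

print(commutator_on_state(energies, psi_zero_sum))  # compare with i*hbar*psi
print(commutator_on_state(energies, psi_generic))
```

For the zero-sum state the result matches iħ times the state up to rounding; for the generic state it does not, because the commutator works out to iħ(1 – |S><S|) with |S> = sum_j |E_j>.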

So the quantum time operator is a bit of an oddball, for it can only operate on some measure ε set of any quantum evolution. We might think of it as being how a discrete set of operations called steps in walking can give rise to a continuous motion. This does lead to some related issues of a discrete set in Taub-NUT spacetimes.

The many worlds interpretation results in a sort of relational block world. With each quantum state reduction the block splits off into two amplitudes that no longer interfere with each other. The one funny thing about this is that it creates an intrinsically time asymmetric picture of the universe. This is because the splitting off of the universe means there is some sort of contextuality inherent in the quantum universe. Contextuality in a quantum measurement is a classical aspect of the process. Quantum mechanics has no local hidden variables which have context for the measurement outcome. So there is something funny going on here.

LC

I am sorry, I have trouble understanding the physical context of your model. If we are talking discrete energy eigenvalues for a particle to make the math work, we are looking at a bounded system.

Then we would have a lowest energy, and I guess that loosely can be described as having the operator hold on a measure zero set. I would rather call such an operator holding on a non-zero measure, since the uncertainty zero-energy is the constraint on your commutator.

I hope I got the physics correct here, since I think the (for me) curious description was clarified. However, I misunderstood and there was no new physics as such, as far as I can see. If so, bummer! =D

I would say so too, however Deutsch himself disagrees so I don’t think we can claim that this is universally accepted. See the book I referred to.

The nature of time and its asymmetry is interesting, but not really a part of the block universe or quantum mechanics that takes it for granted (or not).

I don’t think evolution (whether as operator or decoherence) as such inserts new context. If you took away MWT you would still have decoherence.

Decoherence is reversible according to a tentative experiment (which reference I have lost at the moment). So MWT is reversible too, unless you define a variant that isn’t and in all likelihood can be invalidated eventually.

Further, I think you will find that quantum mechanics _must_ be reversible. In Hardy’s probabilistic toy model it is quantum mechanics that is the continuous physics with an operator that has no distinguished pre- and post states, while classical mechanics is precisely the discrete case to set up initial states and look for observational outcomes.

So presumably QM can go in and out of decoherence or it (or at least decoherence) wouldn’t work. And that seems to be observed.

A continuous time operator does not permit energy eigenvalues to be bounded. We might think of the Hermitian time operator T as having a unitary operator U(E) = e^{iET/ħ}. This is an “energy development operator” which is dual to the time development operator generated by the Hamiltonian. This prevents a boundedness condition on the Hamiltonian operator.

The energy development operator then can only have meaning on a set of discrete time points t_1, t_2, …, t_n so that the Hamiltonian eigenvalues are defined in these intervals. The intervals are then long enough so the energy spectrum can be defined to “near discrete” values. The “perfect” quantum theory has these time intervals infinite. The point of this is not to do quantum mechanics in the ordinary sense. This is set up in relationship with the discrete system in Taub-NUT spacetimes, and how this corresponds to the same in AdS spacetimes. This leads to conformal structure of the completion of the AdS and how quantum physics and relativity are categorically equivalent as Borel groups (an example is the Heisenberg group), modular systems and Etale-Grothendieck theory. Things get a bit abstract at this point.

The many worlds interpretation has a serious problem. If you are to assume that quantum mechanics pervades all of the universe then in the MWI approach the eigenbranching of the world means there is some sort of context in quantum mechanics. However, this contradicts some fundamental theorems, Bell, Kochen-Specker etc, which tell us that there do not exist contextual hidden variables. In effect the eigensplitting of the world in MWI means that there is some quantum means by which the eigen-basis of the decoherence is established. An example is how the experimentalist has a freedom to orient their Stern-Gerlach apparatus to measure the spin of electrons. In the Bohr interpretation (Copenhagen) the experimenter and apparatus are classical which permits this context to exist. Zurek has proposed an ein-selection model within the decoherence model, but we still have this little problem. In fact: We do not know WTF is going on.

There are cases where systems go in and out of decoherence. Some experiments have been performed to “resurrect” a quantum entanglement after it was subjected to a weak measurement. This is the confirmation of the Hardy theorem, which has emerged as an important aspect of QM.

With the evolution idea, that is one of Smolin’s things. Smolin has a way of coming up with all sorts of highly speculative ideas. I am not that big an upholder of this selection idea he has recently come up with.

LC

Lol, … oopsy, …

Only if you skip such irrelevancies as accelerative G-forces on the human body, … or

The fantasy time-dilation factoring, … you might travel 4 light years in real time two years, … turn around and come back in real time two years, … having travelled 8 light years distance.

You will still be only 4 years older, … and those who did not take the trip with you will only have real time aged 4 years, … just like you.

However if you travel 4 light years in two years, … and they travel the same distance at light speed it will take them 4 years, … real time eight years return trip for them.

Regardless, … by the time you ‘ get together again ‘ eight years will have passed, … no time dilation, … both will be eight years older.

Just my opinion, …

Actually it isn’t that bad. The usual “if we had a star drive” scenario assumes 1 g acceleration. After a year you observe the relativity effects easily, and the cumulative effects build quickly from there.

The accelerated system will age slower, and these effects are so pervasive that your GPS system has to take them into account. (Since orbiting satellites accelerate differently than you do while you inhabit the surface of the Earth.)

If you accelerate and decelerate at 1 g to stop at a star system, you will roughly cover 4 light years in between 5 and 6 years of planetary reference frame. (About the distance to the nearest stars.)

The ship passengers will age about 3.5 years since they get sufficiently close to light speed after 1 year of acceleration: about 1.7 years to accelerate, about 1.7 years to decelerate, nothing much in between for such a short trip.

Having two travelers accelerate on the same schedule will make them age equally. So they will be about 3.5 years older, while the planet inhabitants will age between 5 and 6 years.
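For anyone who wants to play with the numbers, here is a minimal sketch of the 1 g trip using the standard textbook formulas for constant proper acceleration: accelerate to the midpoint, flip, decelerate to rest. The assumptions are mine: a Julian year of 365.25 days, g = 9.80665 m/s^2, no coasting phase, and a hypothetical function name `flip_and_burn`. Under those assumptions a 4 light year trip comes out at roughly 5.6 years on the planet and roughly 3.5 years on the ship.

```python
import math

C = 299_792_458.0            # speed of light, m/s
G = 9.80665                  # standard gravity, m/s^2
YEAR = 365.25 * 86400.0      # Julian year, s
LIGHT_YEAR = C * YEAR        # metres


def flip_and_burn(distance_ly, accel=G):
    """Return (planet_years, ship_years) for a trip that accelerates at
    `accel` to the midpoint and decelerates at `accel` to the destination."""
    half = 0.5 * distance_ly * LIGHT_YEAR
    # Rapidity at the midpoint from x = (c^2/a) * (cosh(phi) - 1).
    phi = math.acosh(1.0 + accel * half / C ** 2)
    ship_seconds = 2.0 * (C / accel) * phi               # proper time
    planet_seconds = 2.0 * (C / accel) * math.sinh(phi)  # coordinate time
    return planet_seconds / YEAR, ship_seconds / YEAR


planet, ship = flip_and_burn(4.0)
print(f"planet frame: {planet:.2f} years, ship frame: {ship:.2f} years")
```

The gap between the two clocks grows quickly with distance, since planet time scales with sinh of the rapidity while ship time scales with the rapidity itself.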

Hi, … nice to see somebody is actually reading what is written.

There is an old expression about ‘ assume ‘, … not meaning it in a derogatory manner.

‘ I ‘ am not using the standard accelerative / deceleration model, … just straight simple actual factuals discounting that particular aspect as being ‘ equally utilized by both parties ‘.

Same thing as the ‘ getting to or exceeding light speed ‘.

Thank you for your input, … two thumbs up.

Why does light move instantaneously? You said there is nothing special about light, but what enables it to move instantaneously between point A and B, while I cannot? Is it mass?

In simplest terms it is light’s invariant mass being exactly zero that makes the relativistic time dilation (time factor) become zero. So from the light’s perspective it takes “no time” between its creation by emission and its destruction by absorption.*

And conversely it is your mass not being zero that makes the factor larger.

———–

* In terms of classical field physics, photons are created by a “near field” of an electromagnetic antenna, which can well be the electron cloud of an atom. The near field is when the separate electric and magnetic field from accelerated charges (often electrons) couple over a short distance, typically a few antenna lengths, to become EM wavepackets (photons).

That process takes time, and so does absorption when conversely the photon couples through the near field to matter again.

Interesting.

And is it known why light has no mass, while everything else has mass? Does light have energy? If so, shouldn’t E=mc^2 yield a non-zero mass for light?

What does it mean to have no mass, except that you can move in no time and have no dimensions? I mean structurally, is the inner makeup of a photon so different that it can have no mass?

I actually made comment up-thread that may be helpful. Btw, I meant to say “intrinsic invariant” mass above, sorry for the confusion. That is what is zero mass, the photon has relativistic mass of course.

Some of what you ask in the 2nd paragraph is also answered in the appendix below the line in that comment. The characteristics of dimension are totally separate from the characteristics of mass; for example the massive electron can be considered to be pointlike as of yet.

[Most particle characteristics are independent of each other, modulo quantum mechanics coupling of conjugate variables. So you can have mass but no dimension.]
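As a hedged aside on the E=mc^2 question: the standard energy-momentum relation of special relativity (textbook physics, not something derived in this thread) is written out below, with m the invariant mass and p the momentum. Setting m = 0 leaves E = pc, so a photon can carry energy and momentum with zero invariant mass, and E = mc^2 is only the special case of a body at rest (p = 0).

```latex
E^2 = (pc)^2 + (mc^2)^2
\quad\Longrightarrow\quad
\begin{cases}
E = mc^2, & p = 0 \ \text{(massive body at rest)} \\
E = pc,   & m = 0 \ \text{(photon)}
\end{cases}
```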

Often said; I didn’t know Allen said that.

Wikipedia pegs it as originating with Ray Cummings (1922); I didn’t know that either. That is a better pedigree, since Cummings was a scifi writer. =D

interestingly enough, i just tossed an email to OPERA and CERN about three weeks ago asking if they had accounted for relativistic time dilation with their neutrino experiments. i love it when great minds think alike.

Steve, does time really stand still for photons?

I would like to know how you would do a *real* physical experiment. “Real” is crucial, because with a thought experiment alone you can’t be sure that any statement in physics is correct.

I know it’s difficult, because we can’t really attach clocks (the pertinent measurement devices) to photons. We indeed are able to attach clocks to certain material things, e.g. planes, satellites, and space probes, accelerate them to higher velocities, and move them up and down in the gravity field. This way we have a foundation in reality when we speak e.g. about high speed muons raining down from space, “experiencing” a slow down of their own time, because of which their decay rate is smaller than for muons with smaller velocities. On top of that we have the explanation given by the theory of relativity.

Now, extrapolating this to a photon, how would you show in a real physical experiment that time really stands still for it? And, because of this, would it be correct to say that from a photon’s frame of reference it moves instantaneously (different words expressing just the same)? By the way, a physical frame of reference includes clocks; thus it’s even questionable whether it’s correct talking about a frame of reference of a photon *this* way (but I’m not sure).

As an example it would not be enough trying to show (or worse: only say), that no physical feature of a photon does ever change. That’s because the energy, or the frequency, respectively, of a photon changes when it moves through a gravity field. As far as I’m concerned, this does not look like time standing still.

It is true that in the neutrino’s frame of reference travel time is shorter than that measured in a stationary frame. Wasn’t the CERN measurement made in the stationary frame?

Hi, …

Not everyone is necessarily interested in the same thing, … for the same purposes.

Are there any specifically identified neutrinos which can be accelerated ???

” I ” am looking for what to me has been a longer time quandary, … communications at beyond light-speed. Hmmm, … ergo, … if two ‘ ships ‘ are travelling at faster than light, … the ‘ lead ship ‘ would be able to send back a message to the following ship.

However the following ship would be ‘ unable ‘ to transmit forward at faster than light speed.

Easiest rationale is two speed boats in a slower moving river, … the lead boat can throw a hunk of wood in which reduces to the speed of the river, …

???