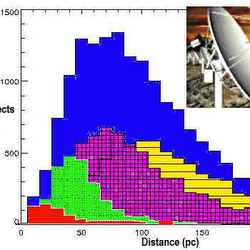

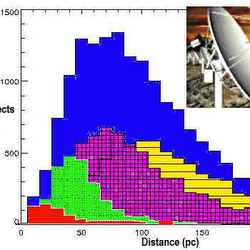

The number of HabCat stars, as a function of distance. Image credit: Turnbull, Tarter.

Scientists have been searching actively for signs of intelligent extraterrestrial civilizations for nearly half a century. Their main approach has been to point radio telescopes toward target stars and to “listen” for electronic transmissions from other worlds. A radio telescope is like a satellite TV dish – only bigger. Just as you can tune your TV to different frequencies, or channels, researchers can use the electronics attached to a radio telescope to monitor different frequencies at which they suspect ET may be transmitting signals out into the galaxy.

So far, no broadcasts have been received. But then, no one knows how many other civilizations with radio transmitters are out there – or, if they exist, where they are likely to be found. It’s only recently that the existence of planets around other stars has been confirmed, and because current planet-finding techniques are limited to detecting relatively large planets, we have yet to find the first Earth-like planet orbiting another star. Most planet hunters believe it’s only a matter of time before we find other Earths, but no one can yet make even a well-founded guess about how many terrestrial planets are in our galactic neighborhood.

With so little information to go on, it has been difficult for scientists involved in SETI (the search for extraterrestrial intelligence) to decide how to focus their search. So they’ve had to make some assumptions. One of those assumptions, which may seem a bit odd at first, is that humans are “normal.” That is to say, because we know for certain that intelligent life evolved on our planet, it stands to reason that other stars like ours may have planets like ours orbiting them, on which other intelligent civilizations have emerged. Based on this terrestrial bias, SETI searches thus far have focused on stars like our sun.

“The observational SETI programs have traditionally confined themselves to looking at stars that are very similar to our own star,” says Jill Tarter, director of the SETI Institute’s Center for SETI Research in Mountain View, California. “Because, after all, that’s the one place where we know that life evolved on a planetary surface and produced a technology that might be detectable across interstellar distances.”

Astronomers classify stars according to their surface temperature. The sun is a G-class star. SETI searches to date have focused on G stars and stars that are either somewhat hotter than the sun (F stars) or somewhat cooler than the sun (K stars). That has yielded a catalog of about a quarter of a million target stars. According to conventional astronomical wisdom, stars hotter than F-class would burn out too quickly for intelligent life to develop on planets that orbit them. Historically, M-dwarf stars, which are dimmer than K stars, also have been dismissed as potential SETI targets.

The two major arguments against M dwarfs have been:

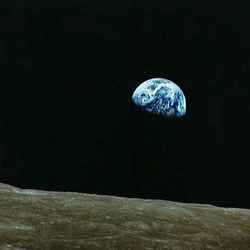

They’re too dim. M dwarfs put out so little radiation that a habitable planet would have to orbit very close in. Planets farther out would be frozen solid, too cold for life. A close-in planet would be tidally locked, though, always showing the same face to the star, as the moon does to Earth. The star-facing side would roast, while the opposite side would freeze. Not so good for having lots of liquid water around. And, says Tarter, “Liquid water is essential for life, at least for life as we know it.”

They’re too active. M dwarfs are known to have a lot of flare activity. Stellar flares produce UV-B radiation, which can destroy DNA, and X-rays, which in large doses are lethal. Presumably such radiation would be as harmful to extraterrestrial life as it is to life on Earth.
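The “too dim” argument can be put in rough numbers. The sketch below uses only the inverse-square law (a planet gets Earth-like starlight at a distance, in astronomical units, equal to the square root of the star's luminosity in solar units) and Kepler's third law. The luminosity and mass chosen for the example M dwarf are illustrative assumptions, not figures from the article or from any catalog, and the function names are invented for this example. It does not model tidal locking or climate.

```python
import math


def equal_flux_distance_au(luminosity_solar: float) -> float:
    """Distance (AU) at which a planet receives the same starlight as Earth.

    Flux falls off as 1/d^2, so equal flux means d = sqrt(L) when L is
    given in units of the sun's luminosity and d in astronomical units.
    """
    if luminosity_solar <= 0:
        raise ValueError("luminosity must be positive")
    return math.sqrt(luminosity_solar)


def orbital_period_days(distance_au: float, stellar_mass_solar: float) -> float:
    """Orbital period from Kepler's third law: P[yr]^2 = a[AU]^3 / M[Msun]."""
    if distance_au <= 0 or stellar_mass_solar <= 0:
        raise ValueError("distance and mass must be positive")
    return 365.25 * math.sqrt(distance_au ** 3 / stellar_mass_solar)


if __name__ == "__main__":
    # ASSUMED example star: 1% of the sun's luminosity, 30% of its mass.
    example_luminosity = 0.01
    example_mass = 0.3
    d = equal_flux_distance_au(example_luminosity)
    p = orbital_period_days(d, example_mass)
    print(f"Earth-like starlight at about {d:.2f} AU, period about {p:.0f} days")
```

For a star this faint, the planet would have to sit roughly ten times closer to its star than Earth does to the sun, which is the regime where tidal locking becomes a concern.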

These arguments seem reasonable. But there’s a catch. Most of the stars in the galaxy – more than two-thirds of them – are M dwarfs. If M dwarfs can host habitable planets, those planets might well be home to intelligent species. With radio transmitters. So, as scientists have begun to learn more about other solar systems, and as computer models of solar-system formation have gotten more sophisticated, some SETI researchers have begun to question the assumptions that led them to reject M dwarfs as potential SETI targets.

For example, atmospheric modeling has shown that if a planet in a close-in orbit around an M dwarf had a reasonably thick atmosphere, circulation would transfer the star’s heat around the planet and even out the temperature worldwide.

“If you put a little bit of greenhouse gas into an atmosphere, the circulations can keep that atmosphere at a reasonable temperature and you can dissipate the heat from the star-facing side and bring it around to the farside. And, perhaps, end up with a habitable world,” says Tarter.

Scientists have also learned that most of an M dwarf’s hyperactivity occurs early in its life cycle, during the first billion years or so. After that, the star tends to settle down and burn quietly for many billions of years more. Once the fireworks end, life might be able to take hold.

The question of M-dwarf habitability is a critical one for Tarter. The SETI Institute is in the process of building a new radio telescope, the Allen Telescope Array. Composed of 350 small antennas, the array will do double duty: it will be used by radio astronomers to survey the skies and it will search for radio transmissions from extraterrestrial civilizations.

“It’s an observatory that will simultaneously and continuously do traditional radio-astronomy observing and SETI observations,” says Tarter. “It’s the first telescope ever that’s being built to optimize both of those strategies.”

For the most part, traditional radio-astronomy research will determine where the telescope gets pointed; the SETI Institute will simply hitch a ride on the incoming signals. The array combines the signals from the many small antennas to make a large virtual antenna. By adjusting the electronics, researchers will be able to form as many as eight virtual antennas, each pointed at a different star.
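The idea of combining many small antennas into one virtual antenna, and of forming several such antennas at once from the same incoming signals, can be illustrated with a toy model. This is a minimal sketch of phase-aligned summing (often called beamforming), not a description of the Allen Telescope Array's actual electronics. The antenna layout (16 antennas in a straight line, 10 m apart), the wavelength, the directions, and all function names are assumptions made up for the example.

```python
import cmath
import math


def received_samples(positions_m, wavelength_m, source_angle_rad):
    """Unit-strength plane wave from one direction, as seen at each antenna.

    Each antenna sees the same wave with a phase shift that depends on its
    position along the line and on the arrival angle (measured from overhead).
    """
    k = 2 * math.pi / wavelength_m
    return [cmath.exp(1j * k * x * math.sin(source_angle_rad)) for x in positions_m]


def steering_weights(positions_m, wavelength_m, look_angle_rad):
    """Phase corrections that line the antennas up on the chosen direction."""
    k = 2 * math.pi / wavelength_m
    return [cmath.exp(-1j * k * x * math.sin(look_angle_rad)) for x in positions_m]


def beam_power(samples, weights):
    """Power of the weighted sum, normalized so a perfectly aligned beam gives 1."""
    total = sum(w * s for w, s in zip(weights, samples))
    return abs(total) ** 2 / len(samples) ** 2


if __name__ == "__main__":
    # ASSUMED toy array and source; none of these values come from the article.
    positions = [10.0 * i for i in range(16)]
    wavelength = 0.21
    samples = received_samples(positions, wavelength, source_angle_rad=0.02)

    # Several "virtual antennas" formed from the SAME samples, each using a
    # different set of weights to look in a different direction.
    for look in (0.02, -0.03, 0.05):
        weights = steering_weights(positions, wavelength, look)
        print(f"beam at {look:+.2f} rad: relative power {beam_power(samples, weights):.4f}")
```

Only the beam pointed at the source adds the antenna signals in step; the others largely cancel. Because every beam is computed from the same recorded samples, adding another beam costs extra computation, not another telescope.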

That’s where the M-dwarf question comes into play. At the highest frequencies that the telescope can receive, the instrument can focus on only a tiny spot in the sky. For the SETI search to be as efficient as possible, wherever the telescope is pointed, the institute’s researchers want to have several target stars to set their sights on. If only F, G and K stars are considered, there aren’t enough stars to go around. But if M dwarfs are included as targets, the number of prospects could increase as much as ten-fold.

“To make the most progress and to do the fastest survey of the largest number of stars in the next decade or so,” Tarter says, “I want a huge catalog of target stars. I want millions of stars.”

There is no way to know for sure whether M dwarfs host habitable planets. But no one has yet found a habitable planet around any star other than the sun, and it’s unlikely that one will be discovered for many years to come. Technology capable of finding Earth-sized planets is still in the development stage.

To do their work, though, SETI researchers don’t need to know whether or not the stars they’re investigating actually have habitable planets. They simply need to know which stars have the potential to host habitable worlds. Any star with potential belongs on their list.

“It’s not the star that I’m interested in,” Tarter says. “It’s the techno-signature from the inhabitants on a planet around the star. I don’t ever have to see the star, as long as I know that it’s in that direction. I don’t ever have to see the planet. But if I can see their radio transmitter – bingo! – I’ve gotten there. I’ve found a habitable world.”

That’s why Tarter and her colleagues want to know whether or not to include M dwarfs on their target list. To help answer that question, Tarter convened a workshop in July of this year that brought together astronomers, planetary scientists, biologists, and even a few geologists, to explore whether it made sense to add M dwarfs to the catalog of SETI target stars. Although workshop participants did identify some areas that require further research, no insurmountable problems turned up. The group plans to publish its preliminary findings for scrutiny by the wider scientific community.

And that means that if we ever do receive a radio signal from an extraterrestrial civilization, the beings who sent it just might be residents of a solar system with a dim, red M dwarf at its center.

Original Source: NASA Astrobiology