Key members of the United States Congress are asking NASA to postpone further work on the Orbital Space Plane until the government decides what its next steps in space are going to be. Expected to cost $13 billion over the next 5 years, the OSP would provide a new way to transfer crews to and from the International Space Station. Two contractor teams, Boeing and Lockheed Martin/Northrop Grumman/Orbital Sciences, are competing to build the OSP – the winning team would be selected by this summer. NASA has acknowledged the request but hasn’t yet said whether it will comply.

SMART-1 Update: One Month in Orbit

Image credit: ESA

The European Space Agency’s SMART-1 spacecraft has been orbiting the Earth for a full month now, and has made 64 complete orbits. Engineers have been wary this week about firing its ion engine with the increased solar activity. There have been a few problems: the engine unexpectedly turned off, but worked fine on the next firing; its star tracker had difficulty orienting the spacecraft but upgrades to the software resolved that. It’s still on track to reach the Moon by March 2005.

The spacecraft is now in its 64th orbit and has been flying in space for one month! The main activity of the last week was to continue the thrust firings of the electric propulsion engine in order to boost the spacecraft orbit. This operation was limited due to problems with the local radiation environment as a result of the recent, high intensity solar activity. The engine has now generated thrust for a total cumulated time of about 300 hours.
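As a rough back-of-the-envelope check on those figures, the short Python sketch below works out the average orbital period and the share of time the engine has spent thrusting. It assumes that "one month" means 30 days, which the update does not state exactly, and the variable names are purely illustrative.

```python
# Rough figures from the update; the 30-day month is an assumption.
DAYS_IN_ORBIT = 30          # assumed length of "one month"
ORBITS_COMPLETED = 64       # from the update
THRUST_HOURS = 300          # cumulative thrust time, "about 300 hours"

hours_in_orbit = DAYS_IN_ORBIT * 24
mean_period_hours = hours_in_orbit / ORBITS_COMPLETED
thrust_fraction = THRUST_HOURS / hours_in_orbit

print(f"Mean orbital period: {mean_period_hours:.2f} hours")
print(f"Share of time spent thrusting: {thrust_fraction:.1%}")
```

Under that assumption the average works out to a little over 11 hours per orbit, with the engine running for roughly two-fifths of the time. The real period changes as the orbit is raised, so this is only an average.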

Despite the rather short thrusting phase, the electric propulsion engine performance has been periodically monitored as usual by means of the telemetry data transmitted by the spacecraft and by radio-tracking by the ground stations. We noticed that the EP performance is still improving. From the original expected underperformance of about 3%, we went to last week’s slight over-performance of about 0.5% and we now have an engine that gives about 1% higher thrust than expected. This confirms our confidence in the excellent conditions of the electric propulsion system.

In this period we have also experienced an autonomous shut-down, or flame-out, of the engine. This happened on 26 October 2003 at 19:23 UTC, a few hours before a scheduled switch-off. The engine then re-ignited autonomously at the next scheduled thrusting restart without problems. The experts are investigating the cause. One curious coincidence is that at exactly the same time the radiation monitors on two ESA scientific spacecraft in highly elliptical orbits (XMM and Integral) detected considerable radiation, probably coming from a solar flare. This event was so large and potentially dangerous that one instrument on board Integral stopped operations and switched itself into safe mode.

The electric power provided by the solar arrays has been according to predictions – about 1850 W for this phase of the mission. The power degradation, due to the radiation environment, was also less than expected at 1-1.5 Watts per day. Recently however, starting from October 20, we noticed a sharper degradation of the power, probably due to the increased radiation environment.

The communication, data handling and on-board software subsystems have been performing according to expectations so far. We are also detecting signs of an increase in the local radiation environment. An onboard counter records the number of hits produced by charged particles, like protons or ions, which cause a single bit in the digital circuits of the computer memory to change state, known as a Single Event Upset. We noticed a sharp increase in the count rate from 23 October onwards. This is currently attributed to the increased solar activity.
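To make the idea of a Single Event Upset concrete, here is a minimal Python sketch of one bit flipping in a stored word and a counter noticing it. The update does not say how SMART-1's onboard counter detects upsets, so the parity check used here is only an assumption chosen for illustration, and the function names are hypothetical.

```python
def flip_bit(word: int, bit: int) -> int:
    """Return the word with one bit inverted, as a single particle hit might do."""
    return word ^ (1 << bit)


def parity(word: int) -> int:
    """Return 1 if the word has an odd number of set bits, otherwise 0."""
    return bin(word).count("1") % 2


# A stored memory word and the parity recorded when it was written.
stored = 0b10110010
stored_parity = parity(stored)

# Simulate a charged particle flipping bit 5 of the word.
upset = flip_bit(stored, 5)

# Illustrative counter: a parity mismatch is counted as one upset.
seu_count = 0
if parity(upset) != stored_parity:
    seu_count += 1

print(f"before: {stored:08b}  after: {upset:08b}  upsets counted: {seu_count}")
```

A single parity bit catches any one-bit change but cannot say which bit flipped, which is why real systems usually pair a counter like this with stronger error-correcting codes.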

The thermal subsystem continues to perform well and all the temperatures are as expected. During the last period the spacecraft systems coped very well with a partial eclipse of the Sun by the Moon, in which the Moon obscured about 70% of the solar disk for around 80 minutes. Although the average spacecraft equipment temperature has not changed much during the mission, some equipment is experiencing temperature fluctuations due to changes in both the spacecraft’s attitude along its orbit and the Sun’s position. The angle between the Sun direction and the orbit line of apses (the line joining the perigee and the apogee) has changed considerably during the mission. It has varied from about 16 degrees at the beginning of the mission to a current value of 35 degrees. This change could be responsible for the increase of the star tracker optical head temperature during part of the orbit. As the Sun gets further away from the line of apses, this effect should be attenuated and the star tracker conditions should improve.

The attitude control subsystem continues to work, in general, very well. The main area of concern in this period has been the star tracker. This advanced autonomous star mapper has failed in the last two weeks to provide good attitude information in a few cases during different parts of the orbit. We have now found the explanation for all cases. It is due to a combination of several effects. The dominant effect is the increased background radiation level, especially protons to which the star tracker CCD is sensitive. This effect, combined with the temperature increase of the star tracker optical head in some parts of the orbit, created ‘hot spots’ in the CCD which were mistakenly interpreted as stars. This problem has been corrected by a software change uploaded to the star tracker computer.

Another problem was caused by the high star richness of some areas of the galaxy where the star tracker is pointing during part of the orbit. Too many stars require a computer processing time in excess of the allocated slot and cause ‘drops’ of attitude determination. The third problem was the blinding of the optical head by the Earth’s disk. These problems have been corrected by modifications to the software of the star tracker, which has been successfully updated onboard. Since these corrections have been made, the star tracker has been working very well and no further drops in attitude determination have been observed.

Original Source: ESA News Release

Palomar Isn’t at Risk From Fire Yet

Image credit: Caltech

The terrible wildfires in Southern California have destroyed thousands of homes, killed more than 16 people and are still out of control in many areas. The Palomar observatory is in the area, but its operators feel that the 200-inch telescope isn’t at risk. The observatory was built with two layers of concrete and steel; dead trees and underbrush have been removed from a significant area; and it boasts a large water tank and a volunteer firefighting team. Smoke and ash have put a temporary halt to observations, though.

The tragic fires that continue to affect San Diego County remind us all just how fragile life and property can be. Currently fires are slowly approaching the area of Palomar Mountain, home to the California Institute of Technology’s historic Palomar Observatory.

Smoke and ash from the fires have put a temporary end to the Observatory’s nightly observations, but the Observatory itself is not threatened. In fact the dome of the 200-inch telescope is a safe place and has been selected as an evacuation point for the Palomar Mountain community.

“The builders of Palomar realized the potential fire danger and designed the 200-inch Hale Telescope to survive a fire. It is constructed with two layers of concrete and steel. Also, in recent months our maintenance staff along with foresters have removed dead and dying trees from the Observatory grounds. We are prepared for the worst,” says Palomar Observatory’s superintendent, Bob Thicksten. It doesn’t hurt that the Observatory has its own million gallon water tank, an array of fire hydrants and staff members who double as volunteer firefighters as well. Thicksten has worked tirelessly to maintain a working relationship with the local fire department, the United States Forest Service and the California Department of Forestry (CDF), which has its own fire station less than half a mile from the Observatory’s main gate.

The Palomar Observatory will issue further press statements as necessary.

Original Source: Palomar Observatory

Did You See an Aurora?

So, did you see an aurora? I got an email from my Dad at 4:00 am PST letting me know that he could see white ribbons of light directly overhead (Southwestern Canada). John Chumack was in Ohio and sent in the picture you see beside this story – they look like a red curtain against the horizon. He saw them twice at 4:30 am and 5:40 am.

The bulk of the storm hit us this morning, but the aurora should be visible for another day or two. So, have another try tonight and see if you can get lucky. If you’re in the Northern Hemisphere, look towards the north, and if you’re in the Southern Hemisphere, look south.

If you did see an aurora, let me know, I want to hear all about it. Send in pictures if you took them. If I get enough letters, I’ll put them into a story tomorrow, so be vivid in your description of what you saw. Just reply to this newsletter, or send me an email at [email protected].

Good luck!

Fraser Cain

Publisher

Universe Today

Book Review: Entanglement

Entanglement is the unusual behavior of elementary particles where they become linked so that when something happens to one, something happens to the other; no matter what the distance. Two entangled particles could be separated by the entire distance of the Universe and yet they can still communicate instantly with each other. Confused? Well, you’re in good company – this stuff is hard, and weird, and it defies common sense. In his latest book, “Entanglement”, Amir D. Aczel hopes to shed some light on this puzzling behavior.

With Entanglement, Aczel covers a pretty tough topic – the bizarre behavior of particles that become inextricably linked together; what Einstein called “spooky action at a distance.” In order to set the groundwork, the book begins with a series of one-chapter biographies, covering each of the major players in the research to uncover the nature of quantum entanglement, from Thomas Young (1773 – 1829) to physicists who only did their experiments in the last couple of years.

The book then moves into a detailed description of the major experiments that physicists have done to push the field of quantum theory forward. Some of these experiments will blow your mind when you consider the amazing stuff that’s going on in the world of the very small. Each time we encounter the concepts of entanglement, Aczel tries to present them differently hoping something will eventually stick in the reader’s mind.

The test of a good science writer is the ability to walk the line when including difficult concepts, and it’s here that Aczel really excels – he can explain complex scientific and mathematical concepts without baffling you, but also without dumbing them down too much. My eyes glazed over some of the formulae, but most of the time I could follow the points that Aczel was trying to get across.

I’ve got a special place in my heart for quantum theory; I really feel that it encapsulates what’s great about science. Here’s a field of study that defies common sense at every turn. Every advancement was made through experimentation, studying the results, and then working out the math to help describe what’s going on. The human mind can’t really conceive of what’s going on, and yet the science keeps uncovering more and more details about how the universe seems to work on the smallest scale. I wish other disciplines could leave their preconceived notions at the door like the quantum scientists. Nature seems to give up her secrets more willingly when we don’t try to force them one way or the other. (That’s a quantum pun there… )

I’ll warn you in advance, I’ve got some university math under my belt and I’ve read my share of quantum theory books, so the concepts were a little more accessible to me. This isn’t an introduction to quantum theory, but it’s not overly complex either; a nice compromise in my opinion. If this kind of thing interests you, then I recommend you give “Entanglement” a read – you won’t be disappointed.

Here’s more information from Amazon.com – Amazon.co.uk

Sloan Builds 3D Map of the Universe

Image credit: SDSS

Astronomers from the Sloan Digital Sky Survey have gathered data to build a precise 3-dimensional map that details the clusters of galaxies and dark matter. The map includes images of 200,000 galaxies up to 2 billion light-years away, accounting for six percent of the sky. The SDSS team – 200 astronomers at 13 institutions – measured the Universe to contain 70% dark energy (a mysterious force that pushes galaxies apart), 25% dark matter, and 5% normal matter.

Astronomers from the Sloan Digital Sky Survey (SDSS) have made the most precise measurement to date of the cosmic clustering of galaxies and dark matter, refining our understanding of the structure and evolution of the Universe.

“From the outset of the project in the late 80’s, one of our key goals has been a precision measurement of how galaxies cluster under the influence of gravity”, explained Richard Kron, SDSS’s director and a professor at The University of Chicago.

SDSS Project spokesperson Michael Strauss from Princeton University and one of the lead authors on the new study elaborated that: “This clustering pattern encodes information about both invisible matter pulling on the galaxies and about the seed fluctuations that emerged from the Big Bang.”

The findings are described in two papers submitted to the Astrophysical Journal and to Physical Review D; they can be found on the physics preprint Web site, www.arXiv.org, as of October 28.

MAPPING FLUCTUATIONS

The leading cosmological model invokes a rapid expansion of space known as inflation that stretched microscopic quantum fluctuations in the fiery aftermath of the Big Bang to enormous scales. After inflation ended, gravity caused these seed fluctuations to grow into the galaxies and the galaxy clustering patterns observed in the SDSS.

Images of these seed fluctuations were released in February by the Wilkinson Microwave Anisotropy Probe (WMAP) team; WMAP measured the fluctuations in the relic radiation from the early Universe.

“We have made the best three-dimensional map of the Universe to date, mapping over 200,000 galaxies up to two billion light years away over six percent of the sky”, said another lead author of the study, Michael Blanton from New York University. The gravitational clustering patterns in this map reveal the makeup of the Universe from its gravitational effects and, by combining their measurements with that from WMAP, the SDSS team measured the cosmic matter to consist of 70 percent dark energy, 25 percent dark matter and five percent ordinary matter.

The SDSS is two separate surveys in one: galaxies are identified in 2D images (right), then have their distance determined from their spectrum to create a 3D map 2 billion light-years deep (left), where each galaxy is shown as a single point, the color representing the luminosity. This shows only those 66,976 out of 205,443 galaxies in the map that lie near the plane of Earth’s equator.

They found that neutrinos couldn’t be a major constituent of the dark matter, placing some of the strongest constraints to date on their mass. Finally, the SDSS research found that the data are consistent with the detailed predictions of the inflation model.

COSMIC CONFIRMATION

These numbers provide a powerful confirmation of those reported by the WMAP team. The inclusion of the new SDSS findings helps to improve measurement accuracy, more than halving the uncertainties from WMAP on the cosmic matter density and on the Hubble parameter (the cosmic expansion rate). Moreover, the new measurements agree well with the previous state-of-the-art results that combined WMAP with the Anglo-Australian 2dF galaxy redshift survey.

“Different galaxies, different instruments, different people and different analysis – but the results agree”, says Max Tegmark from the University of Pennsylvania, first author on the two papers. “Extraordinary claims require extraordinary evidence”, Tegmark says, “but we now have extraordinary evidence for dark matter and dark energy and have to take them seriously no matter how disturbing they seem.”

The new SDSS results (black dots) are the most accurate measurements to date of how the density of the Universe fluctuates from place to place on scales of millions of light-years. These and other cosmological measurements agree with the theoretical prediction (blue curve) for a Universe composed of 5% atoms, 25% dark matter and 70% dark energy. The larger the scales we average over, the more uniform the Universe appears.

“The real challenge is now to figure what these mysterious substances actually are”, said another author, David Weinberg from Ohio State University.

SDSS LARGE-SCALE UNDERTAKING

The SDSS is the most ambitious astronomical survey ever undertaken, with more than 200 astronomers at 13 institutions around the world.

“The SDSS is really two surveys in one”, explained Project Scientist James Gunn of Princeton University. On the most pristine nights, the SDSS uses a wide-field CCD camera (built by Gunn and his team at Princeton University and Maki Sekiguchi of the Japan Participation Group) to take pictures of the night sky in five broad wavebands with the goal of determining the position and absolute brightness of more than 100 million celestial objects in one-quarter of the entire sky. When completed, the camera was the largest ever built for astronomical purposes, gathering data at the rate of 37 gigabytes per hour.

On nights with moonshine or mild cloud cover, the imaging camera is replaced with a pair of spectrographs (built by Alan Uomoto and his team at The Johns Hopkins University). They use optical fibers to obtain spectra (and thus redshifts) of 608 objects at a time. Unlike traditional telescopes in which nights are parceled out among many astronomers carrying out a range of scientific programs, the special-purpose 2.5m SDSS telescope at Apache Point Observatory in New Mexico is devoted solely to this survey, to operate every clear night for five years.

The first public data release from the SDSS, called DR1, contained about 15 million galaxies, with redshift distance measurements for more than 100,000 of them. All measurements used in the findings reported here will be part of the second data release, DR2, which will be made available to the astronomical community in early 2004.

Strauss said the SDSS is approaching the halfway point in its goal of measuring one million galaxy and quasar redshifts.

“The real excitement here is that disparate lines of evidence from the cosmic microwave background (CMB), large-scale structure and other cosmological observations are all giving us a consistent picture of a Universe dominated by dark energy and dark matter”, said Kevork Abazajian of the Fermi National Accelerator Laboratory and the Los Alamos National Laboratory.

Original Source: Sloan Digital Sky Survey News Release

Additional Columbia Documents Coming

Image credit: CAIB

The Columbia Accident Investigation Board announced on Friday that it will be releasing volumes II through VI of its analysis and recommendations. The documents will be available for download on Tuesday, and printed copies can be ordered through an official government printer. The new volumes contain appendices and supporting documentation for Volume I, which was released back in August and is still considered its formal recommendations on the accident.

The Columbia Accident Investigation Board will release Vols. II-VI of the CAIB’s Final Report at 10 a.m. on Tuesday, Oct. 28, 2003 on its website, www.caib.us. All of the volumes can be downloaded from the website. These volumes contain appendices that provide the supporting documentation for the main text of the Final Report contained in Vol. I, which was released on Aug. 26, 2003.

These appendix materials were working documents. They contain a number of conclusions and proposed recommendations, several of which were adopted by the CAIB in Vol. I. The other conclusions and proposed recommendations drawn in Vols. II-VI do not necessarily reflect the views of the CAIB but are included for the record. When there is conflict, Vol. I takes precedence. It alone is the CAIB’s official statement.

Hard copies of Vols. I-VI are available through the Government Printing Office for a fee. Those copies can be ordered from the GPO’s website, www.gpo.gov.

There will be no press briefing about the release of Vols. II-VI.

Original Source: CAIB News Release

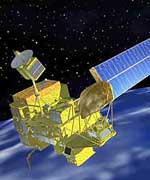

Contact Lost with Japanese Satellite

Image credit: JAXA

Ground controllers have lost contact with Midori 2, a $587 million environmental research satellite launched in December last year. The Japanese/US spacecraft didn’t check in on Saturday when it flew over a ground station; shortly after that it went into safe mode, and then all telemetry was lost. Controllers are trying to recover contact with the satellite, but it will probably be difficult because it’s not even sending out telemetry data. Midori 2 was supposed to last at least 3 years and use five scientific instruments to gather data about water vapour, ocean winds, sea temperatures, sea ice, and marine vegetation.

The Japan Aerospace Exploration Agency (JAXA) failed to receive earth observation data from its Advanced Earth Observing Satellite II, Midori-II, at its Earth Observation Center in Saitama Prefecture at 7:28 a.m. on October 25, 2003 (Japan Standard Time, JST). At 8:49 a.m. (JST), JAXA checked the operational status of Midori-II, and found it was switched to a light load mode (in which all observation equipment is automatically turned off to minimize power consumption) due to an unknown anomaly. Around 8:55 a.m. (JST), communications between the satellite and ground stations became unstable, and telemetry data was not received.

JAXA’s Katsuura Tracking and Communication Station also failed to receive telemetry data twice (9:23 and 11:05 a.m. JST.)

JAXA is currently analyzing earlier acquired telemetry data. The analysis of power generation data by the solar array paddle revealed that generated power has decreased from 6kW to 1kW.

We are doing our utmost to have Midori-II return to normal operation mode by continuing to analyze telemetry data and by working to understand the current condition of the satellite at our domestic and overseas tracking stations.

JAXA formed the “Midori-II anomaly investigation team,” led by the president of JAXA, to lead the investigation.

Original Source: JAXA News Release

See the Sunspots for Yourself

As you might know, there are currently two huge groups of sunspots on the surface of the Sun. They’re really easy to see if you have a pair of binoculars or a telescope. Don’t look at the Sun directly, because you can damage your eyes; instead, there’s an easy way to project an image of the Sun so you can see the spots. All you need is a piece of paper.

You line up the binoculars so that light from the Sun is passing through the eyepiece and onto a piece of paper you’re holding. Move the binoculars around a bit and you’ll eventually see a big bright circle moving around your paper. That’s the Sun. Then, focus the eyepiece of the binoculars so that the circle of light has a nice crisp edge. You should be able to see the sunspots right away. NASA has some great instructions on how to do this.

Let me know how it goes!

Fraser Cain

Publisher

Universe Today

P.S. Hotmail users are going to be experiencing some delays for the next while. There’s a problem with the way Hotmail tries to limit SPAM that’s clogging up all the mail they’re receiving. My newsletters are sometimes taking days before they’re getting accepted.

New Shuttle Solid Rocket Booster Tested

Image credit: Blake Goddard

Alliant Techsystems performed the first static test of a new five-segment solid rocket booster for the space shuttle. This new booster gives approximately 10% more thrust than the four-segment boosters that the shuttle currently flies with. If these new boosters are installed on the shuttle, there would be a couple of benefits: the shuttle would have enough thrust to still reach orbit if it loses thrust from its main engines, so it wouldn’t have to make an emergency landing; or the extra thrust could be used to let the shuttle carry an additional 10,500 kg of cargo.

ATK (Alliant Techsystems, NYSE: ATK) yesterday successfully conducted the first static test firing of a five-segment Space Shuttle reusable solid rocket motor (RSRM).

The test, conducted by ATK Thiokol Propulsion, Promontory, Utah, was part of an ongoing safety program to verify materials and manufacturing processes by ground testing motors with specific test objectives. This five-segment motor, also considered a margin test motor, pushed various features of the motor to their limits so engineers could validate the safety margins of the four-segment motor currently used to launch Space Shuttles. The static firing was also a test designed to demonstrate the ability of the five-segment motor to perform at thrust levels in excess of 3.6 million pounds, approximately 10 percent greater than the four-segment motor.

“This test demonstrated ATK’s unique ability and expertise in the design and production of the RSRM,” said Jeff Foote, group vice president, Aerospace. “It is yet another visible commitment by NASA and ATK to ensure the highest quality safety standards and mission success for future Space Shuttle flights.”

Foote said that in addition to validating safety margins by over-testing many RSRM attributes, the static firing also demonstrated the capability of the five-segment motor to increase Space Shuttle payload capacity by 23,000 pounds, or enable a safe abort to orbit in the event of loss of thrust from the main engines.

The five-segment motor generated an average thrust of 3.1 million pounds and burned for approximately 128 seconds. The current four-segment configuration generates an average 2.6 million pounds of thrust and burns for approximately 123 seconds. The new motor measures 12 feet in diameter and is 153.5 feet long – 27.5 feet longer than the four-segment motor.
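The figures quoted in the release are easy to cross-check with a little arithmetic. The Python sketch below multiplies average thrust by burn time as a crude stand-in for total impulse, converts the 23,000-pound payload gain to kilograms, and recovers the length of the four-segment motor. Treating average thrust times burn time as total impulse is a simplification rather than a figure from ATK, and the names used are illustrative.

```python
LB_TO_KG = 0.45359237  # kilograms per pound (exact by definition)

# Figures quoted in the release.
five_seg = {"avg_thrust_lbf": 3.1e6, "burn_s": 128, "length_ft": 153.5}
four_seg = {"avg_thrust_lbf": 2.6e6, "burn_s": 123}
EXTRA_LENGTH_FT = 27.5
EXTRA_PAYLOAD_LB = 23_000


def total_impulse(motor: dict) -> float:
    """Approximate total impulse (lbf*s) as average thrust times burn time."""
    return motor["avg_thrust_lbf"] * motor["burn_s"]


avg_thrust_ratio = five_seg["avg_thrust_lbf"] / four_seg["avg_thrust_lbf"]
impulse_ratio = total_impulse(five_seg) / total_impulse(four_seg)
extra_payload_kg = EXTRA_PAYLOAD_LB * LB_TO_KG
four_seg_length_ft = five_seg["length_ft"] - EXTRA_LENGTH_FT

print(f"Average thrust ratio: {avg_thrust_ratio:.2f}")
print(f"Approximate total impulse ratio: {impulse_ratio:.2f}")
print(f"Extra payload: {extra_payload_kg:,.0f} kg")
print(f"Four-segment motor length: {four_seg_length_ft} ft")
```

The payload figure comes out at a little over 10,400 kg, in line with the rounded 10,500 kg quoted in the summary at the top of this story.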

The static test allowed ATK to verify and validate numerous performance characteristics, processes, materials, components, and design changes that were incorporated into the five-segment RSRM. The test had 67 objectives and employed 633 instrumentation channels to collect data for evaluation. Preliminary results indicate that the motor met or exceeded all objectives.

The Space Shuttle RSRM is the largest solid rocket motor ever flown and the first designed for reuse. The reusability of the RSRM case and nozzle hardware is an important cost-saving factor for the nation’s space program. Each Space Shuttle launch currently requires the boost of two RSRMs. By the time the twin RSRMs have completed their task, the Space Shuttle orbiter has reached an altitude of 24 nautical miles and is traveling at a speed in excess of 3,000 miles per hour.

ATK Thiokol Propulsion is the world’s leading supplier of solid-propellant rocket motors. Products manufactured by the company include propulsion systems for the Delta, Pegasus, Taurus, Athena, Atlas, H-IIA, and Titan IV B expendable space launch vehicles, NASA’s Space Shuttle, the Trident II Fleet Ballistic Missile and the Minuteman III Intercontinental Ballistic Missile, and ground-based missile defense interceptors.

ATK is a $2.2 billion aerospace and defense company with strong positions in propulsion, composite structures, munitions, precision capabilities, and civil and sporting ammunition. The company, which is headquartered in Edina, Minn., employs approximately 12,200 people and has three business groups: Precision Systems, Aerospace, and Ammunition and Related Products. ATK news and information can be found on the Internet at www.atk.com.

Original Source: Alliant News Release