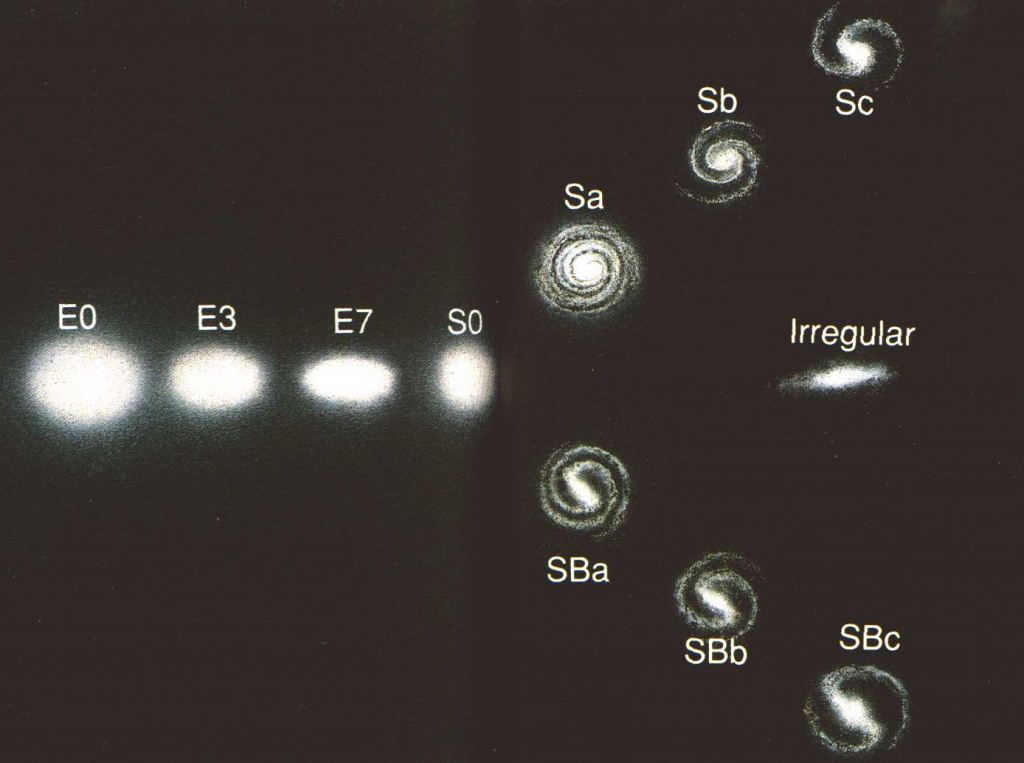

In the 1920s, Edwin Hubble studied hundreds of galaxies. He found that they tended to fall into a few broad types. Some contained elegant spirals of bright stars, while others were spherical or elliptical with little or no internal structure. In 1926 he developed a classification scheme for galaxies, now known as Hubble’s Tuning Fork.

At first glance, Hubble’s scheme seems to suggest an evolutionary sequence: galaxies begin as ellipticals, then flatten and develop into spirals. While many saw this as a reasonable model, Hubble cautioned against jumping to conclusions. We now know that ellipticals do not evolve into spirals, and that galaxy evolution is complex. But Hubble’s scheme marked the beginning of the effort to understand how galaxies grow, live, and die.
