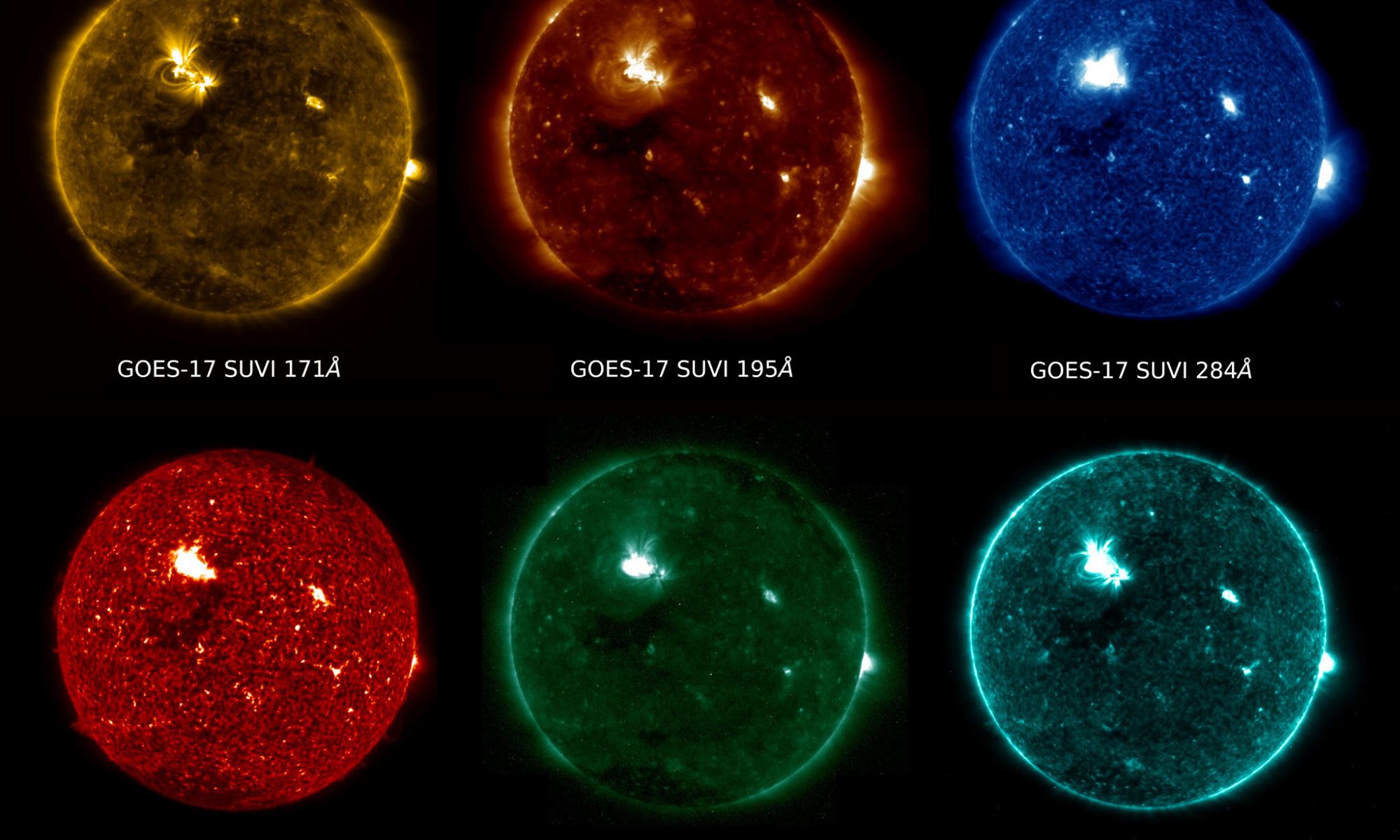

Our Sun is the source of life on Earth. Its calm glow across billions of years has allowed life to evolve and flourish on our world. This does not mean our Sun doesn’t have an active side. We have observed massive solar flares, such as the 1859 Carrington event, which produced northern lights as far south as the Caribbean, and drove electrical currents in telegraph lines. If such a flare occurred in Earth’s direction today, it would devastate our electrical infrastructure. But fortunately for us, the Sun is mostly calm. Unusually calm when compared to other stars.

Continue reading “The Sun is less active magnetically than other stars”

Could The Physical Constants Change? Possibly, But Probably Not

The world we see around us seems to be rooted in scientific laws. Theories and equations that are absolute and universal. Central to these are fundamental physical constants. The speed of light, the mass of a proton, the constant of gravitational attraction. But are these constants really constant? What would happen to our theories if they changed?

Continue reading “Could The Physical Constants Change? Possibly, But Probably Not”

How Do You Weigh The Universe?

The weight of the universe (technically the mass of the universe) is a difficult thing to measure. To do it you need to count not just stars and galaxies, but dark matter, diffuse clouds of dust and even wisps of neutral hydrogen in intergalactic space. Astronomers have tried to weigh the universe for more than a century, and they are still finding ways to be more accurate.

Continue reading “How Do You Weigh The Universe?”

Betelgeuse Is Bright Again

Everyone’s favorite red supergiant star is bright again. The American Association of Variable Star Observers (AAVSO) has been tracking Betelgeuse as it has gradually returned to its more normal brilliance. As of this writing, it is about 95% of its typical visual brightness. Supernova fans will have to wait a bit longer.

Continue reading “Betelgeuse Is Bright Again”

Why was there more matter than antimatter in the Universe? Neutrinos might give us the answer

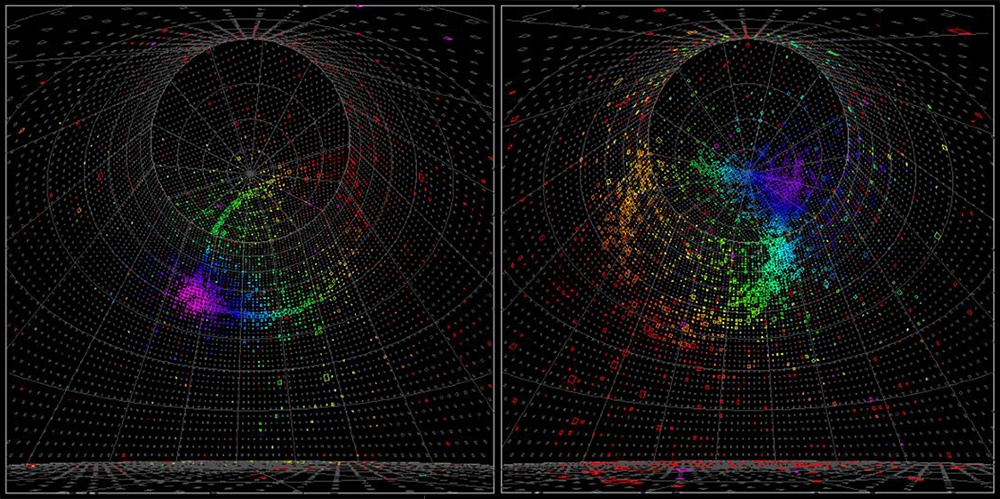

The universe is filled with matter, and we don’t know why. We know how matter was created, and can even create matter in the lab, but there’s a catch. Every time we create matter in particle accelerators, we get an equal amount of antimatter. This is perfectly fine for the lab, but if the big bang created equal amounts of matter and antimatter, the two would have destroyed each other early on, leaving a cosmic sea of photons and no matter. If you are reading this, that clearly didn’t happen.

Continue reading “Why was there more matter than antimatter in the Universe? Neutrinos might give us the answer”

New observations show that the Universe might not be expanding at the same rate in all directions

When we look at the world around us, we see patterns. The Sun rises and sets. The seasons cycle through the year. The constellations drift across the night sky. As we’ve studied these patterns, we’ve developed scientific laws and theories that help us understand the cosmos. While our theories are powerful, they are still rooted in some fundamental assumptions. One of these is that the universe and its physical laws are the same in every direction. This is known as cosmic isotropy, and it allows us to compare what we see in the lab with what we see light-years away.

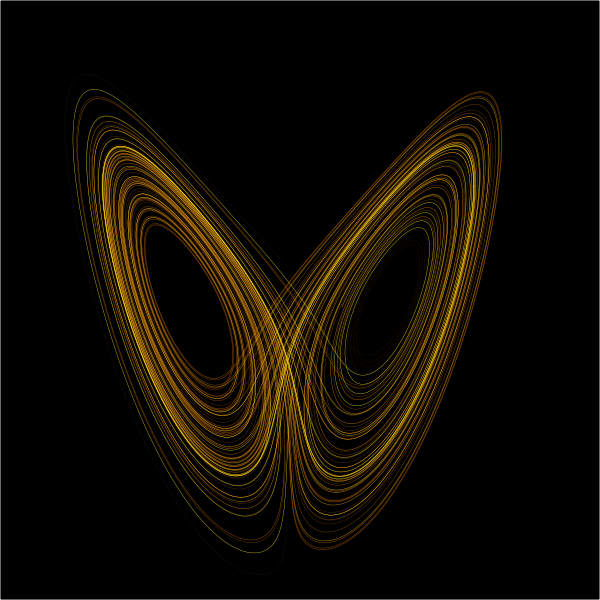

Continue reading “New observations show that the Universe might not be expanding at the same rate in all directions”

The three-body problem shows us why we can’t accurately calculate the past

Our universe is driven by cause and effect. What happens now leads directly to what happens later. Because of this, many things in the universe are predictable. We can predict when a solar eclipse will occur, or how to launch a rocket that will take a spacecraft to Mars. This also works in reverse. By looking at events now, we can work backward to understand what happened before. We can, for example, look at the motion of galaxies today and know that the cosmos was once in the hot dense state we call the big bang.

Continue reading “The three-body problem shows us why we can’t accurately calculate the past”

The Chemicals That Make Up Exploding Stars Could Help Explain Away Dark Energy

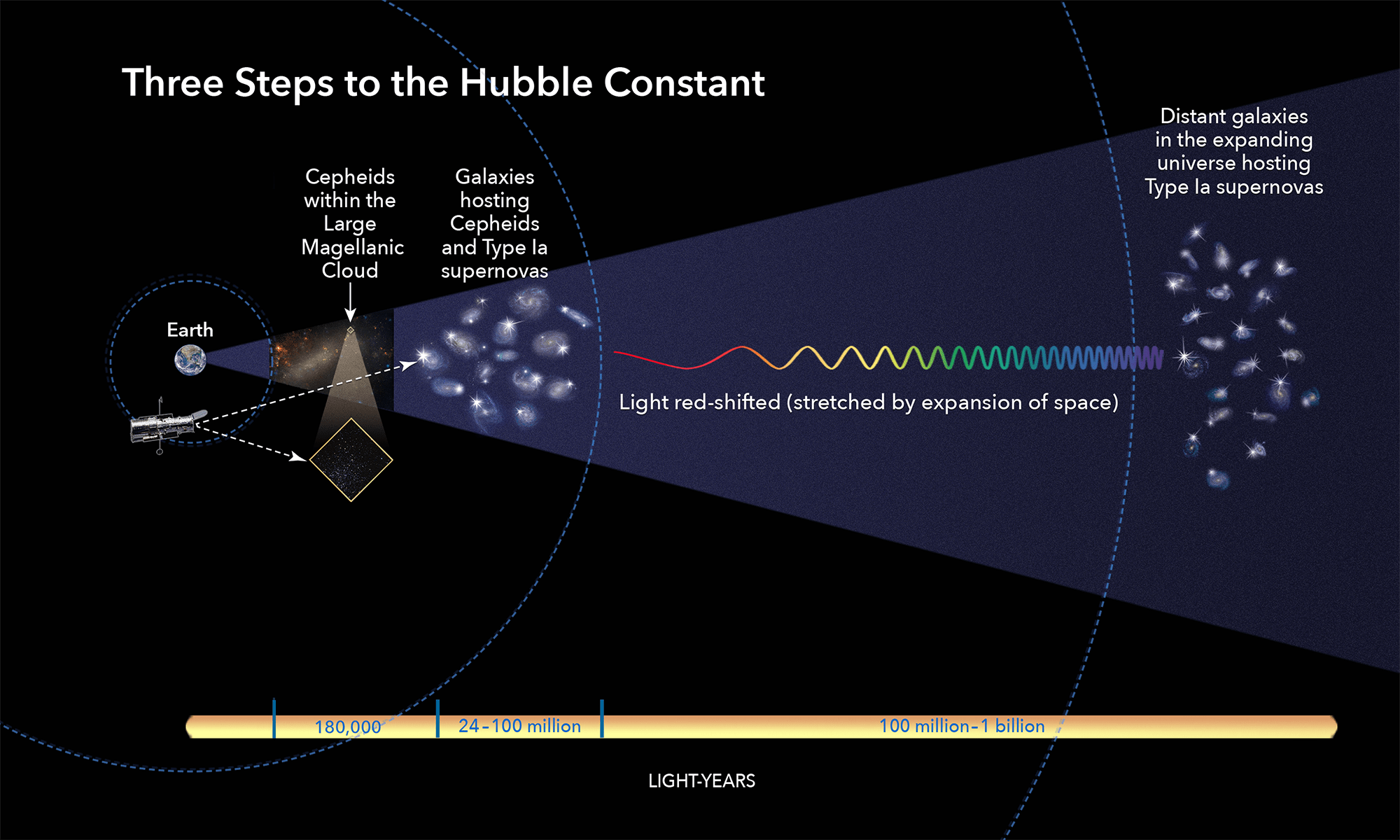

Astronomers have a dark energy problem. On the one hand, we’ve known for years that the universe is not just expanding, but accelerating. There seems to be a dark energy that drives cosmic expansion. On the other hand, when we measure cosmic expansion in different ways we get values that don’t quite agree. Some methods cluster around a higher value for dark energy, while other methods cluster around a lower one. On the gripping hand, something will need to give if we are to solve this mystery.

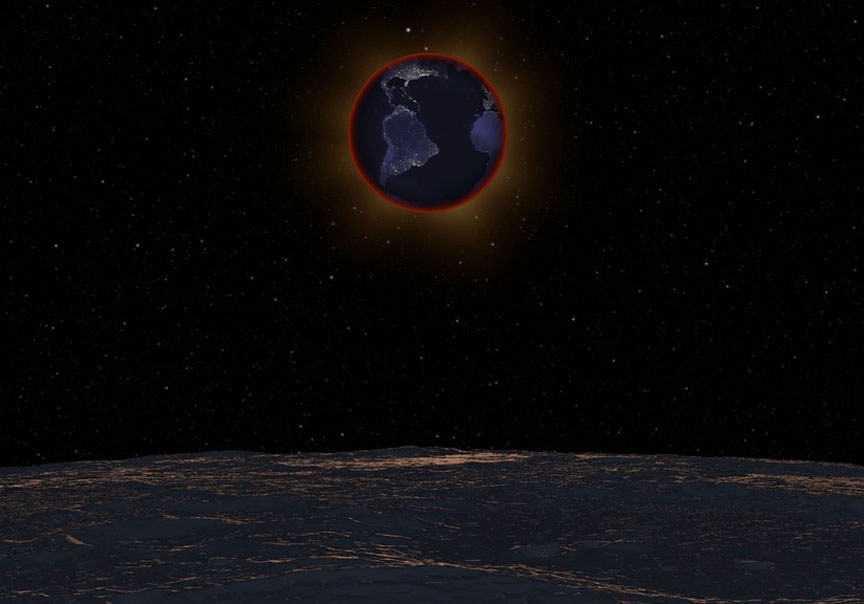

Continue reading “The Chemicals That Make Up Exploding Stars Could Help Explain Away Dark Energy”

During A Lunar Eclipse, It’s A Chance To See Earth As An Exoplanet

There are several ways to look for alien life on distant worlds. One is to listen for radio signals these aliens might send, as SETI and others are doing. Another is to study the atmospheres of exoplanets for biosignatures. But what might these signatures be? And how would they appear to our telescopes?

Continue reading “During A Lunar Eclipse, It’s A Chance To See Earth As An Exoplanet”

Betelgeuse Is Brightening Again

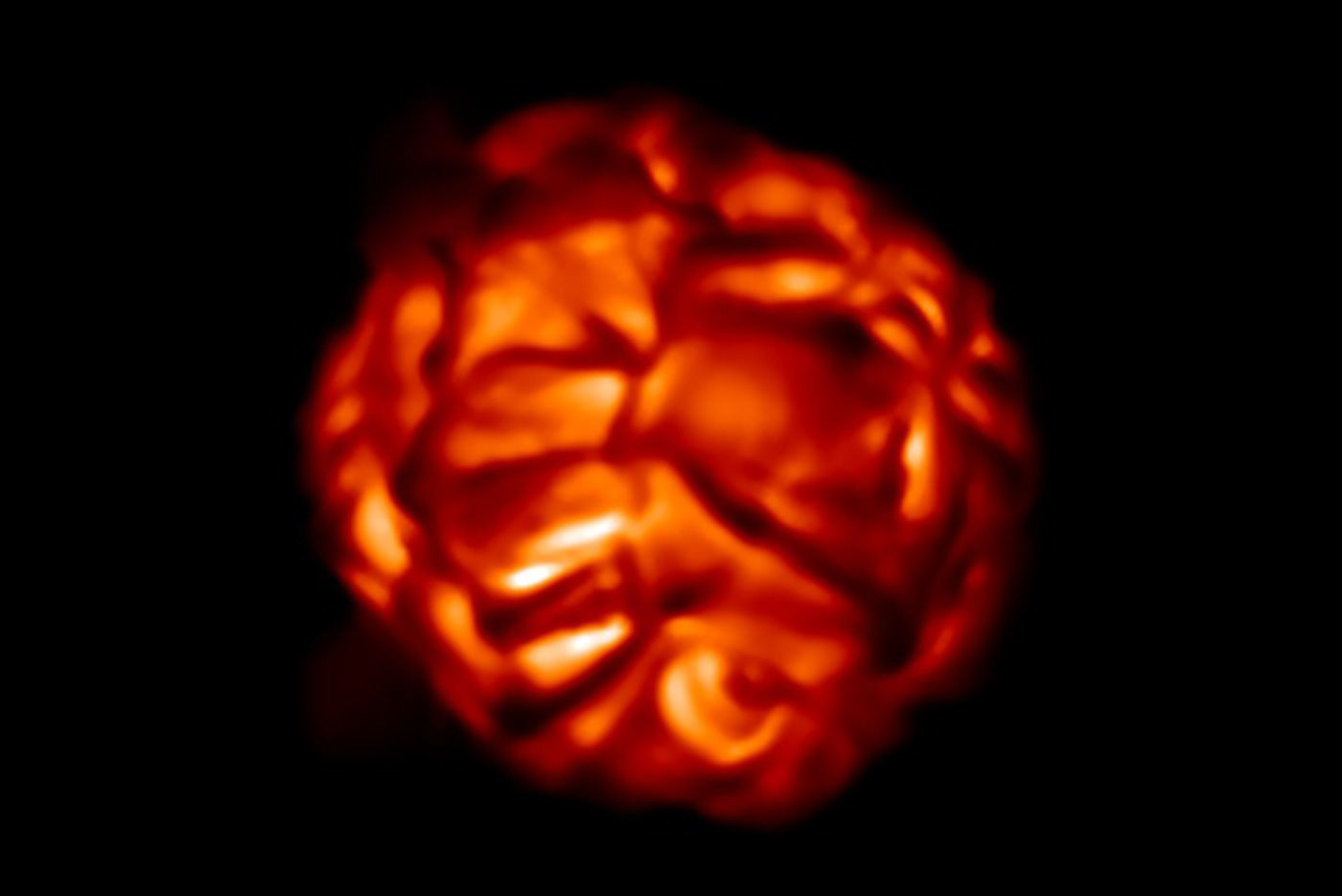

The latest observations of Betelgeuse show that the star is now beginning to slowly brighten. No supernova today! Nothing to see, better luck next time.

Despite some of the hype, this behavior is exactly what astronomers expected. Betelgeuse is a very different star from our Sun. While our Sun is a main-sequence star in the prime of its life, Betelgeuse is a red supergiant on the verge of death. But the death of a star is not a simple process.

Continue reading “Betelgeuse Is Brightening Again”