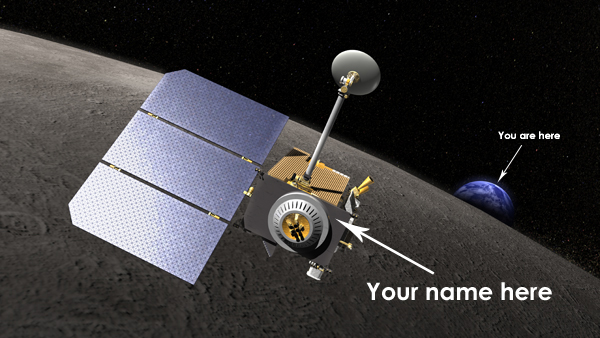

Have you ever dreamt of travelling to the Moon? Unfortunately, for the time being, this will be a privilege reserved for an elite few astronauts and robotic explorers. But NASA has just announced that you can send your name to the Moon on board its next big lunar mission, the Lunar Reconnaissance Orbiter. So head over to the mission site and submit your name; it will be stored on a computer chip, allowing a small part of you to orbit our natural satellite over 360,000 km (about 224,000 miles) away…

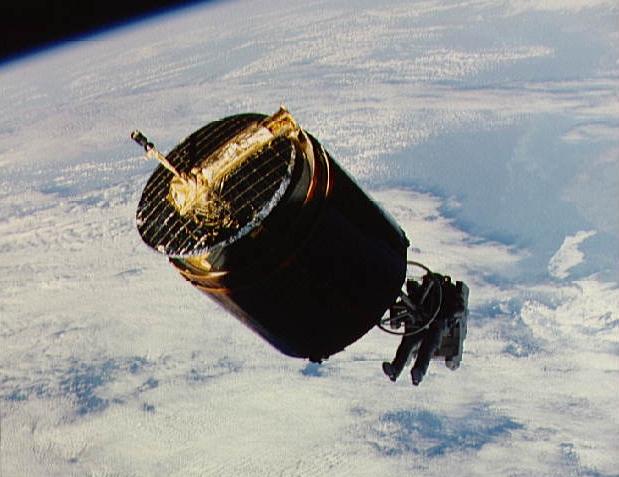

Last month I looked into how long it would take to travel to the Moon, and the results were wide-ranging. They ran from an impressive eight-hour zip past the Moon by the Pluto mission New Horizons to a slow spiral route taken by the SMART-1 lunar probe that lasted over a year. The next NASA mission, the Lunar Reconnaissance Orbiter (LRO), is likely to take about four days (just a little longer than the manned Apollo 11 mission in July 1969). It is scheduled for launch on an Atlas V 401 rocket in late 2008, and the mission is expected to last about a year.

Thank goodness we’re not travelling by car: according to the LRO mission facts page, driving at an average speed of 70 miles per hour, it would take 135 days (that’s more than four months!) to get there.
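As a quick back-of-the-envelope check of that figure (my own arithmetic, not a calculation from the mission page), driving non-stop at 70 mph for 135 days covers:

$$
135\ \text{days} \times 24\ \frac{\text{h}}{\text{day}} \times 70\ \frac{\text{mi}}{\text{h}} = 226{,}800\ \text{miles} \approx 365{,}000\ \text{km}
$$

That lands right in the range of the Earth-Moon distance, so the 135-day estimate holds up.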

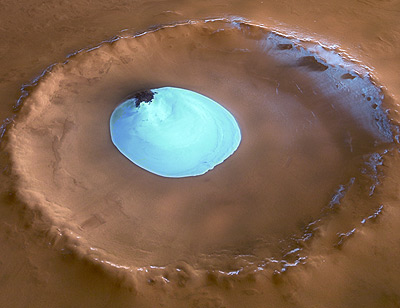

The LRO is another step toward building a Moon base (by 2020), the stepping stone toward colonizing Mars. The craft will orbit the Moon at an altitude of 50 km (31 miles), collecting global data, constructing temperature maps, taking high-resolution colour images and measuring the Moon’s albedo. Of course, like many recent missions to the Moon and Mars, the LRO will look out for water. As the Moon will likely become mankind’s first extra-terrestrial settlement, finding lunar water will be paramount when choosing possible sites for colonization.

This is all exciting stuff, but what can we do apart from watching the LRO launch and begin sending back data? Wouldn’t it be nice if we could somehow get involved? Although we’re not going to be asked to help out at mission control any time soon, NASA is offering us the chance to send our names to the Moon. But how can this be done? First things first, watch the NASA trailer, and then follow these instructions:

- Go to “NASA’s Return to the Moon” page.

- Type in your first name and last name.

- Click “continue” and download your certificate – your name is going to the Moon!

But how will your name be taken to the Moon? It won’t be engraved into the LRO’s bodywork (although that would have been nice!); instead, it will be stored on a microchip embedded in the spacecraft’s circuitry. The database of names will travel on board the LRO and remain with it for the entire duration of the mission. Anyone who submits their name will be exploring the Moon in their own small way. I’ve signed up (see my certificate, pictured), and you have until June 27, 2008 to do the same.

See you on board the LRO!

Sources: LRO mission site, Press release