As computers become more advanced, the microprocessors inside them shrink and use less electrical current. These new, energy-efficient chips can be packed closer together, increasing the number of calculations that can be done per second and so making the computer more powerful. But even the mighty supercomputer has its Achilles' heel: an increased sensitivity to interference from charged particles originating far beyond your office. These highly energetic particles come from space and may cause critical hardware to miscalculate, possibly putting lives at risk.

Foreseeing this problem, microchip manufacturer Intel has begun devising ways to detect when a shower of charged particles may hit its chips, so that when one does, calculations can be re-run to iron out any errors…

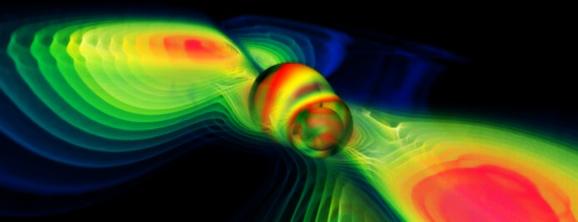

Cosmic rays originate from our Sun, from supernovae and from other, still unknown, cosmic sources. Typically, they are very energetic protons that zip through space close to the speed of light. Some are so powerful that it has been postulated they may create micro black holes when they strike Earth's upper atmosphere. Naturally, these energetic particles can cause some damage. In fact, they may be a huge barrier to travelling beyond the safety of Earth's magnetic field. The magnetosphere deflects most cosmic radiation, so even astronauts in Earth orbit are well shielded, but the health of astronauts could be severely damaged during prolonged interplanetary flight.

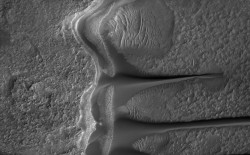

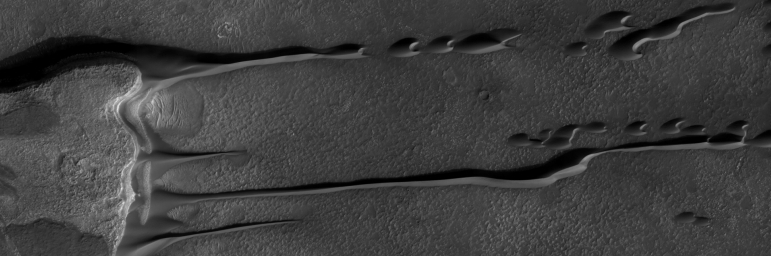

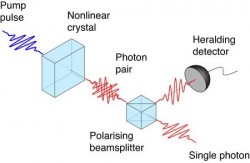

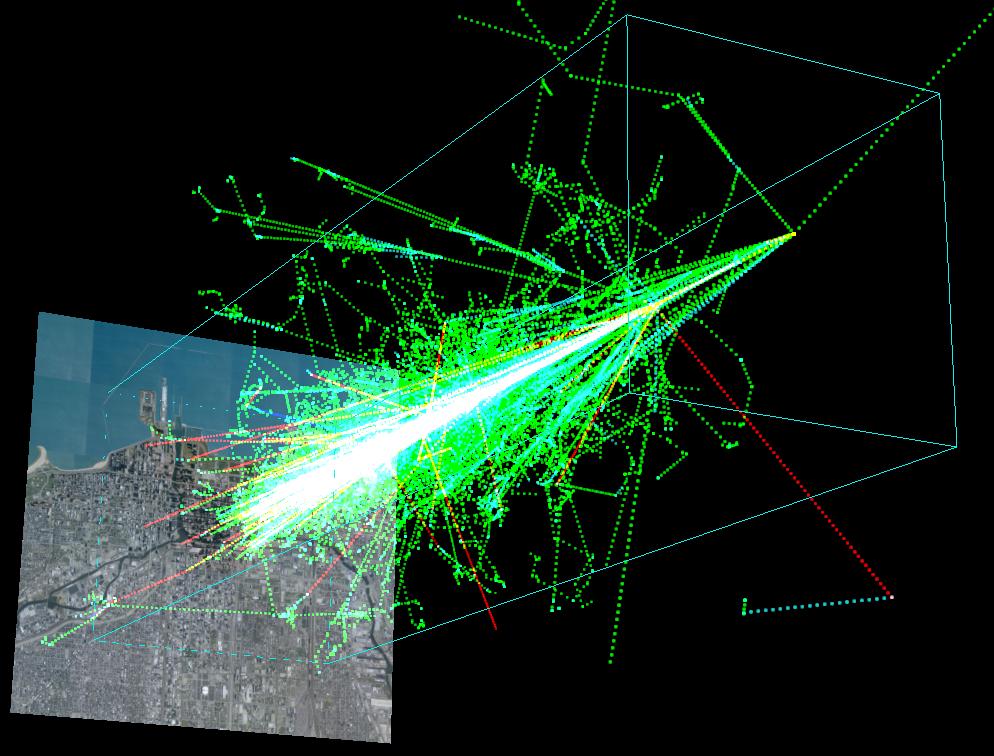

But what about on Earth, where we are protected from the full force of cosmic rays? Although cosmic rays account for only a small portion of our annual radiation dose (roughly 13%), they can have extensive effects over large volumes of the atmosphere. As cosmic rays collide with atmospheric molecules, a cascade of lighter particles is produced. This is known as an "air shower". The air shower from a single impact can contain billions of particles, many of them electrically charged (though each carries far less energy than the parent cosmic ray). The physics behind air showers is growing in importance, especially in the realm of computing.

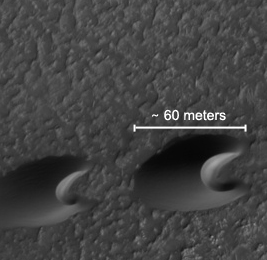

It seems microprocessor manufacturer Intel has been pondering the same question. It has just released a patent detailing its plans should a cosmic ray penetrate the atmosphere and hit one of its delicate microchips. The problem will arise when computing becomes so advanced that the tiny chips may "misfire" during a cosmic ray impact event. Should an unlucky chip be hit by a cosmic ray, a spike of electrical current may be induced in the circuitry, causing a miscalculation.

This may sound pretty benign; after all, what’s one miscalculation in billions? Intel’s senior scientist Eric Hannah explains:

“All our logic is based on charge, so it gets interference. […] You could be going down the autobahn [German freeway] at 200 miles an hour and suddenly discover your anti-lock braking system doesn’t work because it had a cosmic ray event.” – Eric Hannah.

As computers get smaller and cheaper, they are being used everywhere, including in critical systems such as the braking system Hannah describes above. Because the chips are so small, many more of them can be packed into a single computer, increasing the risk. Where a basic, single-processor computer may experience only one cosmic ray event in several years (producing an unnoticed calculation error), supercomputers with tens of thousands of processors may suffer 10-20 cosmic ray events per week. What's more, in the near future even humble personal laptops may have the computing power of today's supercomputers; 10-20 calculation errors per week would be unworkable, with too high a risk of data loss, software corruption or hardware failure.

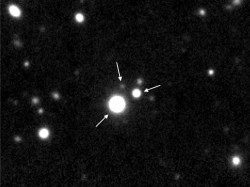

Orbital space stations, satellites and interplanetary spacecraft also come to mind. Space technology embraces advanced computing because it offers far more processing power in a smaller package, reducing weight, size and cost. What happens when a cosmic ray hits a satellite's circuitry and causes a calculation error? A single miscalculation could seal the satellite's fate. I dread to think what could happen to future manned missions to the Moon, Mars and beyond.

It is hoped that Intel's plan may be the answer to this ominous problem. The company wants to manufacture a cosmic ray event tracker that would detect a cosmic ray impact and then instruct the processor to re-run its calculations from a point before the cosmic ray struck. This way the error can be purged from the system before it becomes a problem.
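The BBC report does not describe how Intel's detector or recovery logic would actually work, but the general "detect, roll back, re-run" idea can be illustrated with a toy simulation. The Python sketch below is a minimal illustration under stated assumptions, not Intel's design: it assumes a hypothetical detector that never misses a strike, models a strike as a single flipped bit in the result, and saves a checkpoint before every step. The names `run_with_rollback`, `flip_bit` and `sum_squares` are made up for this example.

```python
from typing import Callable, Iterable, Tuple


def flip_bit(value: int, bit: int = 3) -> int:
    """Simulate a particle strike by flipping one bit of an integer result."""
    return value ^ (1 << bit)


def sum_squares(acc: int, i: int) -> int:
    """Toy 'calculation' for one step: add i squared to the running total."""
    return acc + i * i


def run_with_rollback(
    initial: int,
    n_steps: int,
    step: Callable[[int, int], int],
    strikes: Iterable[int] = (),
    detect: bool = True,
) -> Tuple[int, int]:
    """Run `step` n_steps times, re-running any step hit by a detected strike.

    `strikes` lists the step indices at which a simulated cosmic ray corrupts
    the result (on the first attempt only). Returns (final_state, reruns).
    Assumption: when `detect` is True, the hypothetical detector catches
    every strike; when False, corrupted results silently propagate.
    """
    pending = set(strikes)      # strikes that have not happened yet
    state = initial
    reruns = 0
    i = 0
    while i < n_steps:
        checkpoint = state      # last known-good state, saved before the step
        state = step(state, i)
        detector_fired = False
        if i in pending:        # simulated cosmic ray hit during step i
            pending.discard(i)
            state = flip_bit(state)   # the result is silently corrupted
            detector_fired = detect   # assumption: perfect detection
        if detector_fired:
            state = checkpoint  # roll back to the state before the strike
            reruns += 1
            continue            # re-run step i without advancing
        i += 1
    return state, reruns


if __name__ == "__main__":
    print(run_with_rollback(0, 10, sum_squares, strikes=[2]))                # (285, 1)
    print(run_with_rollback(0, 10, sum_squares, strikes=[2], detect=False))  # (293, 0)
```

With detection switched on, the strike during step 2 costs one re-run and the final total is the correct 285; with detection off, the flipped bit silently adds 8 and every later step builds on the wrong value. A real processor would have to do this in hardware, with checkpoints far cheaper than copying state in software, which is part of why a fast, practical detector is hard to build.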

There will of course be many technical difficulties to overcome before a fast detector is developed; in fact, Eric Hannah admits that it is hard to say when such a device may become a practical reality. Regardless, the problem has been identified and scientists are working on a solution; at least it's a start…

Source: BBC