Eris is the largest dwarf planet in the Solar System, and the ninth largest body orbiting our Sun. Sometimes referred to as the “tenth planet”, its discovery is responsible for upsetting the traditional count of nine planets in our Solar System, as well as leading the way to the creation of a whole new astronomical category.

Located beyond the orbit of Pluto, this “dwarf planet” is a trans-Neptunian object (TNO), a term for any planetary object that orbits the Sun at a greater distance than Neptune, or about 30 astronomical units (AU). Because of this distance, and the eccentricity of its orbit, it is also a member of the population of objects (mostly comets) known as the “scattered disk”.

The discovery of Eris was so important because it was a celestial body larger than Pluto, which forced astronomers to consider, for the first time in history, what the definition of a planet truly is.

Discovery:

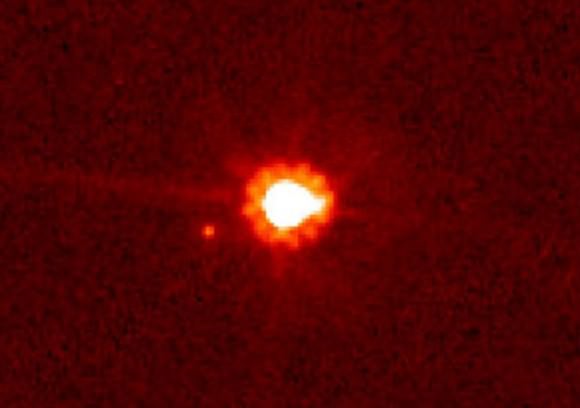

Eris, which has the full title of 136199 Eris, was first observed in 2003 during a Palomar Observatory survey of the outer solar system by a team led by Mike Brown, a professor of planetary astronomy at the California Institute of Technology. The discovery was confirmed in January 2005 after the team examined the pictures obtained from the survey in detail.

Classification:

At the time of its discovery, Brown and his colleagues believed that they had located the 10th planet of our solar system, since it was the first object in the Kuiper Belt found to be bigger than Pluto. Some astronomers agreed and liked the designation, but others objected, claiming that Eris was not a true planet. At the time, the definition of “planet” was not clear-cut, since the International Astronomical Union (IAU) had never issued an official definition.

The matter was settled by the IAU in the summer of 2006. They defined a planet as an object that orbits the Sun and is large enough to make itself roughly spherical. Additionally, it would have to be able to clear its neighborhood, meaning it has enough gravity to force objects of similar size, or objects that are not under its gravitational control, out of its orbit.

In addition to finally defining what a planet is, the IAU also created a new category of “dwarf planets”. The only difference between a planet and a dwarf planet is that a dwarf planet has not cleared its neighborhood. Eris was assigned to this new category, and Pluto lost its status as a planet. Other celestial bodies, including Haumea, Ceres, and Makemake, have been classified as dwarf planets.

Naming:

Eris is named after the Greek goddess of strife and discord. The name was assigned on September 13th, 2006, following an unusually long consideration period that arose over the issue of classification. During this time, the object became known to the wider public as Xena, which was the name given to it by the discovery team.

The team had been saving this name, which was inspired by the title character of the television series Xena: Warrior Princess, for the first body they discovered that was larger than Pluto. They also chose it because it started with the letter X, a reference to Percival Lowell’s hunt for a planet he believed to exist at the edge of the Solar System (which he referred to as “Planet X”).

According to fellow astronomer and science writer Govert Schilling, Brown initially wanted to call the object “Lila”. This name was inspired by a concept in Hindu mythology that described the cosmos as the outcome of a game played by Brahma, and was also chosen because it was similar to “Lilah”, the name of Brown’s newborn daughter.

Size and Orbit:

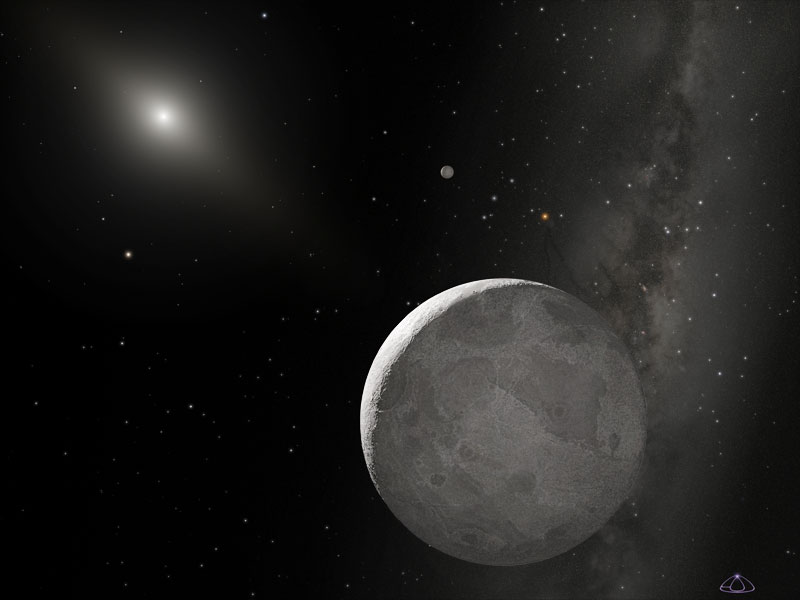

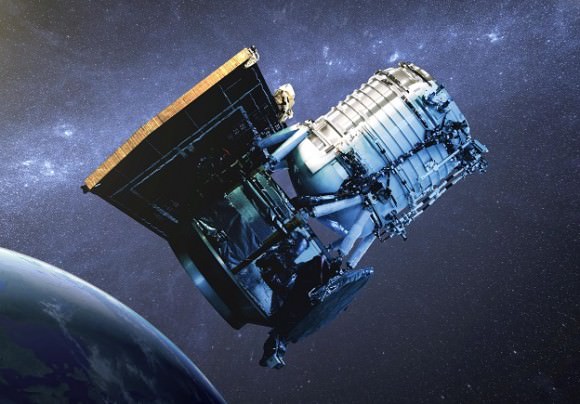

The actual size and mass of Eris have been the subject of debate, as official estimates have changed with time and subsequent viewing. In 2005, using images from the Hubble Space Telescope, the diameter of Eris was measured to be 2397 ± 100 km (1489 miles). In 2007, a series of observations of the largest trans-Neptunian objects with the Spitzer Space Telescope estimated Eris’s diameter at 2600 (+400/-200) km (1616 miles).

The most recent observation took place in November of 2010, when Eris was the subject of one of the most distant stellar occultations yet achieved from Earth. The team’s findings were announced in October 2011, and contradicted previous findings with an estimated diameter of 2326 ± 12 km (1445 miles).

Because of these differences, astronomers have been hard-pressed to maintain that Eris is larger than Pluto. According to the latest estimates, Pluto, the Solar System’s former “ninth planet”, has a diameter of 2368 km (1471 miles), placing it on par with Eris. Part of the difficulty in accurately assessing Pluto’s size comes from interference from its atmosphere. Astronomers expect a more accurate appraisal when the New Horizons space probe arrives at Pluto in July 2015.

Eris has an orbital period of 558 years. Its maximum distance from the Sun (aphelion) is 97.65 AU, and its closest approach (perihelion) is 37.91 AU. Because Eris is currently near aphelion, it and its moon are the most distant known objects in the Solar System, apart from long-period comets and space probes.
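The quoted period can be checked against the two quoted distances. The following is a minimal sketch, assuming a simple two-body orbit around the Sun and Kepler's third law in its solar form (period in years squared equals semi-major axis in AU cubed). The function name `orbit_from_apsides` is illustrative, not taken from any library.

```python
# Distances quoted in the text, in astronomical units (AU).
APHELION_AU = 97.65
PERIHELION_AU = 37.91


def orbit_from_apsides(aphelion_au: float, perihelion_au: float):
    """Return (semi-major axis in AU, eccentricity, period in years).

    Assumes an idealized elliptical orbit around the Sun and ignores
    perturbations from the planets.
    """
    # The semi-major axis is the mean of the farthest and closest distances.
    semi_major_axis = (aphelion_au + perihelion_au) / 2
    # Eccentricity measures how stretched the ellipse is (0 = circle).
    eccentricity = (aphelion_au - perihelion_au) / (aphelion_au + perihelion_au)
    # Kepler's third law for a body orbiting the Sun: P^2 = a^3.
    period_years = semi_major_axis ** 1.5
    return semi_major_axis, eccentricity, period_years


a, e, period = orbit_from_apsides(APHELION_AU, PERIHELION_AU)
print(f"semi-major axis: {a:.2f} AU")
print(f"eccentricity:    {e:.2f}")
print(f"orbital period:  {period:.0f} years")
```

Under these assumptions the result comes out at roughly 558 years, in line with the figure given above, and the eccentricity of about 0.44 shows how far the orbit departs from a circle.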

Eris’s orbit is highly eccentric, and brings Eris to within 37.9 AU of the Sun, a typical perihelion for scattered objects. This is within the orbit of Pluto, but still safe from direct interaction with Neptune (29.8-30.4 AU). Unlike the eight planets, whose orbits all lie roughly in the same plane as the Earth’s, Eris’s orbit is highly inclined: it is tilted at an angle of about 44° to the ecliptic.

Moons:

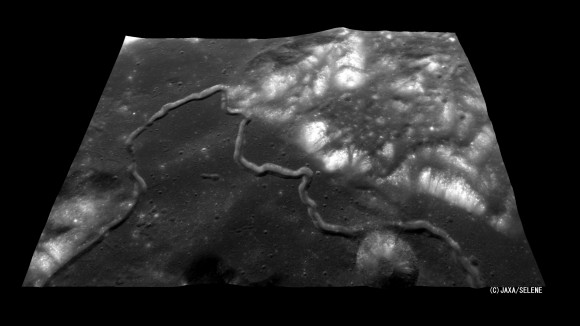

Eris has one moon, Dysnomia, which is named after the daughter of Eris in Greek mythology. It was first observed on September 10th, 2005, a few months after the discovery of Eris. The moon was spotted by a team using the Keck telescopes in Hawaii, who were busy carrying out observations of the four brightest TNOs (Pluto, Makemake, Haumea, and Eris) at the time.

Interesting Facts:

The dwarf planet is rather bright and can be detected using something as simple as a small telescope. Models of internal heating via radioactive decay suggest that Eris may be capable of sustaining an internal ocean of liquid water at the mantle-core boundary. These studies were conducted by Hauke Hussmann and colleagues from the Institute of Astronomy, Geophysics and Atmospheric Sciences (IAG) at the University of São Paulo.

Brown and the discovery team followed up their initial identification of Eris with spectroscopic observations of the planet, which were made on January 25th, 2005. Infrared light from the object revealed the presence of methane ice, indicating that the surface may be similar to that of Pluto and of Neptune’s moon Triton.

Due to Eris’s distant eccentric orbit, its surface temperature is estimated to vary between about 30 and 56 K (-243.2 and -217.2 °C). This places it on par with Pluto’s surface temperature, which ranges from 33 to 55 K (-240.15 and -218.15 °C).

