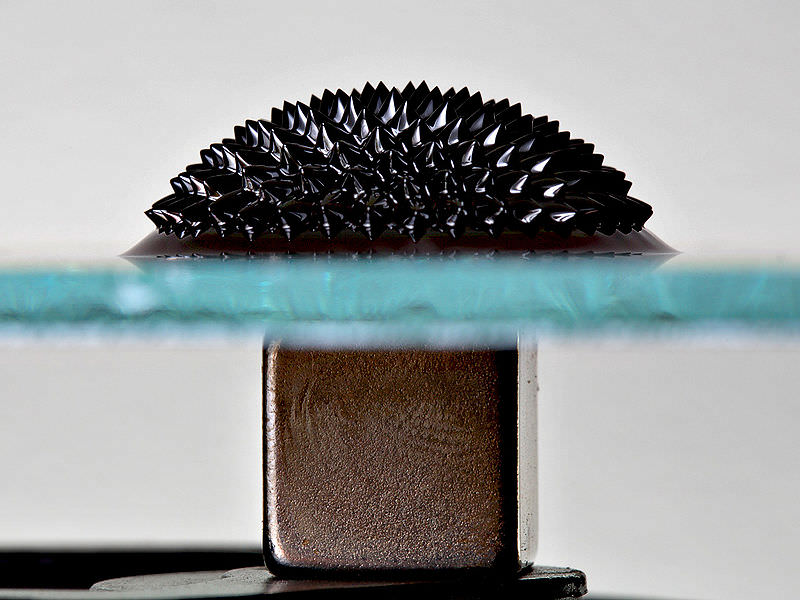

Electricity is an amazing, and potentially very dangerous, thing. In addition to powering our appliances, heating our homes, starting our cars and providing us with artificial lighting in the evenings, it is also an expression of electromagnetism, one of the fundamental forces upon which the Universe is based. Knowing what governs it is crucial to using it for our benefit, as well as to understanding how the Universe works.

For those of us looking to understand it, perhaps for the sake of becoming an electrical engineer, a skilled do-it-yourselfer, or just satisfying scientific curiosity, some basic concepts need to be kept in mind. For example, we need to understand a little thing known as conductance, a quantity closely related to resistance; together, the two govern the flow of electrical current.

Definition:

Conductance is a measure of how easily electric current flows along a certain path through an electrical element. Since electricity is so often explained in terms of opposites, conductance is considered the opposite of resistance; more precisely, it is the reciprocal of resistance. The relationship can be expressed through the equations R = 1/G and G = 1/R, where R equals resistance and G equals conductance.
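To make the reciprocal relationship concrete, here is a minimal Python sketch. The function names are hypothetical, chosen only for illustration; the code simply applies G = 1/R and R = 1/G, and refuses a zero input because its reciprocal would be infinite.

```python
def conductance_from_resistance(resistance_ohms: float) -> float:
    """Return conductance in siemens using G = 1/R."""
    if resistance_ohms == 0:
        raise ValueError("zero resistance has no finite conductance")
    return 1.0 / resistance_ohms


def resistance_from_conductance(conductance_siemens: float) -> float:
    """Return resistance in ohms using R = 1/G."""
    if conductance_siemens == 0:
        raise ValueError("zero conductance has no finite resistance")
    return 1.0 / conductance_siemens


# A 50-ohm resistor has a conductance of 1/50 S (20 millisiemens).
g = conductance_from_resistance(50.0)
print(g)
# Converting back recovers the original resistance (up to rounding).
print(resistance_from_conductance(g))
```

Because the two quantities are exact reciprocals, converting one way and then back returns the starting value, apart from tiny floating-point rounding.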

The same reciprocal relationship holds for the units: 1 Ω = 1/S and 1 S = 1/Ω, where Ω (the Greek letter omega) is the symbol for the ohm, the unit of resistance, and S is the symbol for the siemens, the unit of conductance. In addition, one siemens is equivalent to one ampere (A) per volt (V).

In other words, when a current of one ampere (1 A) passes through a component across which a voltage of one volt (1 V) exists, the conductance of that component is one siemens (1 S). This can be expressed through the equation G = I/E, where G represents conductance (in siemens), I is the current through the component (in amperes), and E is the voltage across the component (in volts).
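The equation G = I/E, where I is the current in amperes and E the voltage in volts, can be turned into a small calculation. This is a minimal Python sketch; the function name is hypothetical and the input values are made up for illustration, not taken from any real measurement.

```python
def conductance_from_measurement(current_amperes: float, voltage_volts: float) -> float:
    """Return conductance in siemens using G = I / E."""
    if voltage_volts == 0:
        raise ValueError("the voltage across the component must be non-zero")
    return current_amperes / voltage_volts


# The case described above: 1 A flowing with 1 V across the component gives 1 S.
print(conductance_from_measurement(1.0, 1.0))
# An illustrative second case: 0.5 A flowing with 10 V across the component.
print(conductance_from_measurement(0.5, 10.0))
```

The second case works out to 0.5/10 = 0.05 S, which by G = 1/R corresponds to a resistance of 20 ohms.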

Conductance depends on the temperature of the material, but assuming a constant temperature, the conductance of a given component can be calculated.

Measurement:

The SI (International System of Units) derived unit of conductance is the siemens, named after the German inventor and industrialist Ernst Werner von Siemens. Since conductance is the reciprocal of resistance, one siemens equals the reciprocal of one ohm (the unit of electrical resistance named after Georg Simon Ohm). This unit was formerly known as the mho (ohm spelt backwards).

The mho has since been replaced by the siemens, expressed by the symbol S. The factors that affect the magnitude of resistance are exactly the same for conductance, but they affect conductance in the opposite manner. Therefore, conductance is directly proportional to the cross-sectional area of the material and inversely proportional to its length.
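The proportionality constant is not given here; a standard way to write the relationship for a uniform conductor is G = σA/L, where A is the cross-sectional area, L is the length, and σ (sigma) is the material's conductivity in siemens per metre. The following minimal Python sketch assumes that formula, a constant temperature (conductivity changes with temperature), and an approximate conductivity for copper near room temperature; the function name and the wire dimensions are hypothetical.

```python
import math


def conductance_of_conductor(conductivity_s_per_m: float, area_m2: float, length_m: float) -> float:
    """Return conductance in siemens for a uniform conductor, G = sigma * A / L.

    Assumes the temperature is constant, so the conductivity is fixed.
    """
    if area_m2 <= 0 or length_m <= 0:
        raise ValueError("area and length must be positive")
    return conductivity_s_per_m * area_m2 / length_m


# Approximate conductivity of copper near room temperature (an assumed value).
SIGMA_COPPER = 5.96e7  # siemens per metre

# A hypothetical copper wire: 1 mm in diameter and 10 m long.
area = math.pi * (0.5e-3) ** 2  # cross-sectional area in square metres
g = conductance_of_conductor(SIGMA_COPPER, area, 10.0)
print(f"G = {g:.2f} S, R = {1 / g:.4f} ohm")

# Doubling the area doubles the conductance; doubling the length halves it.
print(conductance_of_conductor(SIGMA_COPPER, 2 * area, 10.0) / g)
print(conductance_of_conductor(SIGMA_COPPER, area, 20.0) / g)
```

The last two lines print ratios of 2 and 0.5, which is the direct and inverse proportionality described above.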

We have written many articles about electricity for Universe Today. Here are What are Electrons?, Who Discovered Electricity?, What is Static Electricity?, What is Electromagnetic Induction?, and What are the Uses of Electromagnets?

If you’d like more info on conductance, check out All About Circuits for another article on the subject.

We’ve also recorded an entire episode of Astronomy Cast all about Electromagnetism. Listen here, Episode 103: Electromagnetism.

Sources: