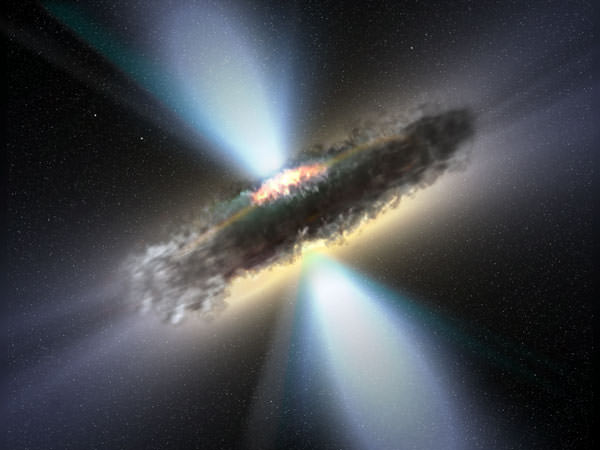

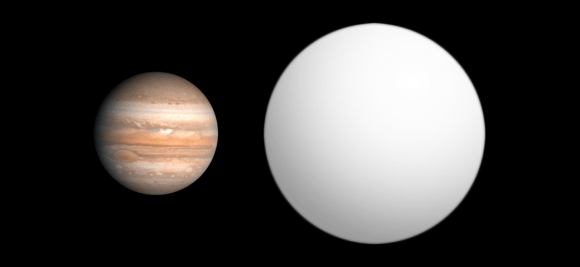

New observations of one of the biggest and hottest known exoplanets in the galaxy, WASP-12b, suggest that it generates a magnetic field powerful enough to divert much of its host star's stellar wind into a bow shock.

As with the discovery of exoplanets themselves, finding an exo-magnetosphere isn't much of a surprise – indeed, it would be a surprise if Jovian-type gas giants didn't have magnetic fields, since the gas giants in our own backyard have quite powerful ones. But, assuming the finding holds up under further scrutiny, it is a first – even if it is just a confirming-what-everyone-had-suspected-all-along first.

WASP-12 is a Sun-like, yellow G-type star about 870 light years from Earth. Its planet WASP-12b orbits at a distance of just 3.4 million km, completing each orbit in only 26 hours. Compare this with Mercury, which takes 88 days to orbit the Sun and is still 46 million km from it even at perihelion, its closest approach.

So habitable zone, this ain’t – but a giant among gas giants ploughing through a dense stellar wind of charged particles sounds like an ideal set of circumstances to look for an exo-magnetosphere.

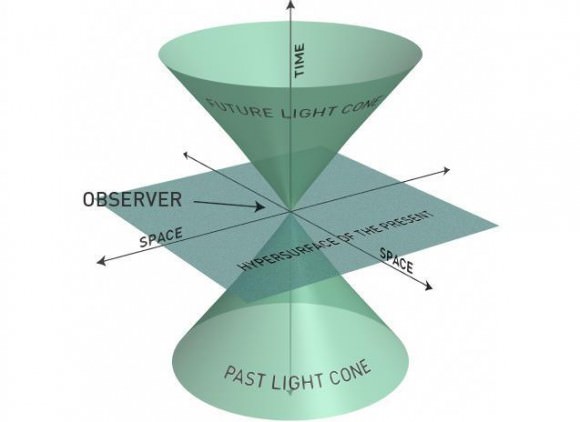

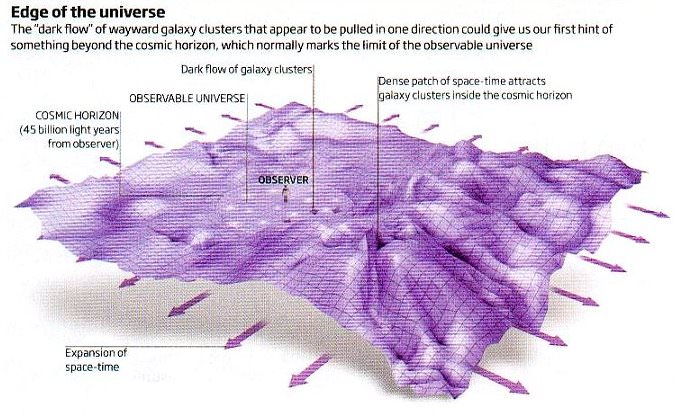

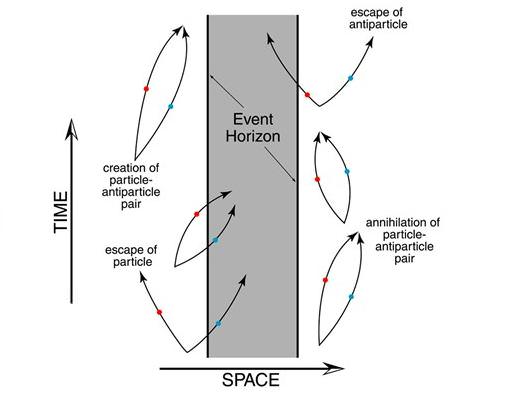

The bow shock was detected as an early dip in the star's ultraviolet light, beginning ahead of the deeper dip produced by the transiting planet itself. Given the planet's rapid orbital speed, some bow wave effect might be expected regardless of whether the planet generates a strong magnetic field. But the data from WASP-12b apparently fit best with a model in which the bow shock is produced by a magnetic field, rather than by a purely dynamic effect of the planet's motion.

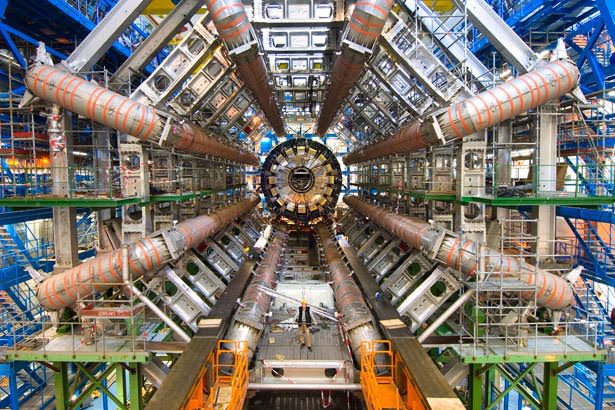

The finding is based on data from the SuperWASP (Wide Angle Search for Planets) project and the Hubble Space Telescope. Team leader Dr. Aline Vidotto of the University of St. Andrews said of the new finding: “The location of this bow shock provides us with an exciting new tool to measure the strength of planetary magnetic fields. This is something that presently cannot be done in any other way.”

Although WASP-12b's magnetic field may be prolonging its life somewhat, by offering some protection from its star's stellar wind – which might otherwise be blowing away its outer layers – the planet is still doomed by the gravitational pull of its nearby host star, which has already been observed drawing material from it. Current estimates are that WASP-12b will be completely consumed in about 10 million years.

There is at least one puzzle here, and it is not really testable from such a distance. A planet so close to its star is probably tidally locked, keeping one face toward the star and turning on its axis only once per 26-hour orbit, more than twice as slowly as Jupiter, which spins once every 10 hours or so. Yet rapid rotation is generally thought to be a key ingredient in generating strong planetary magnetic fields – at least for the planets in our Solar System. This may need something like an OverwhelminglySuperWASP to investigate further.

Further reading: RAS National Astronomy Meeting 2011 press release.