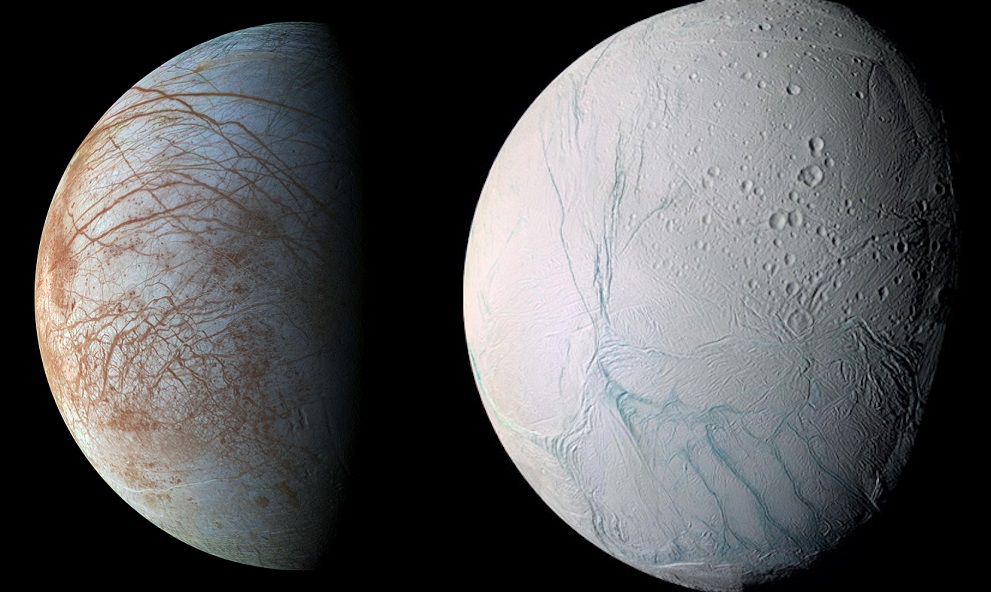

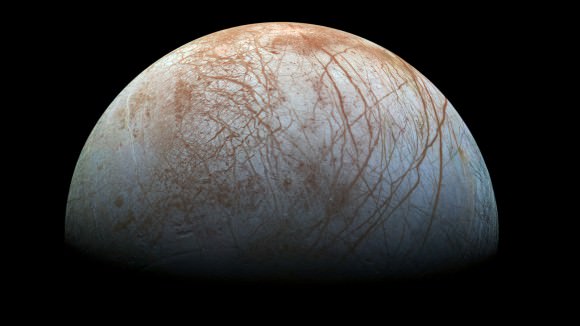

In the hunt for extra-terrestrial life, scientists tend to take what is known as the “low-hanging fruit approach”. This consists of looking for conditions similar to what we experience here on Earth, which include oxygen, organic molecules, and plenty of liquid water. Interestingly enough, some of the places where these ingredients are present in abundance include the interiors of icy moons like Europa, Ganymede, Enceladus and Titan.

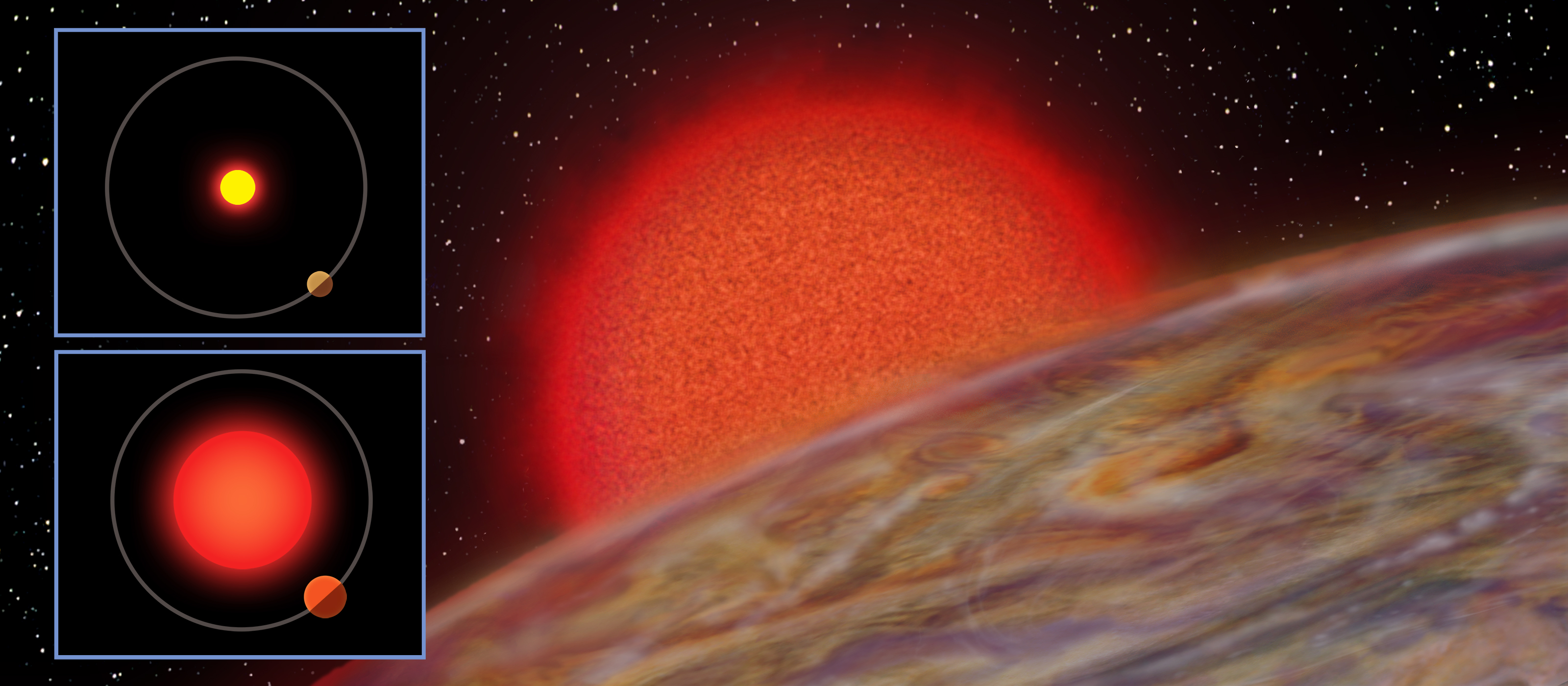

Whereas there is only one terrestrial planet in our Solar System that is capable of supporting life (Earth), there are multiple “Ocean Worlds” like these moons. Taking this a step further, a team of researchers from the Harvard-Smithsonian Center for Astrophysics (CfA) conducted a study showing that potentially habitable icy moons with interior oceans are far more common in the Universe than terrestrial planets.

The study, titled “Subsurface Exolife“, was performed by Manasvi Lingam and Abraham Loeb of the Harvard-Smithsonian Center for Astrophysics (CfA) and the Institute for Theory and Computation (ITC) at Harvard University. For the sake of their study, the authors considered what defines a circumstellar habitable zone (aka. “Goldilocks Zone“) and the likelihood of there being life inside moons with interior oceans.

To begin, Lingam and Loeb address the tendency to confuse habitable zones (HZs) with habitability, or to treat the two concepts as interchangeable. For instance, planets that are located within an HZ are not necessarily capable of supporting life – in this respect, Mars and Venus are perfect examples. Whereas Mars is too cold and its atmosphere too thin to support life, Venus suffered a runaway greenhouse effect that caused it to become a hot, hellish place.

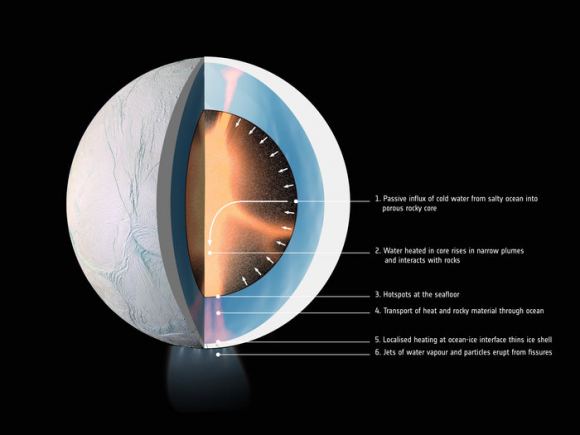

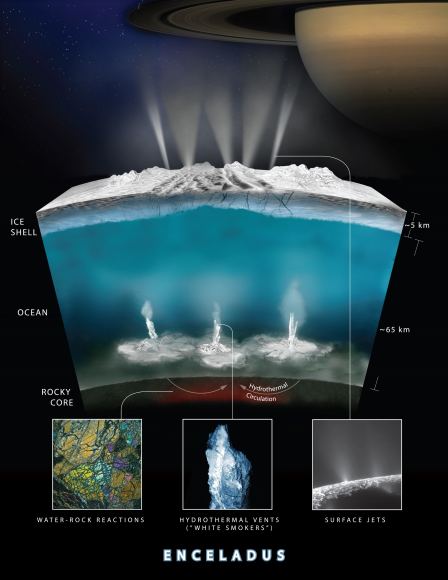

On the other hand, bodies that are located beyond HZs have been found to be capable of having liquid water and the necessary ingredients to give rise to life. In this case, moons such as Europa, Ganymede, Enceladus, Dione, Titan, and several others serve as perfect examples. Thanks to the prevalence of water and to geothermal heating caused by tidal forces, these moons are all thought to have interior oceans that could very well support life.

As Lingam, a postdoctoral researcher at the ITC and CfA and the lead author on the study, told Universe Today via email:

“The conventional notion of planetary habitability is the habitable zone (HZ), namely the concept that the “planet” must be situated at the right distance from the star such that it may be capable of having liquid water on its surface. However, this definition assumes that life is: (a) surface-based, (b) on a planet orbiting a star, and (c) based on liquid water (as the solvent) and carbon compounds. In contrast, our work relaxes assumptions (a) and (b), although we still retain (c).”

As such, Lingam and Loeb widen their consideration of habitability to include worlds that could have subsurface biospheres. Such environments go beyond icy moons such as Europa and Enceladus and could include many other types of deep subterranean environments. On top of that, it has also been speculated that life could exist in Titan’s methane lakes (i.e. methanogenic organisms). However, Lingam and Loeb chose to focus on icy moons instead.

“Even though we consider life in subsurface oceans under ice/rock envelopes, life could also exist in hydrated rocks (i.e. with water) beneath the surface; the latter is sometimes referred to as subterranean life,” said Lingam. “We did not delve into the second possibility since many of the conclusions (but not all of them) for subsurface oceans are also applicable to these worlds. Similarly, as noted above, we do not consider lifeforms based on exotic chemistries and solvents, since it is not easy to predict their properties.”

Ultimately, Lingam and Loeb chose to focus on worlds that would orbit stars and likely contain subsurface life that humanity would be capable of recognizing. They then went about assessing the likelihood that such bodies are habitable, what advantages and challenges life would have to deal with in these environments, and the likelihood of such worlds existing beyond our Solar System (compared to potentially habitable terrestrial planets).

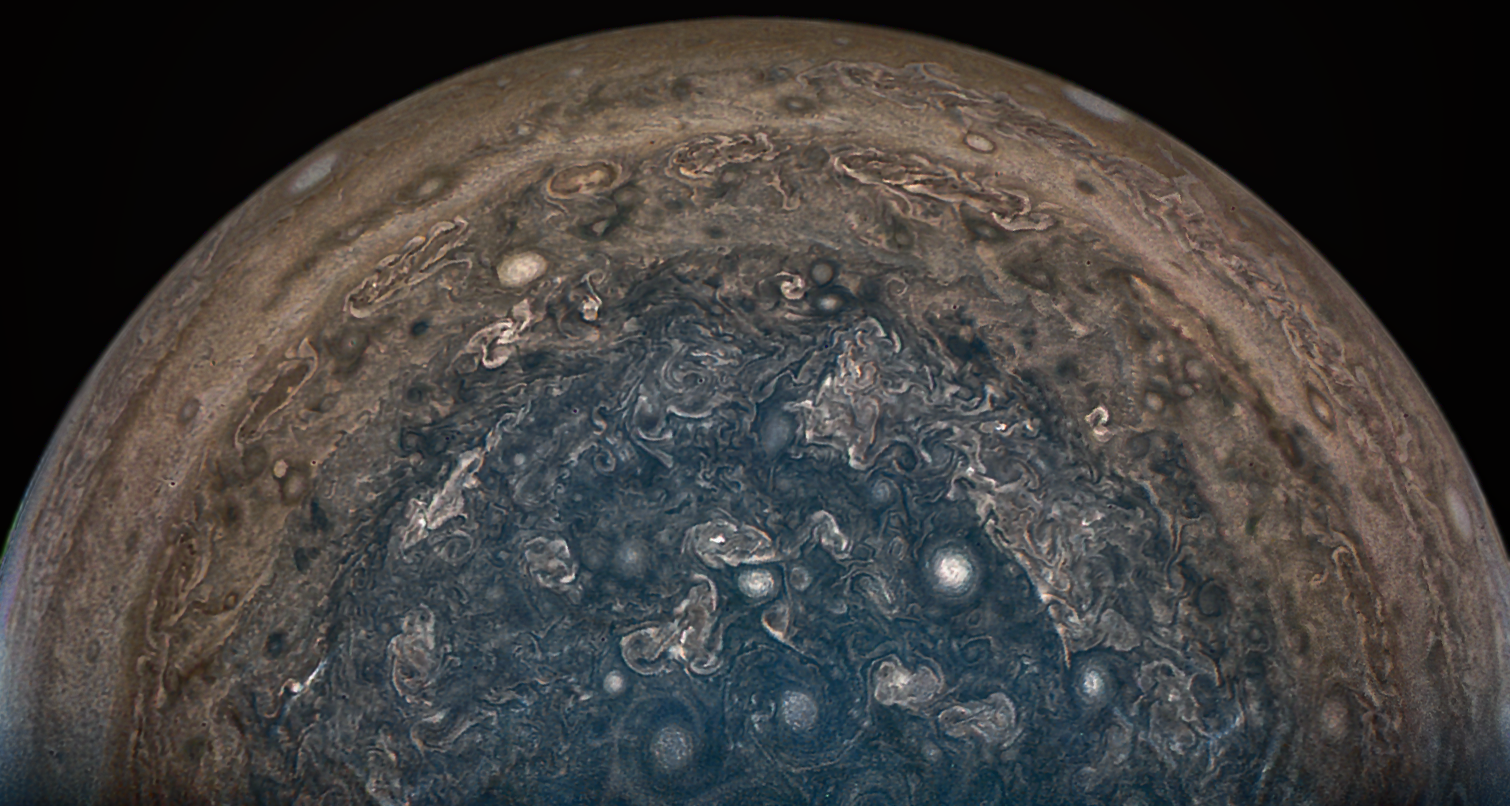

For starters, “Ocean Worlds” have several advantages when it comes to supporting life. Within the Jovian system (Jupiter and its moons), radiation is a major problem, the result of charged particles becoming trapped in the gas giant’s powerful magnetic field. Between that and the moons’ tenuous atmospheres, life would have a very hard time surviving on the surface, but life dwelling beneath the ice would fare far better.

“One major advantage that icy worlds have is that the subsurface oceans are mostly sealed off from the surface,” said Lingam. “Hence, UV radiation and cosmic rays (energetic particles), which are typically detrimental to surface-based life in high doses, are unlikely to affect putative life in these subsurface oceans.”

“On the negative side,” he continued, “the absence of sunlight as a plentiful energy source could lead to a biosphere that has far less organisms (per unit volume) than Earth. In addition, most organisms in these biospheres are likely to be microbial, and the probability of complex life evolving may be low compared to Earth. Another issue is the potential availability of nutrients (e.g. phosphorus) necessary for life; we suggest that these nutrients might be available only in lower concentrations than Earth on these worlds.”

In the end, Lingam and Loeb determined that worlds with ice shells of moderate thickness may exist in a wide range of habitats throughout the cosmos. Based on how statistically likely such worlds are, they concluded that “Ocean Worlds” like Europa, Enceladus, and others like them are about 1000 times more common than rocky planets that exist within the HZs of stars.

These findings have some drastic implications for the search for extra-terrestrial and extra-solar life. They also have significant implications for how life may be distributed throughout the Universe. As Lingam summarized:

“We conclude that life on these worlds will undoubtedly face noteworthy challenges. However, on the other hand, there is no definitive factor that prevents life (especially microbial life) from evolving on these planets and moons. In terms of panspermia, we considered the possibility that a free-floating planet containing subsurface exolife could be temporarily “captured” by a star, and that it may perhaps seed other planets (orbiting that star) with life. As there are many variables involved, not all of them can be quantified accurately.”

… is being developed to find evidence of life on other worlds. Credit: NASA/Jenny Mottor

Professor Loeb – the Frank B. Baird Jr. Professor of Science at Harvard University, the director of the ITC, and the study’s co-author – added that finding examples of this life presents its own share of challenges. As he told Universe Today via email:

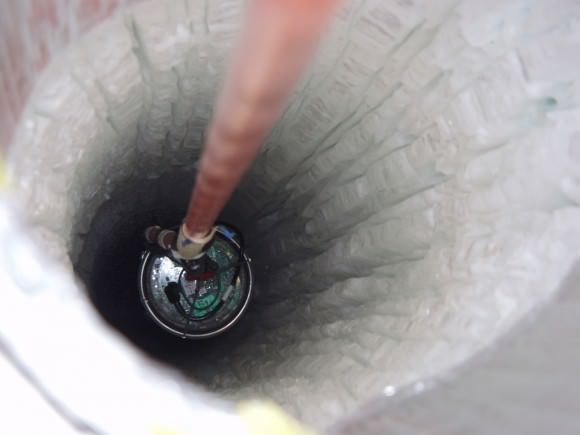

“It is very difficult to detect sub-surface life remotely (from a large distance) using telescopes. One could search for excess heat but that can result from natural sources, such as volcanos. The most reliable way to find sub-surface life is to land on such a planet or moon and drill through the surface ice sheet. This is the approach contemplated for a future NASA mission to Europa in the solar system.”

Exploring the implications for panspermia further, Lingam and Loeb also considered what might happen if a planet like Earth were ever ejected from the Solar System. As they note in their study, previous research has indicated that planets with thick atmospheres or subsurface oceans could still support life while floating in interstellar space. As Loeb explained:

“An interesting question is what would happen to the Earth if it was ejected from the solar system into cold space without being warmed by the Sun. We have found that the oceans would freeze down to a depth of 4.4 kilometers but pockets of liquid water would survive in the deepest regions of the Earth’s ocean, such as the Mariana Trench, and life could survive in these remaining sub-surface lakes. This implies that sub-surface life could be transferred between planetary systems.”

This study also serves as a reminder that as humanity explores more of the Solar System (largely for the sake of finding extra-terrestrial life), what we find also has implications for the hunt for life in the rest of the Universe. This is one of the benefits of the “low-hanging fruit” approach: what we don’t know is informed by what we do, and what we find helps shape our expectations of what else we might find.

And of course, it’s a very vast Universe out there. What we may find is likely to go far beyond what we are currently capable of recognizing!

Further Reading: arXiv