In today’s world, human activity relies heavily on electrical infrastructure. If the power grids go down, our climate control systems will shut off, our computers will die, and all electronic forms of commerce and communication will cease. In addition, human activity in the 21st century is becoming increasingly dependent on infrastructure located in Low Earth Orbit (LEO).

Aside from the many telecommunications satellites currently in space, there are also the International Space Station and a fleet of GPS satellites. It is for this reason that solar flare activity is considered a serious hazard, and mitigating it a priority. Looking to address that, a team of scientists from Harvard University recently released a study that proposes a bold solution: placing a giant magnetic shield in orbit.

The study, which was the work of Dr. Manasvi Lingam and Professor Abraham Loeb from the Harvard-Smithsonian Center for Astrophysics (CfA), recently appeared online under the title “Impact and Mitigation Strategy for Future Solar Flares”. As they explain, solar flares pose a particularly grave risk in today’s world, and will become an even greater threat due to humanity’s growing presence in LEO.

Solar flares have been a going concern for over 150 years, ever since the famous Carrington Event of 1859. Since then, a great deal of effort has been dedicated to studying solar flares from both theoretical and observational standpoints. And thanks to the advances made in astronomy and space exploration over that period, much has been learned about the phenomenon known as “space weather”.

At the same time, humanity’s increased reliance on electricity and space-based infrastructure has also made us more vulnerable to extreme space weather events. In fact, if the Carrington Event were to take place today, it is estimated that it would cause worldwide damage to electric power grids, satellite communications, and supply chains.

The cumulative worldwide economic losses, according to a 2009 report by the Space Studies Board (“Severe Space Weather Events–Understanding Societal and Economic Impacts”), would be $10 trillion, and recovery would take several years. And yet, as Professor Loeb explained to Universe Today via email, this threat from space has received far less attention than other possible threats.

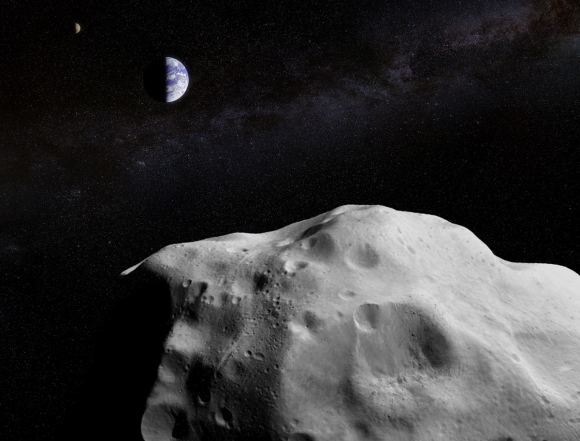

“In terms of risk from the sky, most of the attention in the past was dedicated to asteroids,” said Loeb. “They killed the dinosaurs and their physical impact in the past was the same as it will be in the future, unless their orbits are deflected. However, solar flares have little biological impact and their main impact is on technology. But a century ago, there was not much technological infrastructure around, and technology is growing exponentially. Therefore, the damage is highly asymmetric between the past and future.”

To address this, Lingam and Loeb developed a simple mathematical model to assess the economic losses caused by solar flare activity over time. This model estimated the increasing risk of damage to technological infrastructure based on two factors. First, they considered that the energy of the most powerful flare one can expect grows with the length of the time span considered; they then coupled this with the exponential growth of technology and GDP.
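The article does not reproduce Lingam and Loeb’s equations, so the following is only a minimal toy sketch of the kind of model described above, not the authors’ actual model. It assumes that flare energies follow a power law with a hypothetical index `ALPHA`, that a Carrington-class flare occurs roughly once every 150 years (hypothetical `RATE_CARRINGTON`), that such a flare would cost the $10 trillion figure cited above if it struck today (`DAMAGE_TODAY`), that damage scales linearly with flare energy (hypothetical `BETA`), and that technology grows at a hypothetical 3 percent per year (`GROWTH`). All function and parameter names are illustrative inventions.

```python
import math

# All parameter values are hypothetical placeholders for illustration,
# NOT the values used by Lingam and Loeb.
RATE_CARRINGTON = 1 / 150  # assumed rate of Carrington-class flares, per year
DAMAGE_TODAY = 10e12       # assumed cost (USD) of a Carrington-class flare today
ALPHA = 1.8                # assumed index of the power-law flare energy distribution
BETA = 1.0                 # assumed scaling of damage with flare energy
GROWTH = 0.03              # assumed annual growth rate of technology/GDP


def largest_flare_energy(t_years, rate=RATE_CARRINGTON, alpha=ALPHA):
    """Energy (in Carrington units) of the largest flare expected within t_years.

    If flares above energy E occur at rate * E**-(alpha - 1) per year,
    roughly one such flare is expected once rate * E**-(alpha - 1) * t = 1.
    """
    return (rate * t_years) ** (1.0 / (alpha - 1.0))


def damage_of_largest_flare(t_years, damage_today=DAMAGE_TODAY, beta=BETA,
                            growth=GROWTH, **kwargs):
    """Damage (USD) from the largest expected flare, valued at year t_years."""
    energy = largest_flare_energy(t_years, **kwargs)
    # Bigger flares cause more damage, and there is exponentially more to damage.
    return damage_today * energy ** beta * math.exp(growth * t_years)


def years_until_damage(threshold, t_max=1000.0, tol=1e-6, **kwargs):
    """Bisect for the first year at which the expected damage reaches threshold.

    Damage rises monotonically with time in this toy model, so bisection on
    [0, t_max] is valid. Returns None if the threshold is never reached.
    """
    if damage_of_largest_flare(t_max, **kwargs) < threshold:
        return None
    lo, hi = 0.0, t_max
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if damage_of_largest_flare(mid, **kwargs) < threshold:
            lo = mid
        else:
            hi = mid
    return hi
```

For example, `years_until_damage(20e12)` asks when the largest expected flare would cause damage on the scale of today’s US GDP. Changing `GROWTH` or `ALPHA` shifts the answer considerably, which is why the output of a toy model like this should not be read as a reproduction of the authors’ forecast.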

They found that on longer time scales, the rare, very powerful types of solar flares become much more likely. Coupled with humanity’s growing presence in LEO and dependence on spacecraft and satellites, this will add up to a dangerous conjunction somewhere down the road. Or as Loeb explained:

“We predict that within ~150 years, there will be an event that causes damage comparable to the current US GDP of ~20 trillion dollars, and the damage will increase exponentially at later times until technological development will saturate. Such a forecast was never attempted before. We also suggest a novel idea for how to reduce the damage from energetic particles by a magnetic shield. This was my idea and was not proposed before.”

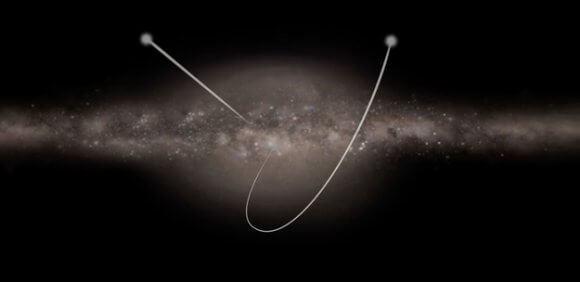

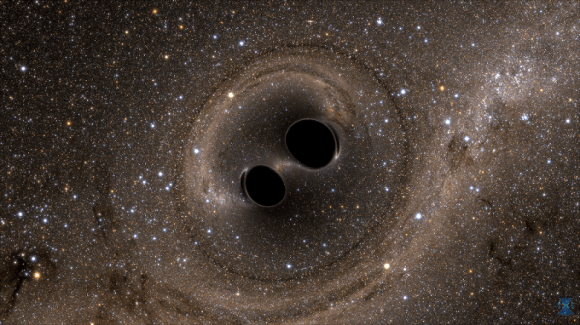

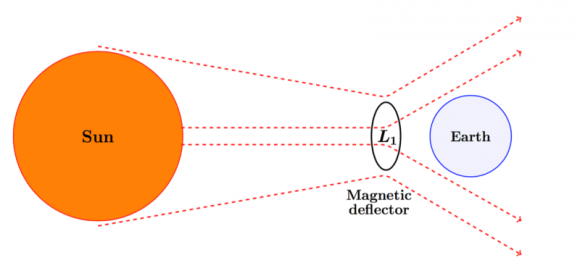

To address this growing risk, Lingam and Loeb also considered the possibility of placing a magnetic shield between Earth and the Sun. This shield would be placed at the Earth-Sun Lagrange Point 1 (L1), where it would be able to deflect charged particles and create an artificial bow shock around Earth. In this sense, the shield would protect Earth in a way similar to what its magnetic field already does, but to greater effect.

Based on their assessment, Lingam and Loeb indicate that such a shield is technically feasible in terms of its basic physical parameters. They were also able to provide a rudimentary timeline for its construction, as well as rough cost estimates. As Loeb indicated, such a shield could be built before the end of this century, and at a fraction of the cost of the damage solar flares would otherwise cause.

“The engineering project associated with the magnetic shield that we propose could take a few decades to construct in space,” he said. “The cost for lifting the needed infrastructure to space (weighing 100,000 tons) will likely be of order 100 billion dollars, much less than the expected damage over a century.”

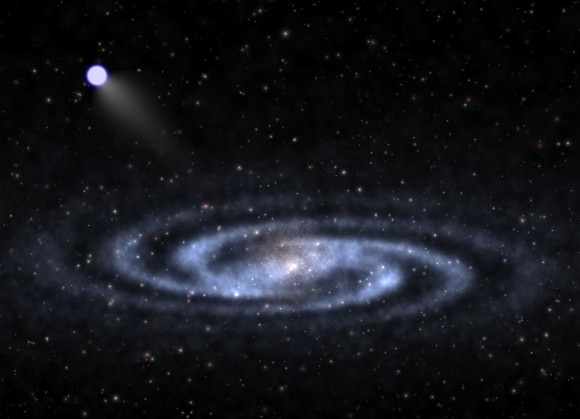

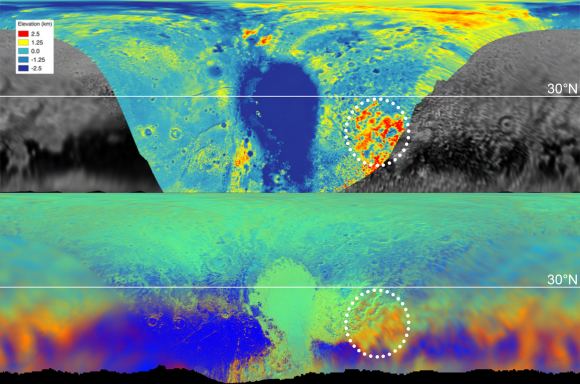

Interestingly enough, the idea of using a magnetic shield to protect planets has been proposed before. For example, this type of shield was the subject of a presentation at this year’s “Planetary Science Vision 2050 Workshop”, which was hosted by NASA’s Planetary Science Division (PSD). There, the shield was recommended as a means of enhancing Mars’ atmosphere and facilitating crewed missions to its surface in the future.

During the presentation, titled “A Future Mars Environment for Science and Exploration”, Jim Green, Director of NASA’s Planetary Science Division, discussed how a magnetic shield could protect Mars’ tenuous atmosphere from the solar wind. This would allow the atmosphere to replenish over time, which would have the added benefit of warming Mars up and allowing liquid water to again flow on its surface. If this sounds similar to proposals for terraforming Mars, that’s because it is!

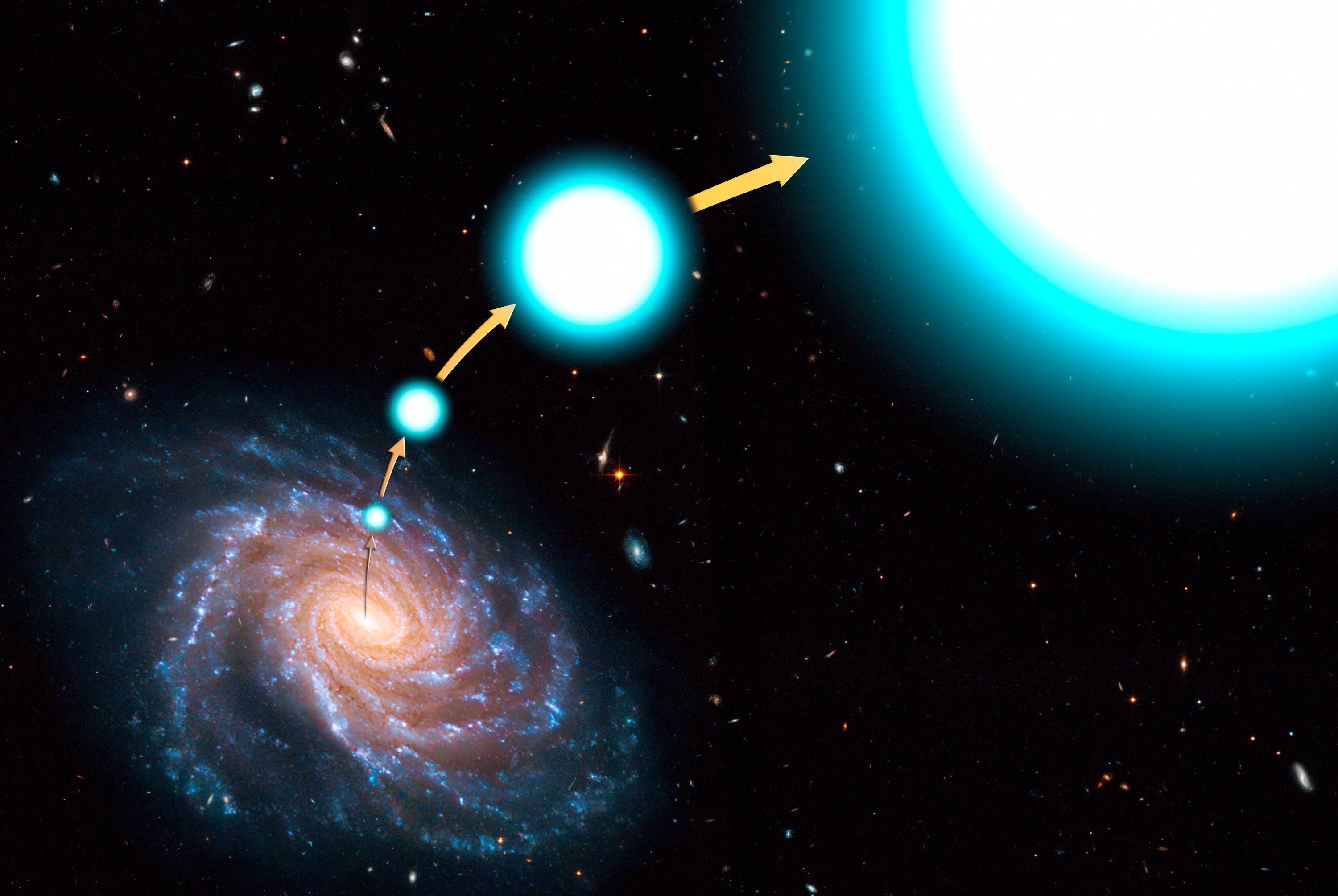

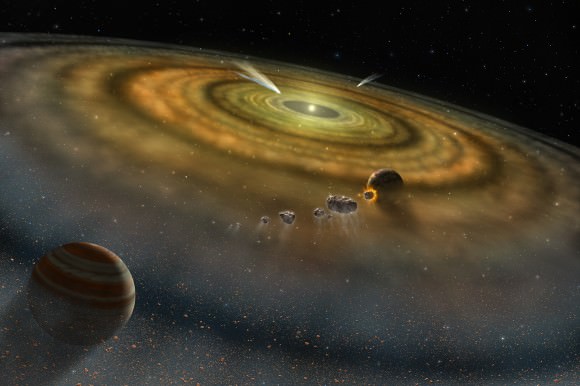

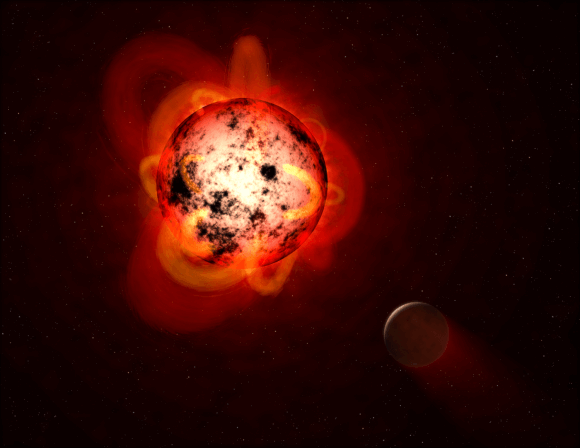

Beyond Earth and the Solar System, the implications of this study are far-reaching. In recent years, many terrestrial planets have been found orbiting nearby M-type (aka. red dwarf) stars. Because these planets orbit close to their host stars, and because M-type stars are variable and unstable, scientists have expressed doubts about whether these planets could actually be habitable.

In short, scientists have ventured that over the course of billions of years, rocky planets that orbit close to their stars, are tidally locked with them, and are subject to regular flares would lose their atmospheres. In this respect, magnetic shields could be a possible solution to creating extra-solar colonies. Place a large shield in orbit at the planet’s L1 Lagrange point, and you never have to worry again about powerful magnetic storms ravaging the planet!

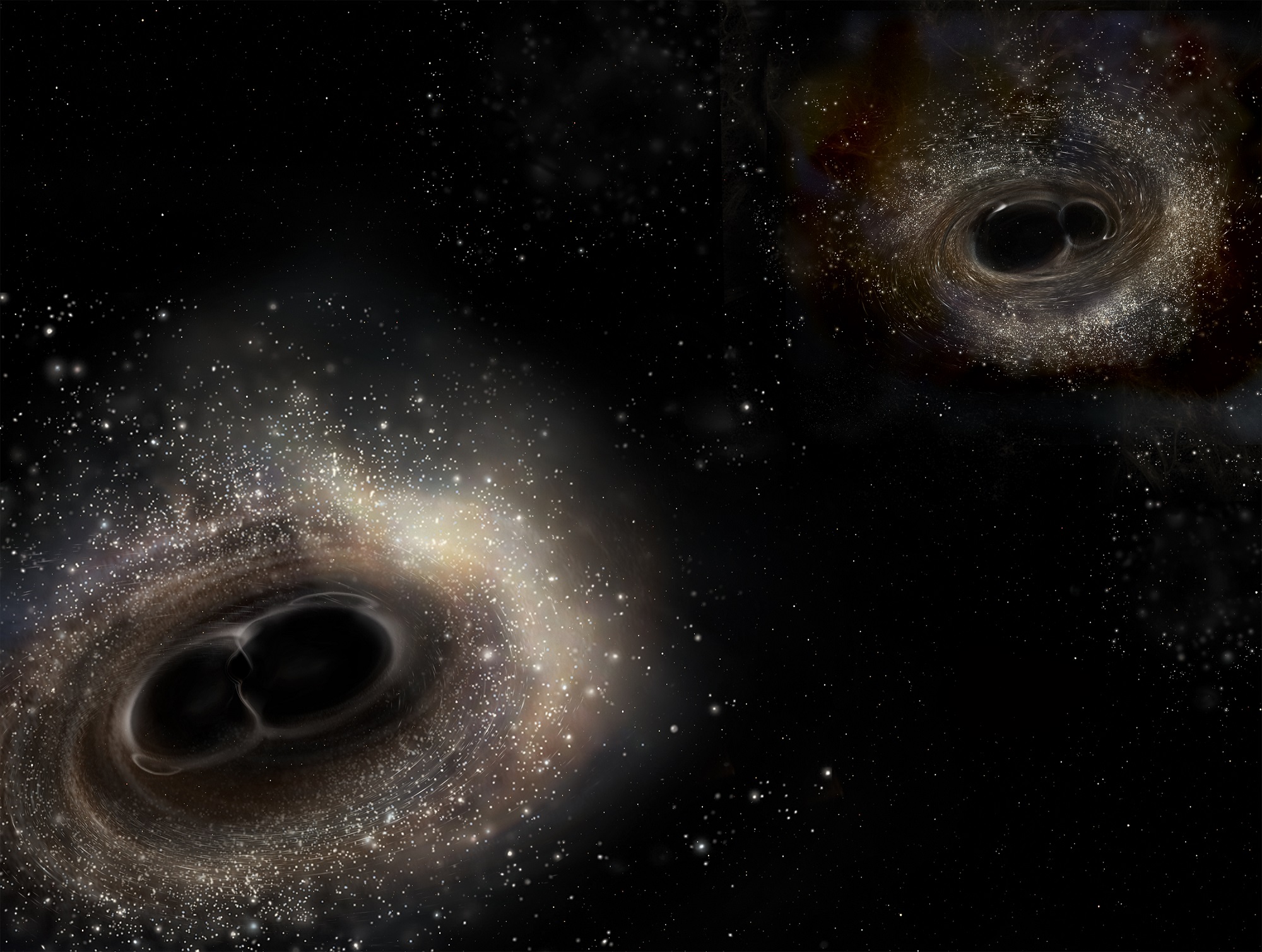

On top of that, this study has implications for the Fermi Paradox. When looking for signs of Extra-Terrestrial Intelligence (ETI), it might make sense to monitor distant stars for signs of an orbiting magnetic shield. As Prof. Loeb explained, such structures could leave a detectable imprint on their host stars, one that might resemble some of the unusual observations astronomers have already made:

“The imprint of a shield built by another civilization could involve the changes it induces in the brightness of the host star due to occultation (similar behavior to Tabby’s star) if the structure is big enough. The situation could be similar to Dyson’s spheres, but instead of harvesting the energy of the star the purpose of the infrastructure is to protect a technological civilization on a planet from the flares of its host star.”

Further Reading: arXiv