Computer illustration of a binary star. Image credit: Carnegie Institution.

New theoretical work shows that gas-giant planet formation can occur around binary stars in much the same way that it occurs around single stars like the Sun. The work is presented today by Dr. Alan Boss of the Carnegie Institution’s Department of Terrestrial Magnetism (DTM) at the American Astronomical Society meeting in Washington, DC. The results suggest that gas-giant planets, like Jupiter, and habitable Earth-like planets could be more prevalent than previously thought. A paper describing these results has been accepted for publication in the Astrophysical Journal.

“We tend to focus on looking for other solar systems around stars just like our Sun,” Boss says. “But we are learning that planetary systems can be found around all sorts of stars, from pulsars to M dwarfs with only one third the mass of our Sun.”

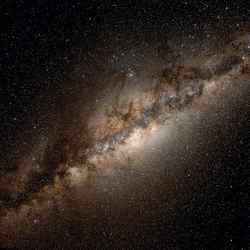

Two out of every three stars in the Milky Way are members of a binary or multiple star system, in which the stars orbit each other with separations that range from nearly in contact (close binaries) to thousands of astronomical units or more (wide binaries). Most binaries have separations similar to the distance from the Sun to Neptune (~30 AU, where 1 AU = 1 astronomical unit = 150 million kilometers, the distance from the Earth to the Sun).
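To put the typical binary separation in everyday units, the short sketch below converts 30 AU to kilometers using the rounded figure quoted above (1 AU = 150 million kilometers). The names `KM_PER_AU` and `au_to_km` are illustrative only and do not come from the release.

```python
# Rounded conversion factor quoted in the article: 1 AU = 150 million km.
KM_PER_AU = 150e6


def au_to_km(distance_au: float) -> float:
    """Convert a distance in astronomical units to kilometers."""
    return distance_au * KM_PER_AU


# Typical binary separation, about the Sun-Neptune distance (~30 AU).
typical_binary_separation_km = au_to_km(30)
print(f"{typical_binary_separation_km:.2e} km")  # 4.50e+09 km
```

With that rounded factor, a typical binary separation comes out to about 4.5 billion kilometers.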

It has not been clear whether planetary system formation could occur in typical binary star systems, where the strong gravitational forces from one star might interfere with the planet formation processes around the other star, and vice versa. Previous theoretical work had suggested, in fact, that typical binary stars would not be able to form planetary systems. However, planet hunters have recently found a number of gas-giant planets in orbit around binary stars with a range of separations.

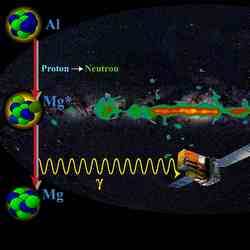

Boss found that if the shock heating resulting from the gravitational forces from the companion star is weak, then gas-giant planets are able to form in planet-forming disks in much the same way as they do around single stars. The planet-forming disk would remain cool enough for ice grains to stay solid and thus permit the growth of the solid cores that must reach multiple-Earth-mass size for the conventional mechanism of gas-giant planet formation (core accretion) to succeed.

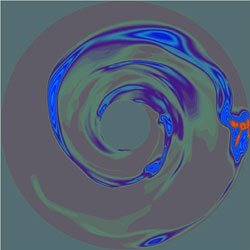

Boss’ models show even more directly that the alternative mechanism for gas-giant planet formation (disk instability) can proceed just as well in binary star systems as around single stars, and in fact may even be encouraged by the gravitational forces of the other star. In Boss’ new models, the planet-forming disk in orbit around one of the stars is quickly driven to form dense spiral arms, within which self-gravitating clumps of gas and dust form and begin the process of contracting down to planetary sizes. The process is amazingly rapid, requiring less than 1,000 years for dense clumps to form in an otherwise featureless disk. There would be plenty of room for Earth-like planets to form closer to the central star after the gas-giant planets have formed, in much the same way our own planetary system is thought to have formed.

Boss points out, “This result may have profound implications in that it increases the likelihood of the formation of planetary systems resembling our own, because binary stars are the rule in our galaxy, not the exception.” If binary stars can shelter planetary systems composed of outer gas-giant planets and inner Earth-like planets, then other habitable worlds become roughly three times more likely: up to three times as many stars could be possible hosts for planetary systems similar to our own. NASA’s plans to search for and characterize Earth-like planets in the next decade would then be that much more likely to succeed.
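The factor of three follows from the statistic that two out of every three stars belong to binary or multiple systems. The sketch below works through that arithmetic. It assumes, as an upper bound, that stars in binaries are just as likely to host such systems as single stars; the variable names are illustrative and not taken from the release.

```python
from fractions import Fraction

# From the article: two out of every three stars are in binary or multiple systems.
binary_fraction = Fraction(2, 3)
single_fraction = 1 - binary_fraction  # the remaining one third are single stars

# Assumption (upper bound): binary members are as suitable as single stars.
# Then the pool of possible hosts grows from single stars only to all stars.
host_increase = (single_fraction + binary_fraction) / single_fraction
print(host_increase)  # 3
```

Any process that rules out planet formation in some binaries, such as strong shock heating, would lower this factor, which is why the release says "up to" three times.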

One of the key remaining questions about the theoretical models is the correct amount of shock heating inside the planet-forming disk, as well as the more general question of how rapidly the disk is able to cool. Boss and other researchers are actively working to better understand these heating and cooling processes. Given the growing observational evidence for gas-giant planets in binary star systems, the new results suggest that shock heating in binary disks cannot be too large, or it would prevent gas-giant planet formation.

Original Source: Carnegie News Release