Three candidate missions: Euclid, to address key questions relevant to fundamental physics and cosmology, namely the nature of the mysterious dark energy and dark matter; PLATO, to measure the frequency of exoplanets around other stars, including Earth analogs; and Solar Orbiter, to take the closest look at our Sun yet possible, approaching to just 62 solar radii … but only two can be selected! What would be your picks?

These three mission concepts have been chosen by the European Space Agency’s Science Programme Committee (SPC) as candidates for two medium-class missions to be launched no earlier than 2017. They now enter the definition phase, the next step required before the final decision is taken as to which missions are implemented.

These three missions are the finalists from 52 proposals that were either made or carried forward in 2007. They were whittled down to just six mission proposals in 2008 and sent for industrial assessment. Now that the reports from those studies are in, the missions have been pared down again. “It was a very difficult selection process. All the missions contained very strong science cases,” says Lennart Nordh of the Swedish National Space Board, chair of the SPC.

And the tough decisions are not yet over. Only two of the three missions (Euclid, PLATO and Solar Orbiter) can be selected for the M-class launch slots. All three present challenges that will have to be resolved in the definition phase. One specific challenge, of which the SPC was conscious, is whether these missions can fit within the available budget. The final decision about which missions to implement will be taken after the definition activities are completed, which is foreseen for mid-2011.

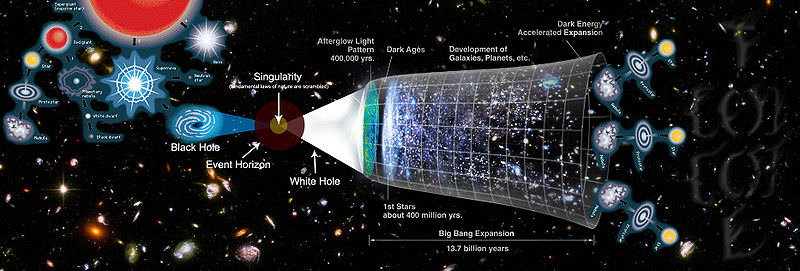

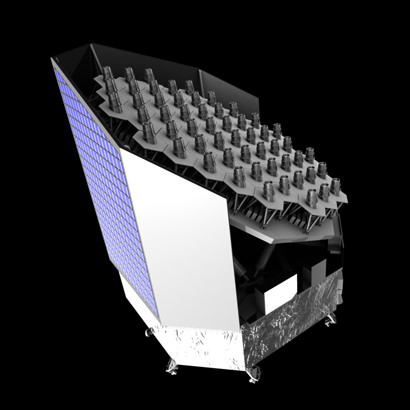

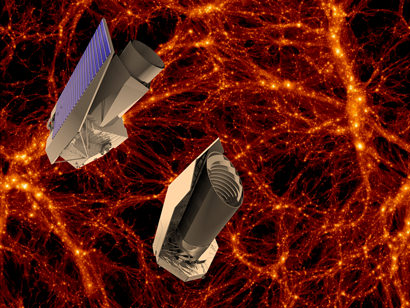

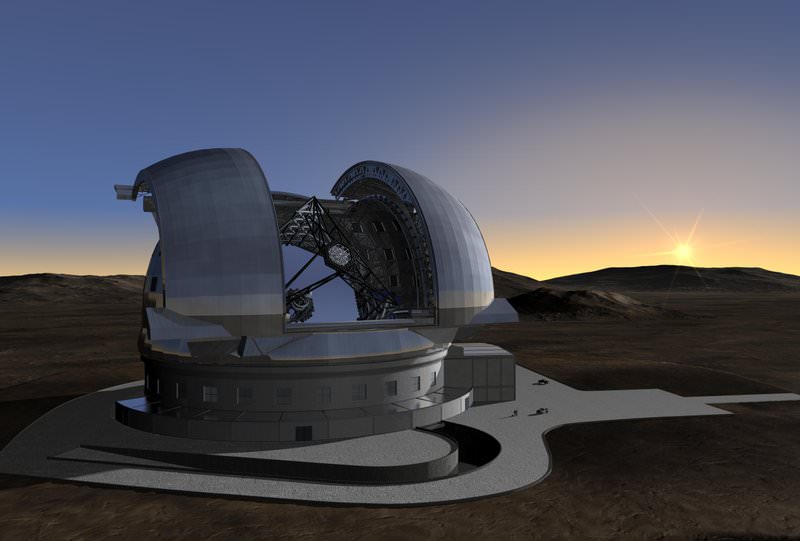

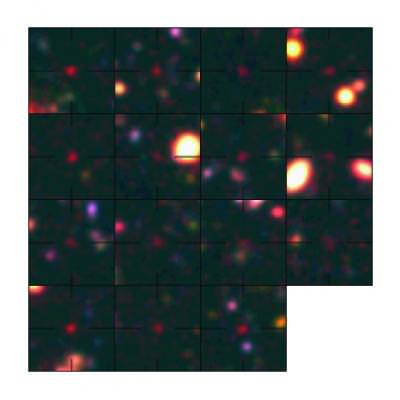

Euclid is an ESA mission to map the geometry of the dark Universe. The mission would investigate the distance-redshift relationship and the evolution of cosmic structures. It would achieve this by measuring shapes and redshifts of galaxies and clusters of galaxies out to redshifts ~2, or equivalently to a look-back time of 10 billion years. It would therefore cover the entire period over which dark energy played a significant role in accelerating the expansion.
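As a rough check on the statement that redshift ~2 corresponds to a look-back time of about 10 billion years, here is a minimal sketch that integrates the look-back time in a flat Lambda-CDM model. The cosmological parameters (H0 = 70 km/s/Mpc, matter density 0.3) are illustrative assumptions, not values from the article or from the Euclid mission, and the function names are hypothetical.

```python
import math

# Assumed, illustrative cosmology (not taken from the article):
H0_KM_S_MPC = 70.0          # Hubble constant in km/s/Mpc
OMEGA_M = 0.3               # matter density parameter
OMEGA_L = 1.0 - OMEGA_M     # dark energy density (flat universe)

KM_PER_MPC = 3.0856775814913673e19
SECONDS_PER_GYR = 3.15576e16  # one billion Julian years


def hubble_time_gyr(h0=H0_KM_S_MPC):
    """Return 1/H0 expressed in billions of years."""
    return KM_PER_MPC / h0 / SECONDS_PER_GYR


def lookback_time_gyr(z, steps=10_000):
    """Look-back time to redshift z via midpoint-rule integration.

    t_L = (1/H0) * integral_0^z dz' / ((1 + z') * E(z')),
    with E(z) = sqrt(OMEGA_M * (1 + z)^3 + OMEGA_L).
    """
    dz = z / steps
    total = 0.0
    for i in range(steps):
        zi = (i + 0.5) * dz  # midpoint of the i-th slice
        e = math.sqrt(OMEGA_M * (1.0 + zi) ** 3 + OMEGA_L)
        total += dz / ((1.0 + zi) * e)
    return hubble_time_gyr() * total


if __name__ == "__main__":
    print(f"Look-back time to z = 2: {lookback_time_gyr(2.0):.1f} Gyr")
```

With these assumed parameters the result comes out a little over 10 billion years, in line with the figure quoted above. Different parameter choices shift the number by a few percent.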

By approaching as close as 62 solar radii, Solar Orbiter would view the solar atmosphere with high spatial resolution and combine this with measurements made in-situ. Over the extended mission periods Solar Orbiter would deliver images and data that would cover the polar regions and the side of the Sun not visible from Earth. Solar Orbiter would coordinate its scientific mission with NASA’s Solar Probe Plus within the joint HELEX program (Heliophysics Explorers) to maximize their combined science return.
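To put 62 solar radii in more familiar units, the short sketch below converts the distance to kilometres and astronomical units. It assumes the nominal solar radius of 695,700 km and the standard value of the astronomical unit; the function names are hypothetical and only for illustration.

```python
# Assumed constants (standard nominal values, not from the article):
SOLAR_RADIUS_KM = 695_700.0   # nominal solar radius
AU_KM = 149_597_870.7         # astronomical unit


def solar_radii_to_km(n_radii):
    """Convert a distance in solar radii to kilometres."""
    return n_radii * SOLAR_RADIUS_KM


def solar_radii_to_au(n_radii):
    """Convert a distance in solar radii to astronomical units."""
    return solar_radii_to_km(n_radii) / AU_KM


if __name__ == "__main__":
    km = solar_radii_to_km(62)
    au = solar_radii_to_au(62)
    print(f"62 solar radii = {km / 1e6:.1f} million km = {au:.2f} AU")
```

Under these assumptions the closest approach works out to roughly 43 million km, or a little under 0.3 AU.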

PLATO (PLAnetary Transit and Oscillations of stars) would discover and characterize a large number of close-by exoplanetary systems, with a precision in the determination of mass and radius of 1%.

In addition, the SPC has decided to consider, at its next meeting in June, whether to also select a European contribution to the SPICA mission.

SPICA would be an infrared space telescope led by the Japanese Space Agency JAXA. It would provide ‘missing-link’ infrared coverage in the region of the spectrum between that seen by the ESA-NASA Webb telescope and the ground-based ALMA telescope. SPICA would focus on the conditions for planet formation and distant young galaxies.

“These missions continue the European commitment to world-class space science,” says David Southwood, ESA Director of Science and Robotic Exploration. “They demonstrate that ESA’s Cosmic Vision programme is still clearly focused on addressing the most important space science.”

Source: ESA chooses three scientific missions for further study

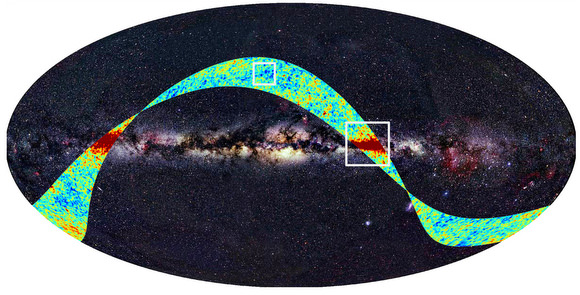

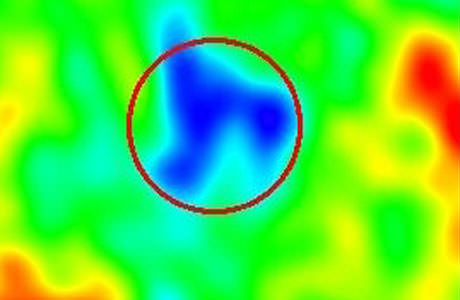

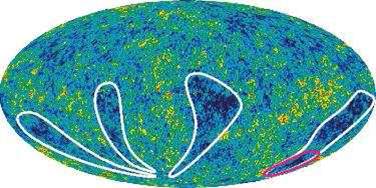

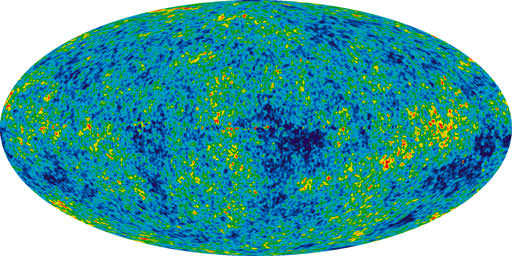

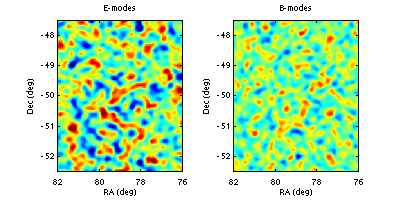

measurements come closer to fitting what is predicted by the Standard Cosmological Model by more than an order of magnitude, said Dr. Gear. This is a very important step on the path to verifying whether our model of the Universe is correct.