
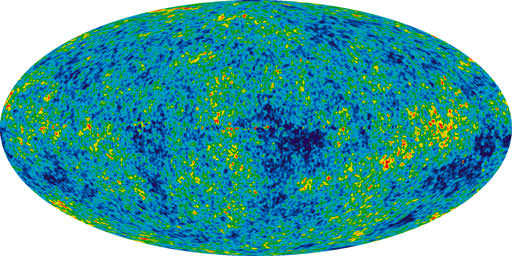

The Wilkinson Microwave Anisotropy Probe (WMAP) science team has finished analyzing seven full years of data from the little probe that could, and once again it seems the universe can be summed up in six parameters and a model.

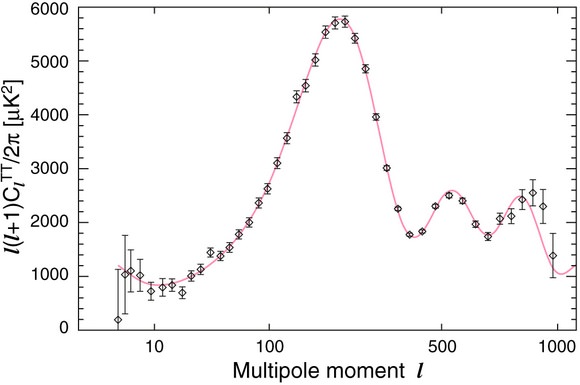

Combining the seven-year WMAP data with recent results on the large-scale distribution of galaxies and an updated estimate of the Hubble constant, the team finds that the present-day age of the universe is 13.75 (+/- 0.11) billion years. Dark energy comprises 72.8% (+/- 1.5%) of the universe’s mass-energy, baryons 4.56% (+/- 0.16%), and non-baryonic cold dark matter (CDM) 22.7% (+/- 1.4%); the redshift of reionization is 10.4 (+/- 1.2).
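As a quick sanity check on these numbers, here is a minimal sketch that adds up the three density fractions and estimates the age of a flat universe containing only matter and a cosmological constant. The density fractions are the central values quoted above; the Hubble constant of 70.4 km/s/Mpc is an assumption for illustration, since this article does not quote the value the team used. Radiation is neglected, which shifts the age only slightly.

```python
import math

# Central values quoted in the text, as fractions of the total mass-energy
OMEGA_LAMBDA = 0.728   # dark energy
OMEGA_B = 0.0456       # baryons
OMEGA_C = 0.227        # cold dark matter (CDM)

# ASSUMPTION: H0 is not quoted in the article; 70.4 km/s/Mpc is used for illustration.
H0_KM_S_MPC = 70.4

# 1 / (1 km/s/Mpc) expressed in Gyr: (1 Mpc in km) / (1 Gyr in s)
GYR_PER_INVERSE_KM_S_MPC = 977.792


def total_density(omega_lambda, omega_b, omega_c):
    """Sum of the density fractions; a flat universe has a total of 1."""
    return omega_lambda + omega_b + omega_c


def flat_lcdm_age_gyr(h0, omega_lambda):
    """Age of a flat universe with matter plus a cosmological constant.

    Radiation is neglected, and the matter fraction is set to
    1 - omega_lambda so the model is exactly flat.
    """
    omega_m = 1.0 - omega_lambda
    hubble_time_gyr = GYR_PER_INVERSE_KM_S_MPC / h0
    prefactor = 2.0 / (3.0 * math.sqrt(omega_lambda))
    return prefactor * math.asinh(math.sqrt(omega_lambda / omega_m)) * hubble_time_gyr


budget = total_density(OMEGA_LAMBDA, OMEGA_B, OMEGA_C)
age = flat_lcdm_age_gyr(H0_KM_S_MPC, OMEGA_LAMBDA)
print(f"total density fraction = {budget:.4f}")
print(f"age of the universe ~ {age:.2f} Gyr")
```

The three fractions add up to 100% within rounding, as expected for the flat model these parameters come from, and the simple matter-plus-Λ formula lands within the quoted +/- 0.11 billion years of 13.75.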

In addition, the team reports several new cosmological constraints. To take just two: the primordial abundance of helium (which rules out various alternative ‘cold big bang’ models), and an estimate of a parameter describing a feature of density fluctuations in the very early universe, precise enough to rule out the exactly scale-invariant Harrison-Zel’dovich-Peebles spectrum and the class of models that predict it. The team also reports tighter limits on many other quantities (number of neutrino species, mass of the neutrino, parity violations, axion dark matter, …).

The best eye candy in the team’s six papers is the stacked temperature and polarization maps of hot and cold spots. If these spots are due to sound waves in matter, frozen in when radiation (photons) and baryons parted company, then there should be nicely circular rings of rather exact sizes around them; the cosmic microwave background (CMB) encodes all the details of this separation. Further, the polarization directions should switch from radial to tangential, from the center out (for cold spots; vice versa for hot spots).

And that’s just what the team found!

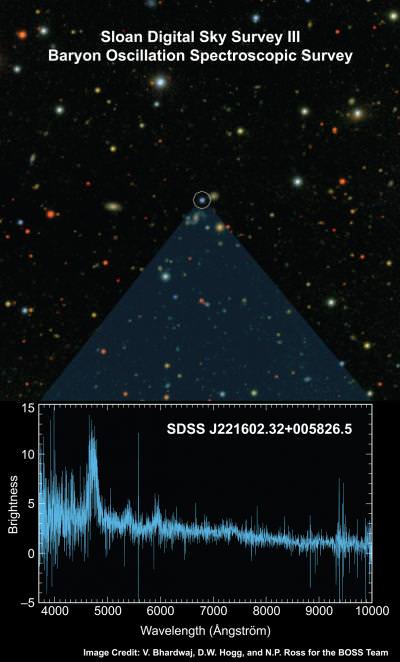

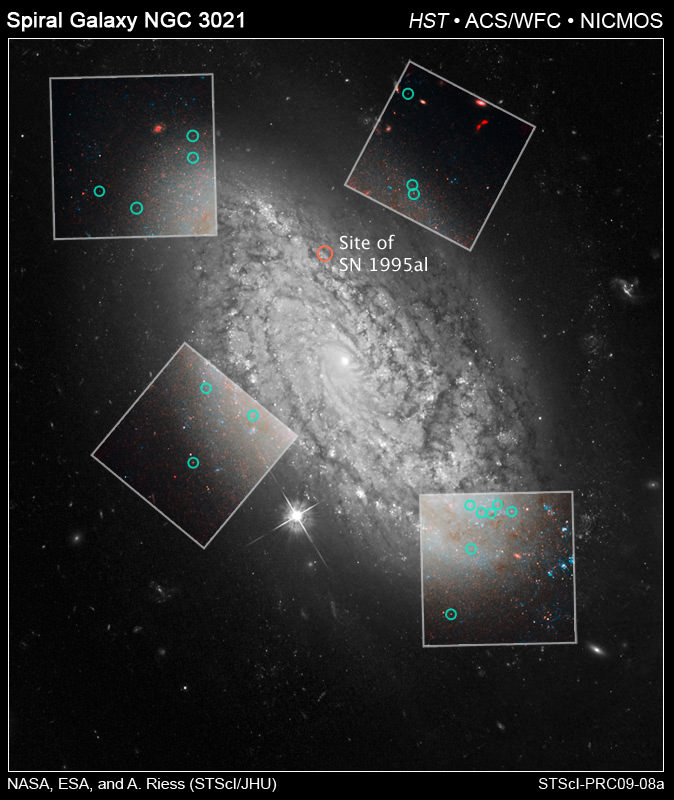

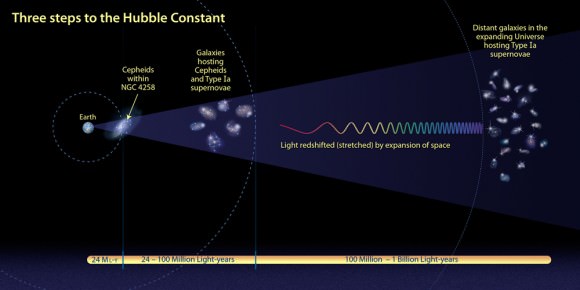

Concerning Dark Energy. Since the Five-Year WMAP results came out, several independent studies with direct relevance to cosmology have been published: observations of baryon acoustic oscillations (BAO) in the distribution of galaxies; of Cepheids, supernovae, and a water maser in local galaxies; of the time delay in a lensed quasar system; and of high-redshift supernovae. The WMAP team combined these results with their own data to shrink the nooks and crannies of parameter space in which dark energy other than a cosmological constant could be hiding. Some alternative kinds of dark energy may still be possible, but for now Λ, the cosmological constant, rules.
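One common way to describe dark energy that is not a cosmological constant (an assumption here; the article does not name a parametrization) is a constant equation-of-state parameter w, with w = -1 corresponding to Λ. The sketch below, for an assumed flat universe with the matter and dark energy fractions quoted earlier and radiation neglected, shows why distance measurements such as supernovae and BAO can pin w down: changing w changes how fast the universe expanded in the past, and hence the distance to a given redshift.

```python
import math


def expansion_rate(z, w=-1.0, omega_m=0.272, omega_de=0.728):
    """E(z) = H(z)/H0 for a flat universe of matter plus dark energy with
    a constant equation-of-state parameter w (radiation neglected).

    Dark energy density scales as (1 + z)**(3 * (1 + w)), so for w = -1
    it stays constant, which is the cosmological-constant case.
    """
    matter = omega_m * (1.0 + z) ** 3
    dark_energy = omega_de * (1.0 + z) ** (3.0 * (1.0 + w))
    return math.sqrt(matter + dark_energy)


def comoving_distance(z, w=-1.0, steps=2000):
    """Line-of-sight comoving distance in units of c/H0, computed with the
    trapezoid rule applied to the integral of dz'/E(z') from 0 to z."""
    h = z / steps
    total = 0.5 * (1.0 / expansion_rate(0.0, w) + 1.0 / expansion_rate(z, w))
    for i in range(1, steps):
        total += 1.0 / expansion_rate(i * h, w)
    return total * h


for w in (-1.1, -1.0, -0.9):
    print(f"w = {w:+.1f}: comoving distance to z = 1 is "
          f"{comoving_distance(1.0, w):.4f} c/H0")
```

With w above -1 the dark energy was denser in the past, the universe expanded faster, and objects at a given redshift end up closer; with w below -1 the opposite happens. The differences are only a few percent, which is why several independent data sets had to be combined to squeeze the alternatives.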

Concerning Inflation. Very, very, very early in the life of the universe, so the theory of cosmic inflation goes, there was a period of dramatic expansion, and tiny quantum fluctuations were stretched into the seeds of the giant cosmic structures we see today. “Inflation predicts that the statistical distribution of primordial fluctuations is nearly a Gaussian distribution with random phases. Measuring deviations from a Gaussian distribution,” the team reports, “is a powerful test of inflation, as how precisely the distribution is (non-) Gaussian depends on the detailed physics of inflation.” The limits on this non-Gaussianity from the WMAP data only weakly constrain the various models of inflation, but they leave almost nowhere for cosmological models without inflation to hide.
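To see what “deviation from a Gaussian distribution” means in practice, here is a toy sketch. It uses the so-called local-type parametrization, in which a Gaussian field phi picks up a small quadratic correction, phi + f_nl * (phi**2 - <phi**2>); that parametrization and the name `f_nl` are assumptions here, not quoted in the article, and the field is dimensionless with unit variance rather than calibrated to the real CMB. The quadratic term makes the distribution lopsided, which shows up as a nonzero skewness.

```python
import random
import statistics

N_SAMPLES = 100_000  # hypothetical sample size for the toy field


def toy_field(n, f_nl, seed=1):
    """Toy local-type non-Gaussian field: phi + f_nl * (phi**2 - 1),
    where phi is a unit-variance Gaussian (so <phi**2> = 1).
    Dimensionless and NOT calibrated to WMAP data."""
    rng = random.Random(seed)
    phi = [rng.gauss(0.0, 1.0) for _ in range(n)]
    return [p + f_nl * (p * p - 1.0) for p in phi]


def skewness(samples):
    """Sample skewness: third central moment divided by variance**1.5."""
    mean = statistics.fmean(samples)
    m2 = statistics.fmean([(x - mean) ** 2 for x in samples])
    m3 = statistics.fmean([(x - mean) ** 3 for x in samples])
    return m3 / m2 ** 1.5


def predicted_skewness(f_nl):
    """Exact skewness of phi + f_nl * (phi**2 - 1) for Gaussian phi."""
    return (6.0 * f_nl + 8.0 * f_nl ** 3) / (1.0 + 2.0 * f_nl ** 2) ** 1.5


skew_gaussian = skewness(toy_field(N_SAMPLES, 0.0))
skew_non_gaussian = skewness(toy_field(N_SAMPLES, 0.1))
print(f"f_nl = 0.0: skewness {skew_gaussian:+.3f}")
print(f"f_nl = 0.1: skewness {skew_non_gaussian:+.3f} "
      f"(predicted {predicted_skewness(0.1):+.3f})")
```

A purely Gaussian field gives a skewness consistent with zero, while even a small quadratic term produces a clear signal. The real analysis is far more involved (it works with a sky map, noise, and foregrounds), but the principle of looking for such lopsidedness is the same.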

Concerning ‘cosmic shadows’ (the Sunyaev-Zel’dovich (SZ) effect). Hot electrons in the plasma that pervades rich clusters of galaxies interact with CMB photons via inverse Compton scattering, leaving a ‘shadow’ of the cluster in the CMB. Many researchers have looked for these shadows in WMAP data before (perhaps the best known to the general public is the 2006 Lieu, Mittaz, and Zhang paper), but the WMAP team’s recent analysis is their first to investigate this effect. They detect the SZ effect directly in the nearest rich cluster (Coma; Virgo is behind the Milky Way foreground), and also statistically by correlating with the locations of some 700 relatively nearby rich clusters. While the WMAP team’s finding is consistent with X-ray observations, it is inconsistent with theoretical models of the cluster gas. Back to the drawing board for astrophysicists studying galaxy clusters.
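Why a “shadow”? In the standard non-relativistic treatment of the thermal SZ effect (not spelled out in the article, so treat it as background), scattering off hot electrons boosts photons to higher energies, and the fractional CMB temperature change is y · g(x), where y measures the electron pressure along the line of sight and g(x) = x·coth(x/2) − 4 with x = hν/(k·T_CMB). The sketch below evaluates g at what we assume are the nominal centre frequencies of WMAP’s five bands; the band values, the helper names, and T_CMB = 2.725 K are assumptions for illustration.

```python
import math

H_OVER_K = 4.799243e-11  # Planck constant / Boltzmann constant, in K*s
T_CMB = 2.725            # assumed CMB temperature in K

# ASSUMPTION: nominal centre frequencies (GHz) of WMAP's K, Ka, Q, V, W bands
WMAP_BANDS_GHZ = {"K": 23.0, "Ka": 33.0, "Q": 41.0, "V": 61.0, "W": 94.0}


def sz_temperature_factor(nu_ghz, t_cmb=T_CMB):
    """Non-relativistic thermal SZ spectral shape g(x) = x*coth(x/2) - 4,
    with x = h*nu / (k*T_CMB); the CMB temperature change is y * g(x)."""
    x = H_OVER_K * nu_ghz * 1e9 / t_cmb
    return x / math.tanh(x / 2.0) - 4.0


def null_frequency_ghz(lo=100.0, hi=400.0, tol=1e-6):
    """Bisection for the frequency where g(x) = 0, i.e. where the SZ
    decrement (shadow) turns into an increment."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if sz_temperature_factor(lo) * sz_temperature_factor(mid) <= 0.0:
            hi = mid  # sign change lies in [lo, mid]
        else:
            lo = mid  # sign change lies in [mid, hi]
    return 0.5 * (lo + hi)


for band, nu in WMAP_BANDS_GHZ.items():
    print(f"{band:>2} band ({nu:5.1f} GHz): g = {sz_temperature_factor(nu):+.3f}")
print(f"SZ null near {null_frequency_ghz():.1f} GHz")
```

All five WMAP bands lie below the null at roughly 217 GHz, so in every band a cluster shows up as a cold spot: a shadow cast on the CMB. At low frequencies g approaches −2, the familiar Rayleigh-Jeans limit.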

I’ll wrap up by quoting Komatsu et al.: “The standard ΛCDM cosmological model continues to be an exquisite fit to the existing data.”

Primary source: Seven-Year Wilkinson Microwave Anisotropy Probe (WMAP) Observations: Cosmological Interpretation (arXiv:1001.4738). The five other Seven-Year WMAP papers are:

- Seven-Year Wilkinson Microwave Anisotropy Probe (WMAP) Observations: Are There Cosmic Microwave Background Anomalies? (arXiv:1001.4758)
- Seven-Year Wilkinson Microwave Anisotropy Probe (WMAP) Observations: Planets and Celestial Calibration Sources (arXiv:1001.4731)
- Seven-Year Wilkinson Microwave Anisotropy Probe (WMAP) Observations: Sky Maps, Systematic Errors, and Basic Results (arXiv:1001.4744)
- Seven-Year Wilkinson Microwave Anisotropy Probe (WMAP) Observations: Power Spectra and WMAP-Derived Parameters (arXiv:1001.4635)
- Seven-Year Wilkinson Microwave Anisotropy Probe (WMAP) Observations: Galactic Foreground Emission (arXiv:1001.4555)

Also check out the official WMAP website.