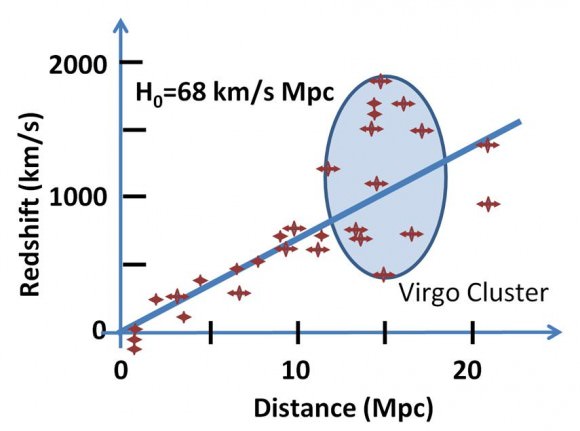

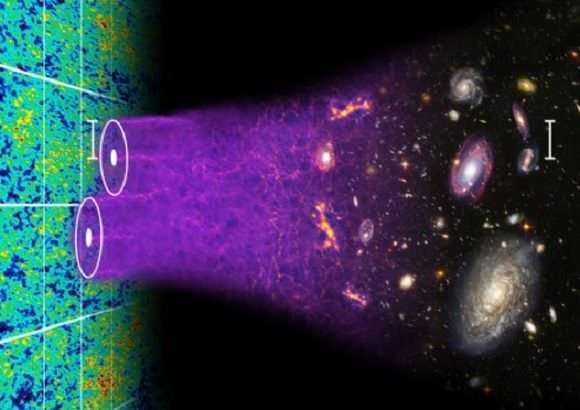

In 1929, Edwin Hubble forever changed our understanding of the cosmos by showing that the Universe is in a state of expansion. In the late 1990s, astronomers determined that the rate of this expansion is actually speeding up, which in turn led to the theory of “Dark Energy”. Since that time, astronomers and physicists have sought to confirm the existence of this mysterious force by measuring the influence it has on the cosmos.

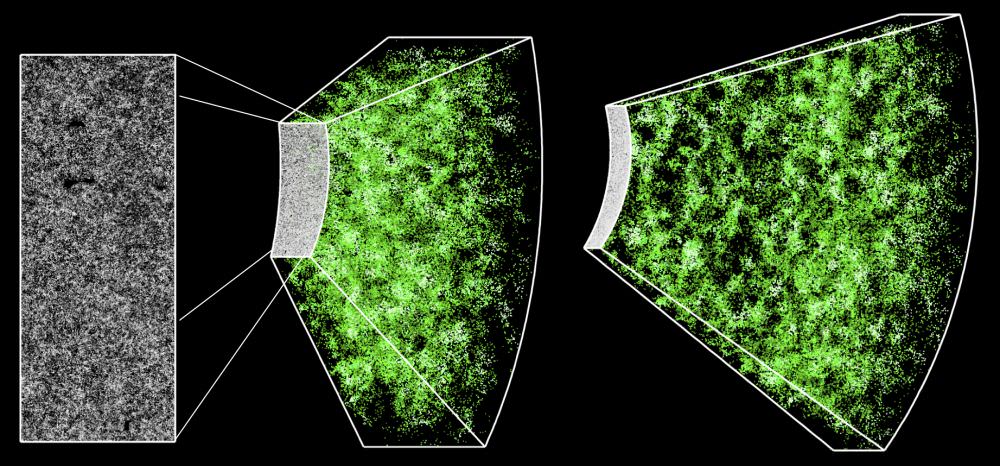

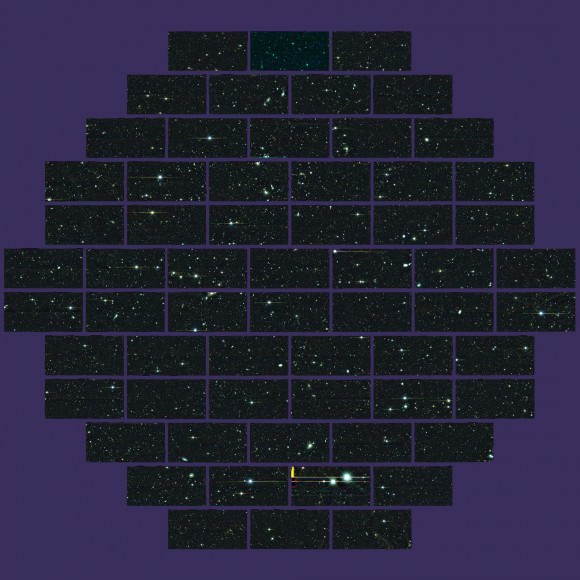

The latest of these efforts comes from the Sloan Digital Sky Survey III (SDSS-III), where an international team of researchers has announced that it has completed the most precise measurements of the Universe to date. Made as part of the Baryon Oscillation Spectroscopic Survey (BOSS), these measurements have placed new constraints on the properties of Dark Energy.

The new measurements were presented by Harvard University astronomer Daniel Eisenstein at a recent meeting of the American Astronomical Society. Eisenstein, the director of the Sloan Digital Sky Survey III (SDSS-III), and his team have spent the past ten years measuring the cosmos, and in particular the periodic fluctuations in the density of normal matter, to see how galaxies are distributed throughout the Universe.

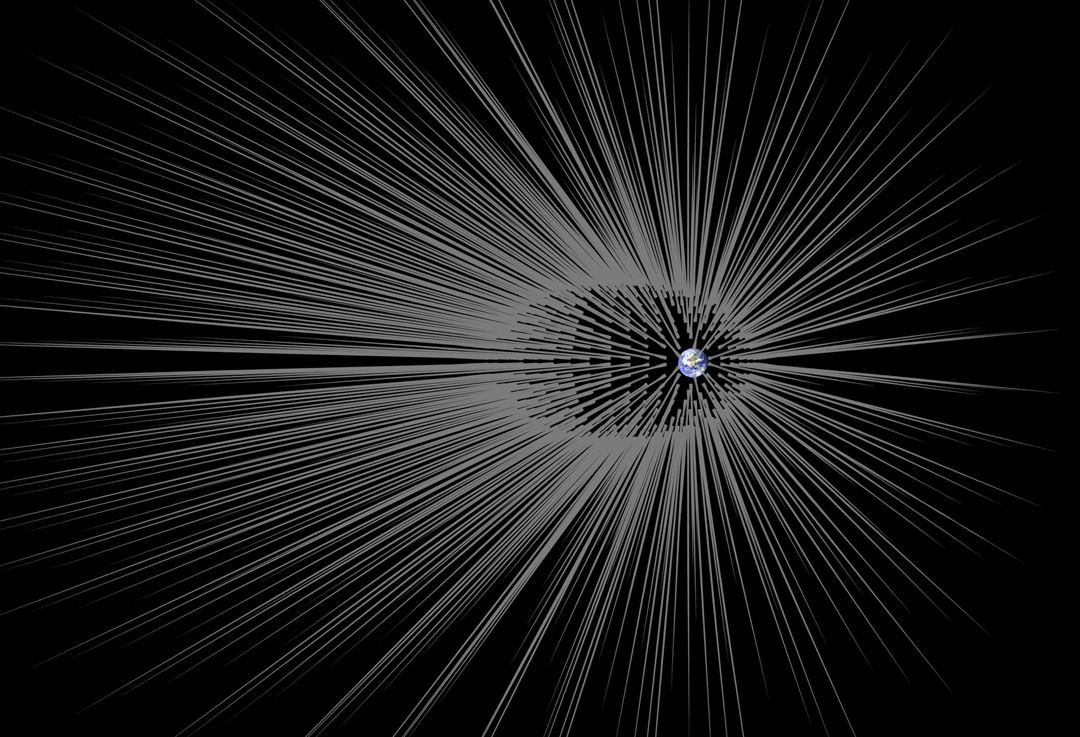

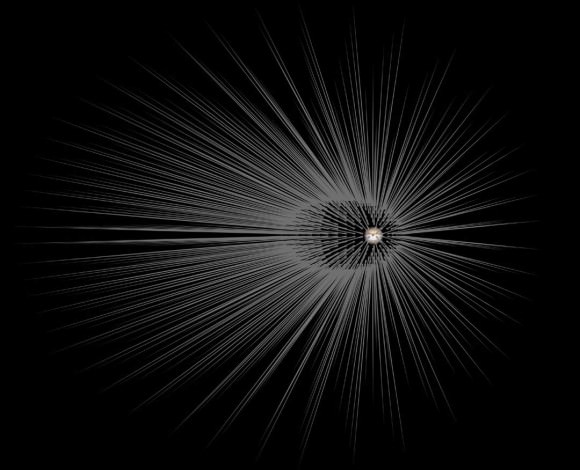

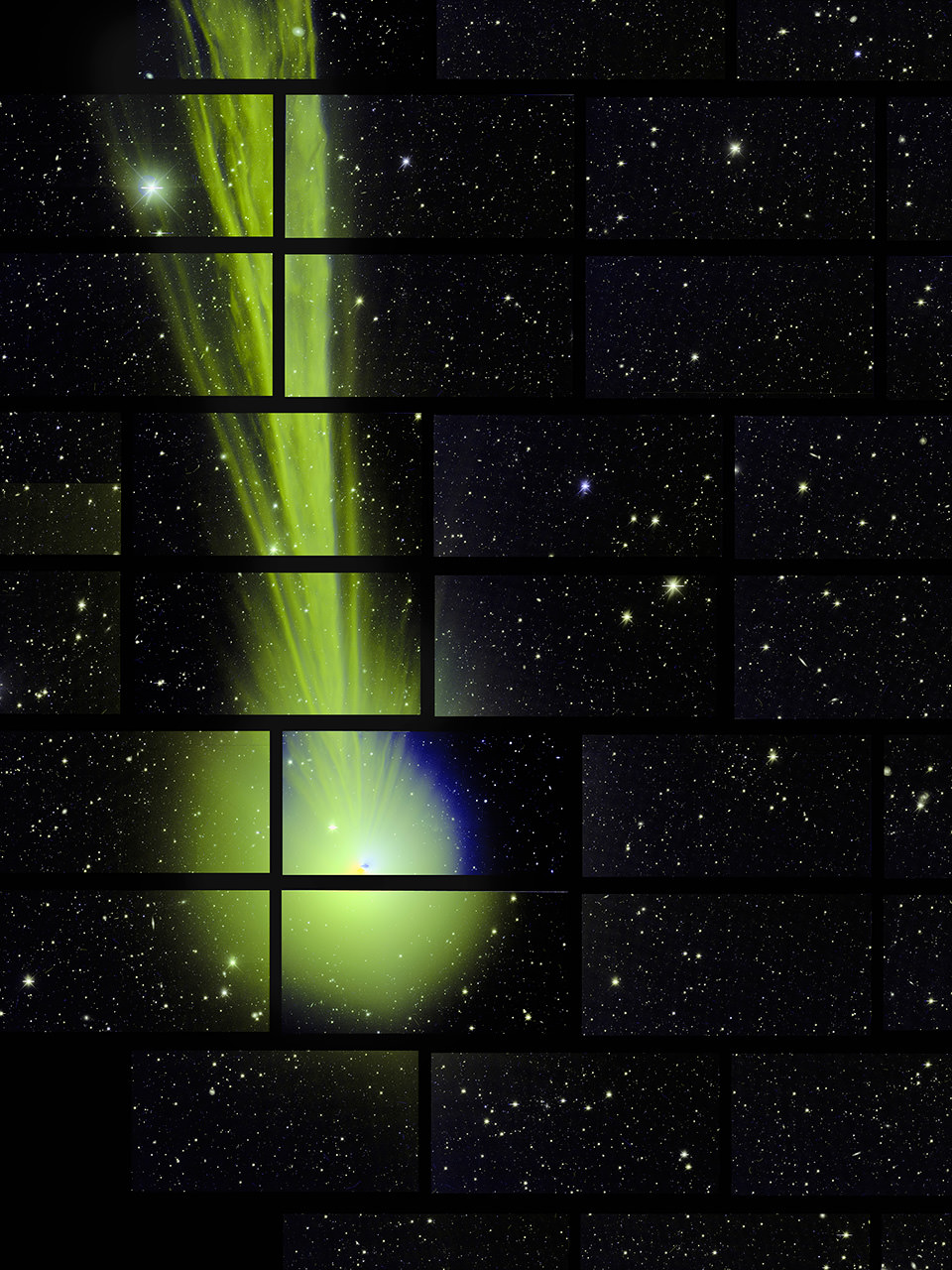

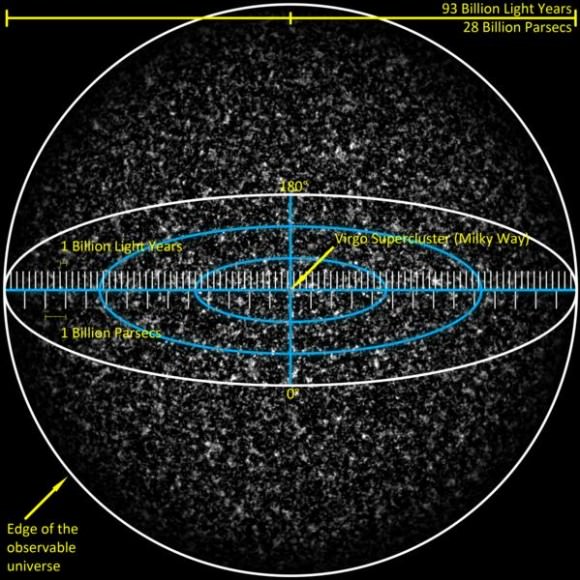

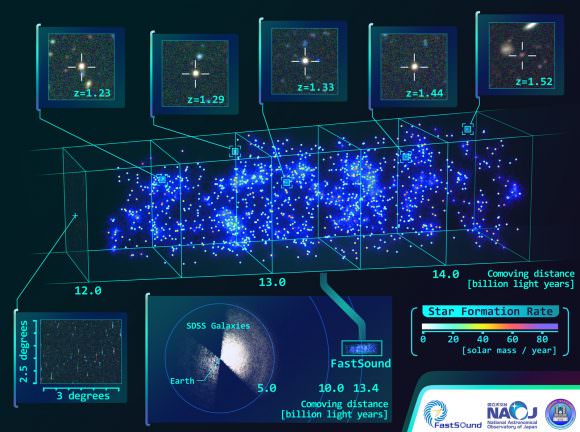

After a decade of research, the BOSS team was able to produce a three-dimensional map of the cosmos that covers more than six billion light-years. While other recent surveys have looked farther afield, out to distances of 9 and 13 billion light-years, the BOSS map is unique in that it boasts the highest accuracy of any cosmological map.

In fact, the BOSS team was able to measure the distribution of galaxies out to a distance of 6 billion light-years to within an unprecedented 1% margin of error. Determining the distances to cosmic objects is no easy matter, especially at such great distances. As Dr. Eisenstein told Universe Today via email:

“Distances are a long-standing challenge in astronomy. Whereas humans often can judge distance because of our binocular vision, galaxies beyond the Milky Way are much too far away to use that. And because galaxies come in a wide range of intrinsic sizes, it is hard to judge their distance. It’s like looking at a far-away mountain; one’s judgement of its distance is tied up with one’s judgement of its height.”

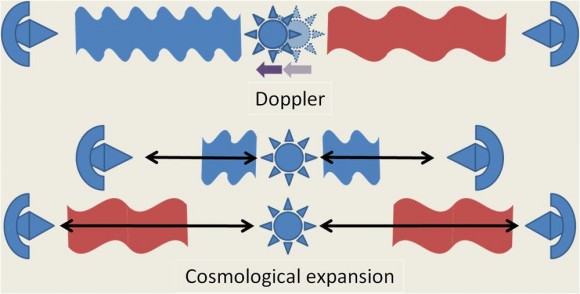

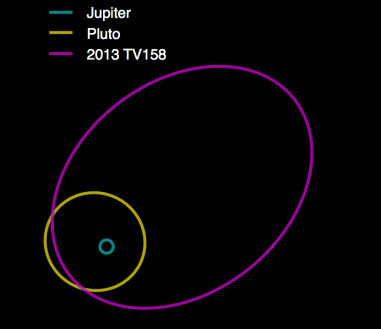

In the past, astronomers have made accurate measurements of objects within the local universe (e.g. planets, neighboring stars, star clusters) by relying on everything from radar to redshift, the degree to which the wavelength of light is shifted towards the red end of the spectrum. However, the greater the distance to an object, the greater the uncertainty in the measurement.
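For readers who want the arithmetic, redshift is usually written as z = (λ_observed − λ_emitted) / λ_emitted, and for relatively nearby galaxies Hubble's law turns it into a rough distance, d ≈ cz / H0. The short Python sketch below is only an illustration: it assumes H0 = 70 km/s/Mpc and uses an illustrative observed wavelength for the hydrogen-alpha line. The approximation only holds at small redshift, which is one reason more distant objects call for other methods.

```python
# Speed of light in km/s
C_KM_S = 299_792.458


def redshift(observed_nm: float, emitted_nm: float) -> float:
    """Fractional shift of a spectral line toward longer (redder) wavelengths."""
    return (observed_nm - emitted_nm) / emitted_nm


def hubble_law_distance_mpc(z: float, h0: float = 70.0) -> float:
    """Approximate distance in megaparsecs from Hubble's law, d = c * z / H0.

    Only valid for small redshifts (z much less than 1).
    H0 = 70 km/s/Mpc is an assumed, illustrative value.
    """
    return C_KM_S * z / h0


# Hydrogen-alpha (rest wavelength ~656.28 nm) seen at an illustrative 662.9 nm
z = redshift(662.9, 656.28)
print(f"z = {z:.4f}, d ≈ {hubble_law_distance_mpc(z):.0f} Mpc")
```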

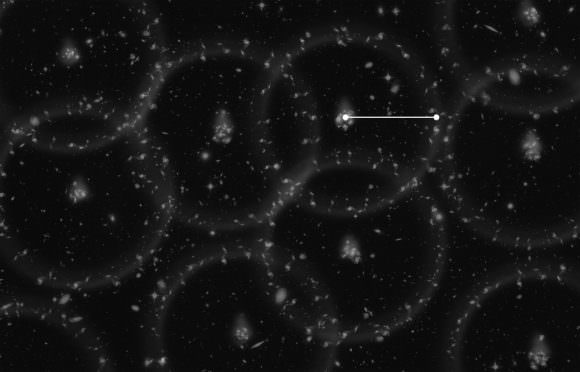

Until now, only objects a few thousand light-years from Earth, i.e. within the Milky Way galaxy, have had their distances measured to within a one-percent margin of error. BOSS, the largest of the four projects that make up the Sloan Digital Sky Survey III (SDSS-III), sets itself apart by relying primarily on the measurement of what are called “baryon acoustic oscillations” (BAOs).

These are essentially subtle periodic ripples in the distribution of visible baryonic (i.e. normal) matter in the cosmos. As Dr. Daniel Eisenstein explained:

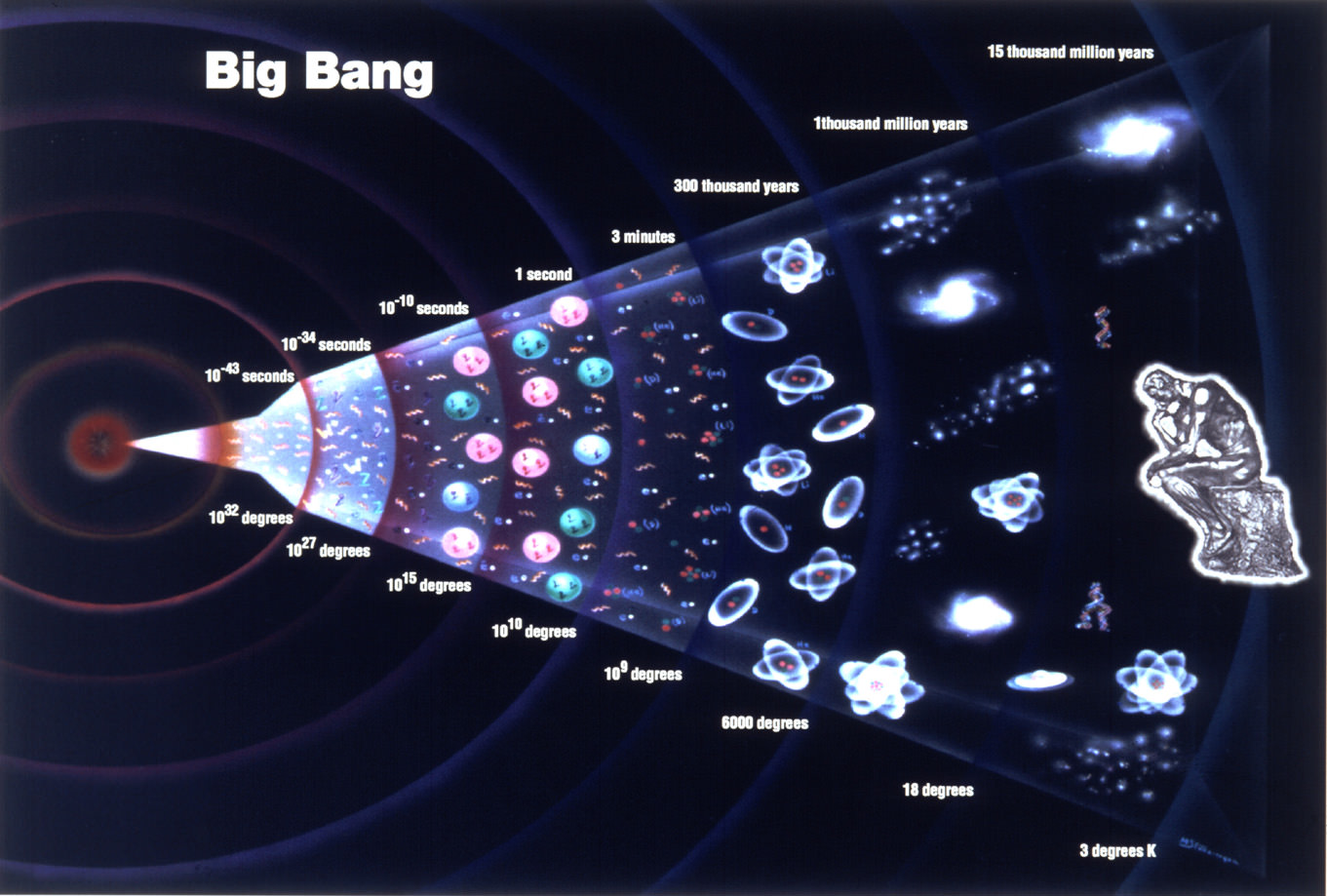

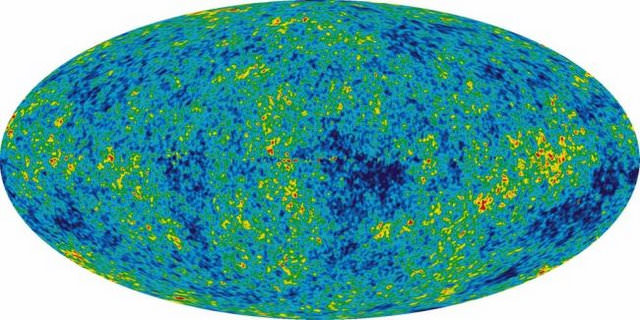

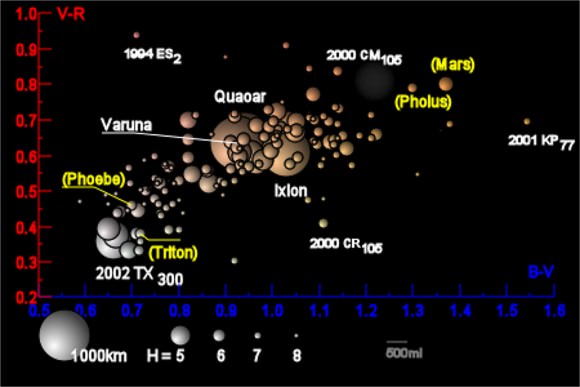

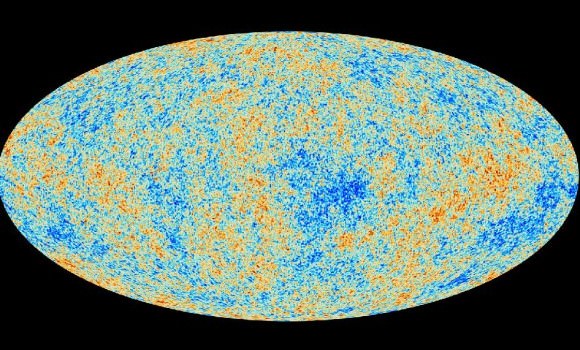

“BOSS measures the expansion of the Universe in two primary ways. The first is by using the baryon acoustic oscillations (hence the name of the survey). Sound waves traveling in the first 400,000 years after the Big Bang create a preferred scale for separations of pairs of galaxies. By measuring this preferred separation in a sample of many galaxies, we can infer the distance to the sample.

“The second method is to measure how clustering of galaxies differs between pairs oriented along the line of sight compared to transverse to the line of sight. The expansion of the Universe can cause this clustering to be asymmetric if one uses the wrong expansion history when converting redshifts to distance.”
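Both methods Eisenstein describes can be sketched numerically. The Python below is a simplified illustration, not the BOSS analysis pipeline: it assumes a flat universe with a cosmological constant, illustrative parameters (H0 = 67.7 km/s/Mpc, matter density 0.31) and a BAO scale of about 147 megaparsecs. The first part treats the BAO scale as a ruler of known length, so the angle it spans on the sky gives the distance. The second part shows why the wrong expansion history makes clustering look lopsided: separations along the line of sight scale with c/H(z), while separations across the sky scale with the comoving distance, so an incorrect cosmology stretches the two by different amounts. It ignores the extra distortion caused by the galaxies' own motions.

```python
import math

C_KM_S = 299_792.458  # speed of light, km/s
R_D_MPC = 147.0       # assumed BAO scale (comoving sound horizon), Mpc


def hubble_rate(z, h0=67.7, omega_m=0.31):
    """H(z) in km/s/Mpc for a flat universe with a cosmological constant (assumed parameters)."""
    return h0 * math.sqrt(omega_m * (1 + z) ** 3 + (1 - omega_m))


def comoving_distance(z, h0=67.7, omega_m=0.31, steps=1000):
    """Comoving distance D_M in Mpc for a flat universe, via the trapezoidal rule."""
    dz = z / steps
    f = [C_KM_S / hubble_rate(i * dz, h0, omega_m) for i in range(steps + 1)]
    return dz * (sum(f) - 0.5 * (f[0] + f[-1]))


# Method 1: the BAO scale as a standard ruler.
def bao_angle_deg(z, h0=67.7, omega_m=0.31):
    """Angle on the sky spanned by the BAO scale for galaxies at redshift z."""
    return math.degrees(R_D_MPC / comoving_distance(z, h0, omega_m))


def distance_from_bao_angle(theta_deg):
    """Invert the ruler: a measured preferred angle gives the distance in Mpc."""
    return R_D_MPC / math.radians(theta_deg)


# Method 2: line-of-sight vs. transverse clustering (the Alcock-Paczynski test).
def apparent_anisotropy(z, true, fiducial):
    """Line-of-sight / transverse size ratio of an intrinsically round pattern
    when redshifts are converted to distances with a `fiducial` (h0, omega_m)
    that may differ from the `true` one. A value of 1.0 means no distortion."""
    # Radial separations scale with D_H = c / H(z): ratio D_H,fid / D_H,true
    dh_ratio = hubble_rate(z, *true) / hubble_rate(z, *fiducial)
    # Transverse separations scale with D_M: ratio D_M,fid / D_M,true
    dm_ratio = comoving_distance(z, *fiducial) / comoving_distance(z, *true)
    return dh_ratio / dm_ratio


z = 0.57
theta = bao_angle_deg(z)
print(f"BAO angle at z={z}: {theta:.2f} deg -> distance {distance_from_bao_angle(theta):.0f} Mpc")
print(f"Distortion with a wrong Omega_m: {apparent_anisotropy(z, (67.7, 0.31), (67.7, 0.25)):.3f}")
```

With matching cosmologies the ratio is 1. Changing only H0 also leaves it at 1, because both distances scale as 1/H0, so this second test is sensitive to the shape of the expansion history rather than its overall rate.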

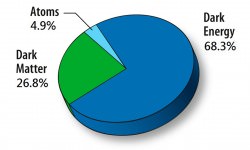

With these new, highly accurate distance measurements, BOSS astronomers will be able to study the influence of Dark Energy with far greater precision. “Different dark energy models vary in how the acceleration of the expansion of the Universe proceeds over time,” said Eisenstein. “BOSS is measuring the expansion history, which allows us to infer the acceleration rate. We find results that are highly consistent with the predictions of the cosmological constant model, that is, the model in which dark energy has a constant density over time.”
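The phrase “constant density over time” has a compact mathematical form. In a flat universe whose dark energy has a constant equation-of-state parameter w, its density scales as (1+z)^(3(1+w)), so w = -1 (the cosmological constant) keeps it fixed while matter dilutes as (1+z)^3. The Python sketch below assumes a matter density of 0.31 and uses w = -0.9 purely as an arbitrary alternative for comparison. It computes the expansion rate and the deceleration parameter q, which is negative when the expansion is accelerating.

```python
import math


def densities(z, omega_m=0.31, w=-1.0):
    """Matter and dark-energy densities at redshift z, in units of today's
    critical density. Assumes a flat universe and a constant w
    (w = -1 is the cosmological constant)."""
    rho_m = omega_m * (1 + z) ** 3
    rho_de = (1 - omega_m) * (1 + z) ** (3 * (1 + w))
    return rho_m, rho_de


def hubble_ratio(z, omega_m=0.31, w=-1.0):
    """Expansion rate relative to today, H(z) / H0."""
    return math.sqrt(sum(densities(z, omega_m, w)))


def deceleration(z, omega_m=0.31, w=-1.0):
    """Deceleration parameter q(z); negative values mean accelerating expansion."""
    rho_m, rho_de = densities(z, omega_m, w)
    return (rho_m + (1 + 3 * w) * rho_de) / (2 * (rho_m + rho_de))


for w in (-1.0, -0.9):
    rho_de_then = densities(0.57, w=w)[1]
    print(f"w = {w}: dark-energy density at z=0.57 is {rho_de_then:.3f}, "
          f"H/H0 = {hubble_ratio(0.57, w=w):.4f}, q0 = {deceleration(0, w=w):.3f}")
```

For w = -1 the dark-energy density stays at 0.69 at every redshift, and with these assumed parameters q changes sign at about z = 0.65: the expansion was decelerating before that and has been accelerating since. Measuring the expansion history at several redshifts is what lets a survey tell such models apart.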

In addition to measuring the distribution of normal matter to determine the influence of Dark Energy, the SDSS-III Collaboration is working to map the Milky Way and search for extrasolar planets. The BOSS measurements are detailed in a series of articles that were submitted to journals by the BOSS collaboration last month, all of which are now available online.

And BOSS is not the only effort to understand the large-scale structure of our Universe, and how all its mysterious forces have shaped it. Just last month, Professor Stephen Hawking announced that the COSMOS supercomputing center at Cambridge University would be creating the most detailed 3D map of the Universe to date.

Relying on cosmic microwave background (CMB) data obtained by ESA’s Planck satellite and information from the Dark Energy Survey, the COSMOS team also hopes to measure the influence Dark Energy has had on the distribution of matter in our Universe. Who knows? In a few years’ time, we may very well come to understand how all the fundamental forces governing the Universe work together.

Further Reading: SDSS-III