
Located at the southernmost point on Earth, the 280-ton, 10-meter-wide South Pole Telescope has helped astronomers unravel the nature of dark energy and zero in on the actual mass of neutrinos, elusive subatomic particles that pervade the Universe and, until very recently, were thought to be entirely without measurable mass.

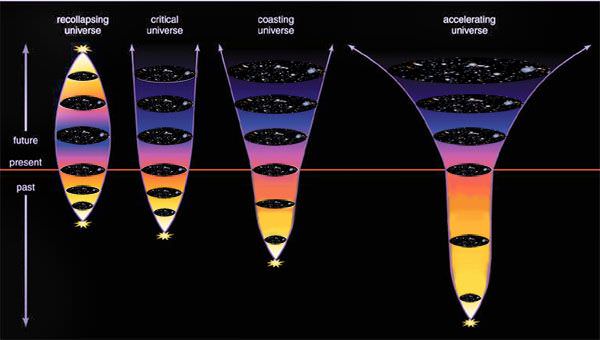

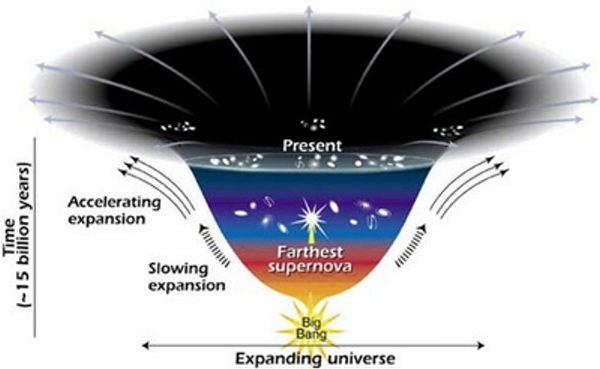

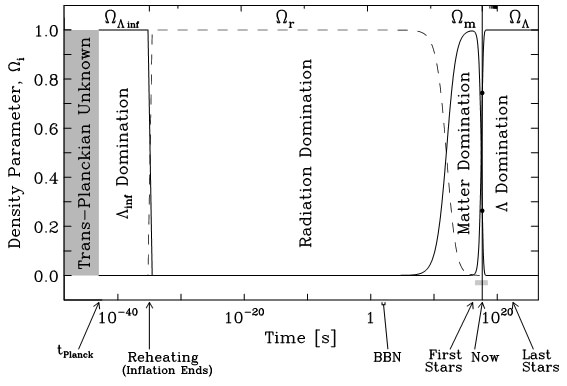

The NSF-funded South Pole Telescope (SPT) is specifically designed to study the secrets of dark energy, the force that purportedly drives the incessant (and apparently still accelerating) expansion of the Universe. Its millimeter-wave observing capabilities allow scientists to study the Cosmic Microwave Background (CMB), which pervades the night sky with the 14-billion-year-old echo of the Big Bang.

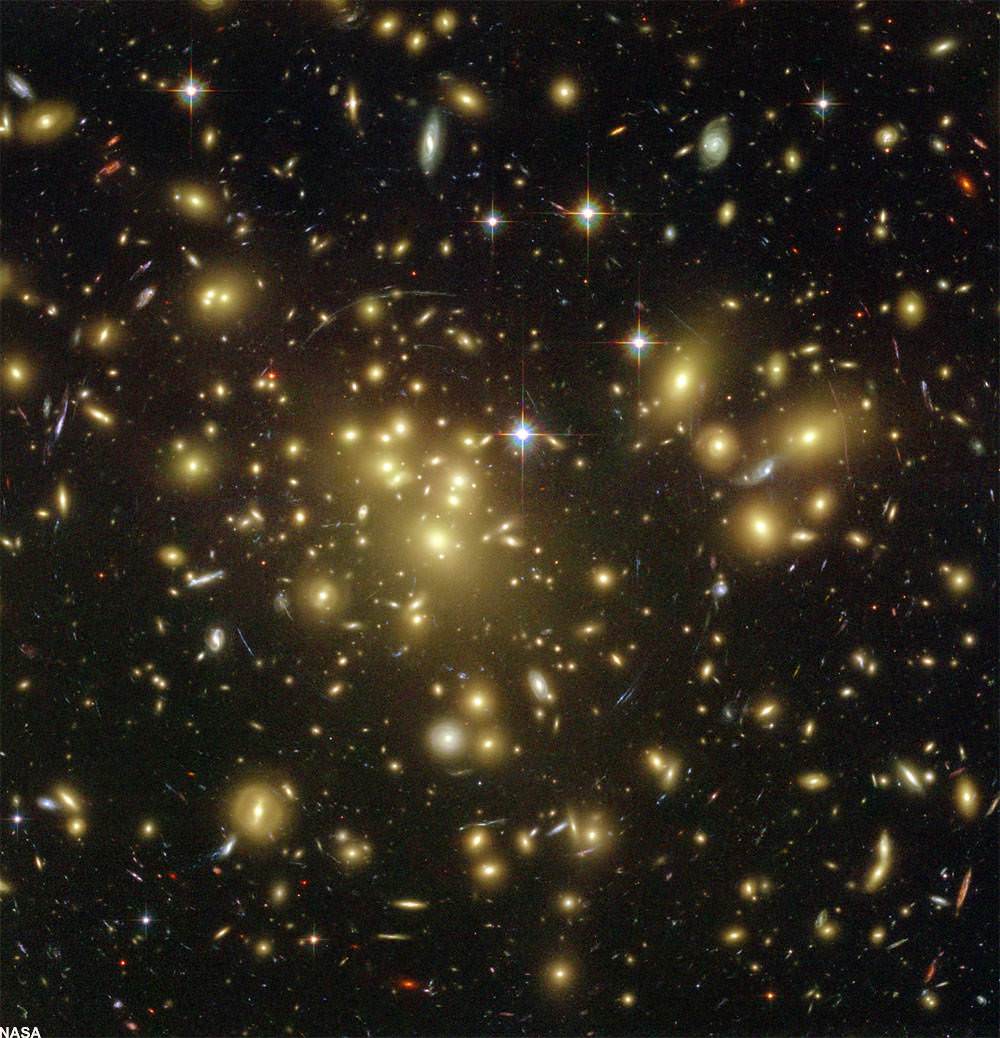

Overlaid upon the imprint of the CMB are the silhouettes of distant galaxy clusters — some of the most massive structures to form within the Universe. By locating these clusters and mapping their movements with the SPT, researchers can see how dark energy — and neutrinos — interact with them.

“Neutrinos are amongst the most abundant particles in the universe,” said Bradford Benson, an experimental cosmologist at the University of Chicago’s Kavli Institute for Cosmological Physics. “About one trillion neutrinos pass through us each second, though you would hardly notice them because they rarely interact with ‘normal’ matter.”

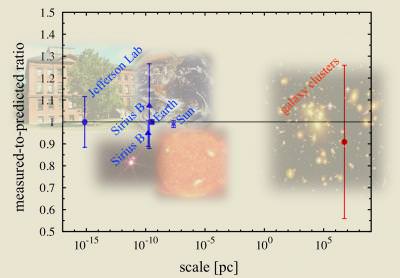

If neutrinos were particularly massive, they would have an effect on the large-scale galaxy clusters observed with the SPT. If they had no mass, there would be no effect.

The SPT collaboration team’s results, however, fall somewhere in between.

Even though only 100 of the 500 clusters identified so far have been surveyed, the team has been able to place a reasonably reliable preliminary upper limit on the mass of neutrinos — again, particles that had once been assumed to have no mass.

Previous tests have also assigned a lower limit to the mass of neutrinos, narrowing the anticipated mass of the subatomic particles to between 0.05 and 0.28 eV (electron volts). Once the SPT survey is completed, the team expects to have an even more confident measurement of the particles’ masses.

“With the full SPT data set we will be able to place extremely tight constraints on dark energy and possibly determine the mass of the neutrinos,” said Benson.

“We should be very close to the level of accuracy needed to detect the neutrino masses,” he noted later in an email to Universe Today.

Such precise measurements would not have been possible without the South Pole Telescope, which, thanks to its unique location, can observe a dark sky for very long periods of time. Antarctica also offers SPT a stable atmosphere, as well as very low levels of water vapor that might otherwise absorb faint millimeter-wavelength signals.

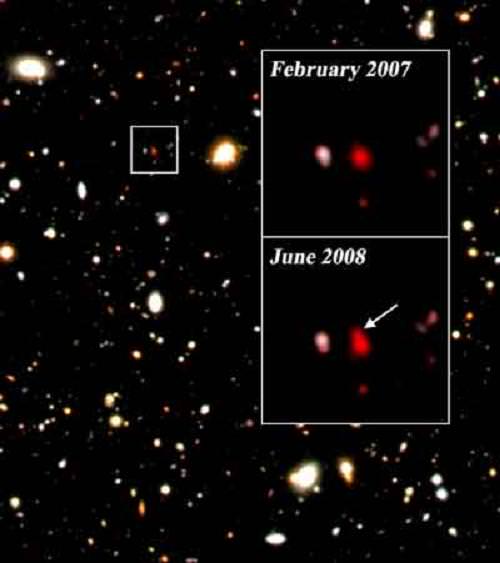

“The South Pole Telescope has proven to be a crown jewel of astrophysical research carried out by NSF in the Antarctic,” said Vladimir Papitashvili, Antarctic Astrophysics and Geospace Sciences program director at NSF’s Office of Polar Programs. “It has produced about two dozen peer-reviewed science publications since the telescope received its ‘first light’ on Feb. 17, 2007. SPT is a very focused, well-managed and amazing project.”

The team’s findings were presented by Bradford Benson at the American Physical Society meeting in Atlanta on April 1.