[/caption]

From a NASA press release:

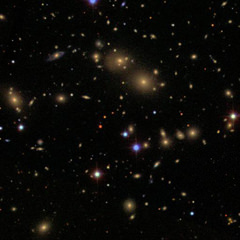

Astronomers using NASA’s Hubble Space Telescope have ruled out an alternate theory on the nature of dark energy after recalculating the expansion rate of the universe to unprecedented accuracy.

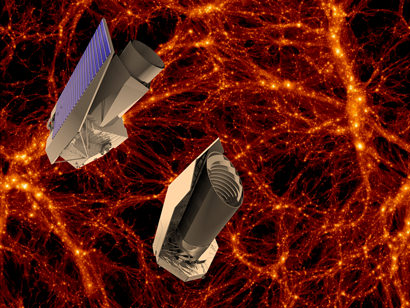

The universe appears to be expanding at an increasing rate. Some believe that is because the universe is filled with a dark energy that acts in opposition to gravity. One alternative to that hypothesis is that an enormous bubble of relatively empty space eight billion light-years across surrounds our galactic neighborhood. If we lived near the center of this void, observations of galaxies being pushed away from each other at accelerating speeds would be an illusion.

This hypothesis has been invalidated because astronomers have refined their understanding of the universe’s present expansion rate. Adam Riess of the Space Telescope Science Institute (STScI) and Johns Hopkins University in Baltimore, Md., led the research. The Hubble observations were conducted by the SHOES (Supernova H0 for the Equation of State) team that works to refine the accuracy of the Hubble constant to a precision that allows for a better characterization of dark energy’s behavior. The observations helped determine a figure for the universe’s current expansion rate to an uncertainty of just 3.3 percent. The new measurement reduces the error margin by 30 percent over Hubble’s previous best measurement in 2009. Riess’s results appear in the April 1 issue of The Astrophysical Journal.

“We are using the new camera on Hubble like a policeman’s radar gun to catch the universe speeding,” Riess said. “It looks more like it’s dark energy that’s pressing the gas pedal.”

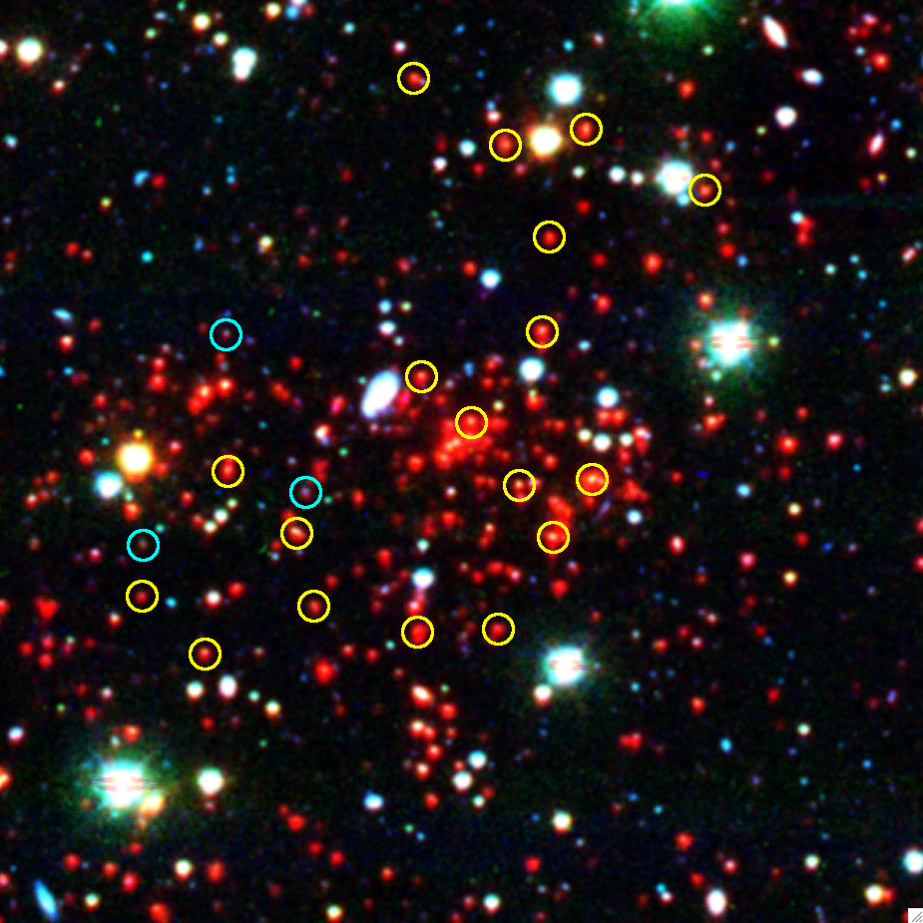

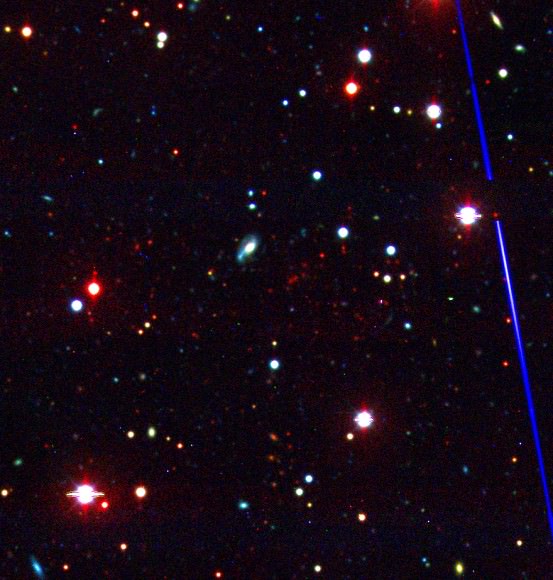

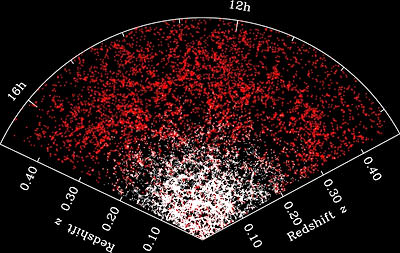

Riess’s team first had to determine accurate distances to galaxies near and far from Earth. The team compared those distances with the speed at which the galaxies are apparently receding because of the expansion of space. They used those two values to calculate the Hubble constant, the number that relates the speed at which a galaxy appears to recede to its distance from the Milky Way. Because astronomers cannot physically measure the distances to galaxies, researchers had to find stars or other objects that serve as reliable cosmic yardsticks. These are objects whose intrinsic brightness, the brightness they would show if it were not dimmed by distance, an atmosphere, or stellar dust, is known. Their distances, therefore, can be inferred by comparing their true brightness with their apparent brightness as seen from Earth.
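The two relations described above can be sketched in a few lines of Python. This is only an illustration, not the team's actual pipeline: it assumes the standard distance modulus relation between apparent and absolute magnitude, ignores dimming by dust, and treats the Hubble constant as a simple ratio of recession speed to distance. The function names and the sample numbers are hypothetical.

```python
def distance_parsecs(apparent_mag: float, absolute_mag: float) -> float:
    """Distance from the distance modulus: m - M = 5 * log10(d / 10 pc).

    Assumes no dimming by dust or atmosphere (an idealization).
    """
    return 10 ** ((apparent_mag - absolute_mag + 5) / 5)


def hubble_constant(velocity_km_s: float, distance_mpc: float) -> float:
    """Hubble constant in km/s/Mpc from Hubble's law, v = H0 * d."""
    return velocity_km_s / distance_mpc


if __name__ == "__main__":
    # Hypothetical yardstick: known absolute magnitude, measured apparent magnitude.
    d_pc = distance_parsecs(apparent_mag=15.0, absolute_mag=-19.3)
    d_mpc = d_pc / 1_000_000  # parsecs to megaparsecs
    # Hypothetical recession speed for the host galaxy, in km/s.
    h0 = hubble_constant(velocity_km_s=5300.0, distance_mpc=d_mpc)
    print(f"distance = {d_mpc:.1f} Mpc, H0 = {h0:.1f} km/s/Mpc")
```

A real measurement averages over many galaxies and corrects each one for dust and for the galaxy's own motion through space, which this sketch leaves out.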

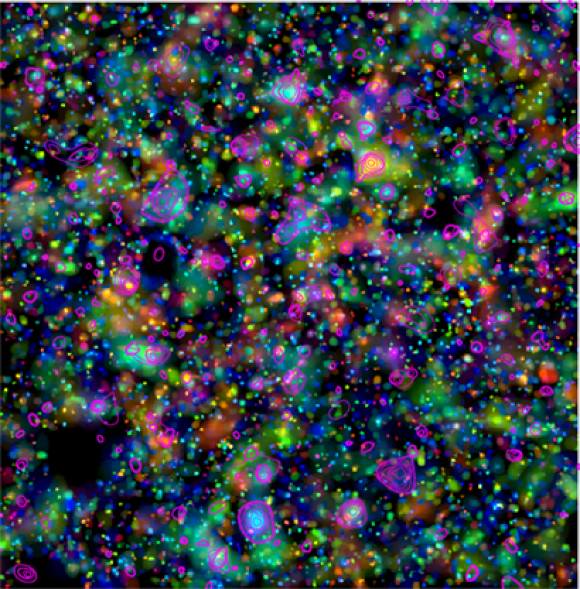

To calculate longer distances, Riess’s team chose a special class of exploding stars called Type Ia supernovae. These stellar explosions all flare with similar luminosity and are brilliant enough to be seen far across the universe. By comparing the apparent brightness of Type Ia supernovae with that of pulsating Cepheid stars in the same galaxies, the astronomers could accurately measure the supernovae’s intrinsic brightness and therefore calculate distances to Type Ia supernovae in far-flung galaxies.
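The calibration step described above can be illustrated with a short Python sketch. It assumes a nearby galaxy whose distance modulus is already known from Cepheids and which also hosted a Type Ia supernova. It also assumes that every Type Ia supernova has exactly the same peak absolute magnitude, a simplification, since real analyses apply light-curve and dust corrections. All names and numbers here are hypothetical.

```python
def calibrate_absolute_magnitude(sn_apparent_mag: float,
                                 host_distance_modulus: float) -> float:
    """Supernova absolute magnitude M = m - mu, using the Cepheid-based
    distance modulus mu of the galaxy that hosted the supernova."""
    return sn_apparent_mag - host_distance_modulus


def supernova_distance_mpc(sn_apparent_mag: float,
                           sn_absolute_mag: float) -> float:
    """Distance in megaparsecs to a far-off supernova, assuming it shares
    the calibrated absolute magnitude (standard-candle assumption)."""
    mu = sn_apparent_mag - sn_absolute_mag  # distance modulus
    parsecs = 10 ** (mu / 5 + 1)            # from mu = 5 * log10(d / 10 pc)
    return parsecs / 1_000_000


if __name__ == "__main__":
    # Nearby galaxy: Cepheids give mu = 31.0; its supernova peaked at m = 12.0.
    m_abs = calibrate_absolute_magnitude(12.0, 31.0)
    # Distant supernova observed at m = 16.0, beyond the reach of Cepheids.
    print(f"M = {m_abs:.1f}, distance = {supernova_distance_mpc(16.0, m_abs):.0f} Mpc")
```

The design mirrors a distance ladder: the nearer rung (Cepheids) fixes the brightness scale of the farther rung (supernovae), so any error in the first step carries into the second.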

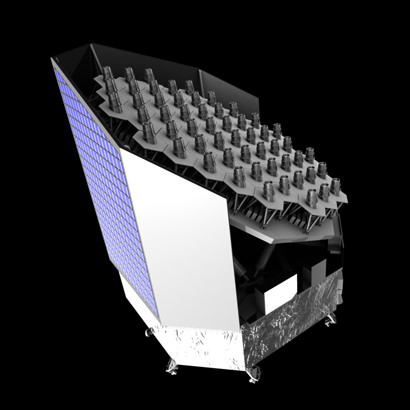

Using the sharpness of the new Wide Field Camera 3 (WFC3) to study more stars in visible and near-infrared light, scientists eliminated systematic errors introduced by comparing measurements from different telescopes.

“WFC3 is the best camera ever flown on Hubble for making these measurements, improving the precision of prior measurements in a small fraction of the time it previously took,” said Lucas Macri, a collaborator on the SHOES Team from Texas A&M in College Station.

Knowing the precise value of the universe’s expansion rate further restricts the range of dark energy’s strength and helps astronomers tighten up their estimates of other cosmic properties, including the universe’s shape and its roster of neutrinos, or ghostly particles, that filled the early universe.

“Thomas Edison once said ‘every wrong attempt discarded is a step forward,’ and this principle still governs how scientists approach the mysteries of the cosmos,” said Jon Morse, astrophysics division director at NASA Headquarters in Washington. “By falsifying the bubble hypothesis of the accelerating expansion, NASA missions like Hubble bring us closer to the ultimate goal of understanding this remarkable property of our universe.”