Antarctica. Image credit: Ben Holt, Sr.

Researchers have completed the first comprehensive survey of Antarctic ice mass, and it shows the continent is losing ice, mostly from the West Antarctic ice sheet. From 2002 to 2005, Antarctica lost enough ice to raise global sea levels by about 1.2 mm (0.05 inches). The measurements were made by the twin Grace satellites, which detect slight changes in Earth’s gravity field over time. This is the first estimate of Antarctic ice loss to cover the entire ice sheet.

The first-ever gravity survey of the entire Antarctic ice sheet, conducted using data from the NASA/German Aerospace Center Gravity Recovery and Climate Experiment (Grace), concludes that the ice sheet’s mass decreased significantly from 2002 to 2005.

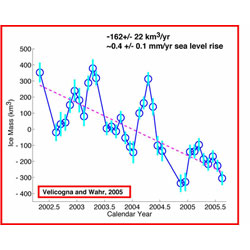

Isabella Velicogna and John Wahr, both from the University of Colorado, Boulder, conducted the study. They demonstrated for the first time that Antarctica’s ice sheet has lost a significant amount of mass since 2002. The estimated mass loss was enough to raise global sea level by about 1.2 millimeters (0.05 inches) during the survey period, or about 13 percent of the overall observed sea level rise for the same period. The researchers found that Antarctica’s ice sheet decreased by 152 (plus or minus 80) cubic kilometers of ice annually between April 2002 and August 2005.
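The annual ice-volume loss and the sea-level figure quoted later (about 0.4 millimeters per year) are linked by simple arithmetic. The sketch below is a back-of-the-envelope check, not the researchers' method: it assumes an ice density of 917 kg/m³, fresh meltwater at 1,000 kg/m³ and a global ocean area of about 3.61 × 10^14 m², and it ignores complications such as ice that is already floating. The function name `sea_level_mm_per_year` is hypothetical.

```python
# Rough conversion of an annual ice-volume loss into a global sea-level
# contribution. The constants are assumed round numbers, not values from the study.
ICE_DENSITY_KG_M3 = 917.0      # assumed density of glacial ice
WATER_DENSITY_KG_M3 = 1000.0   # assumed density of the resulting fresh water
OCEAN_AREA_M2 = 3.61e14        # assumed area of the global ocean surface


def sea_level_mm_per_year(ice_km3_per_year: float) -> float:
    """Hypothetical helper: sea-level rise (mm/yr) from losing this much ice per year."""
    ice_m3 = ice_km3_per_year * 1e9              # 1 km^3 = 1e9 m^3
    mass_kg = ice_m3 * ICE_DENSITY_KG_M3         # mass of the lost ice
    water_m3 = mass_kg / WATER_DENSITY_KG_M3     # same mass expressed as liquid water
    rise_m = water_m3 / OCEAN_AREA_M2            # spread evenly over the ocean surface
    return rise_m * 1000.0                       # metres -> millimetres


central = sea_level_mm_per_year(152.0)
# The conversion is linear, so the ±80 km^3 uncertainty converts directly.
spread = sea_level_mm_per_year(80.0)
print(f"{central:.2f} ± {spread:.2f} mm per year")  # prints "0.39 ± 0.20 mm per year"
```

Under these assumptions the central value comes out near 0.39 mm per year with an uncertainty of roughly ±0.2 mm per year, consistent with the rounded 0.4 millimeters per year given in the article.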

That is about how much water the United States consumes in three months (a cubic kilometer is one trillion liters, or approximately 264 billion gallons of water). This corresponds to a contribution of about 0.4 millimeters (0.016 inches) per year to global sea level rise. Most of the mass loss came from the West Antarctic ice sheet.
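The liters and gallons quoted for a cubic kilometer follow from two unit conversions. This short sketch reproduces them, using the exact definition of the US gallon (3.785411784 liters); the variable names are illustrative only.

```python
# Express one cubic kilometre in litres and in US gallons.
LITRES_PER_M3 = 1000
M3_PER_KM3 = 1000 ** 3                 # (1,000 m)^3 = 1e9 m^3
LITRES_PER_US_GALLON = 3.785411784     # exact by definition of the US gallon

litres_per_km3 = M3_PER_KM3 * LITRES_PER_M3
gallons_per_km3 = litres_per_km3 / LITRES_PER_US_GALLON

print(f"1 km^3 = {litres_per_km3:,} litres")                    # 1,000,000,000,000 litres
print(f"1 km^3 is about {gallons_per_km3 / 1e9:.0f} billion US gallons")  # about 264
```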

“Antarctica is Earth’s largest reservoir of fresh water,” Velicogna said. “The Grace mission is unique in its ability to measure mass changes directly for entire ice sheets and can determine how Earth’s mass distribution changes over time. Because ice sheets are a large source of uncertainties in projections of sea level change, this represents a very important step toward more accurate prediction, and has important societal and economic impacts. As more Grace data become available, it will become feasible to search for longer-term changes in the rate of Antarctic mass loss,” she said.

Measuring variations in Antarctica’s ice sheet mass is difficult because of the ice sheet’s size and complexity. Grace overcomes these difficulties by surveying the entire ice sheet and tracking the balance between mass changes in the interior and in coastal areas.

Previous estimates have used various techniques, each with its own limitations and uncertainties, and none able to monitor the mass of the ice sheet as a whole. Even studies that synthesized results from several techniques, such as the assessment by the Intergovernmental Panel on Climate Change, suffered from a lack of data in critical regions.

“Combining Grace data with data from other instruments such as NASA’s Ice, Cloud and Land Elevation Satellite; radar; and altimeters that are more effective for studying individual glaciers is expected to substantially improve our understanding of the processes controlling ice sheet mass variations,” Velicogna said.

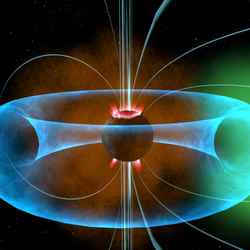

The Antarctic mass loss findings were enabled by the ability of the identical twin Grace satellites to track minute changes in Earth’s gravity field resulting from regional changes in the planet’s mass distribution. Movements of ice, air, water and solid earth reflect weather patterns, climate change and even earthquakes. To track these changes, Grace measures micron-scale variations in the 220-kilometer (137-mile) separation between the two satellites, which fly in formation.
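To give a sense of scale for "micron-scale variations," the calculation below compares a change of one micron with the 220-kilometer separation between the satellites. The one-micron value is an illustrative assumption, not a stated specification of the mission.

```python
# Fractional size of a micron-scale change in the satellite separation.
SEPARATION_M = 220e3   # 220 km between the twin satellites (from the article)
CHANGE_M = 1e-6        # illustrative 1-micron change in that distance (assumed)

fractional_change = CHANGE_M / SEPARATION_M
print(f"{fractional_change:.1e}")  # 4.5e-12, roughly 5 parts per trillion
```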

Grace is managed for NASA by the Jet Propulsion Laboratory, Pasadena, Calif. The University of Texas Center for Space Research has overall mission responsibility. GeoForschungsZentrum Potsdam (GFZ), Potsdam, Germany, is responsible for German mission elements. Science data processing, distribution, archiving and product verification are managed jointly by JPL, the University of Texas and GFZ. The results will appear in this week’s issue of Science.

For information about NASA and agency programs on the Web, visit:

http://www.nasa.gov/home

For more information about Grace on the Web, visit:

http://www.csr.utexas.edu/grace and http://www.gfz-potsdam.de/grace

For University of Colorado information call Jim Scott at: (303) 492-3114.

JPL is managed for NASA by the California Institute of Technology in Pasadena.

Original Source: NASA News Release