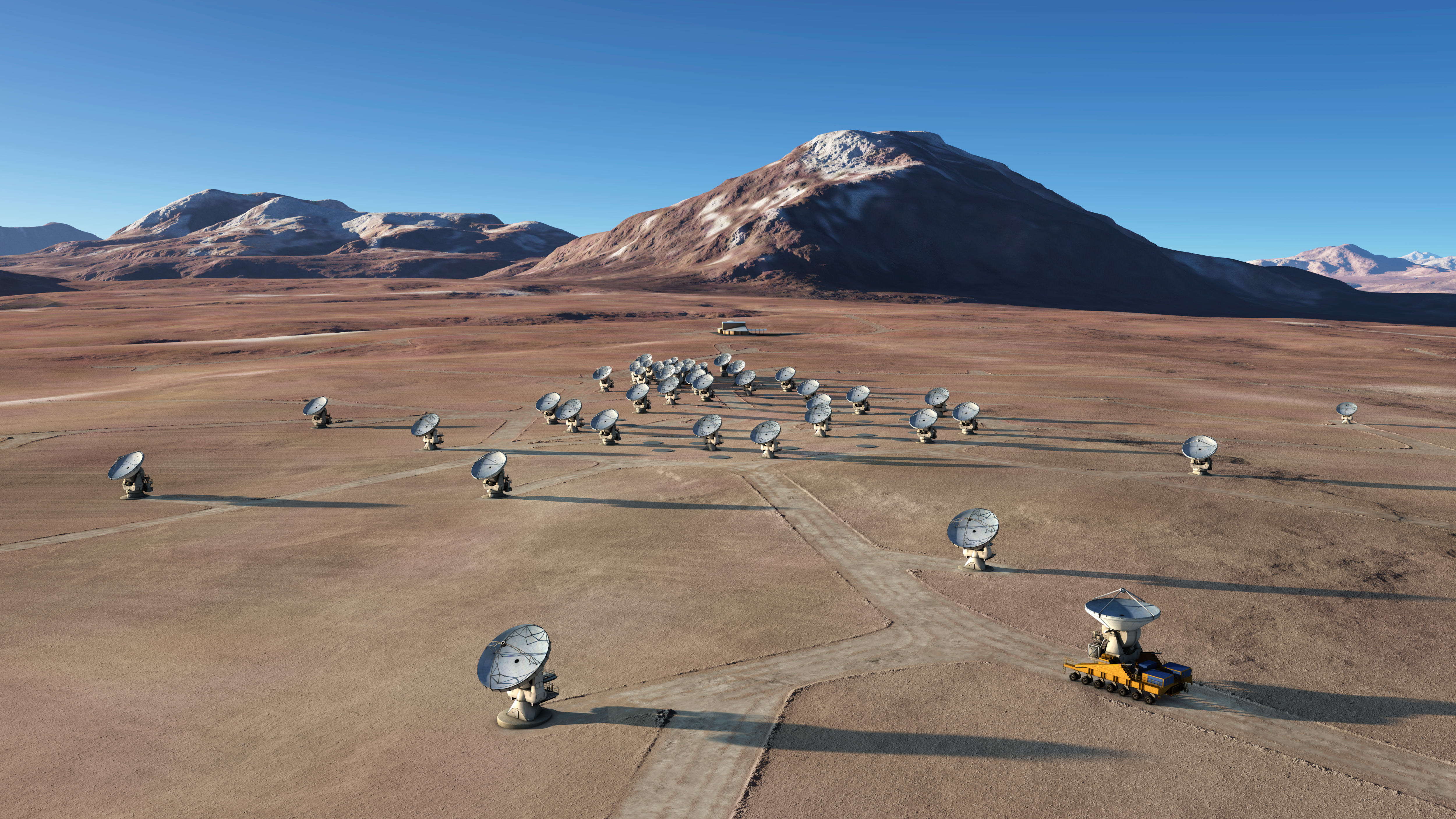

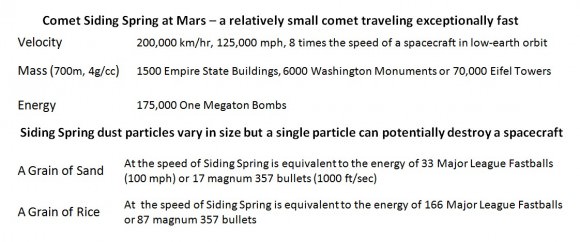

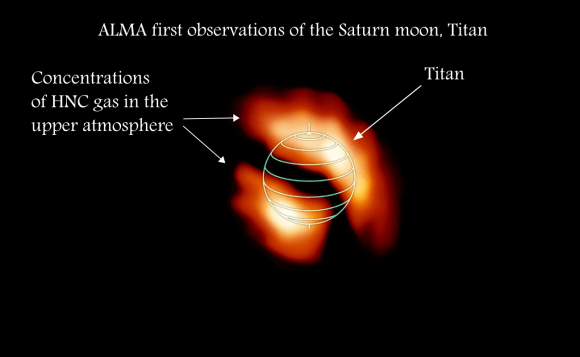

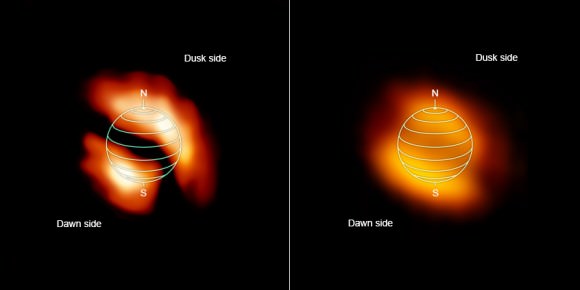

Astronomers using their newest asset in Chile's high-altitude Atacama Desert have uncovered a new mystery on Titan. After turning the now fully deployed Atacama Large Millimeter/submillimeter Array (ALMA) from comets to Saturn's largest moon, they found in a single 3-minute observation that organic molecules in Titan's atmosphere are distributed lopsidedly. These molecules should be spread smoothly across the atmosphere, but they are not.

The Cassini–Huygens mission to Saturn has been revealing Titan's oddities to us: lakes and rain clouds of methane, and an atmosphere thicker than Earth's. But the new ALMA observations underscore both how much more there is to learn about Titan and how capable the ALMA array is.

The ALMA astronomers called it a “brief 3 minute snapshot of Titan.” They found zones of organic molecules offset from Titan's poles. The molecules observed were hydrogen isocyanide (HNC) and cyanoacetylene (HC3N). The finding came as a complete surprise to astrochemist Martin Cordiner of NASA's Goddard Space Flight Center in Greenbelt, Maryland, lead author of the work published in the latest issue of Astrophysical Journal Letters.

The NASA Goddard press release states, “At the highest altitudes, the gas pockets appeared to be shifted away from the poles. These off-pole locations are unexpected because the fast-moving winds in Titan’s middle atmosphere move in an east–west direction, forming zones similar to Jupiter’s bands, though much less pronounced. Within each zone, the atmospheric gases should, for the most part, be thoroughly mixed.”

When one hears of a strange, skewed distribution of organic compounds somewhere, the first thing that comes to mind is life. However, the astrochemists in this study do not claim to have found a signature of life. There are, in fact, other explanations that involve simpler forces of nature. The Sun and Saturn's magnetic field deliver light and energized particles to Titan's atmosphere, and this energy drives the formation of complex organic molecules there. But how these two molecules, HNC and HC3N, came to be so unevenly distributed is, as the astrochemists said, “very intriguing.” Cordiner stated, “This is an unexpected and potentially groundbreaking discovery… a fascinating new problem.”

The press release from the National Radio Astronomy Observatory states, “studying this complex chemistry may provide insights into the properties of Earth’s very early atmosphere.” The new observations also deepen our understanding of Titan, which serves as a second data point (after Earth) for understanding organic chemistry on exoplanets, which may number in the hundreds of billions in our Milky Way galaxy alone. Astronomers will need such reference points to interpret the many exoplanets that will be observed to harbor organic compounds. With Titan and Earth as points of comparison, they can better judge what is happening on distant exoplanets, whether it involves life or not.

(Image Credit: NRAO/AUI/NSF)

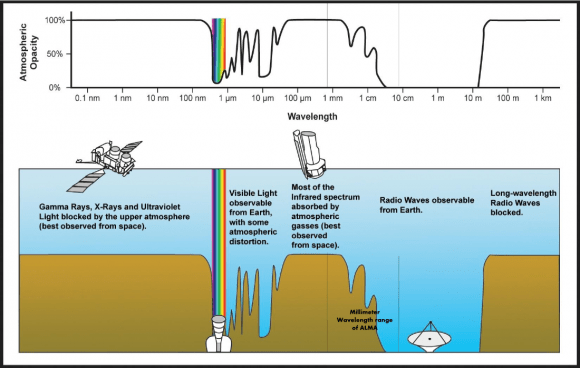

This brief observation also showcases the new astronomical asset high in the Chilean Andes. ALMA represents the state of the art in millimeter and submillimeter astronomy, a field that holds great promise. Back around 1980, at Kitt Peak National Observatory in Arizona, an oddity stood alongside the great visible-light telescopes: a millimeter-wavelength dish. That dish marked the beginning of radio astronomy in the 1 to 10 millimeter wavelength range, so millimeter astronomy is only about 35 years old. These wavelengths border the far infrared and carry many emission and absorption lines from cold objects, often from molecules and especially organic ones. At its finest, ALMA has about 10 times the resolving power of the Hubble Space Telescope.
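As a rough check on that factor of 10, a single mirror's diffraction limit is about $\theta \approx 1.22\,\lambda/D$, while an interferometer such as ALMA resolves roughly $\theta \approx \lambda/B$, where $B$ is its longest baseline. The inputs below are illustrative assumptions, not values from the Titan study: Hubble's 2.4 m mirror observing at 500 nm, and ALMA observing near 0.32 mm with baselines of about 16 km (1 radian is about 206,265 arcseconds).

$$
\begin{aligned}
\theta_{\text{Hubble}} &\approx 1.22 \times \frac{5.0\times10^{-7}\ \text{m}}{2.4\ \text{m}} \approx 2.5\times10^{-7}\ \text{rad} \approx 0.05'' \\
\theta_{\text{ALMA}} &\approx \frac{3.2\times10^{-4}\ \text{m}}{1.6\times10^{4}\ \text{m}} = 2.0\times10^{-8}\ \text{rad} \approx 0.004'' \\
\frac{\theta_{\text{Hubble}}}{\theta_{\text{ALMA}}} &\approx \frac{2.5\times10^{-7}}{2.0\times10^{-8}} \approx 13
\end{aligned}
$$

Under these assumptions ALMA's finest resolution comes out roughly an order of magnitude sharper than Hubble's, in line with the figure quoted in the article; at longer wavelengths or on shorter baselines the advantage shrinks.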

Earth’s atmosphere obstructs observations of the universe at these wavelengths. It is no coincidence that our eyes evolved to see visible light, one of the bands the atmosphere lets through. That band is very narrow, leaving a great, wide world of light waves to explore with detectors other than our eyes.

In the millimeter range, water vapor, oxygen, and nitrogen are strong absorbers, and some wavelengths are completely blocked. Between those absorbed bands lie transparent windows, and ALMA is designed to observe at the wavelengths accessible from the ground. The Chajnantor plateau in the Atacama Desert, at 5000 meters (16,400 ft), is the driest, clearest location in the world for millimeter astronomy outside the high-altitude regions of Antarctica.

At this altitude and over this particular desert, there is very little atmospheric water. ALMA consists of 66 dishes: 54 measuring 12 meters (39 ft) across and 12 measuring 7 meters (23 ft). However, a good location alone did not make ALMA. The 35-year history of millimeter-wavelength astronomy has been a game of catch-up. Detecting these wavelengths requires very sensitive, low-noise detectors and electronics. Steady improvements in solid-state electronics from the late 1970s to today, together with the development of cryostats to maintain low temperatures, have made the new observations of Titan possible. Cassini, passing within about 1000 kilometers of Titan, could not make these observations, but ALMA, some 1.25 billion kilometers (775 million miles) away, could.

The ALMA telescope array was developed by a consortium led by the United States’ National Science Foundation (NSF) and European countries through ESO (European Organisation for Astronomical Research in the Southern Hemisphere). The first concepts were proposed in 1999, and Japan joined the consortium in 2001.

The prototype ALMA telescope was tested at the site of the VLA in New Mexico in 2003. That prototype now stands on Kitt Peak, having replaced the original millimeter-wavelength dish that started this branch of astronomy in the 1980s. The first dishes arrived in 2007, followed the next year by the huge transporters that move each dish into place at such high altitude. The German-made transporters needed cabins with an oxygen supply so that the drivers could work in the thin air at 5000 meters; one was featured on an episode of the television program Monster Moves. By 2011, test observations were under way, and by 2013 the first science program had begun. This year, the full array was in place, and the second science program produced the Titan observations. Many more will follow. ALMA, which can operate 24 hours per day, should remain the most powerful instrument in its class for about 10 years, until another array in Africa comes online.

References:

“ALMA Measurements of the HNC and HC3N Distributions in Titan’s Atmosphere,” M. A. Cordiner et al., Astrophysical Journal Letters