
According to Wikipedia, a Journal Club is a group of individuals who meet regularly to critically evaluate recent articles in the scientific literature. Since this is Universe Today, if we occasionally stray into critically evaluating each other’s critical evaluations, that’s OK too.

And of course, the first rule of Journal Club is… don’t talk about Journal Club. So, without further ado – today’s journal article is about a new addition to the Standard Model of fundamental particles.

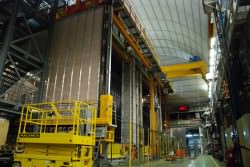

The good folk at the CERN Large Hadron Collider finished off 2011 with some vague murmurings about the Higgs Boson – which might have been kind-of sort-of discovered in the data already, but due to the degree of statistical noise around it, no-one’s willing to call it really found yet.

Since there is probably a Nobel prize in it – this seems like a good decision. It is likely that a one-way-or-the-other conclusion will be possible around this time next year – either because collisions to be run over 2012 reveal some critical new data, or because someone sifting through the mountain of data already produced will finally nail it.

But in the meantime, they did find something in 2011. There is a confirmed Observation of a new chi_b state in radiative transitions to Upsilon(1S) and Upsilon(2S) at the ATLAS experiment – or, in a nutshell… we hit Bottomonium.

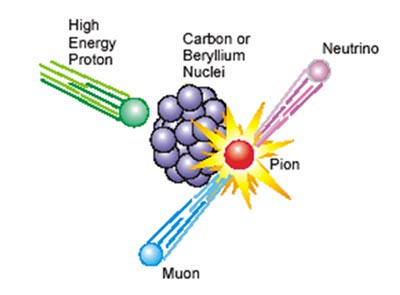

In the lexicon of sub-atomic particle physics, the term Quarkonium is used to describe a particle whose constituents comprise a quark and its own anti-quark. So for example you can have Charmonium (a charm quark and a charm anti-quark) and you can have Bottomonium (a bottom quark and a bottom anti-quark).

The new Chi_b (3P) particle has been reported as a boson – which is technically correct, since it has integer spin, while fermions (such as quarks and leptons) have half-integer spins. But it’s not an elementary boson like photons, gluons or the (theoretical) Higgs – it’s a composite boson made of a quark and an anti-quark. So, it is perhaps less confusing to consider it a meson (which is a bosonic hadron). Like other mesons, Chi_b (3P) is a hadron that would not be commonly found in nature. It just appears briefly in particle accelerator collisions before it decays.
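For anyone who wants to check the integer-spin claim, here is a rough Python sketch of the bookkeeping. It is not taken from the ATLAS paper: the function names are made up for illustration, and it assumes the standard rule for adding angular momenta, plus one unit of orbital angular momentum for a "P" state.

```python
from fractions import Fraction

def allowed_totals(j1, j2):
    """Totals from coupling two angular momenta: |j1 - j2| up to j1 + j2 in unit steps."""
    j1, j2 = Fraction(j1), Fraction(j2)
    low, high = abs(j1 - j2), j1 + j2
    return [low + k for k in range(int(high - low) + 1)]

def statistics(spin):
    """Integer spin means boson; half-integer spin means fermion."""
    return "boson" if Fraction(spin).denominator == 1 else "fermion"

HALF = Fraction(1, 2)

# A quark and an anti-quark each carry spin 1/2, so the pair has spin 0 or 1.
pair_spins = allowed_totals(HALF, HALF)

# Assumption for illustration: orbital angular momentum L = 1 (the "P" in 3P).
meson_spins = sorted({j for s in pair_spins for j in allowed_totals(s, 1)})

print([statistics(j) for j in meson_spins])  # every combination is integer
print(statistics(HALF))                      # a lone quark is a fermion
```

Two half-integer spins always add up to an integer, which is why any quark and anti-quark pair, Bottomonium included, ends up on the boson side of the ledger.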

So, comments? Has the significance of this new finding been muted because the discoverers thought it would just prompt a lot of bottom jokes? Is Chi_b (3P) the ‘Claytons Higgs’ (the boson you have when you’re not having a Higgs)? Want to suggest an article for the next edition of Journal Club?

Otherwise, have a great 2012.

Today’s article:

The ATLAS collaboration: Observation of a new chi_b state in radiative transitions to Upsilon(1S) and Upsilon(2S) at the ATLAS experiment.