
Key to the astronomical modeling process by which scientists attempt to understand our universe is a comprehensive knowledge of the fundamental constants that go into these models. These constants are generally measured to exceptionally high precision in laboratories. Astronomers then assume these constants are just that: constant. This generally seems to be a good assumption, since the models often produce largely accurate pictures of our universe. But just to be sure, astronomers like to check that these constants haven’t varied across space or time. Making sure, however, is a difficult challenge. Fortunately, a recent paper has suggested that we may be able to probe the masses of protons and electrons (or at least their ratio) by looking at a relatively common molecule: methanol.

The new report is based on the complex spectrum of the methanol molecule. In individual atoms, photons are generated by electron transitions between atomic orbitals, since atoms have no other way to store and release energy. Molecules have more options: the chemical bonds between their component atoms can store energy in vibrational modes, much as masses connected by springs can vibrate. Additionally, molecules lack spherical symmetry and can store energy by rotating. For this reason, the spectra of cool stars show far more absorption lines than those of hot stars, since the lower temperatures allow molecules to form.
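To see why these molecular energy levels carry information about particle masses, here is a minimal sketch using the textbook rigid-rotor and harmonic-oscillator approximations for a simple diatomic molecule. Methanol itself is far more complicated, so this is only illustrative. The function names and the molecular constants (a rotational constant B of 50 GHz and a 60,000 GHz vibration) are hypothetical. The sketch assumes the bond length and bond stiffness, which are set by the electrons, stay fixed while the nuclear masses change.

```python
import math


def rotor_lines_ghz(b_ghz: float, j_max: int) -> list[float]:
    """Rigid-rotor emission lines J -> J-1 for J = 1..j_max, at nu = 2*B*J."""
    return [2.0 * b_ghz * j for j in range(1, j_max + 1)]


def rescale_for_mass(b_ghz: float, vib_ghz: float, mass_factor: float) -> tuple[float, float]:
    """Rescale the rotational constant B and the vibrational frequency when the
    reduced mass is multiplied by mass_factor.

    Assumes fixed bond length and bond stiffness (both set by the electrons):
    B scales as 1/(reduced mass), the vibration as 1/sqrt(reduced mass).
    """
    return b_ghz / mass_factor, vib_ghz / math.sqrt(mass_factor)


# Hypothetical diatomic molecule with B = 50 GHz and a 60,000 GHz vibration.
print(rotor_lines_ghz(50.0, 3))               # [100.0, 200.0, 300.0]
print(rescale_for_mass(50.0, 60_000.0, 4.0))  # (12.5, 30000.0)
```

Rotational frequencies fall faster with increasing nuclear mass than vibrational ones do. This is one reason different lines respond by different amounts to a change in the mass ratio.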

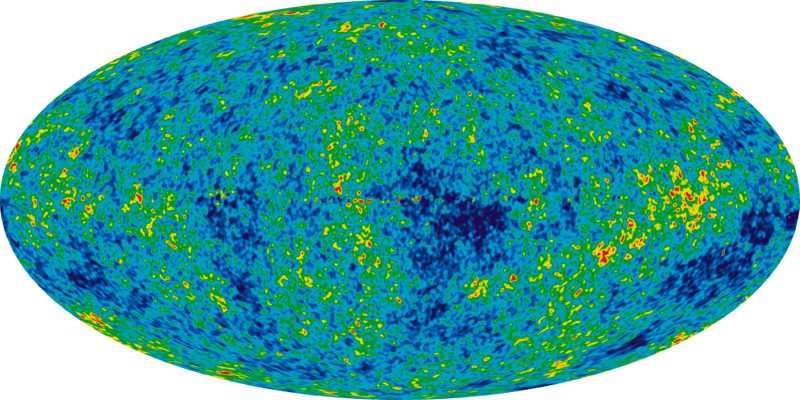

Many of these spectral features fall in the microwave portion of the spectrum, and some are extremely sensitive to quantum mechanical effects that, in turn, depend on the precise masses of the proton and electron. If those masses were to change, the positions of some spectral lines would shift as well. By comparing the observed line positions with their expected laboratory positions, astronomers can gain valuable insight into whether, and by how much, these fundamental values change.
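The comparison can be made concrete with the first-order relation Δν/ν = K Δμ/μ, which is standard in this field. Here μ is the proton-to-electron mass ratio and K is a line-specific sensitivity coefficient. In the simple rigid-rotor picture, K = −1 for a pure rotational line. A cloud's own Doppler shift is unknown, so this minimal sketch compares two lines with different K values, which makes the velocity cancel. The K values, the cloud velocity, and the function names are hypothetical, and sign conventions for K vary between papers. This illustrates the general idea and is not necessarily the authors' exact procedure.

```python
C_M_PER_S = 299_792_458.0  # speed of light in m/s


def apparent_velocity(v_true: float, k: float, dmu: float) -> float:
    """First-order apparent radial velocity of a line with sensitivity K
    when mu changes by the fraction dmu: V = v_true - c*K*dmu."""
    return v_true - C_M_PER_S * k * dmu


def delta_mu_over_mu(v1: float, v2: float, k1: float, k2: float) -> float:
    """Invert two lines' apparent velocities: dmu/mu = (V1 - V2) / (c (K2 - K1)).
    The unknown true velocity of the gas cancels in the difference V1 - V2."""
    if k1 == k2:
        raise ValueError("the two lines need different sensitivity coefficients")
    return (v1 - v2) / (C_M_PER_S * (k2 - k1))


# Hypothetical cloud moving at 5 km/s with a built-in change dmu/mu = 1e-7.
k_a, k_b = -1.0, -30.0
v_a = apparent_velocity(5_000.0, k_a, 1e-7)
v_b = apparent_velocity(5_000.0, k_b, 1e-7)
print(delta_mu_over_mu(v_a, v_b, k_a, k_b))  # recovers approximately 1e-7
```

Lines whose K values are far apart turn a tiny change in μ into a larger velocity difference. This is why strongly mass-sensitive transitions are valuable.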

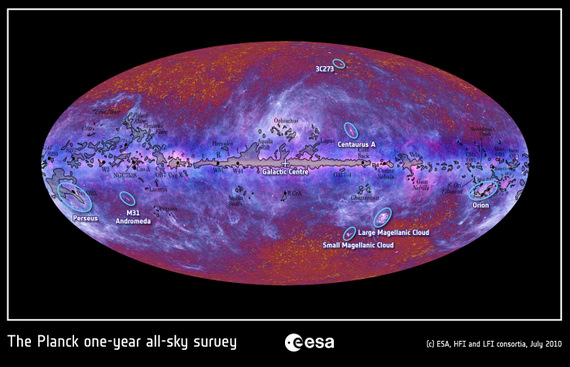

The primary difficulty is that, in the grand scheme of things, methanol (CH3OH) is rare, since our universe is about 98% hydrogen and helium by mass. The remaining 2% is made up of every other element, with oxygen and carbon being the next most common. Methanol is thus built from three of the four most common elements, but those atoms still have to find each other to form the molecule. On top of that, the molecules must sit in the right temperature range: too hot and the molecule is broken apart; too cold and there isn’t enough energy to drive emission we can detect. Given how rarely these conditions are met, you might expect that finding enough methanol, especially across the galaxy or the wider universe, would be challenging.

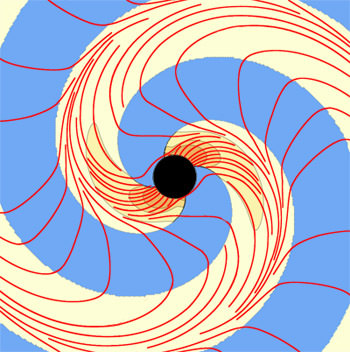

Fortunately, methanol is one of the few molecules that readily form astronomical masers. Masers are the microwave equivalent of lasers. When more molecules occupy an excited state than the lower state (a population inversion), a passing photon at the right frequency stimulates excited molecules to emit identical photons, and those photons stimulate further emission in a cascade. This can greatly enhance the brightness of a cloud containing methanol, increasing the distance at which it can be readily detected.
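A rough sense of why this matters for detection can be had from two simple relations. In the unsaturated regime, a maser amplifies incoming radiation exponentially with the magnitude of its (negative) optical depth. Because flux falls off as the inverse square of distance, a source that is N times brighter can be seen √N times farther at a fixed telescope sensitivity. This is a minimal sketch under those assumptions. It ignores maser saturation and beaming geometry, and the gain value of 10 and the function names are hypothetical.

```python
import math


def maser_amplification(gain_length: float) -> float:
    """Brightness gain of an unsaturated maser, I/I0 = exp(|tau|), where
    gain_length is the magnitude of the (negative) optical depth."""
    return math.exp(gain_length)


def detection_range_factor(brightness_boost: float) -> float:
    """Flux falls off as 1/d**2, so at fixed sensitivity a source that is
    brightness_boost times brighter is visible sqrt(boost) times farther."""
    return math.sqrt(brightness_boost)


boost = maser_amplification(10.0)  # hypothetical |tau| = 10, about 2.2e4
print(boost, detection_range_factor(boost))
```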

By studying methanol masers within the Milky Way using this technique, the authors found that if the ratio of the electron mass to the proton mass does change, it does so by less than three parts in one hundred million (3 × 10⁻⁸). Studies using ammonia as the tracer molecule (which can also form masers) have reached comparable conclusions.
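To get a feel for how small a limit of three parts in one hundred million is, a minimal sketch can translate it into the velocity offset it would produce between two lines. The sketch uses the first-order relation Δν/ν = K Δμ/μ, where K is a line-specific sensitivity coefficient. The electron-to-proton ratio is 1/μ, so its fractional change has the same magnitude as Δμ/μ. The line pair with ΔK = 10 and the function name are hypothetical.

```python
C_M_PER_S = 299_792_458.0  # speed of light in m/s


def velocity_offset(delta_mu_over_mu: float, delta_k: float) -> float:
    """Apparent velocity difference (m/s) between two lines whose sensitivity
    coefficients differ by delta_k, to first order: dV = c * dK * dmu/mu."""
    return C_M_PER_S * delta_k * delta_mu_over_mu


# Hypothetical line pair with dK = 10 at the quoted 3e-8 limit: roughly 90 m/s.
print(velocity_offset(3e-8, 10.0))
```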