In 1971 physicist Stephen Hawking suggested that there might be “mini” black holes all around us that were created by the Big Bang. The violence of the rapid expansion following the beginning of the Universe could have squeezed concentrations of matter to form minuscule black holes, so small they can’t even be seen with a regular microscope. But what if these mini black holes were everywhere, and in fact, what if they make up the fabric of the Universe? A new paper from two researchers in California proposes this idea.

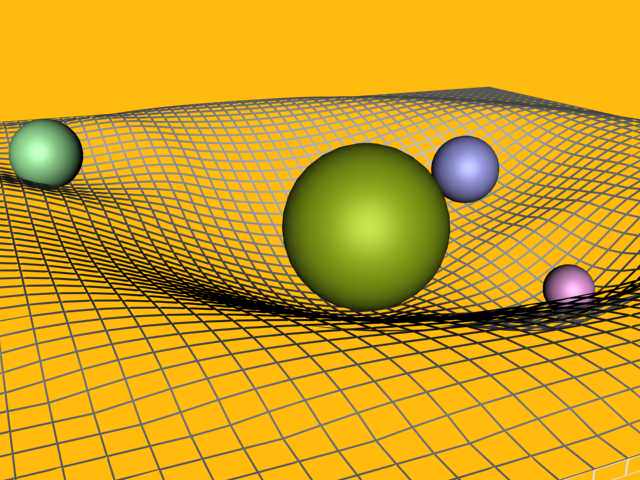

Black holes are regions of space where gravity is so strong that not even light can escape, and are usually thought of as large areas of space, such as the supermassive black holes at the center of galaxies. No observational evidence of mini-black holes exists but, in principle, they could be present throughout the Universe.

Since black holes have gravity, they also have mass. But a mini black hole would have very little mass, so its gravity would be weak. However, many physicists have assumed that on the tiniest scale, the Planck scale, gravity regains its strength.
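To give a feel for how small the Planck scale is, here is a rough sketch that computes the standard Planck mass and Planck length from textbook constants, then the Schwarzschild radius of a black hole with exactly one Planck mass. The function names are made up for illustration and the calculation is not taken from the paper; it simply applies the usual formulas, which may not hold at this scale.

```python
import math

# Physical constants in SI units (CODATA values, rounded).
G = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
HBAR = 1.054571817e-34  # reduced Planck constant, J s
C = 2.99792458e8        # speed of light, m/s


def planck_mass_kg():
    """Planck mass: sqrt(hbar * c / G)."""
    return math.sqrt(HBAR * C / G)


def planck_length_m():
    """Planck length: sqrt(hbar * G / c^3)."""
    return math.sqrt(HBAR * G / C**3)


def schwarzschild_radius_m(mass_kg):
    """Classical event-horizon radius of a non-rotating black hole: 2 G M / c^2."""
    return 2 * G * mass_kg / C**2


if __name__ == "__main__":
    m_p = planck_mass_kg()
    l_p = planck_length_m()
    r_s = schwarzschild_radius_m(m_p)
    print(f"Planck mass:   {m_p:.3e} kg")
    print(f"Planck length: {l_p:.3e} m")
    print(f"Horizon radius of a Planck-mass black hole: {r_s:.3e} m")
    print(f"Ratio of that radius to the Planck length: {r_s / l_p:.3f}")
```

With these formulas, a Planck-mass black hole has a horizon radius of exactly two Planck lengths, which is one way to see why this is the scale at which quantum effects and gravity are expected to meet.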

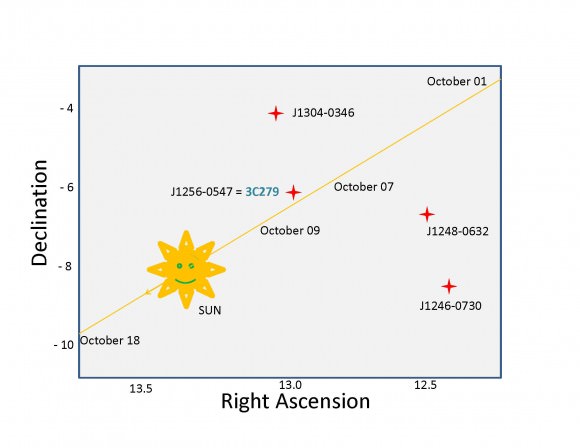

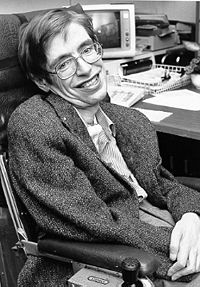

Experiments at the Large Hadron Collider are aimed at detecting mini black holes, but they are hampered by not knowing exactly how a reduced-Planck-mass black hole would behave, say Donald Coyne from UC Santa Cruz (now deceased) and D. C. Cheng from the Almaden Research Center near San Jose.

String theory also proposes that gravity plays a stronger role in higher-dimensional space, and that it is only in our four-dimensional space that gravity appears weak.

Since these dimensions become important only on the Planck scale, it’s at that level that gravity re-asserts itself. And if that’s the case, then mini-black holes become a possibility, say the two researchers.

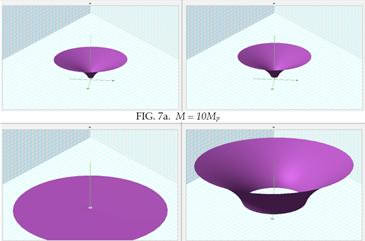

They looked at what properties black holes might have at such a small scale, and determined they could be quite varied.

Black holes lose energy and shrink in size as they do so, eventually vanishing, or evaporating. But this is a very slow process, and only the smallest black holes will have had time to significantly evaporate over the entire 14-billion-year history of the Universe.
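How small is "smallest"? A back-of-the-envelope sketch, assuming the standard textbook Hawking lifetime formula t = 5120 π G² M³ / (ħ c⁴) for a non-rotating, uncharged black hole that emits only photons, gives a rough answer. The function names are hypothetical, and the formula is the usual semiclassical estimate rather than anything from Coyne and Cheng's paper, whose remnant scenario would change the end of the process.

```python
import math

# Physical constants in SI units (CODATA values, rounded).
G = 6.67430e-11         # gravitational constant, m^3 kg^-1 s^-2
HBAR = 1.054571817e-34  # reduced Planck constant, J s
C = 2.99792458e8        # speed of light, m/s
SECONDS_PER_YEAR = 3.15576e7  # Julian year


def evaporation_time_years(mass_kg):
    """Semiclassical Hawking lifetime, assuming photon-only emission."""
    seconds = 5120 * math.pi * G**2 * mass_kg**3 / (HBAR * C**4)
    return seconds / SECONDS_PER_YEAR


def mass_for_lifetime_kg(lifetime_years):
    """Invert the lifetime formula: initial mass that evaporates in the given time."""
    seconds = lifetime_years * SECONDS_PER_YEAR
    return (seconds * HBAR * C**4 / (5120 * math.pi * G**2)) ** (1.0 / 3.0)


if __name__ == "__main__":
    age_of_universe_years = 14e9  # figure used in the article
    m = mass_for_lifetime_kg(age_of_universe_years)
    print(f"Mass that evaporates in 14 billion years: {m:.2e} kg")

    solar_mass_kg = 1.989e30
    t = evaporation_time_years(solar_mass_kg)
    print(f"Lifetime of a solar-mass black hole: {t:.2e} years")
```

Under these assumptions the cutoff is on the order of 10^11 kg, roughly the mass of a small mountain, while a black hole with the mass of the Sun would outlast the current age of the Universe by dozens of orders of magnitude. Because the lifetime scales as the cube of the mass, the result is very sensitive to how small the black hole starts out.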

The quantization of space on this level means that mini-black holes could turn up at all kinds of energy levels. They predict the existence of huge numbers of black hole particles at different energy levels. And these black holes might be so common that perhaps “All particles may be varying forms of stabilized black holes.”

“At first glance the scenario … seems bizarre, but it is not,” Coyne and Cheng write. “This is exactly what would be expected if an evaporating black hole leaves a remnant consistent with quantum mechanics… This would put a whole new light on the process of evaporation of large black holes, which might then appear no different in principle from the correlated decays of elementary particles.”

They say their research needs more experimentation. This may come from the LHC, which could begin to probe the energies at which these kinds of black holes would be produced.

Original paper.

Source: Technology Review