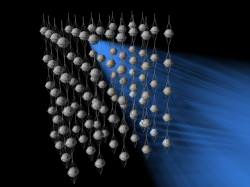

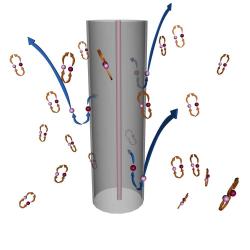

The idea goes like this: Cosmic rays, originating from outside the Solar System, hit the Earth’s atmosphere. In doing so, these highly energetic particles create microscopic aerosols. Aerosols collect in the atmosphere and act as nuclei for water droplet formation. Large-scale cloud cover can result from this microscopic interaction. Cloud cover reflects light from the Sun, therefore cooling the Earth. This “global dimming” effect could hold some answers to the global warming debate as it influences the amount of radiation entering the atmosphere. The flux of cosmic rays, in turn, is highly dependent on the Sun’s magnetic field, which varies over the 11-year solar cycle.

If this theory holds, some questions come to mind: Is the Sun’s changing magnetic field responsible for the amount of global cloud cover? To what degree does this influence global temperatures? Where does that leave man-made global warming? Two research groups have published their work and, perhaps unsurprisingly, have two different opinions…

I always brace myself when I mention “global warming”. I have never come across such an emotive and controversial subject. I get comments from people who support the idea that the human race and our insatiable desire for energy are the root cause of the global increase in temperature. I get anger (big, scary anger!) from people who wholeheartedly believe that we are being conned, and that the “global warming swindle” is a money-making scheme. You just have to look at the discussions that ensue under climate-related stories.

But whatever our opinion, huge quantities of research spending are going into understanding all the factors involved in this worrying upward trend in average temperature.

Cue cosmic rays.

Researchers from the National Polytechnic University in Ukraine take the view that mankind has little or no effect on global warming and that it is purely down to the flux of cosmic radiation (creating clouds). Basically, Vitaliy Rusov and colleagues ran an analysis of the situation and deduced that the carbon dioxide content of the atmosphere has very little effect on global warming. Their observations suggest that global temperature increases are periodic when set against the history of global and solar magnetic field fluctuations, and that the main culprit could be cosmic ray interactions with the atmosphere. Looking back over 750,000 years of palaeotemperature data (historic records of climatic temperature stored in ice cores sampled in the Northern Atlantic ice sheets), Rusov’s theory and data analysis draw the same conclusion: global warming is periodic and intrinsically linked with the solar cycle and Earth’s magnetic field.

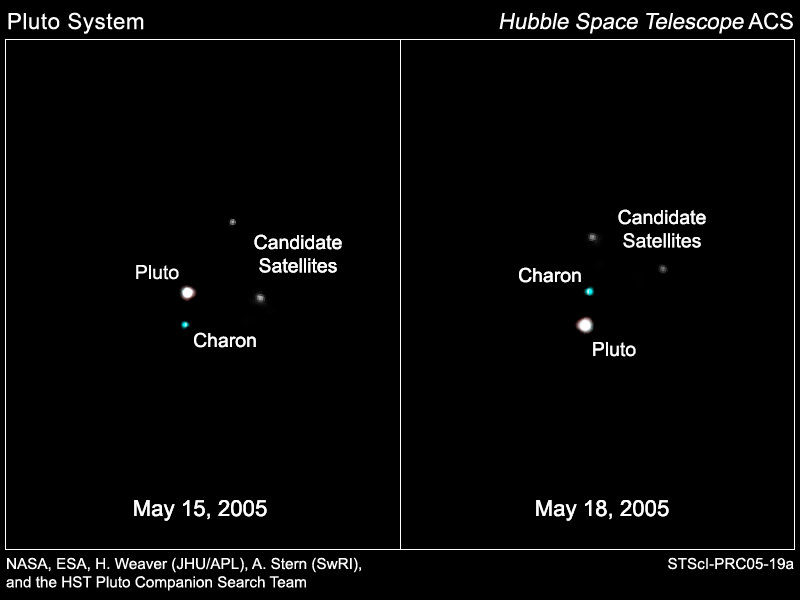

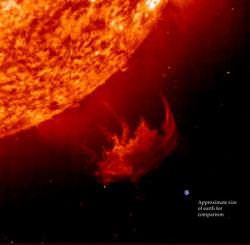

But how does the Sun affect the cosmic ray flux? As the Sun approaches “solar maximum” its magnetic field is at its most stressed and active state. Flares and coronal mass ejections become commonplace, as do sunspots. Sunspots are a magnetic manifestation, showing areas on the solar surface where the powerful magnetic field is upwelling and interacting. It is during this period of the 11-year solar cycle that the reach of the solar magnetic field is most powerful. So powerful, in fact, that galactic cosmic rays (high-energy particles from supernovae etc.) are swept from their paths en route to the Earth by the magnetic field lines carried in the solar wind.

It is on this premise that the Ukrainian research is based. Cosmic ray flux incident on the Earth’s atmosphere is anti-correlated with sunspot number: fewer sunspots means an increase in cosmic ray flux. And what happens when there is an increase in cosmic ray flux? There is an increase in global cloud cover. This is the Earth’s natural global heat shield. At solar minimum (when sunspots are rare) we can expect the albedo (reflectivity) of the Earth to increase, thus reducing the effect of global warming.
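To make “anti-correlated” a little more concrete, here is a minimal Python sketch of how you might measure it with a Pearson correlation coefficient. Everything in it is an assumption for illustration: the two series are synthetic toy numbers (an idealized 11-year sine wave and a made-up linear relation), not real sunspot or cosmic ray measurements, and this is not the method used in either paper discussed here.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# SYNTHETIC, ILLUSTRATIVE DATA ONLY: these are not real observations.
CYCLE_YEARS = 11          # idealized solar cycle length
years = range(44)         # four idealized cycles

# Toy sunspot number: a sine wave oscillating between 0 and 150.
sunspots = [75 + 75 * math.sin(2 * math.pi * y / CYCLE_YEARS) for y in years]

# Assumed toy relation: cosmic ray flux (arbitrary units) falls
# linearly as the sunspot number rises.
cosmic_ray_flux = [100 - 0.1 * s for s in sunspots]

r = pearson(sunspots, cosmic_ray_flux)
print(f"Pearson r between sunspots and cosmic ray flux: {r:.3f}")
```

Because the toy flux is built as a falling straight-line function of the toy sunspot number, the coefficient comes out very close to -1 by construction. Real measurements would be noisier, and a strong negative coefficient on its own would say nothing about whether clouds or temperature follow.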

This is a nice bit of research, with a very elegant mechanism that could physically control the amount of solar radiation heating the atmosphere. However, there is a lot of evidence out there that suggests carbon dioxide emissions are to blame for the current upward trend of average temperature.

Prof. Terry Sloan and Prof. Sir Arnold Wolfendale from the University of Lancaster and University of Durham, UK, step into the debate with the publication “Testing the proposed causal link between cosmic rays and cloud cover”. Using data from the International Satellite Cloud Climatology Project (ISCCP), the UK-based researchers set out to investigate whether the solar cycle has any effect on the amount of global cloud cover. They find that the picture varies with latitude: in some locations cloud cover correlates with cosmic ray flux, in others it does not. The big conclusion from this comprehensive study is that if cosmic rays in some way influence cloud cover, the mechanism can account for at most 23 percent of cloud cover change. There is no evidence to suggest that changes in the cosmic ray flux have any effect on global temperature changes.

Even the cosmic-ray cloud-forming mechanism itself is in doubt. So far, there has been little observational evidence of this phenomenon. And looking at historical data, there has never been a more rapid rise in global temperature than the one we are currently observing.

So could we be clutching at straws here? Are we trying to find answers to the global warming problem when the answer is already right in front of us? Even if global warming can be amplified by natural global processes, mankind sure ain’t helping. There is a known link between carbon dioxide emission and global temperature rise whether we like it or not.

Perhaps taking action on carbon emissions is a step in the right direction while further research is carried out on some of the natural processes that can influence climate change. For now, cosmic rays do not seem to have a significant part to play.

Original source: arXiv blog