
Hiding an object with a cloaking device has been the stuff of science fiction, but over the past few years scientists have successfully brought cloaking technology into reality. There have been limits, however. So far, cloaked objects have been quite small, and researchers have only been able to hide an object in two dimensions, meaning the object becomes visible as soon as the observer changes their point of view. But now a team has created a cloak that can obscure objects in three dimensions. While the device only works in a limited range of wavelengths, the team says that this step should help keep the cloaking field moving forward.

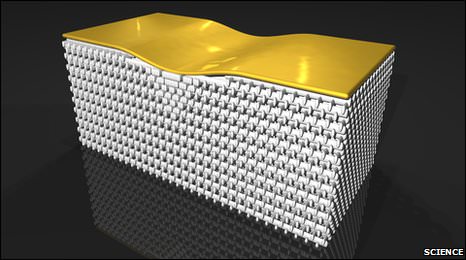

The cloaking technology developed so far does not actually make objects invisible. Instead, it plays tricks with light, misdirecting it so that the objects being “covered” cannot be seen, much like putting a piece of carpet over an object. But in this case, the carpet also disappears.

This field is called transformation optics, and it uses a new class of materials called metamaterials that can guide and control light in new ways.

Researchers from the Karlsruhe Institute of Technology in Germany used photonic crystals, putting them together like a pile of wood to make an invisibility cloak. They used the cloak to conceal a small bump on a gold mirror-like surface. The “cloak” is composed of special lenses that work by partially bending light waves to suppress light scattering from the bump. To the observer, the mirror appears flat, so you can’t tell there is something on the mirror.

“It is composed of photonic polymer that is commercially available,” said Tolga Ergin, who led the research team, speaking on the AAAS Science podcast. “The ratio between polymer and air is changed locally in space, and by choosing the right distribution of the local filling factor, you can achieve the needed cloaking. We were surprised the cloaking effect is that good.”
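To make the idea of tuning the polymer-to-air ratio concrete, here is a minimal sketch of how a local fill fraction could translate into an effective refractive index. It is not the team's design method. It assumes a simple volume average of the permittivities, and the polymer index of 1.52 and the function name `effective_index` are illustrative placeholders, not values from the research.

```python
import math


def effective_index(fill_fraction, n_polymer=1.52, n_air=1.0):
    """Estimate the effective refractive index of a polymer/air mixture.

    Assumption: the effective permittivity is the volume-weighted average
    of the two permittivities (n squared). n_polymer=1.52 is a placeholder.
    """
    if not 0.0 <= fill_fraction <= 1.0:
        raise ValueError("fill_fraction must be between 0 and 1")
    eps = fill_fraction * n_polymer**2 + (1.0 - fill_fraction) * n_air**2
    return math.sqrt(eps)


# More polymer in a given region means a higher effective index there.
for f in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"fill fraction {f:.2f} -> effective index {effective_index(f):.3f}")
```

Under this toy model, varying the fill fraction from point to point gives a smoothly varying index between that of air and that of solid polymer, which is the kind of spatial control the quote describes.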

The “invisibility” occurs in the infrared part of the spectrum: the cloaking effect is observed at wavelengths down to 1.3 to 1.4 microns, a range currently used for telecommunications.
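For readers who think in frequencies rather than wavelengths, the short sketch below converts the quoted 1.3 and 1.4 micron figures to terahertz using the standard relation f = c / λ. The function name `wavelength_um_to_thz` is purely illustrative, and vacuum wavelengths are assumed.

```python
C = 299_792_458.0  # speed of light in vacuum, in metres per second


def wavelength_um_to_thz(wavelength_um):
    """Convert a vacuum wavelength in microns to a frequency in terahertz."""
    if wavelength_um <= 0:
        raise ValueError("wavelength must be positive")
    wavelength_m = wavelength_um * 1e-6
    return C / wavelength_m / 1e12


for wl in (1.3, 1.4):
    print(f"{wl} microns -> {wavelength_um_to_thz(wl):.2f} THz")
```

Both values come out a little above 200 THz, well below the frequencies of visible light.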

So, how practical is this device?

“Applications are a tough question,” said Ergin. “Carpet cloaks and general cloaking devices are just beautiful and exciting benchmarks to show what transformation optics can do. There have been proposals in the field of transformation optics for different devices such as beam concentrators, beam shifters, or super antennas which concentrate light from all directions and much, much more. So it is really hard to say what the future will bring in applications. The field is large and the possibilities are large.”

“Cloaking structures have been very exciting to mankind for a very long time,” Ergin continued. “I think our team succeeded in pushing the results of transformation optics one step further because we realized the cloaking structure in three dimensions.”

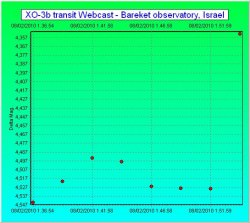

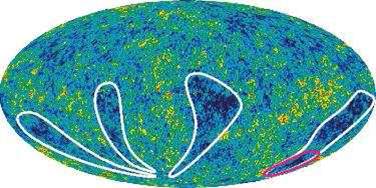

Computer simulation of a microscope image of the “bump” that is to be cloaked. The viewing angle changes with time.

Sources: Science, Science Podcast