Ever since astronomers first began using telescopes to get a better look at the heavens, they have struggled with a basic conundrum. Besides magnifying an object, a telescope must also resolve its fine details if it is to help us understand it. Doing this requires larger and larger light-collecting mirrors, which in turn means instruments of greater size, cost, and complexity.

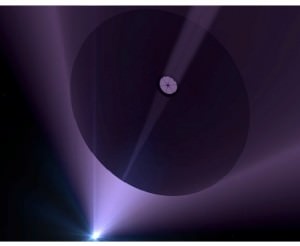

However, scientists at NASA’s Goddard Space Flight Center are working on an inexpensive alternative. Instead of relying on large-aperture telescopes, which are big and impractical, they have proposed a device that could resolve tiny details while being a fraction of the size. Known as the photon sieve, it is being developed specifically to study the Sun’s corona in the ultraviolet.

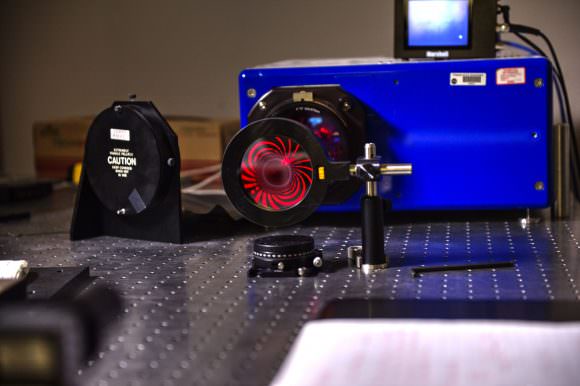

Basically, the photon sieve is a variation on the Fresnel zone plate, a type of optic that consists of closely spaced concentric rings alternating between transparent and opaque. Unlike conventional telescopes, which focus light through refraction or reflection, these plates work by diffraction: light passing through the transparent rings spreads out, and on the far side the diffracted waves overlap and reinforce one another at a specific point, forming an image that can be recorded.
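To make the ring geometry concrete, here is a minimal sketch, assuming an ideal plate illuminated by a single wavelength from far away. The edge of zone n sits where the path from that edge to the focus is n half-wavelengths longer than the on-axis focal length f, which gives r_n = sqrt(n·λ·f + (n·λ/2)²). The function name and the 30.4 nm / 1 m values are hypothetical, chosen purely for illustration, and are not taken from the Goddard design.

```python
import math


def fresnel_zone_radii(wavelength_m: float, focal_length_m: float, n_zones: int) -> list[float]:
    """Return the outer radius (in metres) of each of the first n_zones Fresnel zones.

    Zone n ends where the path from the ring edge to the focus exceeds the
    on-axis distance f by n half-wavelengths:
        sqrt(r_n**2 + f**2) = f + n * wavelength / 2
    Squaring and solving gives r_n = sqrt(n*wavelength*f + (n*wavelength/2)**2).
    """
    radii = []
    for n in range(1, n_zones + 1):
        r_squared = n * wavelength_m * focal_length_m + (n * wavelength_m / 2) ** 2
        radii.append(math.sqrt(r_squared))
    return radii


if __name__ == "__main__":
    # Hypothetical illustrative values: 30.4 nm (extreme ultraviolet) light, 1 m focal length.
    for n, r in enumerate(fresnel_zone_radii(30.4e-9, 1.0, 5), start=1):
        print(f"zone {n}: outer radius = {r * 1e3:.4f} mm")
```

Alternating zones are then left open or blocked, and the zones get narrower toward the edge because r_n grows roughly as the square root of n. Since the radii depend on the product λ·f, the same plate brings different wavelengths to different foci, which is why the sketch assumes a single wavelength.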

The photon sieve operates on the same basic principles, but with a slightly more sophisticated twist. Instead of ring-shaped openings (i.e., Fresnel zones), the sieve consists of a circular silicon wafer that acts as a lens and is dotted with millions of tiny holes, arranged to follow the pattern of the transparent zones. Although such a device could potentially be useful at all wavelengths, the Goddard team is developing the photon sieve specifically to answer a 50-year-old question about the Sun.
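The step from rings to holes can be sketched as follows. This is a minimal illustration, assuming holes are scattered at random angles along the centre line of each transparent (odd-numbered) zone, with each hole's diameter a fixed multiple of its zone's width. The function names, the `diameter_ratio` parameter (set to 1.0 only for illustration), the number of holes per zone, and the choice to skip the central zone are all hypothetical simplifications, not the Goddard team's actual layout.

```python
import math
import random


def zone_radius(n: int, wavelength_m: float, focal_length_m: float) -> float:
    """Outer radius of Fresnel zone n, in metres (zone 0 has radius 0)."""
    return math.sqrt(n * wavelength_m * focal_length_m + (n * wavelength_m / 2) ** 2)


def photon_sieve_holes(wavelength_m: float, focal_length_m: float, n_zones: int,
                       holes_per_zone: int, diameter_ratio: float = 1.0,
                       seed: int = 0) -> list[tuple[float, float, float]]:
    """Return a hypothetical hole layout as (x, y, diameter) tuples in metres."""
    rng = random.Random(seed)  # seeded so the same inputs give the same layout
    holes = []
    # Odd-numbered zones (3, 5, 7, ...) play the role of the open rings of the
    # equivalent zone plate; the central zone 1 is skipped for simplicity.
    for n in range(3, n_zones + 1, 2):
        inner = zone_radius(n - 1, wavelength_m, focal_length_m)
        outer = zone_radius(n, wavelength_m, focal_length_m)
        centre_r = (inner + outer) / 2              # hole centres sit mid-zone
        diameter = diameter_ratio * (outer - inner)  # hole size tracks zone width
        for _ in range(holes_per_zone):
            theta = rng.uniform(0.0, 2 * math.pi)  # random angle around the ring
            holes.append((centre_r * math.cos(theta), centre_r * math.sin(theta), diameter))
    return holes


if __name__ == "__main__":
    layout = photon_sieve_holes(30.4e-9, 1.0, n_zones=11, holes_per_zone=20)
    print(f"{len(layout)} holes; largest {layout[0][2] * 1e6:.2f} um, "
          f"smallest {layout[-1][2] * 1e6:.2f} um")
```

Because the holes still sit where the open zones would be, light through them arrives at the focus roughly in step, just as with the rings. A practical design would also prevent holes from overlapping, which this sketch does not attempt.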

Essentially, they hope to study the Sun’s corona to determine what mechanism is heating it. Scientists have long known that the corona and other layers of the Sun’s atmosphere (the chromosphere, the transition region, and the heliosphere) are significantly hotter than its surface. Why this is so has remained a mystery, but perhaps not for much longer.

As Doug Rabin, the leader of the Goddard team, said in a NASA press release:

“This is already a success… For more than 50 years, the central unanswered question in solar coronal science has been to understand how energy transported from below is able to heat the corona. Current instruments have spatial resolutions about 100 times larger than the features that must be observed to understand this process.”

With support from Goddard’s Research and Development program, the team has already fabricated three sieves, each measuring 7.62 cm (3 inches) in diameter. Each consists of a silicon wafer with 16 million holes, whose carefully designed sizes and locations were patterned using photolithography, a technique in which light transfers a geometric pattern from a photomask onto a surface.

In the long run, however, they hope to create a sieve measuring 1 meter (about 3 feet) in diameter. With an instrument of this size, they believe they could achieve up to 100 times better angular resolution in the ultraviolet than NASA’s high-resolution Solar Dynamics Observatory. That would be just enough to resolve the features that must be observed to understand how the corona is heated.
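Why aperture matters can be seen from the diffraction limit. For an ideal, diffraction-limited circular aperture of diameter D, the Rayleigh criterion gives the smallest resolvable angle as θ ≈ 1.22·λ/D, so at a fixed wavelength, resolution improves in direct proportion to the diameter. The sketch below compares the 7.62 cm prototypes with the 1 m goal; the 30.4 nm wavelength is an assumed illustrative value, and the function name is hypothetical.

```python
import math

# Conversion factor from radians to arcseconds (about 206,265).
ARCSEC_PER_RADIAN = 180 / math.pi * 3600


def diffraction_limit_arcsec(wavelength_m: float, aperture_m: float) -> float:
    """Rayleigh-criterion angular resolution, 1.22 * wavelength / D, in arcseconds."""
    return 1.22 * wavelength_m / aperture_m * ARCSEC_PER_RADIAN


if __name__ == "__main__":
    wavelength = 30.4e-9  # assumed illustrative extreme-ultraviolet wavelength, in metres
    for label, diameter in [("7.62 cm prototype", 0.0762), ("1 m goal", 1.0)]:
        print(f"{label}: {diffraction_limit_arcsec(wavelength, diameter):.4f} arcsec")
```

Under these assumptions the 1 m sieve would resolve angles about 13 times finer than a 7.62 cm one (the ratio of the diameters). The sketch does not reproduce the 100-fold comparison with the Solar Dynamics Observatory, which depends on that observatory's actual instrument performance rather than on this idealized formula.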

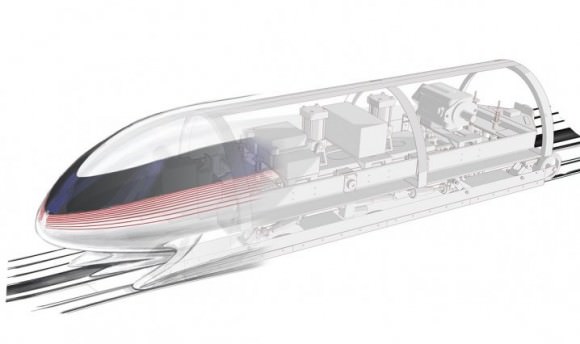

In the meantime, the team plans to test whether the sieve can operate in space, a process expected to take less than a year. This will include determining whether it can survive the intense g-forces of launch as well as the extreme environment of space. The team also plans to pair the technology with CubeSats, so that a two-spacecraft formation-flying mission could be mounted to study the Sun’s corona.

In addition to shedding light on the mysteries of the Sun, a successful photon sieve could revolutionize optics as we know it. Rather than being forced to send massive, expensive instruments into space (like the Hubble Space Telescope or the James Webb Space Telescope), astronomers could obtain the high-resolution images they need from devices small enough to fit aboard a satellite measuring no more than a few square meters.

This would open up new avenues for space research, giving private companies and research institutions the ability to take detailed images of distant stars, planets, and other celestial objects. It would also be another crucial step toward making space exploration affordable and accessible.

Further Reading: NASA