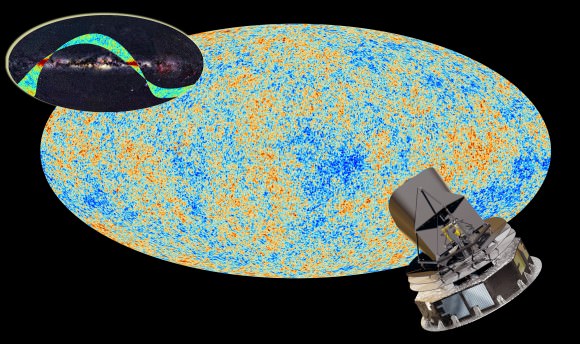

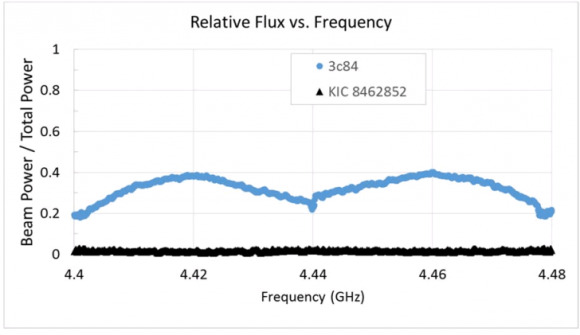

Astronomers at the SETI (Search for Extraterrestrial Intelligence) Institute have reported their findings after monitoring KIC 8462852, the star reputed to be encompassed by a megastructure. No significant radio signals were detected in observations carried out with the Allen Telescope Array between October 15 and 30 (nearly 12 hours each day). However, there are caveats: the sensitivity and frequency range were limited, and gaps existed in the coverage (e.g., between 6 and 7 GHz).

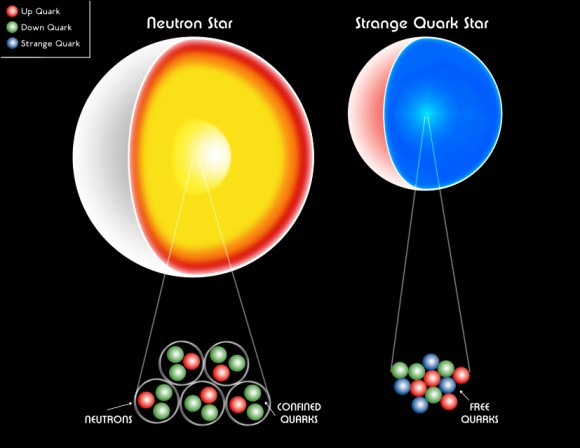

Lead author Gerald Harp and the SETI team discussed the various ideas proposed to explain the anomalous Kepler brightness measurements of KIC 8462852: “The unusual star KIC 8462852 studied by the Kepler space telescope appears to have a large quantity of matter orbiting quickly about it. In transit, this material can obscure more than 20% of the light from that star. However, the dimming does not exhibit the periodicity expected of an accompanying exoplanet.” The team went on to add, “Although natural explanations should be favored; e.g., a constellation of comets disrupted by a passing star (Boyajian et al. 2015), or gravitational darkening of an oblate star (Galasyn 2015), it is interesting to speculate that the occluding matter might signal the presence of massive astroengineering projects constructed in the vicinity of KIC 8462852 (Wright, Cartier et al. 2015).”

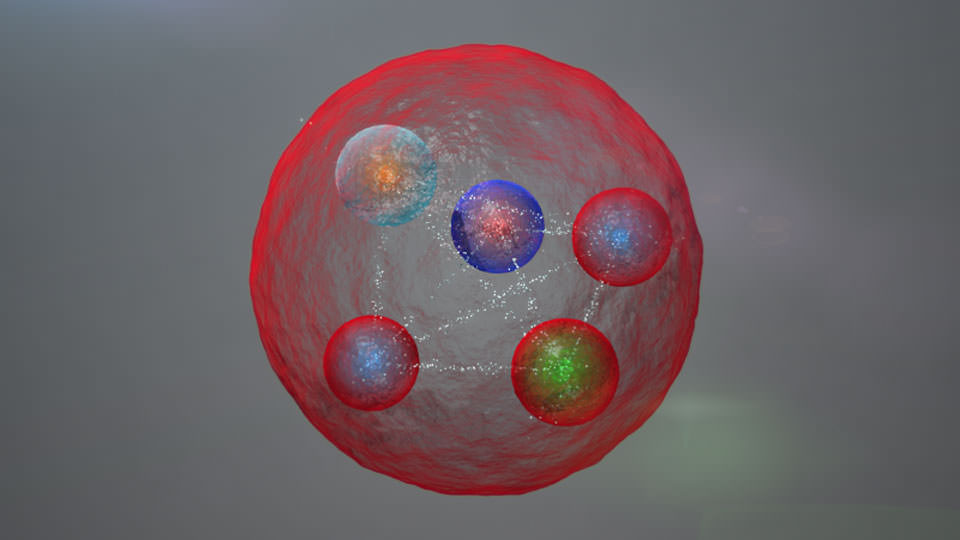

One such megastructure was discussed in a famous paper by Freeman Dyson (1960), and subsequently designated a ‘Dyson Sphere’. Considering how an advanced civilisation might meet its increasing energy demands, Dyson remarked that “pressures will ultimately drive an intelligent species to adopt some such efficient exploitation of its available resources. One should expect that, within a few thousand years of its entering the stage of industrial development, any intelligent species should be found occupying an artificial biosphere which completely surrounds its parent star.” Dyson further proposed that a search could be conducted for artificial radio emissions stemming from the vicinity of a target star.

An episode of Star Trek TNG featured a memorable discussion regarding a ‘Dyson Sphere’.

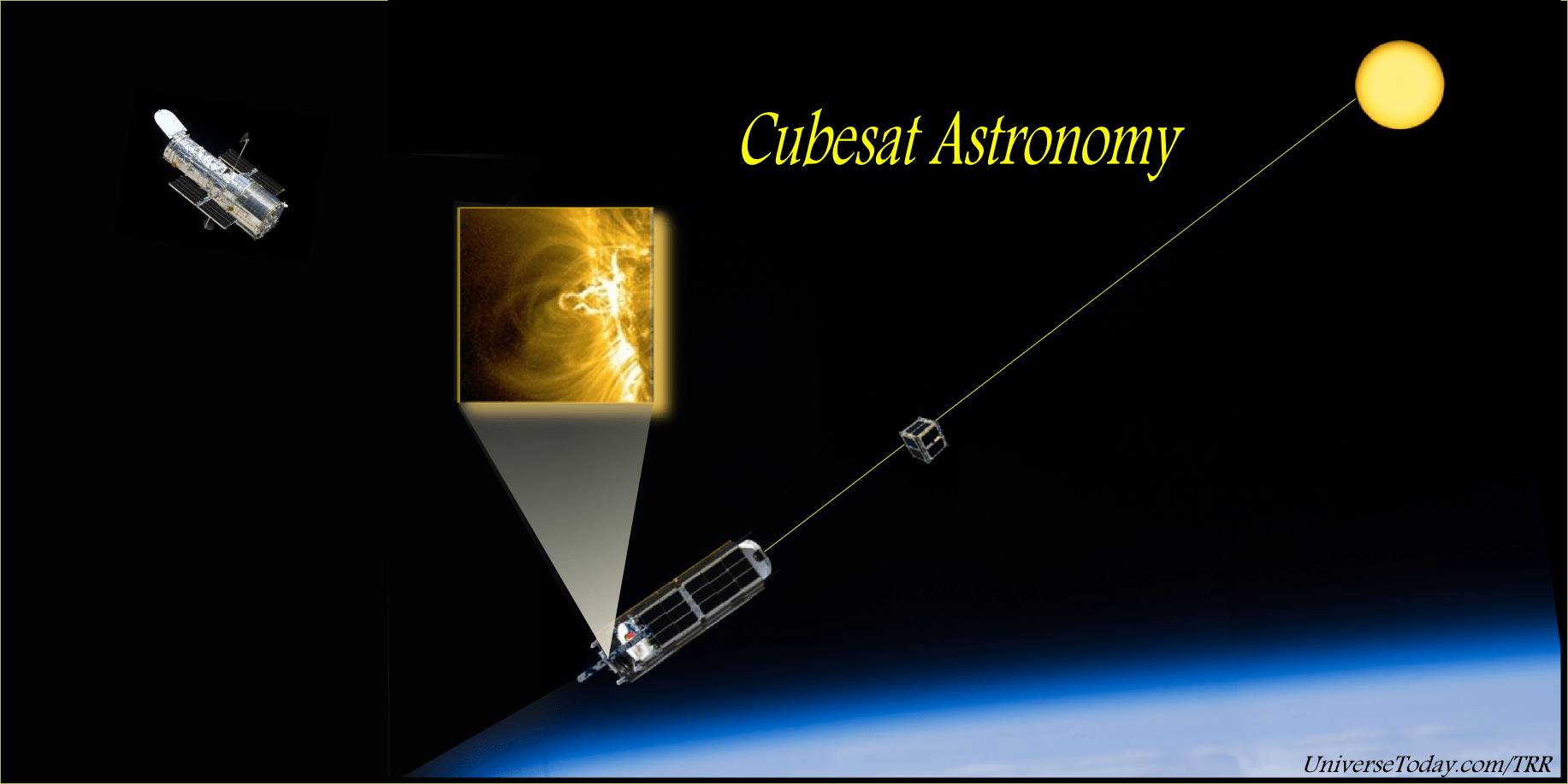

The SETI team summarized Dyson’s idea by noting that solar panels could serve to capture starlight as a source of sustainable energy, and likewise highlighted that other “large-scale structures might be built to serve as possible habitats (e.g., “ring worlds”), or as long-lived beacons to signal the existence of such civilizations to technologically advanced life in other star systems by occluding starlight in a manner not characteristic of natural orbiting bodies (Arnold 2013).” Indeed, bright variable stars such as the famed Cepheids have been cited as potential beacons.

Universe Today’s Fraser Cain discusses a ‘Dyson Sphere’.

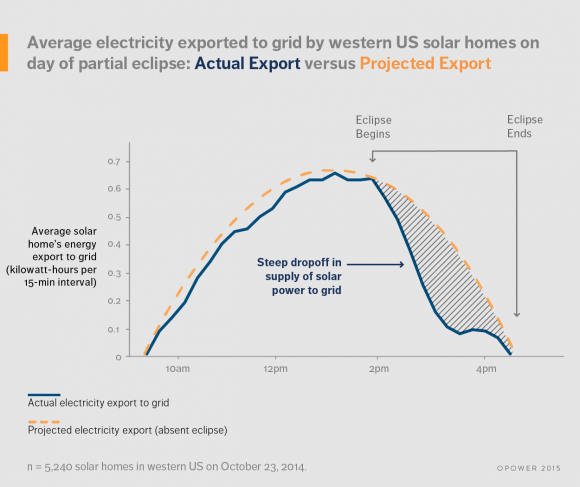

On the premise that a Dyson Sphere might encompass the Kepler-catalogued star, the SETI team sought in part to identify spacecraft that may service such a large structure and could be revealed by a powerful wide-bandwidth signal. The team concluded that their radio observations did not reveal any significant signal stemming from the star (e.g., Fig. 1 below). Yet as noted above, the sensitivity was limited to signals above 100 Jy, the frequency range was restricted to 1-10 GHz, and gaps existed in that coverage.

What is causing the odd brightness variations seen in the Kepler star KIC 8462852? Were those anomalous variations the result of an unknown spurious artefact from the telescope itself, a swath of comets temporarily blocking the star’s light, or perhaps something more extravagant? The latter should not be hailed as the de facto source simply because an explanation is not readily available. However, the intellectual exercise of contemplating the technology that advanced civilisations could construct to address certain needs (e.g., energy) is certainly a worthy venture.