Everyone involved with the initial investigation of the skydiver and the possible meteorite now feels the puzzle has been resolved, thanks to the beauty of crowdsourcing. The rock that showed up in a video taken during a skydive in Norway in 2012 was likely just a rock, accidentally packed in the parachute, and not a meteoroid.

Steinar Midtskogen of the Norwegian Meteor Network, who was involved in the initial investigation of the video, suggested an adaptation of Linus’s Law to explain what has happened in the past week: “Given enough eyeballs, all mysteries are shallow.”

With all the comments, opinions and analysis following the release of the video last week, the team of scientists and video experts from Norway has conceded that the likelihood of the rock being a meteoroid is extremely low. After nearly two years of analyzing the video, the Norwegian team was unable to fully resolve the puzzle, and so they went public, hoping to get input from others.

“We were left with scenarios that we were unable to find possible solutions for against something that fits but is extremely improbable, though possible,” Midtskogen wrote on the NMN website. “We seemed to get no further, and we decided to go public with what we had and at the same time invite anyone to have a go at the puzzle. … We expressed our hope that it would go viral and scrutinized for something that we might have missed, and the result was beyond our expectations.”

The group welcomed all the input (and criticism) but were especially swayed by the ballistics analysis provided by NASA planetary scientist Dr. Phil Metzger, who posted his investigation on Facebook:

Here is my conclusion: the ballistics are consistent with it being a small piece of gravel that came out of his parachute pack and flew past at close distance. The ballistics are also consistent with it being a large meteorite that flew past at about 12 to 18 meters distance. It could be either one, but IMO not anything in between. Based on the odds of parachute packing debris (common) versus meteorite personal flybys (extremely rare), and based on the timing (right after he opened his parachute), I vote for the parachute debris as the more likely.

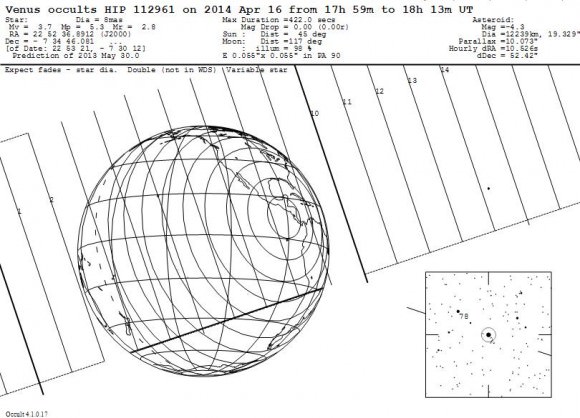

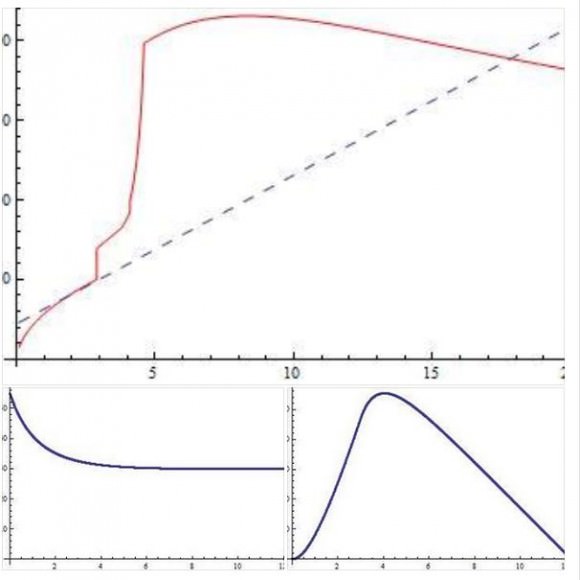

His three plots are below:

Metzger concluded the likely outcome is that a small piece of gravel about 3.3 cm in diameter flew by the camera at about 30 meters per second, or 10 meters per second relative to the skydiver.
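As a rough check on how those two speeds relate, the arithmetic can be sketched in a few lines of Python. This is not Metzger's model: it assumes both the rock and the skydiver were moving straight down, so the speeds simply subtract, and the function name is hypothetical.

```python
def implied_skydiver_speed(rock_speed_ms: float, relative_speed_ms: float) -> float:
    """Descent speed of the skydiver implied by the rock's two quoted speeds.

    Assumption (not from Metzger's analysis): both motions are straight down,
    so the rock's speed relative to the skydiver is a simple difference.
    """
    return rock_speed_ms - relative_speed_ms


rock_speed = 30.0      # m/s, rock speed quoted in the article
relative_speed = 10.0  # m/s, rock speed relative to the skydiver

skydiver_speed = implied_skydiver_speed(rock_speed, relative_speed)
print(f"Implied skydiver descent speed: {skydiver_speed:.0f} m/s")
```

Under that simplification, the two figures would imply the skydiver was still descending at roughly 20 meters per second when the rock passed, which is at least consistent with the rock appearing right after the parachute opened.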

But while Metzger feels Occam’s razor favors parachute debris, he said his model only shows feasibility.

“I don’t consider it to be a smoking gun,” he told Universe Today. “There could be other, better scenarios.”

And so, Midtskogen told Universe Today, while the rock being a meteoroid isn’t completely ruled out, they feel the best answer is that it was a small rock embedded in the chute, and no further analysis is needed.

“I can confirm that the group will no longer do coordinated work on this,” Midtskogen said via email. “I think all of us feel confident about the conclusion and won’t work more on this individually either – although here I can only speak for myself. It was shown how a pebble packed in the chute could reappear well above the chute, and there is no strong evidence against a small size, so this has been easy to accept.”

While this rock most likely turned out not to be a meteoroid, Midtskogen added, the crowdsourcing and interest in the video were overwhelming and encouraging.

“So, no meteorite, but a good story,” he said good-naturedly in his email to Universe Today. “Our mood is still good, and we talk about putting up a plaque at ground zero: ‘On 17th June 2012 a pebble fell here, witnessed by 6 million people on YouTube.’”

Additionally, the skydiver, Anders Helstrup, seemed relieved more than anything.

“After all we seem to have found a more natural explanation to the video,” he told Universe Today. “And that is a good thing. I see that this had to have been MY mistake – packing a pebble into my parachute (I always pack myself). Our intention was to find out more and this way let the story out in the public, for people to make up their own minds. This became way bigger than I had imagined.”

In the end, while this story was not as fantastic as it might have been, it shows the beauty of crowdsourcing and using science to analyze a puzzle. I readily admit to being overly enthusiastic in my initial article about this being a meteoroid, but I have to agree with Phil Plait, who may have said it best in his update today: I would have loved to have this to have been a real meteoroid, but I’m glad this worked the way it did:

The video-makers were honest, did their level best to figure this out, and when they got as far as they could, they put it out to the public. And when it was shown to not be what they had hoped, they admitted it openly and clearly.

— Phil Plait