Space holds the future of our species. We have been flying for barely over a century, and we have been rocketing upward for nearly as long. As these technologies advanced, so did the opportunities they created. Space tourism is one of them, and Derek Webber, in his book “The Wright Stuff: The Century of Effort Behind Your Ticket to Space”, shows how logical a progression it has been and how much promise it holds from where we stand today. Through his words we see how private citizens may soon be able to enjoy and contribute to our species’ future.

The Wright brothers first flew their human-controlled aircraft in December of 1903. The author uses this as the starting point and the namesake of his book. In a lively, active voice he carries the reader along a quick, somewhat routine history of flight and rocketry. However, where most historical journeys, especially in the field of aerospace, focus upon events and technology, this book emphasizes the individual, or sometimes a pair, as with the brothers Wright.

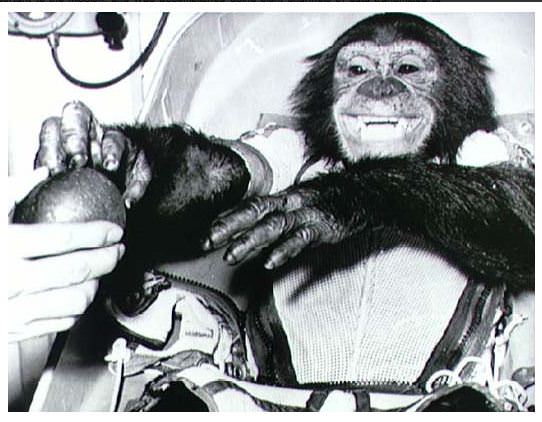

In somewhat jocular fashion, the author bestows a ‘Wright Stuff Award’ on individuals who he thinks have contributed most significantly to space tourism. Some recipients are obvious, such as the Wright brothers and Sergei Korolev, who respectively advanced flight and rocketry. Other recipients may cause a few surprises, such as former President George Bush and Chesley Bonestell. Yet it is clear the author’s intent is to show that major contributions to the field of space tourism have come from a disparate group of promoters and nurturers.

The real relevance of the book comes with its final chapter, entitled Tourists. In it, the author introduces the reader to non-government individuals who have taken advantage of a spare seat or two and used government equipment, principally the Soyuz spacecraft, to journey into space. Their flights were principally for personal pleasure. The first few were sponsored. Most of the later ones used personal fortunes. Nearly all are still alive today. These, the book says, are the original tourists, and they are shown to be as much benefactors as champions of human space flight.

While the early part of this book stresses individuals and their accomplishments, the very last section extends tourism into the future. In it, the author runs through a crowd of current companies, developers and pioneers who are vibrantly competing against each other to offer reasonable and attractive space travel packages. Some seem to have much promise, such as Virgin Galactic with its new spaceport and WhiteKnightTwo vehicle. Others have just started test flights, while still others are in the planning stages. All, however, show themselves to be part of a busy business sector aiming to offer, at a reasonable cost, a few hours’ travel into or very close to space.

With the historical progression and the review of current organizations, the

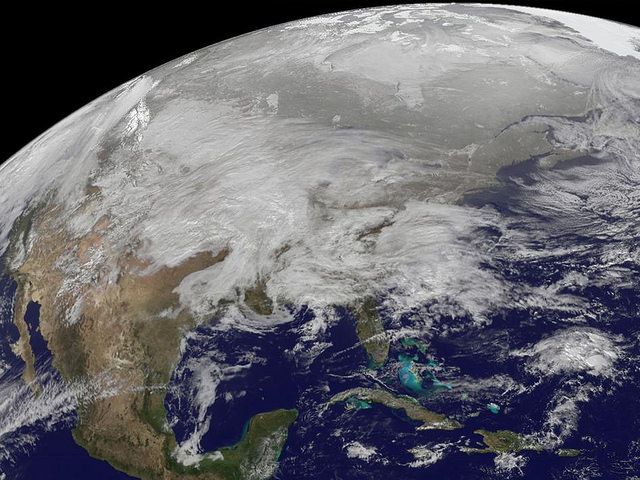

author has shown that space tourism has solid groundwork and that supporting infrastructure continues to flourish. The book doesn’t however address some base questions. The principle one is that so much of the current industry is still Earth focused. People fly up to the edge of space, see the curvature of the Earth and fly back down. As such it would be a small step in moving our species spaceward but space travel would still be a long way down the road. As well, the book doesn’t deal with much substantiation of the business case for space tourism. There is mention of the Commission on the Future of the US Aerospace Industry. But, placing the future of our species at the vagaries of discretionary spending seems at best opportunistic. Thus while the book shows progress, the progress may be fleeting rather than a permanent capability.

This book does present a brash, bold and optimistic view of space tourism. Derek Webber’s “The Wright Stuff: The Century of Effort Behind Your Ticket to Space” looks at positive contributions through humankind’s brief history of flight and instills a positive feeling about space tourism. It would be no surprise if, after reading this book, the reader began saving for their own future ride into space.
