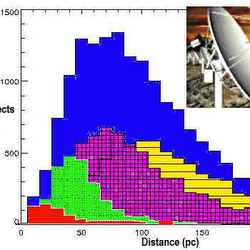

M2: Doug Williams – REU Program – NOAO/AURA/NSF

Monday, November 21 – Tonight let’s start with a wonderful globular cluster that gives the very best of all worlds – one that can be seen in even the smallest of binoculars and from both hemispheres! Your destination is about one-third of the way from Beta Aquarii to Epsilon Pegasi…

First recorded by Maraldi in 1746 and cataloged by Messier in 1760, the 6.0 magnitude M2 is one of the finest and brightest of Class II globular clusters. At 13 billion years old, this rich globular is one of the oldest in our galaxy, and its position in the halo puts it directly beneath the Milky Way’s southern pole. More than 100,000 faint stars form a well-concentrated sphere some 150 light-years across, one that shows easily in any optical aid. While only larger scopes will begin to resolve this awesome cluster’s yellow and red giants, we’d do well to remember that our own Sun would be around the 21st magnitude if it were as distant as this ball of stars!

Tuesday, November 22 – With the Moon comfortably out of the way this evening, let’s head for the constellation of Cetus and a dual study as we conquer NGC 246 and NGC 255.

Located about four finger widths north of bright Beta Ceti – and just south of the trio of Phi 1, 2 and 3 Ceti – is our first mark. NGC 246 is an 8.0 magnitude planetary nebula which will appear as a slightly out-of-focus star in binoculars, but a whole lot like a Messier object in a small scope. Here is the southern sky’s version of the “Ring Nebula.” Not only is this one a bit brighter than its M57 counterpart, but larger scopes might also find its 12.0 magnitude central star just a little easier to resolve.

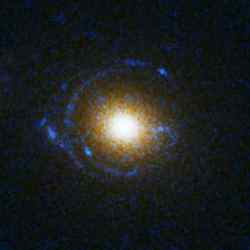

If you are using large aperture, head just a breath northeast and see if you can capture the small, faint galaxy NGC 255. This 12.8 magnitude spiral will show a very round signature with a gradual brightening towards the nucleus and a hint of outer spiral arms.

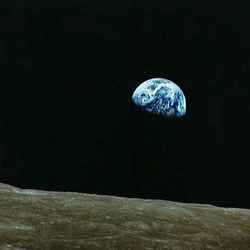

Wednesday, November 23 – Tonight in 1885, the very first photograph of a meteor shower was taken. Weather satellite Tiros II was also launched on this day in 1960. Carried to orbit by a three-stage Delta rocket, the “Television Infrared Observation Satellite” was about the size of a barrel and tested experimental television techniques and infrared equipment. Operating for 376 days, Tiros II sent back thousands of pictures of Earth’s cloud cover and successfully tested control of the satellite’s spin orientation as well as its infrared sensors. Coincidentally, on this day in 1977 a similar mission, Meteosat 1, became the first satellite put into orbit by the European Space Agency. Where is all this leading? Why not try observing satellites on your own? Thanks to wonderful online tools like Heavens-Above, you’ll be “in the know” whenever a bright satellite makes a pass over your specific area. It’s fun!

Thursday, November 24 – Tonight let’s return to Cassiopeia and start by exploring its central bright star, Gamma. At roughly 550 light-years away, Gamma is very unusual. A well-known variable, this particular star has been known to go through some very radical changes in its temperature, spectrum, magnitude, color and even diameter! Gamma is also a visual double star, but the 11th magnitude companion is very difficult to perceive so close (2.3″) to the primary.

Four degrees southeast of Gamma is our marker for this starhop, Phi Cassiopeiae. Aim binoculars or a telescope at this star and you’ll easily locate an interesting open cluster, NGC 457, in the same field of view. This bright and splendid galactic cluster has picked up a variety of nicknames over the years, such as the Owl Cluster and the E.T. Cluster, because of its uncanny resemblance to a figure.

Phi and HD 7902 may not be true members of the cluster. If magnitude 5 Phi were actually part of this grouping, it would lie at a distance of approximately 9300 light-years, making it one of the most luminous stars in the sky! The fainter members of NGC 457 comprise a relatively “young” star cluster that spans about 30 light-years. Most of the stars are only about 10 million years old, yet there is an 8.6 magnitude red supergiant in the center.
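The luminosity argument above follows from the distance modulus, M = m − 5 log10(d / 10 pc). Here is a minimal Python sketch, assuming a visual magnitude of exactly 5.0 for Phi, the 9300 light-year distance quoted above, an absolute visual magnitude of 4.83 for the Sun, and no interstellar dimming; the Sun’s magnitude and the no-dust simplification are assumptions, not figures from this column.

```python
import math

LY_PER_PARSEC = 3.26156  # light-years in one parsec
SUN_ABS_MAG_V = 4.83     # assumed absolute visual magnitude of the Sun


def absolute_magnitude(apparent_mag: float, distance_ly: float) -> float:
    """Distance modulus M = m - 5*log10(d / 10 pc), ignoring interstellar extinction."""
    distance_pc = distance_ly / LY_PER_PARSEC
    return apparent_mag - 5 * math.log10(distance_pc / 10)


def luminosity_in_suns(abs_mag: float) -> float:
    """Visual luminosity relative to the Sun from the magnitude difference."""
    return 10 ** (0.4 * (SUN_ABS_MAG_V - abs_mag))


# Phi Cassiopeiae, if it really were a cluster member at ~9300 light-years
phi_M = absolute_magnitude(5.0, 9300)
print(f"Phi Cas absolute magnitude: {phi_M:.1f}")
print(f"Visual luminosity: ~{luminosity_in_suns(phi_M):,.0f} Suns")
```

Under these assumptions Phi would shine at roughly absolute magnitude −7.3, on the order of 70,000 Suns in visual light. Any dust along the line of sight would make the true figure higher still.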

Friday, November 25 – If you live in the northeastern United States or Canada, it would be worth getting up early this morning, as the Moon occults bright Sigma Leonis. Be sure to check the International Occultation Timing Association (IOTA) for times and locations near you!

Tonight we’re heading south and our goal will be about two finger widths north-northwest of Alpha Phoenicis.

At magnitude 7.8, this huge member of the Sculptor Galaxy Group, known as NGC 55, will be a treat for both binoculars and telescopes. Somewhat similar in structure to the Large Magellanic Cloud, NGC 55 gives those in the southern hemisphere an opportunity to see a galaxy very similar in appearance to M82, but on a much grander scale! Larger scopes will show mottling in structure, resolution of individual stars and nebulous areas, as well as a very prominent central dark dust cloud. The Sculptor Group and its northern counterpart, the Ursa Major group that hosts M82, lie at around the same distance from our own Local Group.

Saturday, November 26 – This morning it is our Russian comrades’ turn as the Moon occults Beta Virginis. As always, times and locations can be found on the IOTA website! Today also marks the anniversary of the 1965 launch of the first French satellite, Asterix 1.

It’s time to head north again as we turn our eyes towards 1000 light-year distant Delta Cephei, one of the most famous of all variables. It is an example of a “pulsating variable” – one whose magnitude changes are caused not by an eclipsing companion, but by the expansion and contraction of the star itself. Unaided observers can rediscover what John Goodricke found in 1784: you can follow its nearly one-magnitude variability by comparing it to nearby Epsilon and Zeta Cephei. It rises to maximum in about a day and a half, yet takes about four days to fade again.
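Following Delta Cephei against Epsilon and Zeta usually means judging where it sits between the two comparison stars. The sketch below shows the fractional (interpolation) method that many visual variable-star observers use. The comparison magnitudes (about 3.35 for Zeta and 4.19 for Epsilon) are approximate values assumed here rather than figures from this column, so check a current chart before relying on them; the function name is hypothetical.

```python
# Assumed approximate visual magnitudes of the comparison stars (not from the article)
ZETA_CEP = 3.35     # brighter comparison star
EPSILON_CEP = 4.19  # fainter comparison star


def interpolate_magnitude(bright_mag: float, faint_mag: float, fraction: float) -> float:
    """Fractional method: 0.0 means 'as bright as the brighter comparison',
    1.0 means 'as faint as the fainter comparison'."""
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    return bright_mag + fraction * (faint_mag - bright_mag)


# Example: Delta Cephei judged to sit 3/10 of the way from Zeta toward Epsilon
estimate = interpolate_magnitude(ZETA_CEP, EPSILON_CEP, 0.3)
print(f"Delta Cephei estimate: {estimate:.2f}")
```

Logging an estimate like this every clear night for a week or two is enough to trace the quick rise and slower fall described above.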

Let’s travel about a finger width southeast of Delta Cephei for a new target, open cluster NGC 7380. This large gathering of stars has a combined magnitude of 7.2. Like many young clusters, it is embedded in faint nebulosity. Surrounded by a dispersed group of brighter stars, the cluster itself can be seen in binoculars, and mid-aperture scopes may resolve around three dozen faint members.

Before you leave, return to Delta Cephei and take a closer look. It is also a well-known double star that was measured by F.G.W. Struve in 1835. Its 6.3 magnitude companion has shown no change in position angle or separation in the roughly 170 years between Struve’s measurements and what we see now, which suggests the two may not be a physical pair. S.W. Burnham discovered a third, 13th magnitude companion in 1878. Enjoy the color contrast between its members!

Sunday, November 27 – Tonight let’s use binoculars or small scopes to go northern and southern “cluster hunting.”

The first destination is NGC 7654, but you’ll probably know it better by its common name, M52. To find it with binoculars, draw a mental line from Alpha to Beta Cassiopeiae and extend it about the same distance along the same trajectory. This mixed magnitude cluster is bright and easy.

The next, NGC 129, is located almost directly between Gamma and Beta. This is also a large, bright cluster that resolves in a small scope but shows at least a dozen of its 35 members to binoculars. Near the cluster’s center and north of a pair of matched-magnitude stars is the Cepheid variable DL Cassiopeiae, which changes by about a magnitude over a period of roughly a week.

Now head for Epsilon Cassiopeiae, at the northeastern end of the constellation, and hop about three finger widths to the east-southeast. Here you will find 3300 light-year distant NGC 1027. It appears as an attractive “starry swatch” in binoculars, and small scopes will have a wonderful time resolving its 40 or more faint members.

If you live in the southern hemisphere, have a look at open cluster IC 2602. This very bright, mixed magnitude cluster includes 3.0 magnitude Theta Carinae. Seen easily unaided, this awesome open cluster contains around 30 stars. Be sure to look along its southwest edge with binoculars or scopes for a smaller feature overshadowed by its grander neighbors. Tiny by comparison, Melotte 101 will appear like a misty patch of faint stars. Enjoy!

Until next week… May all your journeys be at light speed! ~Tammy Plotner