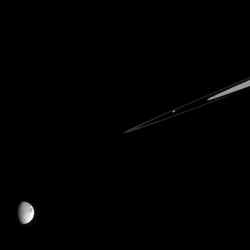

The more we explore Mars, the more it looks like Earth. Image credit: NASA

One of the paradoxes of recent explorations of the Martian surface is that the more we see of the planet, the more it looks like Earth, despite a very big difference: Complex life forms have existed for billions of years on Earth, while Mars never saw life bigger than a microbe, if that.

“The rounded hills, meandering stream channels, deltas and alluvial fans are all shockingly familiar,” said William E. Dietrich, professor of earth and planetary science at the University of California, Berkeley. “This caused us to ask: Can we tell from topography alone, and in the absence of the obvious influence of humans, that life pervades the Earth? Does life matter?”

In a paper published in the Jan. 26 issue of the journal Nature, Dietrich and graduate student J. Taylor Perron reported, to their surprise, no distinct signature of life in the landforms of Earth.

“Despite the profound influence of biota on erosion processes and landscape evolution, surprisingly, there are no landforms that can exist only in the presence of life and, thus, an abiotic Earth probably would present no unfamiliar landscapes,” said Dietrich.

Instead, Dietrich and Perron propose that life – everything from the lowest plants to large grazing animals – creates a subtle effect on the land not obvious to the casual eye: more of the “beautiful, rounded hills” typical of Earth’s vegetated areas, and fewer sharp, rocky ridges.

“Rounded hills are the purest expression of life’s influence on geomorphology,” Dietrich said. “If we could walk across an Earth on which life has been eliminated, we would still see rounded hills, steep bedrock mountains, meandering rivers, etc., but their relative frequency would be different.”

When a NASA scientist acknowledged to Dietrich a few years ago that he saw nothing in the Martian landscape that didn’t have a parallel on Earth, Dietrich began thinking about what effects life does have on landforms and whether there is anything distinctive about the topography of planets with life, versus those without life.

“One of the least known things about our planet is how the atmosphere, the lithosphere and the oceans interact with life to create landforms,” said Dietrich, a geomorphologist who for more than 33 years has studied the Earth’s erosional processes. “A review of recent research in Earth history leads us to suggest that life may have strongly contributed to the development of the great glacial cycles, and even influenced the evolution of plate tectonics.”

One of the main effects of life on the landscape is erosion, he noted. Vegetation tends to protect hills from erosion: Landslides often occur in the first rains following a fire. But vegetation also speeds erosion by breaking up the rock into smaller pieces.

“Everywhere you look, biotic activity is causing sediment to move downhill, and most of that sediment is created by life,” he said. “Tree roots, gophers and wombats all dig into the soil and raise it, tearing up the underlying bedrock and turning it into rubble that tumbles downhill.”

Because the shape of the land in many locations is a balance between river erosion, which tends to cut steeply into a slope’s bedrock, and the biotically driven spreading of soil downslope, which tends to round off the sharp edges, Dietrich and Perron thought that rounded hills would be a signature of life. This proved to be untrue, however. Their colleague Ron Amundson and graduate student Justine Owen, both of the campus’s Department of Environmental Science, Policy and Management, found that in the lifeless Atacama Desert in Chile, rounded hills covered with soil are produced by salt weathering from the nearby ocean.

“There are other things on Mars, such as freeze-thaw activity, that can break rock” to create the rounded hills seen in photos taken by NASA’s rovers, Perron said.

They also looked at river meanders, which on Earth are influenced by streamside vegetation. But Mars shows meanders, too, and studies on Earth have shown that rivers cut into bedrock or frozen ground can create meanders identical to those created by vegetation.

The steepness of river courses might be a signature, too, they thought: Coarser, less weathered sediment would erode into the streams, causing the river to steepen and the ridges to become higher. But this is also seen in Earth’s mountains.

“It’s not hard to argue that vegetation affects the pattern of rainfall and, recently, it has been shown that rainfall patterns affect the height, width and symmetry of mountains, but this would not produce a unique landform,” Dietrich said. “Without life, there would still be asymmetric mountains.”

Their conclusion, that the relative frequency of rounded versus angular landforms would change depending on the presence of life, won’t be testable until elevation maps of the surfaces of other planets are available at resolutions of a few meters or less. “Some of the most salient differences between landscapes with and without life are caused by processes that operate at small scales,” Perron said.

Dietrich noted that limited areas of Mars’ surface have been mapped at two-meter resolution, which is better than most maps of the Earth. He is one of the leaders of a National Science Foundation (NSF)-supported project to map the surface of the Earth in high resolution using LIDAR (LIght Detection And Ranging) technology. Dietrich co-founded the National Center for Airborne Laser Mapping (NCALM), a joint project between UC Berkeley and the University of Florida to conduct LIDAR mapping that shows not only the tops of vegetation, but also the bare ground as if denuded of vegetation. The research by Dietrich and Perron was funded by NSF’s National Center for Earth-surface Dynamics, the NSF Graduate Research Fellowship Program and NASA’s Astrobiology Institute.

Original Source: UC Berkeley News Release