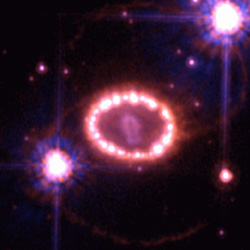

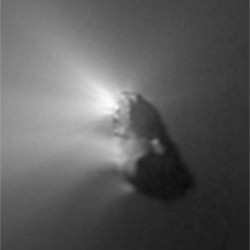

Halley’s Comet. Image credit: MPAE.

As Professor Emeritus of the Max Planck Institute, Dr. Kissel has a life-long devotion to the study of comets. “In the early 20th century the comet tails led to the postulation and later to the detection of the ‘solar wind’, a stream of ionized atoms constantly blown away from the sun. As astronomical observations became more powerful, more and more constituents could be identified, both solid state particles and gaseous molecules, neutral and ionized.” As our techniques for studying these outer solar system visitors have become more refined, so have our theories of what they might be composed of – and what they look like. Says Kissel, “Many models have been proposed to describe the dynamic appearance of a comet, from which Fred Whipple’s was apparently the most promising. It postulated a nucleus made up from water-ice and dust. Under the influence of the sun, the water-ice would sublime and accelerate dust particles along its way.”

Still, they were a mystery – a mystery that science was eager to solve. “Not until Halley was it known that many comets are part of our solar system and orbit the sun just like the planets do, just on other type orbits and with additional effects due to the emission of materials,” comments Kissel. But only by getting up close and personal with a comet were we able to discover far more. With Halley’s return to the inner solar system, plans were made to catch a comet, and the spacecraft built to do it was named Giotto.

Giotto’s mission was to obtain color photographs of the nucleus, determine the elemental and isotopic composition of volatile components in the cometary coma, study the parent molecules, and help us understand the physical and chemical processes that occur in the cometary atmosphere and ionosphere. Giotto would be the first to investigate the macroscopic systems of plasma flows resulting from the cometary-solar wind interaction. High on its list of priorities was measuring the gas production rate and determining the elemental and isotopic composition of the dust particles. Critical to the scientific investigation was the dust flux – its size and mass distribution and the crucial dust-to-gas ratio. As the on-board cameras imaged the nucleus from 596 km away – determining its shape and size – the spacecraft was also monitoring structures in the dust coma and studying the gas with both neutral and ion mass spectrometers. As science suspected, the Giotto mission found the gas to be predominantly water, but it also contained carbon monoxide, carbon dioxide, various hydrocarbons, and traces of iron and sodium.

As a team research leader for the Giotto mission, Dr. Kissel recalls, “When the first close up missions to comet 1P/Halley came along, a nucleus was clearly identified in 1986. It was also the first time that dust particles and the comet-released gases were analyzed in situ, i.e. without man-made interference or transportation back to ground.” It was an exciting time in cometary research. Through Giotto’s instrumentation, researchers like Kissel could now study data like never before. “These first analyses showed that particles are all an intimate mixture of high mass organic material and very small dust particles. The biggest surprise was certainly the very dark nucleus (reflecting only 5% of the light shining onto it) and the amount and complexity of the organic material.”

But was a comet truly something more, or just a dirty snowball? “Up until today there is – to my knowledge – no measurement showing the existence of solid water ice exposed on a cometary surface,” says Kissel. “However, we found that water (H2O) as a gas could be released by chemical reactions going on when the comet is increasingly heated by the sun. The reason could be ‘latent heat’, i.e. energy stored in the very cold cometary material, which acquired the energy by intense cosmic radiation while the dust was traveling through interstellar space through bond breaking. Very close to the model for which the late J. Mayo Greenberg has argued for years.”

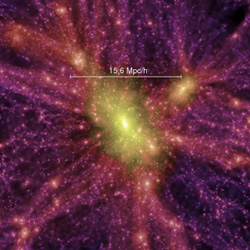

We now know Comet Halley consisted of the most primitive material known to us in the solar system. With the exception of nitrogen, the light elements shown were quite similar in abundance to those of our own Sun. Several thousand dust particles were determined to contain hydrogen, carbon, nitrogen and oxygen – as well as mineral-forming elements such as sodium, magnesium, silicon, calcium and iron. Because the lighter elements were discovered far away from the nucleus, we knew they were not cometary ice particles. From our studies of the chemistry of interstellar gas surrounding stars, we’ve learned how carbon chain molecules react with elements such as nitrogen, oxygen, and to a very small extent, hydrogen. In the extreme cold of space, they can polymerize – changing the molecular arrangement of these compounds to form new ones. The new compounds would have the same percentage composition as the original, but a greater molecular weight and different properties. But what are those properties?
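The statement that a polymer keeps the percentage composition of its starting compound while gaining molecular weight can be checked with a few lines of arithmetic. The following is a minimal sketch, not a model of any measured cometary compound: formaldehyde is chosen purely as an illustrative monomer, the chain length of 50 is arbitrary, and the helper names (`molar_mass`, `mass_percent`, `polymerize`) are hypothetical. End groups of the chain are ignored.

```python
# Rounded standard atomic masses in g/mol.
ATOMIC_MASS = {"H": 1.008, "C": 12.011, "N": 14.007, "O": 15.999}


def molar_mass(formula):
    """Molar mass of a formula given as {element: atom count}."""
    return sum(ATOMIC_MASS[el] * count for el, count in formula.items())


def mass_percent(formula):
    """Percentage of the total mass contributed by each element."""
    total = molar_mass(formula)
    return {el: 100.0 * ATOMIC_MASS[el] * count / total
            for el, count in formula.items()}


def polymerize(monomer, n):
    """Idealized polymer made of n identical monomer units (end groups ignored)."""
    return {el: count * n for el, count in monomer.items()}


# Assumption: formaldehyde (CH2O) is used only as an example monomer.
monomer = {"C": 1, "H": 2, "O": 1}
chain = polymerize(monomer, 50)

print("molar mass:", molar_mass(monomer), "->", molar_mass(chain))
print("monomer composition (%):", mass_percent(monomer))
print("polymer composition (%):", mass_percent(chain))
```

The two composition dictionaries agree element by element, while the molar mass grows in proportion to the number of units, which is why an elemental analysis alone cannot distinguish a polymer from its monomer.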

Thanks to some very accurate information from the probe’s close encounter with Comet Halley, Ranjan Gupta of the Inter-University Centre of Astronomy and Astrophysics (IUCAA) and his colleagues have made some very interesting findings about cometary dust composition and scattering properties. Since the earliest missions to comets were fly-bys, all the material captured was analyzed in situ. This type of analysis showed that cometary materials are generally a mixture of silicates and carbon in amorphous and crystalline structure formed in the matrix. Once the water evaporates, the sizes of these grains range from sub-micron to micron, and the grains are highly porous in nature – with non-spherical and irregular shapes.

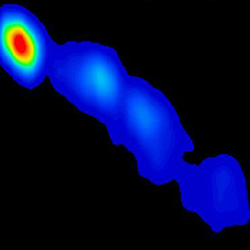

According to Gupta, most of the early models of light scattering from such grains were “based on solid spheres with conventional Mie theory and only in the recent years – when the space missions provided strong evidence against this – have new models been evolved where non-spherical and porous grains have been used to reproduce the observed phenomenon”. In this case, linear polarization is produced by the comet from the incident solar light. Confined to a plane – the direction from which the light is scattered – it varies by position as the comet approaches or recedes from the Sun. As Gupta explains, “An important feature of this polarization curve versus the scattering angle (referred to the sun-earth-comet geometry) is that there is some degree of negative polarization.”
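To make “negative polarization” concrete, the sketch below uses the standard definition of the degree of linear polarization: the difference between the intensity polarized perpendicular to the scattering plane and the intensity polarized parallel to it, divided by their sum. It also uses the usual relation that the scattering angle is 180° minus the Sun-comet-observer phase angle. The function names and the sample intensities are hypothetical and are not taken from Gupta’s data.

```python
def scattering_angle(phase_angle_deg):
    """Scattering angle in degrees for a given Sun-comet-observer phase angle."""
    return 180.0 - phase_angle_deg


def linear_polarization(i_perp, i_par):
    """Degree of linear polarization.

    i_perp: intensity polarized perpendicular to the scattering plane
    i_par:  intensity polarized parallel to the scattering plane
    A negative result means the parallel component dominates.
    """
    total = i_perp + i_par
    if total <= 0:
        raise ValueError("total intensity must be positive")
    return (i_perp - i_par) / total


# Hypothetical intensity pairs (arbitrary units), for illustration only.
samples = [
    (90.0, 0.60, 0.40),  # phase angle 90 degrees: perpendicular component dominates
    (10.0, 0.49, 0.51),  # small phase angle: parallel component dominates
]

for phase, i_perp, i_par in samples:
    p = linear_polarization(i_perp, i_par)
    print(f"scattering angle {scattering_angle(phase):5.1f} deg -> P = {p:+.3f}")
```

In this convention the second sample, observed close to the backscattering direction, yields a negative value, which is the kind of “negative branch” a polarization curve can show at high scattering angles.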

Known as ‘back scattering’, this negativity occurs when monitoring a single wavelength – monochromatic light. The Mie algorithm models all of the accepted scattering processes caused by a spherical shape, taking into account external reflection, multiple internal reflections, transmission and surface waves. The intensity of the scattered light is a function of the scattering angle, where 0° implies forward scattering, along the light’s original direction, while 180° implies back scattering – back towards the source of the light.

According to Gupta, “Back scattering is seen in most of the comets generally in the visible bands and for some comets in the near-infrared (NIR) bands.” At the present time, models attempting to reproduce this aspect of negative polarization at high scattering angles have had very limited success.

Their study has used a modified DDA (discrete dipole approximation) – where each dust grain is assumed to be an array of dipoles. A great range of molecules can contain bonds that are between the extremes of ionic and covalent. The difference between the electronegativities of the atoms in these molecules is large enough that the electrons aren’t shared equally – but small enough that the electrons aren’t attracted to only one of the atoms to form positive and negative ions. This type of bond is known as polar because it has positive and negative ends – or poles – and the molecules have a dipole moment.

These dipoles interact with each other to produce the light scattering effects like extinction – spheres larger than the wavelength of light will block monochromatic and white light – and polarization – the scattering of the wave of the incoming light. By using a model of composite grains with a matrix of graphite and silicate spheroids, a very specific grain size range may be required to explain the observed properties in cometary dust. “However, our model is also unable to reproduce the negative branch of polarization which is observed in some comets. Not all comets show this phenomenon in the NIR band of 2.2 microns.”
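The idea of treating a grain as “an array of dipoles” can be pictured with a short sketch. The code below covers only the geometric first step that discrete dipole methods generally share: filling a target shape with points on a regular cubic lattice, each point standing in for one dipole. It is not Gupta’s modified DDA; the function name, the spheroid dimensions and the lattice spacing are all hypothetical. Assigning polarizabilities and solving for the interacting dipoles, which is where the scattering results actually come from, is not shown.

```python
def spheroid_dipole_lattice(a, c, spacing):
    """Return cubic-lattice points lying inside a spheroid centered on the origin.

    a:       semi-axis along x and y
    c:       semi-axis along z
    spacing: distance between neighboring lattice points (same units as a and c)
    """
    if min(a, c, spacing) <= 0:
        raise ValueError("a, c and spacing must all be positive")
    # Scan a box slightly larger than the spheroid; the inside test filters it.
    n_xy = int(a / spacing) + 1
    n_z = int(c / spacing) + 1
    points = []
    for i in range(-n_xy, n_xy + 1):
        for j in range(-n_xy, n_xy + 1):
            for k in range(-n_z, n_z + 1):
                x, y, z = i * spacing, j * spacing, k * spacing
                if (x * x + y * y) / (a * a) + (z * z) / (c * c) <= 1.0:
                    points.append((x, y, z))
    return points


# Hypothetical elongated grain: semi-axes 1.0 and 2.0 (arbitrary units).
dipoles = spheroid_dipole_lattice(a=1.0, c=2.0, spacing=0.25)
print(len(dipoles), "dipole sites represent this grain")
```

A finer spacing gives more dipoles and a better approximation of the shape, at the cost of a larger system to solve. Porous or composite grains can be sketched the same way by removing lattice sites or tagging each site with a different material.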

These composite grain models developed by Gupta et al. will need to be refined further to explain the negative polarization branch, as well as the amount of polarization at various wavelengths. In this case, it is a color effect, with higher polarization in red than in green light. More extensive laboratory simulations of composite grains are upcoming, and “the study of their light scattering properties will help in refining such models.”

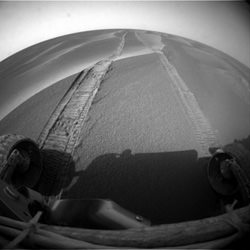

Mankind’s successful beginnings at following this cometary dust trail started with Halley. Vega 1, Vega 2 and Giotto provided the models needed to improve research equipment. In May 2000, Drs. Franz R. Krueger and Jochen Kissel of the Max Planck Institute published their findings as “First Direct Chemical Analysis of Interstellar Dust”. Says Dr. Kissel, “Three of our dust impact mass spectrometers (PIA on board GIOTTO, and PUMA-1 and -2 onboard VEGA-1 and -2) encountered Comet Halley. With those we were able to determine the elementary composition of the cometary dust. Molecular information, however, was only marginal.” Deep Space 1’s close encounter with Comet Borrelly returned the best images and other science data received so far. On the Borrelly team, Dr. Kissel says, “The more recent mission to Borrelly (and STARDUST) showed fascinating details of the comet surface such as steep 200m high slopes and spires some 20m wide and 200m high.”

Despite the mission’s many problems, Deep Space 1 proved to be a total success. According to Dr. Mark Rayman’s December 18, 2001 Mission Log, “The wealth of science and engineering data returned by this mission will be analyzed and used for years to come. The testing of high risk, advanced technologies means that many important future missions that otherwise would have been unaffordable or even impossible now are within our grasp. And as all macroscopic readers know, the rich scientific harvest from comet Borrelly is providing scientists fascinating new insights into these important members of the solar system family.”

Now Stardust has taken our investigations one step further. Collected from Comet Wild 2, these primitive dust grains will be stored safely in aerogel for study upon the probe’s return. NASA’s Donald Brownlee says, “Comet dust will also be studied in real time by a time-of-flight mass spectrometer derived from the PIA instrument carried to comet Halley on the Giotto mission. This instrument will provide data on the organic particle materials that may not survive aerogel capture, and it will provide an invaluable data set that can be used to evaluate the diversity among comets by comparison with Halley dust data recorded with the same technique.”

These very particles might contain an answer, explaining how interstellar dust and comets may have seeded life on Earth by providing the physical and chemical elements crucial to its development. According to Brownlee, “Stardust captured thousands of comet particles that will be returned to Earth for analysis, in intimate detail, by researchers around the world.” These dust samples will allow us to look back some 4.5 billion years – teaching us about the fundamental nature of interstellar grains and other solid materials – the very building blocks of our own solar system. The atoms found both on Earth and in our own bodies are the same as those released by comets.

And it just keeps getting better. Now en route to Comet 67P/Churyumov-Gerasimenko, ESA’s Rosetta will delve deeper into the mystery of comets as it attempts a landing on the surface. According to ESA, equipment such as “Grain Impact Analyser and Dust Accumulator (GIADA) will measure the number, mass, momentum, and velocity distribution of dust grains coming from the comet nucleus and from other directions (reflected by solar radiation pressure) – while Micro-Imaging Dust Analysis System (MIDAS) will study the dust environment around the comet. It will provide information on particle population, size, volume, and shape.”

A single cometary particle could be a composite of millions of individual interstellar dust grains, giving us new insight into galactic and nebular processes and increasing our understanding of both comets and stars. Just as we have produced amino acids in laboratory conditions that simulate what may occur in a comet, most of our information has been obtained indirectly. By understanding polarization, wavelength absorption, scattering properties and the shape of a silicate feature, we gain valuable insight into the physical properties of what we have yet to explore. Rosetta’s goal will be to carry a lander to a comet’s nucleus and deploy it on the surface. The lander science will focus on in-situ study of the composition and structure of the nucleus – an unparalleled study of cometary material – providing researchers like Dr. Jochen Kissel with valuable information.

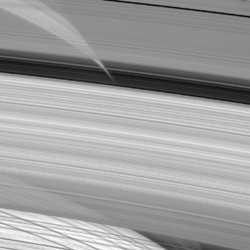

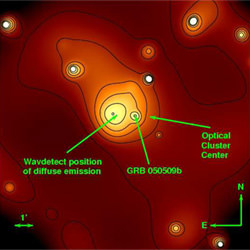

On July 4, 2005, the Deep Impact mission will arrive at Comet Tempel 1. Buried beneath its surface may be even more answers. In an effort to form a new crater on the comet’s surface, a 370 kg mass will be released to impact Tempel 1’s sunlit side. The result will be a fresh ejection of ice and dust particles, and observing the changes in activity will further our understanding of comets. The fly-by craft will monitor the structure and composition of the crater’s interior – relaying data back to Earth’s cometary dust expert, Kissel. “Deep Impact will be the first to simulate a natural event, the impact of a solid body onto a comet nucleus. The advantage is that the impact time is well known and a spacecraft properly equipped is around, when the impact occurs. This will definitely provide information of what is below the surfaces from which we have pictures by the previous missions. Many theories have been formulated to describe the thermal behavior of the comet nucleus, requiring crusts thick or thin and/or other features. I’m sure all these models will have to be complemented by new ones after the Deep Impact.”

After a lifetime of cometary research, Dr. Kissel is still following the dust trail. “It’s the fascination of comet research that after each new measurement there are new facts, which show us, how wrong we were. And that is still on a rather global level.” As our methods improve, so does our understanding of these visitors from the Oort Cloud. Says Kissel, “The situation is not simple and as many simple models describe the global cometary activities pretty well, while details have still to be worked, and models including the chemistry aspects are not yet available.” For a man who has been there since the very beginning, working with Deep Impact continues a distinguished career. “It’s exciting to be part of it,” says Dr. Kissel, “and I am eager to see what happens after the Deep Impact and grateful to be a part of it.”

For the very first time, studies will go well beneath the surface of a comet, revealing its pristine materials – untouched since its formation. What lies beneath the surface? Let’s hope spectroscopy shows carbon, hydrogen, nitrogen and oxygen. These are known to produce organic molecules, starting with the basic hydrocarbons, such as methane. Will these processes have increased in complexity to create polymers? Will we find the basis for carbohydrates, saccharides, lipids, glycerides, proteins and enzymes? Following the dust trail might very well lead to the foundation of the most spectacular of all organic matter – deoxyribonucleic acid – DNA.

Written by Tammy Plotner