Image credit: Gemini

To investors looking for the next sure thing, the silver coating on the Gemini South 8-meter telescope mirror might seem like an insider’s secret tip-off to invest in this valuable metal for a huge profit. However, it turns out that this immense mirror required less than two ounces (50 grams) of silver, not nearly enough to register on the precious metals markets. The real return on Gemini’s shiny investment is the way it provides unprecedented sensitivity from the ground when studying warm objects in space.

The new coating, the first of its kind ever to line the surface of a very large astronomical mirror, is among the final steps in making Gemini the most powerful infrared telescope on our planet. “There is no question that with this coating, the Gemini South telescope will be able to explore regions of star and planet formation, black holes at the centers of galaxies and other objects that have eluded other telescopes until now,” said Charlie Telesco of the University of Florida, who specializes in studying star- and planet-formation regions in the mid-infrared.

Covering the Gemini mirror with silver uses a process developed over several years of testing and experimentation to produce a coating that meets the stringent requirements of astronomical research. Gemini’s lead optical engineer, Maxime Boccas, who oversaw the mirror-coating development, said, “I guess you could say that after several years of hard work to identify and tune the best coating, we have found our silver lining!”

Most astronomical mirrors are coated with aluminum using an evaporation process, and require recoating every 12-18 months. Since the twin Gemini mirrors are optimized for viewing objects in both optical and infrared wavelengths, a different coating was specified. Planning and implementing the silver coating process for Gemini began with the design of twin 9-meter-wide coating chambers located at the observatory facilities in Chile and Hawaii. Each coating plant (originally built by the Royal Greenwich Observatory in the UK) incorporates devices called magnetrons to “sputter” a coating on the mirror. The sputtering process is necessary when applying multi-layered coatings on the Gemini mirrors in order to accurately control the thickness of the various materials deposited on the mirror’s surface. A similar coating process is commonly used on architectural glass to reduce air-conditioning costs and to give glass on buildings an attractive reflection and color, but this is the first time it has been applied to a large astronomical telescope mirror.

The coating is built up in a stack of four individual layers to ensure that the silver adheres to the glass base of the mirror and is protected from environmental elements and chemical reactions. As anyone with silverware knows, tarnish on silver reduces the reflection of light. The degradation of an unprotected coating on a telescope mirror would have a profound impact on its performance. Tests done at Gemini with dozens of small mirror samples over the past few years show that the silvered coating applied to the Gemini mirror should remain highly reflective and usable for at least a year between recoatings.

In addition to the large primary mirror, the telescope’s 1-meter secondary mirror and a third mirror that directs light into scientific instruments were also coated using the same protected silver coatings. The combination of these three mirror coatings, together with other design considerations, is responsible for the dramatic increase in Gemini’s sensitivity to thermal infrared radiation.

A key measure of a telescope’s performance in the infrared is its emissivity (how much heat it actually emits compared to the total amount it can theoretically emit) in the thermal or mid-infrared part of the spectrum. These emissions result in a background noise against which astronomical sources must be measured. Gemini has the lowest total thermal emissivity of any large astronomical telescope on the ground, with values under 4% prior to receiving its silver coating. With this new coating, Gemini South’s emissivity will drop to about 2%. At some wavelengths this has the same effect on sensitivity as increasing the diameter of the Gemini telescope from 8 to more than 11 meters! The result is a significant increase in the quality and amount of Gemini’s infrared data, which allows detection of objects that would otherwise be lost in the noise generated by heat radiating from the telescope. It is common among other ground-based telescopes to have emissivity values in excess of 10%.
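The 8-to-11-meter comparison can be reproduced with a rough scaling argument. The sketch below assumes a background-limited observation in which the telescope's own thermal emission dominates the noise and the detector samples a fixed patch of sky. Under those assumptions, which are ours and are not stated in the release, signal grows as D² while noise grows as D times the square root of the emissivity, so signal-to-noise scales as D / sqrt(emissivity). The function name is hypothetical and the model ignores sky emission entirely.

```python
import math


def equivalent_diameter(diameter_m, emissivity_old, emissivity_new):
    """Diameter of a telescope with the OLD emissivity that would match the
    sensitivity of the real telescope with the NEW emissivity.

    Assumes signal-to-noise scales as D / sqrt(emissivity), i.e. a
    background-limited observation dominated by telescope emission,
    measured through a fixed angular aperture on the sky.
    """
    if emissivity_old <= 0 or emissivity_new <= 0:
        raise ValueError("emissivities must be positive")
    return diameter_m * math.sqrt(emissivity_old / emissivity_new)


if __name__ == "__main__":
    # Values from the article: 8-meter mirror, emissivity 4% -> 2%.
    d_eq = equivalent_diameter(8.0, 0.04, 0.02)
    print(f"Equivalent diameter: {d_eq:.1f} m")
```

With the article's figures, halving the emissivity gives a factor of sqrt(2) in sensitivity, which corresponds to an equivalent diameter of roughly 11.3 meters, consistent with the "more than 11 meters" quoted above.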

The recoating procedure was successfully performed on May 31, and the newly coated Gemini South mirror has been re-installed and calibrated in the telescope. Engineers are currently testing the systems before returning the telescope to full operations. The Gemini North mirror on Mauna Kea will undergo the same coating process before the end of this year.

Why Silver?

The reason astronomers wish to use silver as the surface on a telescope mirror lies in its ability to reflect some types of infrared radiation more effectively than aluminum. However, it is not just the amount of infrared light that is reflected but also the amount of radiation actually emitted from the mirror (its thermal emissivity) that makes silver so attractive. This is a significant issue when observing in the mid-infrared (thermal) region of the spectrum, which is essentially the study of heat from space. “The main advantage of silver is that it reduces the total thermal emission of the telescope. This in turn increases the sensitivity of the mid-infrared instruments on the telescope and allows us to see warm objects like stellar and planetary nurseries significantly better,” said Scott Fisher, a mid-infrared astronomer at Gemini.

The advantage comes at a price, however. To use silver, the coating must be applied in several layers, each with a very precise and uniform thickness. To do this, devices called magnetrons are used to apply the coating. They work by surrounding an extremely pure metal plate (called the target) with a plasma cloud of gas (argon or nitrogen) that knocks atoms out of the target and deposits them uniformly on the mirror (which rotates slowly under the magnetron). Each layer is extremely thin, with the silver layer only about 0.1 microns thick, or about 1/200 the thickness of a human hair. The total amount of silver deposited on the mirror is approximately 50 grams.
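The 50-gram figure can be checked with back-of-the-envelope arithmetic. The sketch below treats the mirror as a flat, solid 8-meter disk (ignoring any central hole and the surface curvature, a simplifying assumption) and uses the bulk density of silver, about 10.49 g/cm³, a handbook value that does not appear in the release. A sputtered film may be somewhat less dense than bulk metal, so this is an estimate only; the function name is hypothetical.

```python
import math

# Bulk density of silver (handbook value; assumed, not from the article).
SILVER_DENSITY_KG_M3 = 10_490.0


def silver_mass_grams(mirror_diameter_m, layer_thickness_m,
                      density_kg_m3=SILVER_DENSITY_KG_M3):
    """Estimate the mass of a uniform silver film on a flat circular mirror."""
    area_m2 = math.pi * (mirror_diameter_m / 2.0) ** 2
    volume_m3 = area_m2 * layer_thickness_m
    return volume_m3 * density_kg_m3 * 1000.0  # convert kg to g


if __name__ == "__main__":
    # Values from the article: 8-meter mirror, 0.1-micron silver layer.
    mass_g = silver_mass_grams(8.0, 0.1e-6)
    print(f"Estimated silver mass: {mass_g:.0f} g")
```

The estimate comes out a little above 50 grams, in line with the "less than two ounces" quoted in the article.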

Studying Heat Originating from Space

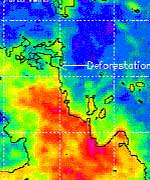

Some of the most intriguing objects in the universe emit radiation in the infrared part of the spectrum. Often described as “heat radiation,” infrared light is redder than the red light we see with our eyes. Sources that emit at these wavelengths are sought after by astronomers since most of their infrared radiation can pass through obscuring clouds of gas and dust and reveal secrets otherwise shrouded from view. The infrared wavelength regime is split into three main regions: near-, mid- and far-infrared. Near-infrared is just beyond what the human eye can see (redder than red), mid-infrared (often called thermal infrared) represents longer wavelengths of light usually associated with heat sources in space, and far-infrared represents cooler regions.

Gemini’s silver coating will enable the most significant improvements in the thermal infrared part of the spectrum. Studies in this wavelength range include star- and planet-formation regions, with intense research that seeks to understand how our own solar system formed some five billion years ago.

Original Source: Gemini News Release