Image credit: Chandra

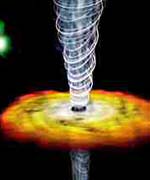

Imagine building a natural telescope more powerful than any telescope now operating. Then imagine using it to look close to the edge of a black hole, at the mouth of a jet where super-hot charged particles form and are spat millions of light-years into space. The journey would seem to lead to the edge of no return, a violent spot four billion light-years from Earth: a quasar named PKS 1257-326. Its faint twinkle in the sky earns it the catchier name of ‘blazar’, meaning a quasar that varies dramatically in brightness, and it may hide an even more mysterious inner black hole of enormous gravitational power.

A telescope able to peer into the mouth of the blazar would have to be gigantic, about a million kilometers across. Yet just such a natural lens has been found by a team of Australian and European astronomers, and remarkably, the lens is a cloud of gas. The idea of a vast, natural telescope is too elegant not to peer through.
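A rough check on the million-kilometer figure: a telescope's diffraction limit is about θ ≈ λ/D. The sketch below assumes a 5 cm radio wavelength (the article says only that the observations were at centimeter wavelengths) and drops the 1.22 factor for a circular dish, so the numbers are order-of-magnitude estimates. For comparison it also shows what an Earth-diameter baseline, roughly the practical limit for ground-based VLBI, would give at the same wavelength.

```python
import math

# One microarcsecond in radians: 1e-6 arcsec, with 3600 arcsec per degree.
UAS_TO_RAD = math.radians(1.0 / 3600.0) * 1e-6


def aperture_for_resolution(wavelength_m: float, resolution_uas: float) -> float:
    """Aperture (m) whose rough diffraction limit lambda / D equals resolution_uas."""
    return wavelength_m / (resolution_uas * UAS_TO_RAD)


def resolution_for_baseline(wavelength_m: float, baseline_m: float) -> float:
    """Rough angular resolution (microarcseconds) of an aperture or baseline, lambda / D."""
    return wavelength_m / baseline_m / UAS_TO_RAD


WAVELENGTH_M = 0.05           # assumed: a 5 cm radio wavelength
EARTH_DIAMETER_M = 1.2742e7   # roughly the longest ground-based VLBI baseline

print(f"Aperture for 10 microarcsec: {aperture_for_resolution(WAVELENGTH_M, 10.0) / 1e3:,.0f} km")
print(f"Earth-sized baseline: {resolution_for_baseline(WAVELENGTH_M, EARTH_DIAMETER_M):,.0f} microarcsec")
```

At 5 cm the required aperture comes out near a million kilometers, while an Earth-sized baseline resolves roughly 800 microarcseconds, about eighty times coarser than the 10-microarcsecond goal.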

The technique, dubbed ‘Earth-Orbit Synthesis’, was first outlined by Dr Jean-Pierre Macquart of the University of Groningen in the Netherlands and CSIRO’s Dr David Jauncey in a paper published in 2002. It promises researchers the ability to resolve details about 10 microarcseconds across – equivalent to seeing a sugar cube on the Moon from Earth.
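The sugar-cube comparison follows from the small-angle approximation θ ≈ size / distance. The cube size of 1.5 cm below is an assumption; the mean Earth-Moon distance of about 384,400 km is standard.

```python
import math

# Number of microarcseconds in one radian (about 206,265 arcsec per radian, times 1e6).
UAS_PER_RAD = math.degrees(1.0) * 3600.0 * 1e6


def angular_size_uas(size_m: float, distance_m: float) -> float:
    """Small-angle approximation: theta (rad) ~ size / distance, returned in microarcseconds."""
    return size_m / distance_m * UAS_PER_RAD


def size_for_angle_m(theta_uas: float, distance_m: float) -> float:
    """Inverse of angular_size_uas: physical size (m) subtending theta_uas at distance_m."""
    return theta_uas / UAS_PER_RAD * distance_m


MOON_DISTANCE_M = 3.844e8  # mean Earth-Moon distance
SUGAR_CUBE_M = 0.015       # assumed: a sugar cube about 1.5 cm on a side

print(f"Sugar cube on the Moon: {angular_size_uas(SUGAR_CUBE_M, MOON_DISTANCE_M):.1f} microarcsec")
print(f"Size subtending 10 microarcsec: {size_for_angle_m(10.0, MOON_DISTANCE_M) * 100:.1f} cm")
```

A 1.5 cm cube comes out at about 8 microarcseconds, and a cube of about 1.9 cm would subtend the full 10, so the comparison holds at the level of precision the article intends.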

“That’s a hundred times finer detail than we can see with any other current technique in astronomy,” says Dr. Hayley Bignall, who recently completed her PhD at the University of Adelaide and is now at JIVE, the Joint Institute for Very Long Baseline Interferometry in Europe. “It’s ten thousand times better than the Hubble Space Telescope can do. And it’s as powerful as any proposed future space-based optical and X-ray telescopes.”

Bignall made the observations with CSIRO’s Australia Telescope Compact Array, a radio telescope in eastern Australia. A microarcsecond is a measure of angular size, or how big an object looks on the sky: it is a millionth of an arcsecond, and an arcsecond is 1/3600 of a degree, so a microarcsecond is roughly a quarter of a billionth of a degree. For comparison, the sky spans 180 degrees from horizon to horizon.
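Written out step by step, the conversion uses only the arcsecond definition given later in the interview; the radian value is added for comparison with other angular estimates:

$$1\,\mu\text{as} = 10^{-6}\,\text{arcsec} = \frac{10^{-6}}{3600}\,\text{deg} \approx 2.8\times10^{-10}\,\text{deg} \approx 4.8\times10^{-12}\,\text{rad}$$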

How does the largest telescope work? The principle of using the clumpiness inside a cloud of gas is not entirely unfamiliar to night-watchers. Just as turbulence in Earth’s atmosphere makes the stars twinkle, our own Galaxy has a similar, invisible atmosphere of charged particles that fills the space between the stars. Any clumping of this gas can naturally form a lens, just as the change in density from air to glass bent and focused the light that Galileo saw when he first pointed his telescope towards the stars. The resulting twinkling is called scintillation, and the cloud acts like a lens.

Seeing better than anyone else may be remarkable, but how do astronomers decide where to look first? The team is particularly interested in using ‘Earth-Orbit Synthesis’ to peer close to the black holes in quasars, the super-bright cores of distant galaxies. These quasars subtend such small angles on the sky that they appear as mere points of light or radio emission. At radio wavelengths, some quasars are small enough to twinkle in our Galaxy’s atmosphere of charged particles, called the ionized interstellar medium. Quasars twinkle, or vary, much more slowly than the twinkling one might associate with visible stars, so observers have to be patient, even with the help of the most powerful telescopes. Any change in less than a day is considered fast; the fastest scintillators have signals that double or treble in strength in less than an hour. In fact, the best observations made so far take advantage of the Earth’s annual motion around the Sun, since observing through the year gives a more complete picture, potentially allowing astronomers to see the violent changes in the mouth of a black-hole jet. That’s one of the team’s goals: “to see to within a third of a light-year of the base of one of these jets,” according to CSIRO’s Dr David Jauncey. “That’s the ‘business end’ where the jet is made.”

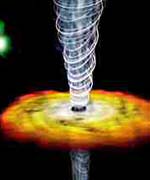

It is not possible to “see” into a black hole, because these collapsed objects are so dense that their overpowering gravity does not even allow light to escape. Only the behavior of matter outside the horizon, a boundary some distance from the black hole’s center, can signal that a black hole exists at all. The largest telescope may help astronomers understand the size of a jet at its base, the pattern of magnetic fields there, and how a jet evolves over time. “We can even look for changes as matter strays near the black hole and is spat out along the jets,” says Dr Macquart.

Astrobiology Magazine had the opportunity to talk with Hayley Bignall about how to make a telescope from gas clouds, and why peering deeper than anyone before may offer insight into remarkable events near black holes.

Astrobiology Magazine (AM): How did you first become interested in using gas clouds as part of a natural focus for resolving very distant objects?

Hayley Bignall (HB): The idea of using interstellar scintillation (ISS), a phenomenon due to radio wave scattering in turbulent, ionized Galactic gas “clouds”, to resolve very distant, compact objects, really represents the convergence of a couple of different lines of research, so I will outline a little of the historical background.

In the 1960s, radio astronomers used another kind of scintillation, interplanetary scintillation, due to scattering of radio waves in the solar wind, to measure sub-arcsecond (1 arcsecond = 1/3600 of a degree) angular sizes for radio sources. This was higher resolution than could be achieved by other means at the time. But these studies largely fell by the wayside with the advent of Very Long Baseline Interferometry (VLBI) in the late 1960s, which allowed direct imaging of radio sources with much higher angular resolution – today, VLBI achieves resolution better than a milliarcsecond.

I personally became interested in potential uses of interstellar scintillation through being involved in studies of radio source variability – in particular, variability of “blazars”. Blazar is a catchy name applied to some quasars and BL Lacertae objects – that is, Active Galactic Nuclei (AGN), probably containing supermassive black holes as their “central engines”, which have powerful jets of energetic, radiating particles pointed almost straight at us.

We then see effects of relativistic beaming in the radiation from the jet, including rapid variability in intensity across the whole electromagnetic spectrum, from radio to high-energy gamma rays. Most of the observed variability in these objects could be explained, but there was a problem: some sources showed very rapid, intra-day radio variability. If such short time-scale variability at such long (centimeter) wavelengths were intrinsic to the sources, they would be far too hot to stay around for years, as many were observed to do. Sources that hot should radiate all their energy away very quickly, as X-rays and gamma-rays. On the other hand, it was already known that interstellar scintillation affects radio waves; so the question of whether the very rapid radio variability was in fact ISS, or intrinsic to the sources, was an important one to resolve.
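The “far too hot” argument can be made concrete with a brightness-temperature estimate. Causality limits a source that varies on a time-scale Δt to a size of roughly c·Δt; combining that size with a distance gives an angular size, and the Rayleigh-Jeans law then turns a flux density into a brightness temperature. The flux density, frequency, time-scale and distance below are hypothetical round numbers, not measurements of PKS 1257-326, and the sketch ignores redshift and Doppler-boosting corrections. The roughly 10¹² K inverse-Compton limit it compares against is the usual benchmark above which a source should rapidly lose its energy as X-rays and gamma-rays.

```python
import math

C = 2.998e8       # speed of light, m/s
K_B = 1.381e-23   # Boltzmann constant, J/K
JY = 1e-26        # 1 jansky in W m^-2 Hz^-1
MPC = 3.0857e22   # 1 megaparsec in metres


def brightness_temperature(flux_jy: float, freq_hz: float,
                           variability_s: float, distance_mpc: float) -> float:
    """Rayleigh-Jeans brightness temperature (K) of a source whose radius is
    limited by light-travel time, R ~ c * dt. Ignores redshift and Doppler boosting."""
    radius_m = C * variability_s
    theta = radius_m / (distance_mpc * MPC)  # angular radius, radians
    solid_angle = math.pi * theta ** 2       # steradians
    return flux_jy * JY * C ** 2 / (2.0 * K_B * freq_hz ** 2 * solid_angle)


# Hypothetical inputs: 0.5 Jy at 4.8 GHz varying on a 1-hour time-scale,
# at an assumed distance of 1650 Mpc.
tb = brightness_temperature(0.5, 4.8e9, 3600.0, 1650.0)
print(f"T_b ~ {tb:.1e} K versus ~1e12 K inverse-Compton limit")
```

With these inputs the estimate lands around 10²⁰ K, many orders of magnitude above the limit, which is why intrinsic intra-day variability at centimeter wavelengths was so hard to accept.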

During my PhD research I found, by chance, rapid variability in the quasar (blazar) PKS 1257-326, which is one of the three most rapidly radio variable AGN ever observed. My colleagues and I were able to show conclusively that the rapid radio variability was due to ISS [scintillation]. The case for this particular source added to mounting evidence that intra-day radio variability in general is predominantly due to ISS.

Sources which show ISS must have very small, microarcsecond, angular sizes. Observations of ISS can in turn be used to “map” source structure with microarcsecond resolution. This is much higher resolution than even VLBI can achieve. The technique was outlined in a 2002 paper by two of my colleagues, Dr Jean-Pierre Macquart and Dr David Jauncey.

The quasar PKS 1257-326 proved to be a very nice “guinea pig” with which to demonstrate that the technique really works.

AM: The principles of scintillation are visible to anyone, even without a telescope, correct? A star twinkles because it covers a very small angle in the sky (being so far away), but a planet in our solar system doesn’t scintillate visibly. Is this a fair comparison of the principle for estimating distances visually with scintillation?

HB: The comparison with seeing stars twinkle as a result of atmospheric scintillation (due to turbulence and temperature fluctuations in the Earth’s atmosphere) is a fair one; the basic phenomenon is the same. We don’t see planets twinkle because they have much larger angular sizes – the scintillation gets “smeared out” over the planet’s diameter. In this case, of course, it is because the planets are so close to us that they subtend larger angles on the sky than stars.

Scintillation is not really useful for estimating distances to quasars, however: objects that are further away do not always have smaller angular sizes. For example, all pulsars (spinning neutron stars) in our own Galaxy scintillate because they have very tiny angular sizes, much smaller than any quasar, even though quasars are often billions of light-years away. In fact, scintillation has been used to estimate pulsar distances. But for quasars, there are many factors besides distance which affect their apparent angular size, and to complicate matters further, at cosmological distances, the angular size of an object no longer varies as the inverse of distance. Generally the best way of estimating the distance to a quasar is to measure the redshift of its optical spectrum. Then we can convert measured angular scales (e.g. from scintillation or VLBI observations) to linear scales at the redshift of the source.
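Converting an angle to a linear size at the redshift of a source requires the angular-diameter distance, which in an expanding universe is not simply the distance light has travelled. The sketch below integrates a flat Lambda-CDM model with assumed parameters (H₀ = 70 km/s/Mpc, Ω_m = 0.3) using Simpson’s rule; the redshifts are illustrative and are not the measured redshift of any particular quasar.

```python
import math

C_KM_S = 299792.458  # speed of light, km/s
LY_PER_PC = 3.2616   # light-years per parsec


def angular_diameter_distance_mpc(z: float, h0: float = 70.0, omega_m: float = 0.3,
                                  steps: int = 1000) -> float:
    """Angular-diameter distance (Mpc) in a flat Lambda-CDM model (assumed parameters).

    Comoving distance = (c / H0) * integral_0^z dz' / E(z'), evaluated with Simpson's
    rule (steps must be even); angular-diameter distance = comoving distance / (1 + z).
    """
    omega_l = 1.0 - omega_m

    def inv_e(zp: float) -> float:
        return 1.0 / math.sqrt(omega_m * (1.0 + zp) ** 3 + omega_l)

    h = z / steps
    total = inv_e(0.0) + inv_e(z)
    for i in range(1, steps):
        total += (4.0 if i % 2 else 2.0) * inv_e(i * h)
    comoving_mpc = (C_KM_S / h0) * total * h / 3.0
    return comoving_mpc / (1.0 + z)


def linear_size_ly(theta_uas: float, z: float) -> float:
    """Linear size (light-years) subtending theta_uas microarcseconds at redshift z."""
    theta_rad = math.radians(theta_uas * 1e-6 / 3600.0)
    return theta_rad * angular_diameter_distance_mpc(z) * 1e6 * LY_PER_PC


print(f"10 microarcsec at z=1 = {linear_size_ly(10.0, 1.0):.2f} light-years")
for z in (0.5, 1.0, 1.6, 3.0):
    print(f"z={z}: D_A = {angular_diameter_distance_mpc(z):.0f} Mpc")
```

At z = 1, 10 microarcseconds corresponds to roughly a quarter of a light-year. The loop illustrates the point about cosmological distances: in this model the angular-diameter distance peaks near z ≈ 1.6 and then falls, so an object of fixed size at z = 3 looks larger on the sky than the same object at z = 1.6.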

AM: The example quasar for this telescope is a radio source, observed as it varied over an entire year. Are there any natural limits to the types of sources or the length of observation?

HB: There are angular size cut-offs, beyond which the scintillation gets “quenched”. One can picture the radio source brightness distribution as a bunch of independently scintillating “patches” of a given size, so that as the source gets larger, the number of such patches increases, and eventually the scintillation over all the patches averages out so that we cease to observe any variations at all. From previous observations we know that for extragalactic sources, the shape of the radio spectrum has a lot to do with how compact a source is – sources with “flat” or “inverted” radio spectra (i.e. flux density increasing towards shorter wavelengths) are generally the most compact. These also tend to be “blazar”-type sources.
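The quenching picture can be illustrated with a toy simulation: treat the source as N equally bright patches that each fluctuate independently, and measure the fractional rms (the modulation index) of the total. This is an illustrative statistical model, not the scattering calculation used in practice; the 30% per-patch modulation and the sample count are arbitrary choices.

```python
import random
import statistics


def modulation_index(n_patches: int, n_samples: int = 5000,
                     patch_modulation: float = 0.3, seed: int = 1) -> float:
    """Toy model: total flux is the mean of n_patches independent, equally bright
    patches, each fluctuating with fractional rms patch_modulation.
    Returns rms / mean of the total flux over n_samples draws."""
    rng = random.Random(seed)
    totals = []
    for _ in range(n_samples):
        flux = sum(1.0 + rng.gauss(0.0, patch_modulation) for _ in range(n_patches))
        totals.append(flux / n_patches)
    return statistics.pstdev(totals) / statistics.fmean(totals)


for n in (1, 4, 25, 100):
    print(f"{n:3d} patches: modulation index {modulation_index(n):.3f}")
```

The modulation index falls roughly as 1/√N, from about 0.3 for one patch to about 0.03 for a hundred, so a source large enough to contain many patches shows little or no variation.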

As far as the length of observation goes, it is necessary to obtain many independent samples of the scintillation pattern. This is because scintillation is a stochastic process, and we need to know some statistics of the process in order to extract useful information. For fast scintillators like PKS 1257-326, we can get an adequate sample of the scintillation pattern from just one typical 12-hour observing session. Slower scintillators need to be observed over several days to get the same information. However, there are some unknowns to solve for, such as the bulk velocity of the scattering “screen” in the Galactic interstellar medium (ISM). By observing at intervals spaced over a whole year, we can solve for this velocity – and importantly, we also get two-dimensional information on the scintillation pattern and hence the source structure. As the Earth goes around the Sun, we effectively cut through the scintillation pattern at different angles, as the relative Earth/ISM velocity varies over the course of the year. Our research group dubbed this technique “Earth Orbital Synthesis”, as it is analogous to “Earth rotation synthesis”, a standard technique in radio interferometry.
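The annual cycle can be sketched with simple vector arithmetic. The scintillation time-scale is roughly the size of the scintillation pattern divided by the speed at which the pattern sweeps past the Earth, and that relative velocity is the screen’s velocity minus the Earth’s orbital velocity. In this toy geometry the source is assumed to lie at the ecliptic pole, so the Earth’s full orbital velocity lies in the plane of the sky; the screen velocity and pattern scale are hypothetical values.

```python
import math

V_EARTH_KM_S = 29.8  # Earth's mean orbital speed


def relative_velocity(day: float, v_screen: tuple = (20.0, 10.0)) -> tuple:
    """Screen velocity relative to Earth, projected on the sky (km/s).

    Assumes a source at the ecliptic pole, so Earth's orbital velocity lies entirely
    in the plane of the sky and rotates once per year. v_screen is hypothetical."""
    phase = 2.0 * math.pi * day / 365.25
    v_earth = (-V_EARTH_KM_S * math.sin(phase), V_EARTH_KM_S * math.cos(phase))
    return (v_screen[0] - v_earth[0], v_screen[1] - v_earth[1])


def scintillation_timescale_h(day: float, pattern_scale_km: float = 1.0e5) -> float:
    """Time (hours) for a pattern of hypothetical size pattern_scale_km to drift past."""
    vx, vy = relative_velocity(day)
    return pattern_scale_km / math.hypot(vx, vy) / 3600.0


for day in range(0, 365, 30):
    vx, vy = relative_velocity(day)
    angle = math.degrees(math.atan2(vy, vx))
    print(f"day {day:3d}: {math.hypot(vx, vy):5.1f} km/s at {angle:6.1f} deg, "
          f"time-scale {scintillation_timescale_h(day):4.1f} h")
```

Both the speed and the direction of the relative velocity change through the year, which is what lets observers cut through the pattern at different angles. A real analysis would project the Earth’s velocity onto the sky for the actual source direction and fit the screen velocity as an unknown.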

AM: One recent estimate suggested that there are ten times more stars in the known universe than grains of sand on Earth. Can you describe why jets and black holes are interesting as difficult-to-resolve objects, even for current and future space telescopes such as Hubble and Chandra?

HB: The objects we are studying are some of the most energetic phenomena in the universe. AGN can be up to ~10¹³ (10 to the power of 13, or 10 trillion) times more luminous than the Sun. They are unique “laboratories” for high energy physics. Astrophysicists would like to fully understand the processes involved in forming these tremendously powerful jets close to the central supermassive black hole. Using scintillation to resolve the inner regions of radio jets, we are peering close to the “nozzle” where the jet forms – closer to the action than we can see with any other technique!

AM: In your research paper, you point out that how fast and how strongly the radio signals vary depends on the size and shape of the radio source, the size and structure of the gas clouds, the Earth’s speed and direction as it travels around the Sun, and the speed and direction in which the gas clouds are travelling. Are there built-in assumptions about either the shape of the gas cloud ‘lens’ or the shape of observed object that is accessible with the technique?

The Ring Nebula, although not useful for imaging through, has the suggestive look of a far-away telescope lens. Lying 2,000 light-years away in the direction of the constellation Lyra, the ring formed in the late stages of its central star’s life, when the star shed a thick, expanding outer layer of gas. Credit: NASA Hubble HST

HB: Rather than think of gas clouds, it is perhaps more accurate to picture a phase-changing “screen” of ionized gas, or plasma, which contains a large number of cells of turbulence. The main assumption which goes into the model is that the size scale of the turbulent fluctuations follows a power-law spectrum – this seems to be a reasonable assumption, from what we know about general properties of turbulence. The turbulence could be preferentially elongated in a particular direction, due to magnetic field structure in the plasma, and in principle we can get some information on this from the observed scintillation pattern. We also get some information from the scintillation pattern about the shape of the observed object, so there are no built-in assumptions about that, although at this stage we can only use quite simple models to describe the source structure.
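The power-law assumption is commonly written as below, where Φ is the spatial power spectrum of electron-density fluctuations at wavenumber q (between the inner and outer scales of the turbulence) and D_φ is the phase structure function across the screen at separation r. The interview does not give the index used; β = 11/3, the Kolmogorov value, is the one most often assumed for the interstellar medium and is shown only as a standard example.

$$\Phi_{n_e}(q) = C_N^2\, q^{-\beta}, \qquad D_\phi(r) \propto r^{\beta - 2} \quad (2 < \beta < 4)$$

$$\beta = \tfrac{11}{3} \;\Rightarrow\; D_\phi(r) \propto r^{5/3}$$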

AM: Are fast scintillators a good target for expanding the capabilities of the method?

HB: Fast scintillators are good simply because they don’t require as much observing time as slower scintillators to get the same amount of information. The first three “intra-hour” scintillators have taught us a lot about the scintillation process and about how to do “Earth Orbital Synthesis”.

AM: Are there additional candidates planned for future observations?

HB: My colleagues and I have recently undertaken a large survey, using the Very Large Array in New Mexico, to look for new scintillating radio sources. The first results of this survey, led by Dr Jim Lovell of the CSIRO’s Australia Telescope National Facility (ATNF), were recently published in the Astronomical Journal (October 2003). Out of 700 flat spectrum radio sources observed, we found more than 100 sources which showed significant variability in intensity over a 3-day period. We are undertaking follow-up observations in order to learn more about source structure on ultra-compact, microarcsecond scales. We will compare these results with other source properties such as emission at other wavelengths (optical, X-ray, gamma-ray), and structure on larger spatial scales, such as that seen with VLBI. In this way we hope to learn more about these very compact, high brightness temperature sources, and also, in the process, learn more about properties of the interstellar medium of our own Galaxy.

It seems that the reason for very fast scintillation in some sources is that the plasma “scattering screen” causing the bulk of the scintillation is quite nearby, within 100 light-years of the solar system. These nearby “screens” are apparently quite rare. Our survey found very few fast scintillators, which was somewhat surprising as two of the three fastest known scintillators were discovered serendipitously. We thought that there might be many more such sources!
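A rough sketch of why a nearby screen means fast scintillation: for a point-like source in weak scattering, the scintillation pattern has a characteristic size set by the Fresnel scale r_F = √(λD/2π) (one common convention), which grows with the screen distance D. Dividing by the relative speed of the pattern gives a time-scale. The 6 cm wavelength and 30 km/s speed are assumptions, and a source larger than the Fresnel angle would scintillate more slowly than this estimate.

```python
import math

LIGHT_YEAR_M = 9.4607e15


def fresnel_scale_m(wavelength_m: float, screen_distance_m: float) -> float:
    """Fresnel scale r_F = sqrt(lambda * D / (2 pi)) for a screen at distance D."""
    return math.sqrt(wavelength_m * screen_distance_m / (2.0 * math.pi))


def point_source_timescale_s(wavelength_m: float, screen_distance_ly: float,
                             speed_km_s: float) -> float:
    """Weak-scattering time-scale for a point source: Fresnel scale / relative speed."""
    r_f = fresnel_scale_m(wavelength_m, screen_distance_ly * LIGHT_YEAR_M)
    return r_f / (speed_km_s * 1e3)


for d_ly in (100, 1000, 3000):
    t = point_source_timescale_s(0.06, d_ly, 30.0)  # assumed 6 cm, 30 km/s
    print(f"screen at {d_ly:5d} ly: ~{t / 60:.0f} min")
```

A screen at 100 light-years gives a time-scale under an hour, while screens ten or thirty times farther away stretch it to a few hours, because the time-scale grows as √D.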

Original Source: Astrobiology Magazine