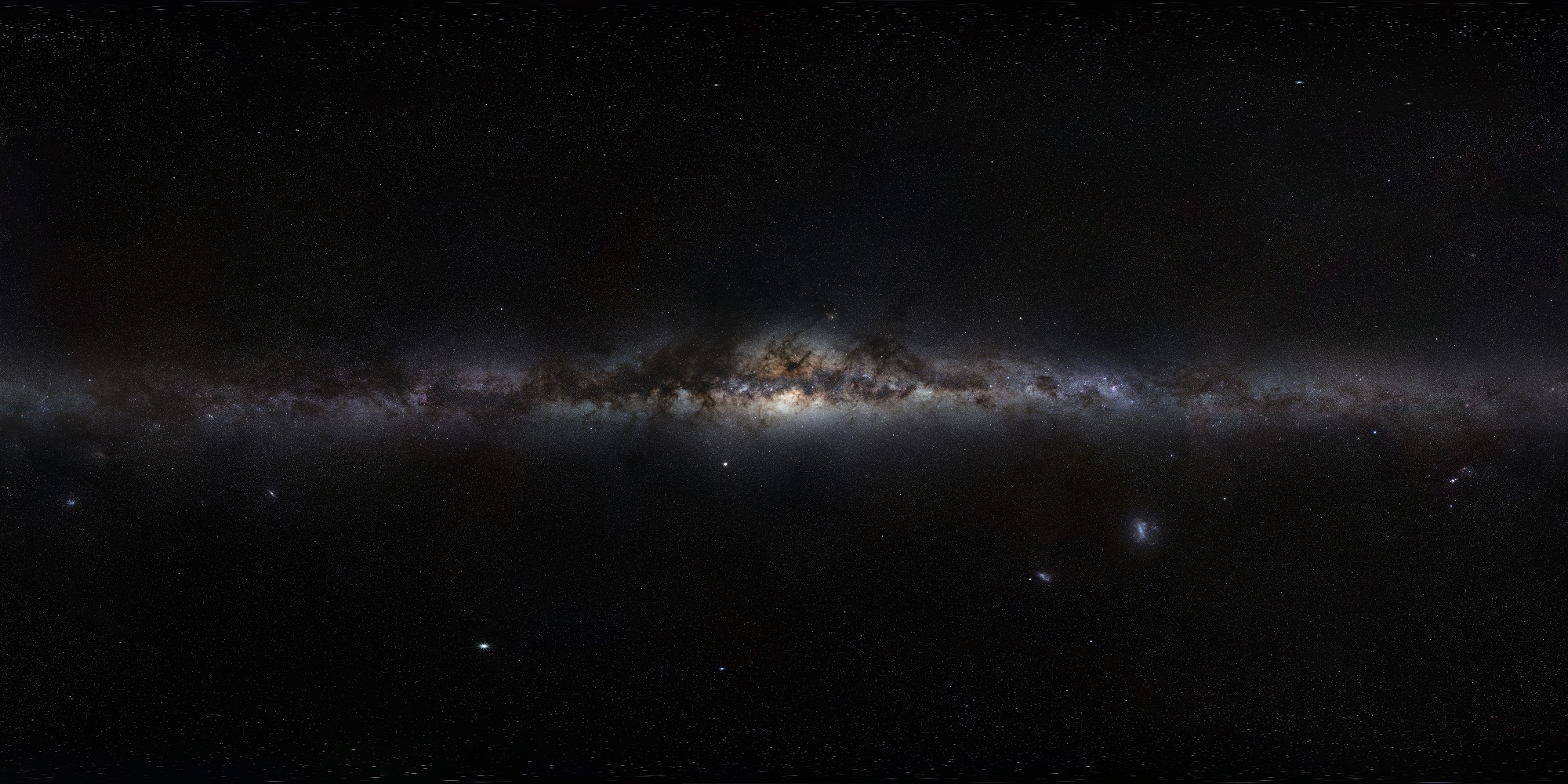

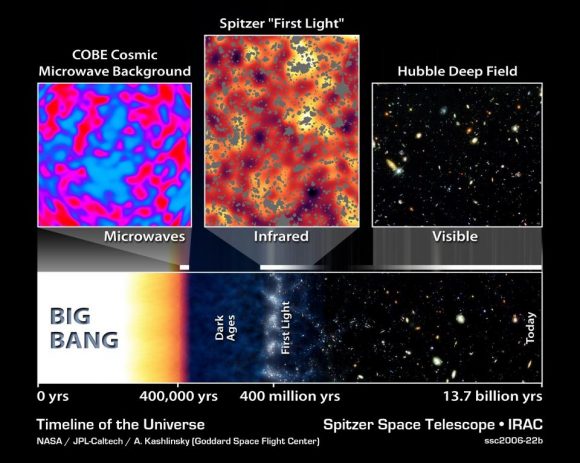

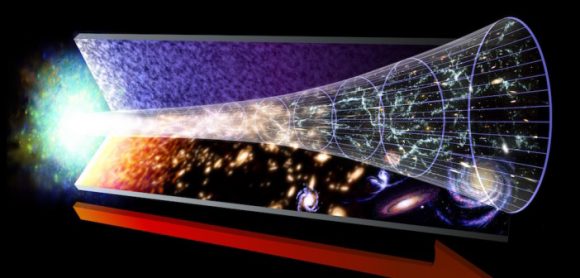

One of the tenets of our cosmological model is that the universe is expanding. For reasons we still don’t fully understand, space itself is stretching over time. It’s a strange idea to wrap your head around, but the evidence for it is conclusive. It is not simply that galaxies appear to be moving away from us, as seen by their redshift. Distant galaxies also appear larger than they should due to cosmic expansion. They are also distributed in superclusters separated by large voids. Then there is the cosmic microwave background, where even its small fluctuations in temperature confirm cosmic expansion.

Continue reading “Gravitational lenses could be the key to measuring the expansion rate of the Universe”

The Average Temperature of the Universe has Been Getting Hotter and Hotter

For almost a century, astronomers have understood that the Universe is in a state of expansion. Since the 1990s, they have come to understand that as of four billion years ago, the rate of expansion has been speeding up. As this progresses, and the galaxy clusters and filaments of the Universe move farther apart, scientists theorize that the mean temperature of the Universe will gradually decline.

But according to new research led by the Center for Cosmology and AstroParticle Physics (CCAPP) at Ohio State University, it appears that the Universe is actually getting hotter as time goes on. After probing the thermal history of the Universe over the last 10 billion years, the team concluded that the mean temperature of cosmic gas has increased more than 10 times and reached about 2.2 million K (~2.2 million °C; 4 million °F) today.
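As a quick check on the units in that figure, the conversion from kelvin can be sketched in a few lines of Python. The function names are illustrative only; the 2.2 million K value is the one quoted above.

```python
# Convert the quoted mean gas temperature from kelvin to other scales.
# Function names are illustrative; only the 2.2 million K figure comes from the article.

def kelvin_to_celsius(kelvin):
    return kelvin - 273.15

def kelvin_to_fahrenheit(kelvin):
    return kelvin_to_celsius(kelvin) * 9.0 / 5.0 + 32.0

T_KELVIN = 2.2e6  # mean temperature of cosmic gas today, as quoted

print(f"{kelvin_to_celsius(T_KELVIN):,.0f} °C")
print(f"{kelvin_to_fahrenheit(T_KELVIN):,.0f} °F")
```

At temperatures this high the 273-degree offset is negligible, so the Celsius value is essentially the same as the kelvin value, and the Fahrenheit value comes out just under 4 million.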

Continue reading “The Average Temperature of the Universe has Been Getting Hotter and Hotter”

The Tools Humanity Will Need for Living in the Year 1 Trillion

Since the 1990s, astrophysicists have known that for the past few billion years, the Universe has been experiencing an accelerated rate of expansion. This gave rise to the theory that the Universe is permeated by a mysterious invisible energy known as “dark energy”, which acts against gravity and is pushing the cosmos apart. In time, this energy will become the dominant force in the Universe, causing all stars and galaxies to spread beyond the cosmic horizon.

At this point, all stars and galaxies in the Universe will no longer be visible or accessible from any other. The question remains, what will intelligent civilizations (such as our own) do for resources and energy at this point? This question was addressed in a recent paper by Dr. Abraham Loeb – the Frank B. Baird, Jr., Professor of Science at Harvard University and the Chair of the Harvard Astronomy Department.

The paper, “Securing Fuel for our Frigid Cosmic Future”, recently appeared online. As he indicates in his study, when the Universe is ten times its current age (roughly 138 billion years old), all stars outside the Local Group of galaxies will no longer be accessible to us, since they will be receding faster than the speed of light. For this reason, he recommends that humanity follow the lesson from Aesop’s fable, “The Ants and the Grasshopper”.

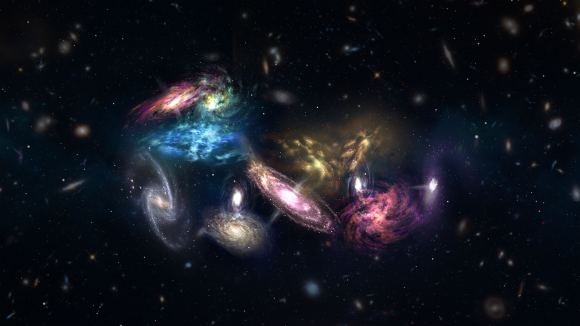

This classic tale tells the story of ants who spent the summer collecting food for the winter while the grasshopper chose to enjoy himself. While different versions of the story exist that offer different takes on the importance of hard work, charity, and compassion, the lesson is simple: always be prepared. In this respect, Loeb recommends that advanced species migrate to rich clusters of galaxies.

These clusters represent the largest reservoirs of matter bound by gravity and would therefore be better able to resist the accelerated expansion of the Universe. As Dr. Loeb told Universe Today via email:

“In my essay I point out that mother Nature was kind to us as it spontaneously gave birth to the same massive reservoir of fuel that we would have aspired to collect by artificial means. Primordial density perturbations from the early universe led to the gravitational collapse of regions as large as tens of millions of light years, assembling all the matter in them into clusters of galaxies – each containing the equivalent of a thousand Milky Way galaxies.”

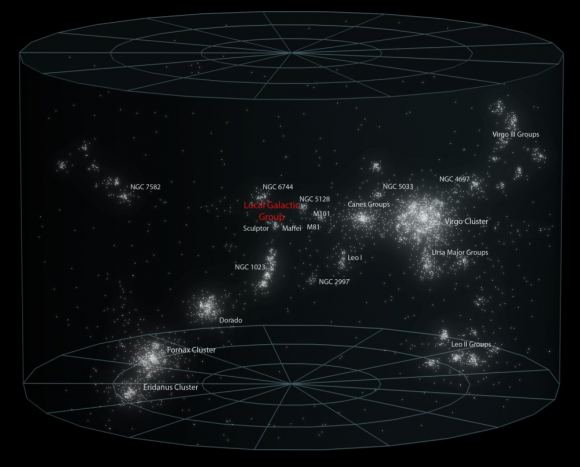

Dr. Loeb also indicated where humanity (or other advanced civilizations) should consider relocating to when the expansion of the Universe causes the stars outside the Local Group to recede beyond the cosmic horizon. Within 50 million light years, he indicates, lies the Virgo Cluster, which contains about a thousand times more matter than the Milky Way Galaxy. The second closest is the Coma Cluster, a collection of over 1000 galaxies located about 336 million light years away.

In addition to offering a solution to the accelerating expansion of the Universe, Dr. Loeb’s study also presents some interesting possibilities when it comes to the search for extra-terrestrial intelligence (SETI). If, in fact, there are already advanced civilizations migrating to prepare for the inevitable expansion of the Universe, they may be detectable by various means. As Dr. Loeb explained:

“If traveling civilizations transmit powerful signals then we might be able to see evidence for their migration towards clusters of galaxies. Moreover, we would expect a larger concentration of advanced civilizations in clusters than would be expected simply by counting the number of galaxies there. Those that settle there could establish more prosperous communities, in analogy to civilizations near rivers or lakes on Earth.”

This paper is similar to a study Dr. Loeb conducted back in 2011, which appeared in the Journal of Cosmology and Astroparticle Physics under the title “Cosmology with Hypervelocity Stars“. At the time, Dr. Loeb was addressing what would happen in the distant future when all extragalactic light sources will cease to be visible or accessible due to the accelerating expansion of the Universe.

This study was a follow-up to a 2001 paper in which Dr. Loeb addressed what would become of the Universe in billions of years – which appeared in the journal Physical Review Letters under the title “The Long–Term Future of Extragalactic Astronomy“. Shortly thereafter, Dr. Loeb and Freeman Dyson himself began to correspond about what could be done to address this problem.

Their correspondence was the subject of an article by Nathan Sanders (a writer for Astrobites) who recounted what Dr. Loeb and Dr. Dyson had to say on the matter. As Dr. Loeb recalls:

“A decade ago I wrote a few papers on the long-term future of the Universe, trillions of years from now. Since the cosmic expansion is accelerating, I showed that once the universe will age by a factor of ten (about a hundred billion years from now), all matter outside our Local Group of galaxies (which includes the Milky Way and the Andromeda galaxy, along with their satellites) will be receding away from us faster than light. After one of my papers was posted in 2011, Freeman Dyson wrote to me and suggested a vast “cosmic engineering project” in which we will concentrate matter from a large-scale region around us to a small enough volume such that it will stay bound by its own gravity and not expand with the rest of the Universe.”

At the time, Dr. Loeb noted that data gathered by the Sloan Digital Sky Survey (SDSS) showed no sign that attempts at “super-engineering” were taking place. This was based on the fact that the galaxy clusters observed by the SDSS were not overdense, nor did they exhibit particularly high velocities (as would be expected if matter were being deliberately concentrated). To this, Dr. Dyson wrote: “That is disappointing. On the other hand, if our colleagues have been too lazy to do the job, we have plenty of time to start doing it ourselves.”

A similar idea was presented in a recent paper by Dr. Dan Hooper, an astrophysicist from the Fermi National Accelerator Laboratory (FNAL) and the University of Chicago. In his study, Dr. Hooper suggested that advanced species could prepare for the time when all stars outside the Local Group recede beyond the cosmic horizon (100 billion years from now) by harvesting stars across tens of millions of light years.

This harvesting would consist of building unconventional Dyson Spheres that would use the energy they collected from stars to propel them towards the center of the species’ civilization. However, only stars that range in mass from 0.2 to 1 solar masses would be usable, as high-mass stars would evolve beyond their main sequence before reaching the destination and low-mass stars would not generate enough energy for acceleration to make it in time.
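The upper mass limit follows from how quickly massive stars burn out. A rough sketch, assuming the common textbook scaling in which main-sequence lifetime goes as mass to the power of -2.5 and is normalized to about 10 billion years for the Sun (this scaling is an assumption for illustration, not a figure taken from Dr. Hooper's paper):

```python
# Rough main-sequence lifetime estimate.
# ASSUMPTION: lifetime ~ 10 Gyr * (M / M_sun) ** -2.5, a textbook approximation
# that is only indicative, especially for very low-mass stars.

SOLAR_LIFETIME_GYR = 10.0

def main_sequence_lifetime_gyr(mass_solar):
    """Approximate main-sequence lifetime in billions of years."""
    return SOLAR_LIFETIME_GYR * mass_solar ** -2.5

for mass in (0.2, 0.5, 1.0, 2.0):
    print(f"{mass:.1f} solar masses: ~{main_sequence_lifetime_gyr(mass):.1f} Gyr")
```

Under this approximation a star twice the Sun's mass lasts under 2 billion years, which illustrates why heavier stars would burn out during a journey spanning billions of years. The lower limit of 0.2 solar masses comes from a different constraint (energy output available for propulsion), which this sketch does not model.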

But as Dr. Loeb indicates, there are additional limitations to this approach, which make migrating more attractive than harvesting.

“First, we do not know of any technology that enables moving stars around, and moreover Sun-like stars only shine for about ten billion years (of order the current age of the Universe) and cannot serve as nuclear furnaces that would keep us warm into the very distant future. Therefore, an advanced civilization does not need to embark on a giant construction project as suggested by Dyson and Hooper, but only needs to propel itself towards the nearest galaxy cluster and take advantage of the cluster resources as fuel for its future prosperity.”

While this may seem like a truly far-off concern, it does raise some interesting questions about the long-term evolution of the Universe and how intelligent civilizations may be forced to adapt. In the meantime, if it offers some additional possibilities for searching for extra-terrestrial intelligences (ETIs), then so much the better.

And as Dr. Dyson said, if there are currently no ETIs preparing for the coming “cosmic winter” with cosmic engineering projects, perhaps it is something humanity can plan to tackle someday!

Further Reading: arXiv, Journal of Cosmology and Astroparticle Physics, astrobites, astrobites (2)

Precise New Measurements From Hubble Confirm the Accelerating Expansion of the Universe. Still no Idea Why it’s Happening

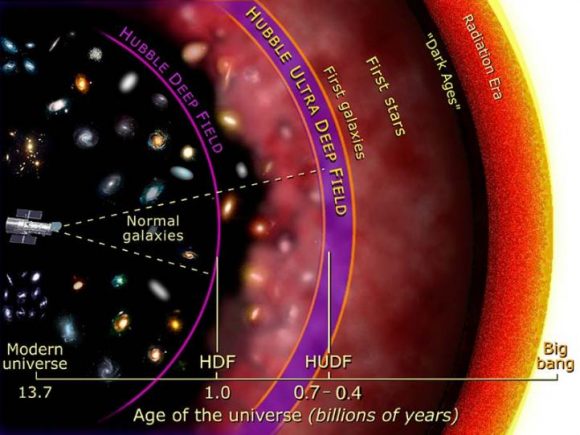

In the 1920s, Edwin Hubble made the groundbreaking revelation that the Universe was in a state of expansion. Originally predicted as a consequence of Einstein’s Theory of General Relativity, this confirmation led to what came to be known as Hubble’s Constant. In the ensuing decades, and thanks to the deployment of next-generation telescopes – like the aptly named Hubble Space Telescope (HST) – scientists have been forced to revise this value.

In short, in the past few decades, the ability to see farther into space (and deeper into time) has allowed astronomers to make more accurate measurements about how rapidly the early Universe expanded. And thanks to a new survey performed using Hubble, an international team of astronomers has been able to conduct the most precise measurements of the expansion rate of the Universe to date.

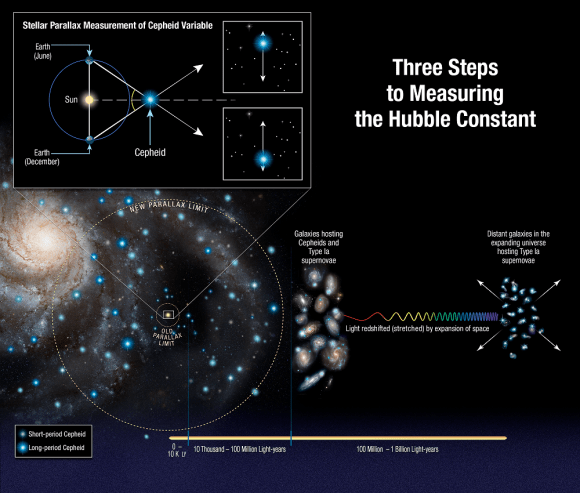

This survey was conducted by the Supernova H0 for the Equation of State (SH0ES) team, an international group of astronomers that has been on a quest to refine the accuracy of the Hubble Constant since 2005. The group is led by Adam Riess of the Space Telescope Science Institute (STScI) and Johns Hopkins University, and includes members from the American Museum of Natural History, the Niels Bohr Institute, the National Optical Astronomy Observatory, and many prestigious universities and research institutions.

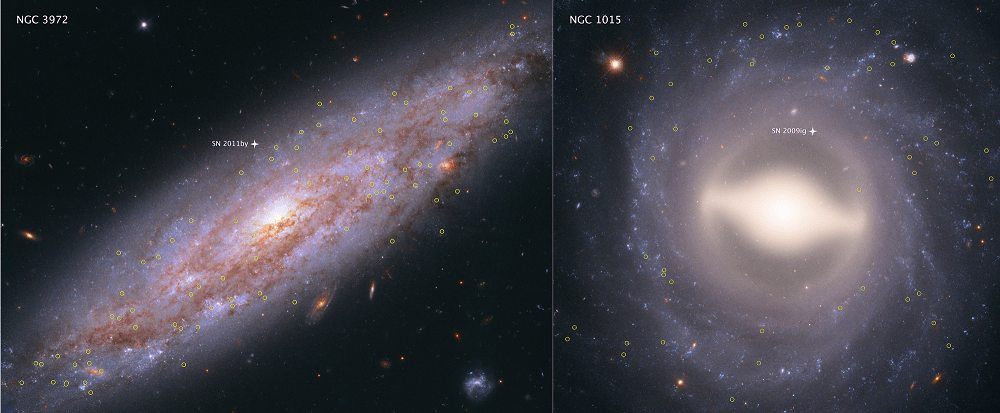

The study that describes their findings recently appeared in The Astrophysical Journal under the title “Type Ia Supernova Distances at Redshift >1.5 from the Hubble Space Telescope Multi-cycle Treasury Programs: The Early Expansion Rate”. For the sake of their study, and consistent with their long-term goals, the team sought to construct a new and more accurate “distance ladder”.

This tool is how astronomers have traditionally measured distances in the Universe. It relies on distance markers like Cepheid variables – pulsating stars whose distances can be inferred by comparing their intrinsic brightness with their apparent brightness. These measurements are then compared to the way light from distant galaxies is redshifted to determine how fast the space between galaxies is expanding.
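The comparison of intrinsic and apparent brightness is usually expressed through the distance modulus, m − M = 5 log10(d / 10 pc). A minimal sketch of that relation, using made-up magnitudes and ignoring dust extinction (the function name and example values are illustrative, not taken from the SH0ES study):

```python
# Distance from the distance modulus: m - M = 5 * log10(d / 10 pc).
# The magnitudes below are invented for illustration; extinction is ignored.

def distance_parsecs(apparent_mag, absolute_mag):
    """Distance in parsecs implied by apparent and absolute magnitudes."""
    return 10 ** ((apparent_mag - absolute_mag + 5.0) / 5.0)

# A hypothetical Cepheid with absolute magnitude -4 seen at apparent magnitude 21.
d_pc = distance_parsecs(apparent_mag=21.0, absolute_mag=-4.0)
print(f"{d_pc:,.0f} parsecs")  # 1,000,000 parsecs, i.e. 1 megaparsec
```

For a Cepheid, the absolute magnitude would itself be estimated from the star's pulsation period, which is what makes these stars usable as distance markers.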

From this, the Hubble Constant is derived. To build their distance ladder, Riess and his team conducted parallax measurements using Hubble’s Wide Field Camera 3 (WFC3) of eight newly-analyzed Cepheid variable stars in the Milky Way. These stars are about 10 times farther away than any studied previously – between 6,000 and 12,000 light-years from Earth – and pulsate at longer intervals.
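To get a sense of how small these parallax shifts are, the standard relation d (parsecs) = 1 / p (arcseconds) can be inverted for the distances quoted above. This is a back-of-the-envelope sketch, not the team's analysis; the conversion of 3.2616 light-years per parsec is a standard value.

```python
# Parallax angle implied by a given distance, using d [pc] = 1 / p [arcsec].

LIGHT_YEARS_PER_PARSEC = 3.2616

def parallax_microarcsec(distance_ly):
    """Parallax angle in microarcseconds for a distance in light-years."""
    distance_pc = distance_ly / LIGHT_YEARS_PER_PARSEC
    return 1.0e6 / distance_pc

for distance_ly in (6_000, 12_000):
    print(f"{distance_ly:,} ly -> ~{parallax_microarcsec(distance_ly):.0f} microarcseconds")
```

The results are a few hundred microarcseconds, well under a thousandth of an arcsecond, which is why repeated measurements over several years are needed.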

To ensure accuracy that would account for the wobbles of these stars, the team also developed a new method where Hubble would measure a star’s position a thousand times a minute every six months for four years. The team then compared the brightness of these eight stars with more distant Cepheids to ensure that they could calculate the distances to other galaxies with more precision.

Using the new technique, Hubble was able to capture the change in position of these stars relative to others, which simplified things immensely. As Riess explained in a NASA press release:

“This method allows for repeated opportunities to measure the extremely tiny displacements due to parallax. You’re measuring the separation between two stars, not just in one place on the camera, but over and over thousands of times, reducing the errors in measurement.”

Compared to previous surveys, the team was able to extend the number of stars analyzed to distances up to 10 times farther. However, their results also contradicted those obtained by the European Space Agency’s (ESA) Planck satellite, which has been measuring the Cosmic Microwave Background (CMB) – the leftover radiation created by the Big Bang – since it was deployed in 2009.

By mapping the CMB, Planck has been able to trace the expansion of the cosmos during the early Universe – circa 378,000 years after the Big Bang. Planck’s result predicted that the Hubble constant value should now be 67 kilometers per second per megaparsec (3.3 million light-years), and could be no higher than 69 kilometers per second per megaparsec.

Based on their survey, Riess’s team obtained a value of 73 kilometers per second per megaparsec, a discrepancy of 9%. Essentially, their results indicate that galaxies are moving at a faster rate than that implied by observations of the early Universe. Because the Hubble data was so precise, astronomers cannot dismiss the gap between the two results as errors in any single measurement or method. As Riess explained:

“The community is really grappling with understanding the meaning of this discrepancy… Both results have been tested multiple ways, so barring a series of unrelated mistakes, it is increasingly likely that this is not a bug but a feature of the universe.”
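The size of the gap between the two values can be reproduced with simple arithmetic, along with what it means for a galaxy's recession velocity under Hubble's law (v = H0 × d). The 100-megaparsec distance below is an arbitrary example, and the function names are illustrative.

```python
# Compare the two Hubble constant values quoted in the article (km/s/Mpc).

H0_PLANCK = 67.0  # inferred from the cosmic microwave background
H0_SH0ES = 73.0   # measured with the Cepheid/supernova distance ladder

def fractional_gap(early_value, late_value):
    """Relative difference of the late-Universe value from the early one."""
    return (late_value - early_value) / early_value

def recession_velocity_km_s(h0, distance_mpc):
    """Hubble's law: velocity = H0 * distance."""
    return h0 * distance_mpc

print(f"Discrepancy: {fractional_gap(H0_PLANCK, H0_SH0ES) * 100:.0f}%")  # 9%

distance_mpc = 100.0  # arbitrary example distance
print(recession_velocity_km_s(H0_PLANCK, distance_mpc))  # 6700.0 km/s
print(recession_velocity_km_s(H0_SH0ES, distance_mpc))   # 7300.0 km/s
```

At that example distance the two values disagree by 600 km/s, a difference far larger than the measurement uncertainties the teams report.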

These latest results therefore suggest that some previously unknown force or some new physics might be at work in the Universe. In terms of explanations, Riess and his team have offered three possibilities, all of which have to do with the 95% of the Universe that we cannot see (i.e. dark matter and dark energy). In 2011, Riess and two other scientists were awarded the Nobel Prize in Physics for their 1998 discovery that the Universe was in an accelerated rate of expansion.

Consistent with that, they suggest that Dark Energy could be pushing galaxies apart with increasing strength. Another possibility is that there is an undiscovered subatomic particle out there that is similar to a neutrino, but interacts with normal matter by gravity instead of subatomic forces. These “sterile neutrinos” would travel at close to the speed of light and could collectively be known as “dark radiation”.

Any of these possibilities would mean that the contents of the early Universe were different, thus forcing a rethink of our cosmological models. At present, Riess and colleagues don’t have any answers, but plan to continue fine-tuning their measurements. So far, the SH0ES team has decreased the uncertainty of the Hubble Constant to 2.3%.

This is in keeping with one of the central goals of the Hubble Space Telescope, which was to help reduce the uncertainty value in Hubble’s Constant, for which estimates once varied by a factor of 2.

So while this discrepancy opens the door to new and challenging questions, it also reduces our uncertainty substantially when it comes to measuring the Universe. Ultimately, this will improve our understanding of how the Universe evolved after it was created in a fiery cataclysm 13.8 billion years ago.

Further Reading: NASA, The Astrophysical Journal

New Lenses To Help In The Hunt For Dark Energy

Since the 1990s, scientists have been aware that for the past several billion years, the Universe has been expanding at an accelerated rate. They have further hypothesized that some form of invisible energy must be responsible for this, one which makes up 68.3% of the mass-energy of the observable Universe. While there is no direct evidence that this “Dark Energy” exists, plenty of indirect evidence has been obtained by observing the large-scale mass density of the Universe and the rate at which it is expanding.

But in the coming years, scientists hope to develop technologies and methods that will allow them to see exactly how Dark Energy has influenced the development of the Universe. One such effort comes from the U.S. Department of Energy’s Lawrence Berkeley National Lab, where scientists are working to develop an instrument that will create a comprehensive 3D map of a third of the Universe so that its growth history can be tracked.

Continue reading “New Lenses To Help In The Hunt For Dark Energy”

The Search for Dark Energy Just Got Easier

Since the late 1990s, scientists have been burdened with explaining how and why the Universe appears to be expanding at an accelerating rate. For decades, the most widely accepted explanation has been that the cosmos is permeated by a mysterious force known as “dark energy”. In addition to being responsible for cosmic acceleration, this energy is also thought to comprise 68.3% of the universe’s total mass-energy.

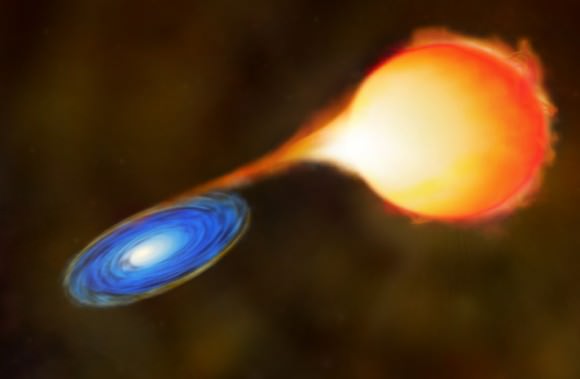

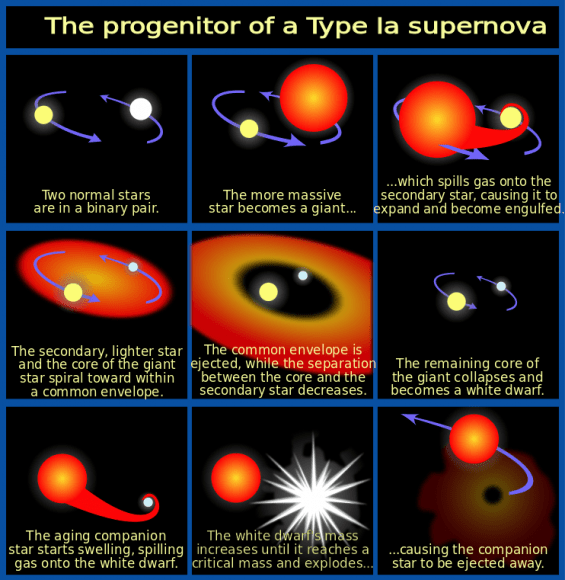

Much like dark matter, the existence of this invisible force is inferred from observable phenomena and from how well it fits our current models of cosmology, not from direct evidence. Scientists must therefore rely on indirect observations, watching how fast cosmic objects (specifically Type Ia supernovae) recede from us as the universe expands.

This process would be extremely tedious for scientists – like those who work for the Dark Energy Survey (DES) – were it not for the new algorithms developed collaboratively by researchers at Lawrence Berkeley National Laboratory and UC Berkeley.

“Our algorithm can classify a detection of a supernova candidate in about 0.01 seconds, whereas an experienced human scanner can take several seconds,” said Danny Goldstein, a UC Berkeley graduate student who developed the code to automate the process of supernova discovery on DES images.

Currently in its second season, the DES takes nightly pictures of the Southern Sky with DECam – a 570-megapixel camera that is mounted on the Victor M. Blanco telescope at Cerro Tololo Inter-American Observatory (CTIO) in the Chilean Andes. Every night, the camera generates between 100 Gigabytes (GB) and 1 Terabyte (TB) of imaging data, which is sent to the National Center for Supercomputing Applications (NCSA) and DOE’s Fermilab in Illinois for initial processing and archiving.

Object recognition programs developed at the National Energy Research Scientific Computing Center (NERSC) and implemented at NCSA then comb through the images in search of possible detections of Type Ia supernovae. These powerful explosions occur in binary star systems where one star is a white dwarf, which accretes material from a companion star until it reaches a critical mass and explodes in a Type Ia supernova.

“These explosions are remarkable because they can be used as cosmic distance indicators to within 3-10 percent accuracy,” says Goldstein.

Distance is important because the further away an object is located in space, the further back in time it is. By tracking Type Ia supernovae at different distances, researchers can measure cosmic expansion throughout the universe’s history. This allows them to put constraints on how fast the universe is expanding and maybe even provide other clues about the nature of dark energy.

“Scientifically, it’s a really exciting time because several groups around the world are trying to precisely measure Type Ia supernovae in order to constrain and understand the dark energy that is driving the accelerated expansion of the universe,” says Goldstein, who is also a student researcher in Berkeley Lab’s Computational Cosmology Center (C3).

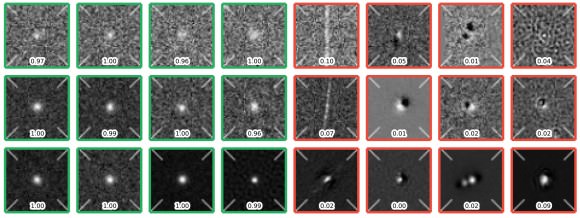

The DES begins its search for Type Ia explosions by uncovering changes in the night sky, which is where the image subtraction pipeline developed and implemented by researchers in the DES supernova working group comes in. The pipeline subtracts images that contain known cosmic objects from new images that are exposed nightly at CTIO.

Each night, the pipeline produces between 10,000 and a few hundred thousand detections of supernova candidates that need to be validated.
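The idea behind image subtraction can be illustrated with a toy example. This is only a sketch on tiny made-up pixel grids. A real pipeline must also align the images and match their sharpness and brightness scales before subtracting, and none of the names below come from the DES software.

```python
# Toy image subtraction: flag pixels that are much brighter in the new image
# than in a reference ("template") image of the same patch of sky.

def subtract_images(new_image, template):
    """Pixel-by-pixel difference of two equally sized 2D grids."""
    return [
        [new_px - ref_px for new_px, ref_px in zip(new_row, ref_row)]
        for new_row, ref_row in zip(new_image, template)
    ]

def find_detections(difference, threshold):
    """Return (row, column) positions where the difference exceeds the threshold."""
    return [
        (r, c)
        for r, row in enumerate(difference)
        for c, value in enumerate(row)
        if value > threshold
    ]

template = [[10, 10, 10], [10, 50, 10], [10, 10, 10]]   # known galaxy at the center
new_image = [[10, 11, 10], [10, 50, 10], [10, 10, 90]]  # something new at bottom right

difference = subtract_images(new_image, template)
print(find_detections(difference, threshold=20))  # [(2, 2)]
```

The known galaxy cancels out, small noise stays below the threshold, and only the new source remains as a candidate. In practice, many surviving candidates are artifacts rather than supernovae, which is why a vetting step is needed.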

“Historically, trained astronomers would sit at the computer for hours, look at these dots, and offer opinions about whether they had the characteristics of a supernova, or whether they were caused by spurious effects that masquerade as supernovae in the data. This process seems straightforward until you realize that the number of candidates that need to be classified each night is prohibitively large and only one in a few hundred is a real supernova of any type,” says Goldstein. “This process is extremely tedious and time-intensive. It also puts a lot of pressure on the supernova working group to process and scan data fast, which is hard work.”

To simplify the task of vetting candidates, Goldstein developed code that uses the machine learning technique “Random Forest” to vet detections of supernova candidates automatically and in real time, optimized for the DES. The technique employs an ensemble of decision trees to automatically ask the types of questions that astronomers would typically consider when classifying supernova candidates.

At the end of the process, each detection of a candidate is given a score based on the fraction of decision trees that considered it to have the characteristics of a detection of a supernova. The closer the classification score is to one, the stronger the candidate. Goldstein notes that in preliminary tests, the classification pipeline achieved 96 percent overall accuracy.
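The scoring step, the fraction of trees that vote “supernova”, can be sketched as follows. The four “trees” here are hand-written stand-ins with hypothetical features and thresholds; in a real Random Forest each tree is learned from labeled training data, and the actual features used by the DES classifier are not described in this article.

```python
# Toy ensemble vote: score = fraction of trees that say "looks like a supernova".
# ASSUMPTION: feature names and thresholds are invented for illustration.

def tree_a(f):
    return f["signal_to_noise"] > 5.0

def tree_b(f):
    return f["roundness"] > 0.7

def tree_c(f):
    return f["signal_to_noise"] > 3.0 and f["n_bad_pixels"] == 0

def tree_d(f):
    return f["roundness"] > 0.5 and f["n_bad_pixels"] < 3

FOREST = [tree_a, tree_b, tree_c, tree_d]

def classification_score(features, forest=FOREST):
    """Fraction of trees in the ensemble that vote for a real detection."""
    votes = sum(1 for tree in forest if tree(features))
    return votes / len(forest)

strong = {"signal_to_noise": 12.0, "roundness": 0.9, "n_bad_pixels": 0}
borderline = {"signal_to_noise": 6.0, "roundness": 0.6, "n_bad_pixels": 1}
artifact = {"signal_to_noise": 4.0, "roundness": 0.2, "n_bad_pixels": 5}

print(classification_score(strong))      # 1.0
print(classification_score(borderline))  # 0.5
print(classification_score(artifact))    # 0.0
```

A threshold on this score then decides which candidates are passed on to human scanners.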

“When you do subtraction alone you get far too many ‘false-positives’ — instrumental or software artifacts that show up as potential supernova candidates — for humans to sift through,” says Rollin Thomas, of Berkeley Lab’s C3, who was Goldstein’s collaborator.

He notes that with the classifier, researchers can quickly and accurately strain out the artifacts from supernova candidates. “This means that instead of having 20 scientists from the supernova working group continually sift through thousands of candidates every night, you can just appoint one person to look at maybe a few hundred strong candidates,” says Thomas. “This significantly speeds up our workflow and allows us to identify supernovae in real-time, which is crucial for conducting follow-up observations.”

“Using about 60 cores on a supercomputer we can classify 200,000 detections in about 20 minutes, including time for database interaction and feature extraction,” says Goldstein.
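Those figures translate into a throughput that is easy to work out. The sketch below uses only the numbers quoted above; the variable names are illustrative.

```python
# Throughput implied by the quoted figures:
# 200,000 detections in about 20 minutes on about 60 cores.

DETECTIONS = 200_000
MINUTES = 20
CORES = 60

wall_seconds = MINUTES * 60
detections_per_second = DETECTIONS / wall_seconds
core_seconds_per_detection = CORES * wall_seconds / DETECTIONS

print(f"~{detections_per_second:.0f} detections per second overall")       # ~167
print(f"~{core_seconds_per_detection:.2f} core-seconds per detection")     # ~0.36
```

The end-to-end cost per detection is higher than the 0.01 seconds quoted for classification alone because, as Goldstein notes, it includes database interaction and feature extraction.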

Goldstein and Thomas note that the next step in this work is to add a second level of machine learning to the pipeline to improve the classification accuracy. This extra layer would take into account how the object was classified in previous observations as it determines the probability that the candidate is “real.” The researchers and their colleagues are currently working on different approaches to achieve this capability.

Further Reading: Berkeley Lab