New measurements of the cosmic microwave background (CMB), the leftover light from the Big Bang, lend further support to the Standard Cosmological Model and the existence of dark matter and dark energy, constraining alternative models of the Universe. Researchers from Stanford University and Cardiff University produced a detailed map of the composition and structure of matter in the early Universe, which shows that the Universe would not look as it does today if it were made up solely of ‘normal matter’.

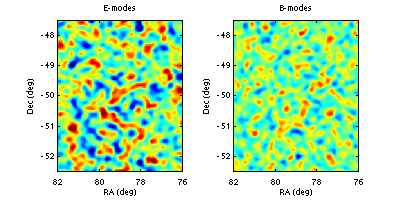

By measuring how the light of the CMB is polarized, a team led by Sarah Church of the Kavli Institute for Particle Astrophysics and Cosmology at Stanford University and Walter Gear, head of the School of Physics and Astronomy at Cardiff University in the United Kingdom, was able to construct a map of the Universe as it looked when it first became transparent to light. Their findings support the predictions of the Standard Cosmological Model, in which roughly 95% of the Universe consists of dark matter and dark energy and only 5% is ordinary matter.

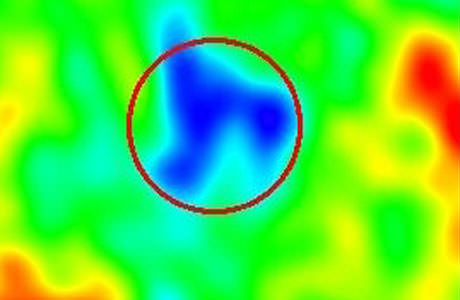

Light is a wave whose oscillation always lies at right angles to its direction of travel; polarization describes whether that oscillation favors a particular orientation. Most light is unpolarized, but light that has interacted with matter can become polarized. The leftover light from the Big Bang, the CMB, has now cooled to a few degrees above absolute zero (about 2.7 K), yet it still carries the polarization imprinted when the early Universe cooled enough to become transparent to light. By measuring this polarization, the researchers were able to infer the location, structure, and velocity of matter in the early Universe with unprecedented precision. The gravitational collapse of large clumps of matter in the early Universe created characteristic resonances in the polarization, which allowed the researchers to map the composition of matter.

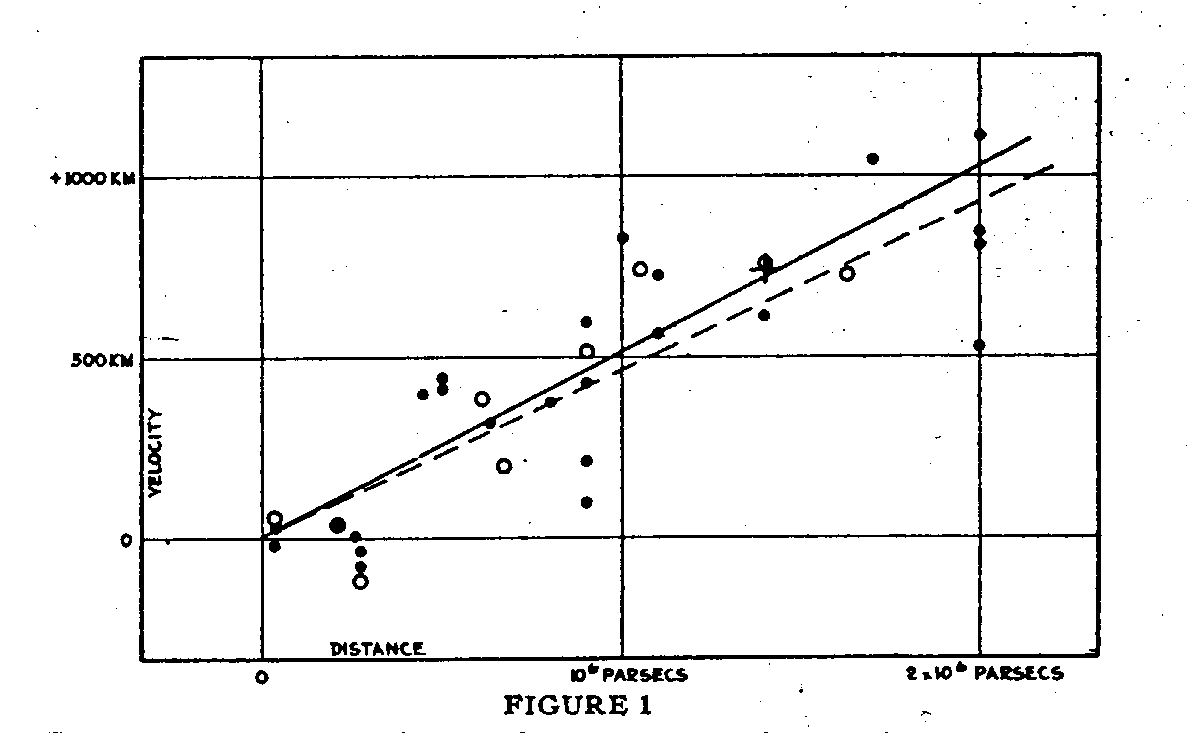

Dr. Gear said, “The pattern of oscillations in the power spectra allows us to discriminate, as ‘real’ and ‘dark’ matter affect the positions and amplitudes of the peaks in different ways. The results are also consistent with many other pieces of evidence for dark matter, such as the rotation rate of galaxies and the distribution of galaxies in clusters.”

The QUaD measurements improve on those from earlier experiments that measured properties of the CMB, such as WMAP and ACBAR. Compared with these earlier experiments, the QUaD measurements match the predictions of the Standard Cosmological Model more closely by more than an order of magnitude, said Dr. Gear. This is a very important step toward verifying whether our model of the Universe is correct.

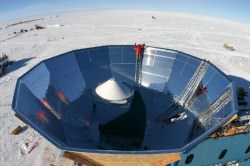

The researchers used the QUaD experiment at the South Pole to make their observations. QUaD's detectors are bolometers: essentially sensitive thermometers that measure how incoming radiation raises the temperature of the metal in the detector. The detectors must be kept near 1 kelvin to suppress noise from the thermal radiation of their surroundings, which is why they are placed inside a cryostat and operated at the frigid South Pole.

Paper co-author Walter Gear said in an email interview:

“The polarization is imprinted at the time the Universe becomes transparent to light, about 400,000 years after the Big Bang, rather than right after the Big Bang before matter existed. There are major efforts now to try to find what is called the ‘B-mode’ signal, which is a more complicated polarization pattern that IS imprinted right after the Big Bang. QUaD places the best current upper limit on this but is still more than an order of magnitude away in sensitivity from even optimistic predictions of what that signal might be. That is the next generation of experiments’ goal.”

The results, published in a paper titled “Improved Measurements of the Temperature and Polarization of the Cosmic Microwave Background from QUaD” in the November 1 issue of The Astrophysical Journal, fit the predictions of the Standard Cosmological Model remarkably well, providing further evidence for the existence of dark matter and dark energy and constraining alternative models of the Universe.

Source: SLAC, email interview with Dr. Walter Gear