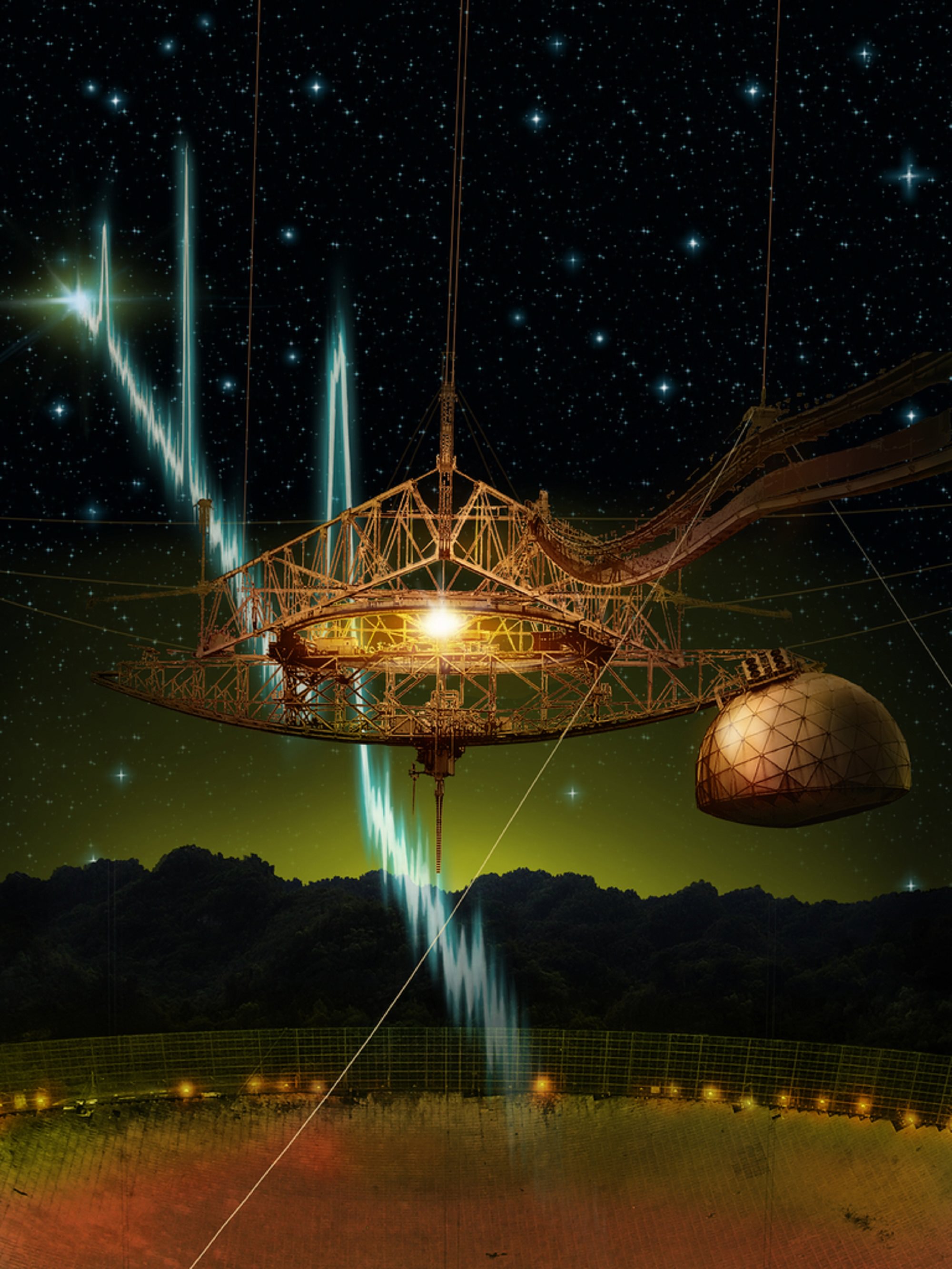

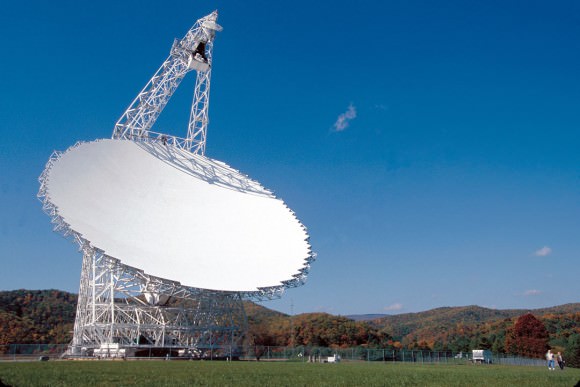

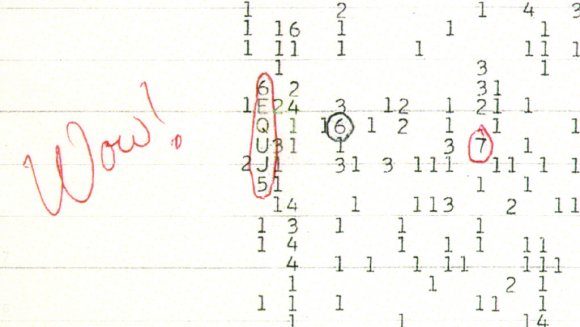

In 1960, astronomer Frank Drake (later of Cornell University) conducted Project Ozma, the first systematic SETI survey, at the National Radio Astronomy Observatory in Green Bank, West Virginia. Since then, scientists have conducted multiple surveys in the hope of finding “technosignatures”, i.e. evidence of technologically advanced life (such as radio communications).

To put it plainly, if humanity were to receive a message from an extra-terrestrial civilization right now, it would be the single greatest event in the history of civilization. But according to a new study, such a message could also pose a serious risk to humanity. Drawing on multiple possibilities that have been explored in detail, the study's authors consider how humanity could shield itself from malicious spam and viruses.

The study, titled “Interstellar communication. IX. Message decontamination is impossible”, recently appeared online. It was conducted by Michael Hippke, an independent scientist affiliated with the Sonneberg Observatory in Germany, and John G. Learned, a professor with the High Energy Physics Group at the University of Hawaii. Together, they examine some of the assumptions commonly made about SETI and consider what is more likely to be the case.

To be fair, the notion that an extra-terrestrial civilization could pose a threat to humanity is not just a well-worn science fiction trope. For decades, scientists have treated it as a distinct possibility and considered whether or not the risks outweigh the possible benefits. As a result, some theorists have suggested that humans should not engage in SETI at all, or that we should take measures to hide our planet.

As Professor Learned told Universe Today via email, there has never been a consensus among SETI researchers about whether an extra-terrestrial intelligence (ETI) would be benevolent:

“There is no compelling reason at all to assume benevolence (for example that ETI are wise and kind due to their ancient civilization’s experience). I find much more compelling the analogy to what we know from our history… Is there any society anywhere which has had a good experience after meeting up with a technologically advanced invader? Of course it would go either way, but I think often of the movie Alien… a credible notion it seems to me.”

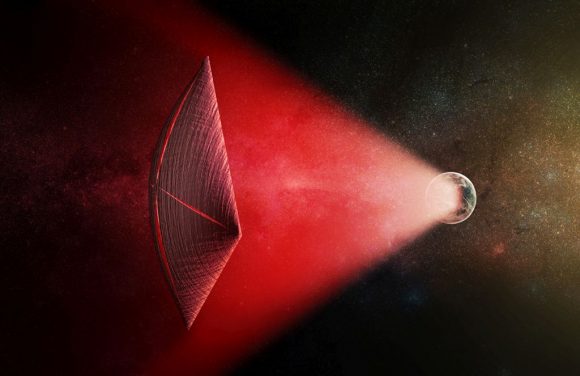

In addition, assuming that an alien message could pose a threat to humanity makes practical sense. Given the sheer size of the Universe and the limits imposed by Special Relativity (i.e. no known means of faster-than-light travel), it would always be cheaper and easier to eradicate a civilization with a malicious message than with an invasion fleet. As a result, Hippke and Learned examine whether SETI signals could be vetted and/or “decontaminated” before they are analyzed.

In terms of how a SETI signal could constitute a threat, the researchers outline a number of possibilities. Beyond the chance that a message could convey misinformation designed to cause panic or self-destructive behavior, it could also contain viruses or other embedded technical hazards (e.g. a format that causes our computers to crash).

They also note that a major complication for SETI is that a message is unlikely to remain with a single recipient, where it could be contained. This is largely because of the “Declaration of Principles Concerning Activities Following the Detection of Extraterrestrial Intelligence”, which was adopted by the International Academy of Astronautics in 1989 (and revised in 2010).

Article 6 of this declaration states the following:

“The discovery should be confirmed and monitored and any data bearing on the evidence of extraterrestrial intelligence should be recorded and stored permanently to the greatest extent feasible and practicable, in a form that will make it available for further analysis and interpretation. These recordings should be made available to the international institutions listed above and to members of the scientific community for further objective analysis and interpretation.”

As such, a message confirmed to have originated from an ETI would most likely be made available to the entire scientific community before anyone could determine whether it was threatening. Even if there were only one recipient, and they attempted to keep the message under strict lock and key, it’s a safe bet that other parties would find a way to access it before long.

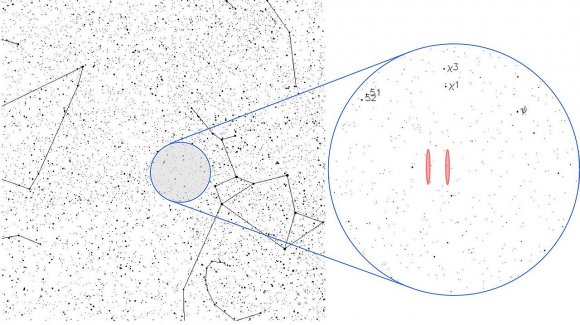

The question naturally arises, then: what can be done? One possibility that Hippke and Learned suggest is to take an analog approach to interpreting these messages, which they illustrate using the 2017 SETI Decrypt Challenge. This challenge, issued by René Heller of the Max Planck Institute for Solar System Research, consisted of a sequence of about two million binary digits, along with related information, posted to social media.

In addition to being a fascinating exercise that gave the public a taste of SETI research, the challenge also sought to address some central questions about communicating with an ETI. Foremost among these were whether humanity would be able to understand a message from an alien civilization, and how we might make a message comprehensible (if we sent one first). As they state:

“As an example, the message from the “SETI Decrypt Challenge” (Heller 2017) was a stream of 1,902,341 bits, which is the product of prime numbers. Like the Arecibo message (Staff At The National Astronomy Ionosphere Center 1975) and Evpatoria’s “Cosmic Calls” (Shuch 2011), the bits represent the X/Y black/white pixel map of an image. When this is understood, further analysis could be done off-line by printing on paper. Any harm would then come from the meaning of the message, and not from embedded viruses or other technical issues.”
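The decoding step described in that quote can be illustrated with a short sketch. This is not Heller's or Hippke and Learned's own code; it is a minimal illustration, assuming the message is a plain string of "0" and "1" characters. The function names `prime_factors` and `render_bitmap` and the `#`/`.` characters are hypothetical choices. The idea is to factor the bit count to find candidate image dimensions, then lay the bits out as rows of text that could be printed on paper, so the data is only ever treated as pixels and never executed.

```python
def prime_factors(n: int) -> list[int]:
    """Return the prime factors of n (with multiplicity) by trial division."""
    factors = []
    d = 2
    while d * d <= n:
        # Divide out each factor as many times as it occurs.
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        # Whatever remains above sqrt(original n) is itself prime.
        factors.append(n)
    return factors


def render_bitmap(bits: str, width: int, on: str = "#", off: str = ".") -> str:
    """Lay a bit string out as rows of `width` characters, ready for printing.

    The bits are only mapped to characters; nothing in the message is run.
    """
    if len(bits) % width != 0:
        raise ValueError("bit count is not a multiple of the chosen width")
    rows = [bits[i:i + width] for i in range(0, len(bits), width)]
    return "\n".join("".join(on if b == "1" else off for b in row) for row in rows)


if __name__ == "__main__":
    # Length of the SETI Decrypt Challenge message, as quoted above.
    print(prime_factors(1_902_341))
    # Toy 2 x 3 bitmap, not real message data.
    print(render_bitmap("101010", 3))
```

Here `prime_factors(1_902_341)` returns `[7, 359, 757]`, and the 1,679-bit Arecibo message gives `[23, 73]`. Which factor is the row width, and whether the image is split into several frames, is not known in advance; a decoder would try each candidate layout with `render_bitmap` until a recognizable picture appears.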

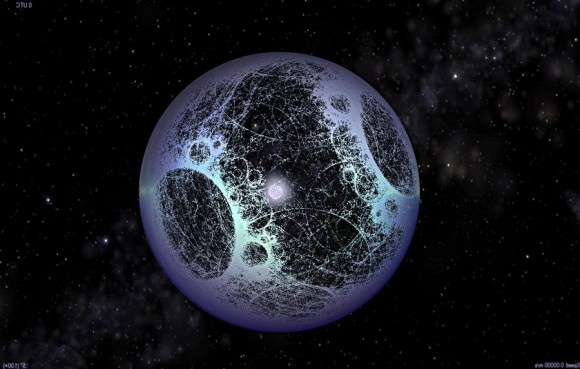

However, where messages consist of complex code or even a self-contained AI, the need for sophisticated computers may be unavoidable. For this case, the authors explore another popular recommendation: using quarantined machines to conduct the analysis, i.e. a message prison. Unfortunately, they also acknowledge that no prison would be 100% effective, and containment could eventually fail.

“This scenario resembles the Oracle-AI, or AI box, of an isolated computer system where a possibly dangerous AI is ‘imprisoned’ with only minimalist communication channels,” they write. “Current research indicates that even well-designed boxes are useless, and a sufficiently intelligent AI will be able to persuade or trick its human keepers into releasing it.”

In the end, it appears that the only real solution is to maintain a vigilant attitude and ensure that any messages we send are as benign as possible. As Hippke summarized: “I think it’s overwhelmingly likely that a message will be positive, but you can not be sure. Would you take a 1% chance of death for a 99% chance of a cure for all diseases? One learning from our paper is how to design own message, in case we decide to send any: Keep it simple, don’t send computer code.”

Basically, when it comes to the search for extra-terrestrial intelligence, the rules of internet safety may apply. If we begin to receive messages, we shouldn’t trust those that come with big attachments, and we should send any suspicious-looking ones to the spam folder. Oh, and if a sender is promising the cure for all known diseases, or claims to be the deposed monarch of Andromeda in need of some cash, we should just hit delete!

Further Reading: arXiv