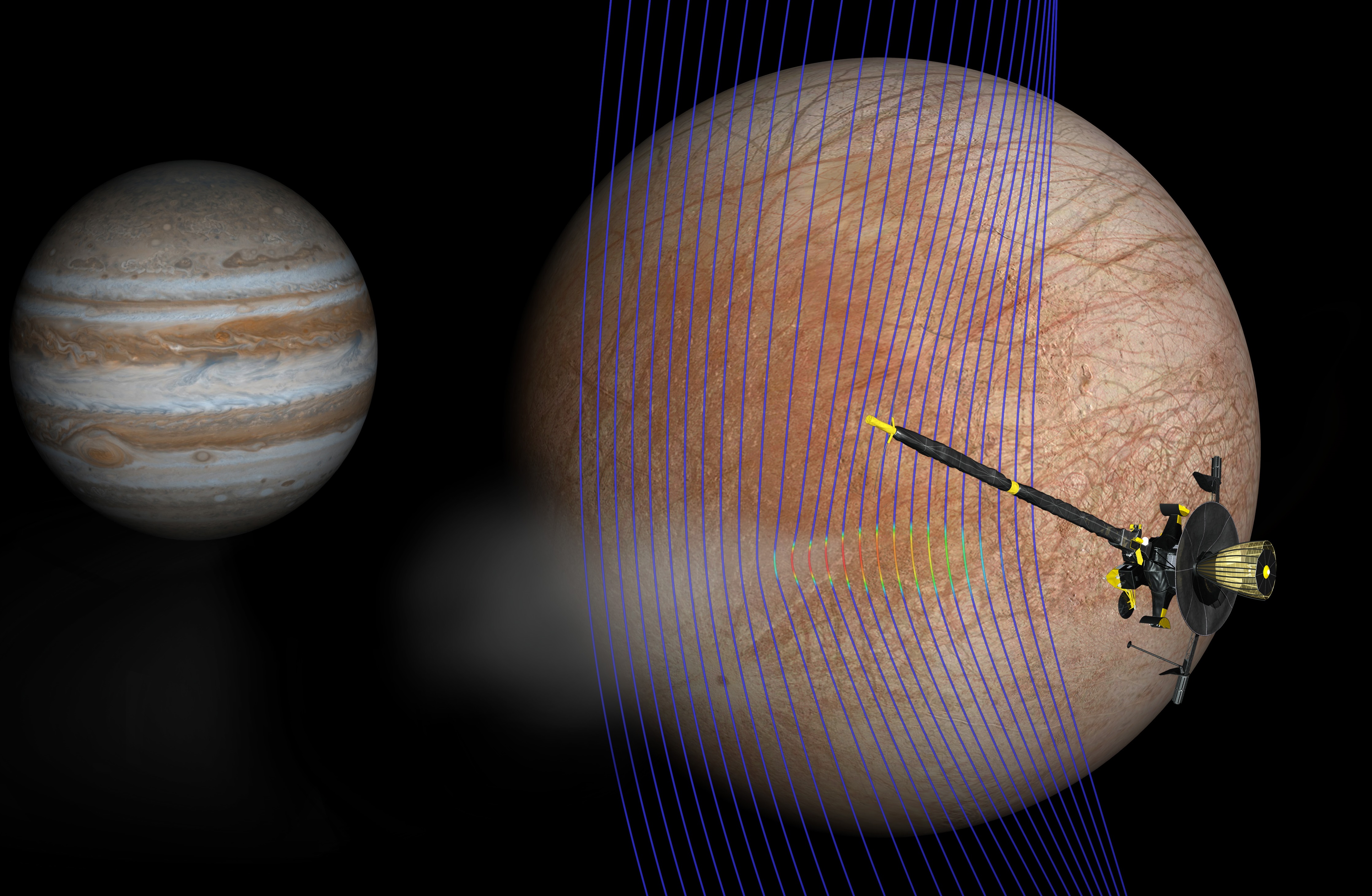

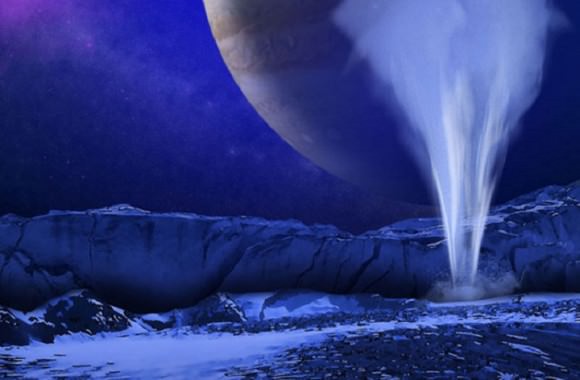

Jupiter’s moon Europa continues to fascinate and amaze! In 1979, the Voyager missions provided the first indications that an interior ocean might exist beneath its icy surface. Between 1995 and 2003, the Galileo space probe provided the most detailed information on Jupiter’s moons to date. This information bolstered theories about how life could exist in a warm-water ocean located at the core-mantle boundary.

Even though the Galileo mission ended when the probe plunged into Jupiter’s atmosphere, its data are still providing vital information on Europa. After analyzing old data from the mission, NASA scientists have found independent evidence that Europa’s interior ocean is venting plumes of water vapor from its surface. This is good news for future missions to Europa, which will attempt to search these plumes for signs of life.

The study that describes their findings, titled “Evidence of a plume on Europa from Galileo magnetic and plasma wave signatures”, recently appeared in the journal Nature Astronomy. The study was led by Xianzhe Jia, a space physicist from the Department of Climate and Space Sciences and Engineering at the University of Michigan, and included members from UCLA and the University of Iowa.

The data were collected in 1997 by Galileo during a flyby of Europa that brought it to within 200 km (124 mi) of the moon’s surface. At the time, its Magnetometer (MAG) sensor detected a brief, localized bend in Jupiter’s magnetic field, which remained unexplained until now. After running the data through new, advanced computer models, the team produced a simulation showing that the bend was caused by an interaction between the magnetic field and one of Europa’s plumes.

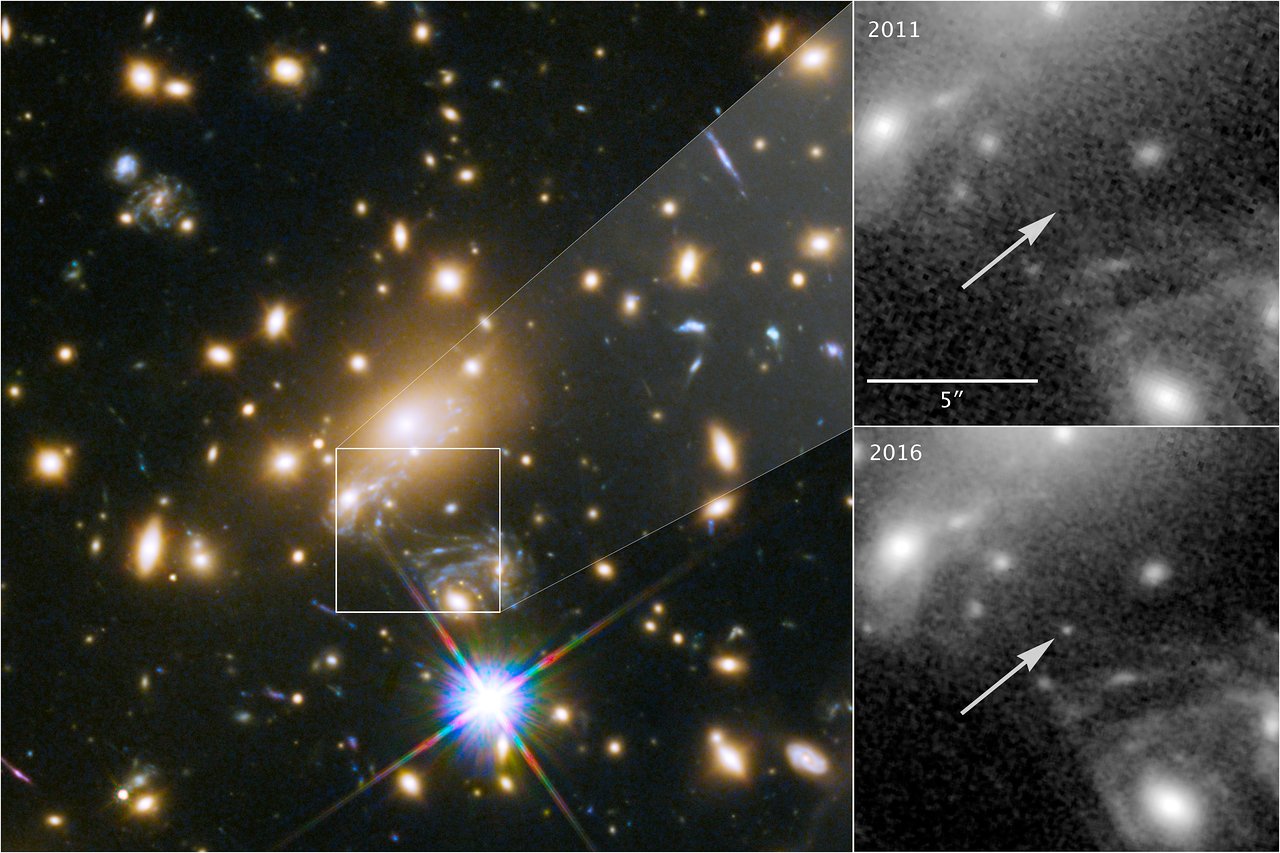

This analysis corroborated ultraviolet observations made by NASA’s Hubble Space Telescope in 2012, which suggested that water plumes were erupting from the moon’s surface. However, this new analysis used data collected much closer to the source, and it revealed how Europa’s plumes interact with the ambient flow of plasma carried within Jupiter’s powerful magnetic field.

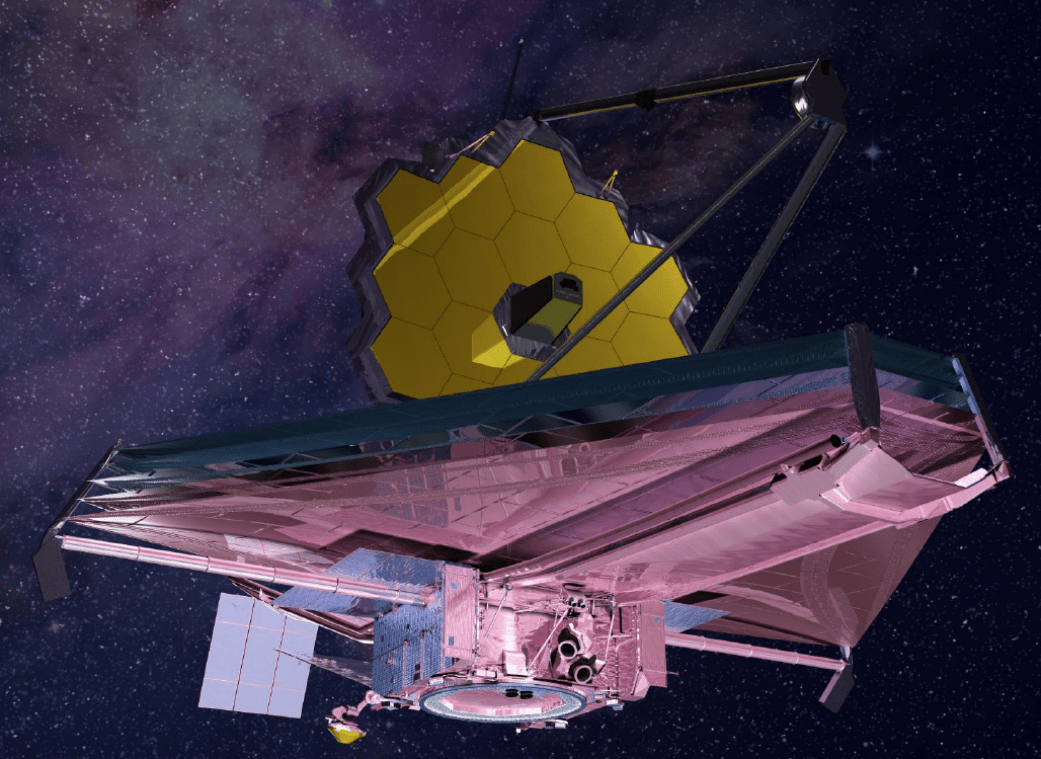

In addition to being the lead author on this study, Jia is also a co-investigator for two instruments that will travel aboard the Europa Clipper mission, which may launch as soon as 2022 to explore the moon’s potential habitability. Jia and his colleagues were inspired to reexamine data from the Galileo mission thanks to Melissa McGrath, a scientist at the SETI Institute and a member of the Europa Clipper science team.

During a presentation to her fellow team scientists, McGrath highlighted other Hubble observations of Europa. As Jia explained in a recent NASA press release:

“The data were there, but we needed sophisticated modeling to make sense of the observation. One of the locations she mentioned rang a bell. Galileo actually did a flyby of that location, and it was the closest one we ever had. We realized we had to go back. We needed to see whether there was anything in the data that could tell us whether or not there was a plume.”

When the team first examined the data 21 years ago, the high-resolution readings from the MAG instrument showed something strange. But it was the lessons of the Cassini mission, which explored the plumes on Saturn’s moon Enceladus, that told the team what to look for. These included plume material that becomes ionized by Saturn’s magnetosphere, leaving a characteristic blip in the magnetic field.

After reexamining the data, they found that the same characteristic bend (localized and brief) in the magnetic field was present around Europa. Jia’s team also consulted data from Galileo’s Plasma Wave Spectrometer (PWS) instrument, which measured plasma waves caused by charged particles in the gases of Europa’s atmosphere. These readings also appeared to support the presence of a plume.

The magnetometry data and plasma wave signatures were then fed into a new 3D model, developed by the University of Michigan team, that simulates how plasma interacts with Solar System bodies. Finally, they added the 2012 Hubble data, which indicated the likely dimensions of the plumes. The end result was a simulated plume that matched the magnetic field and plasma signatures seen in the Galileo data.

As Robert Pappalardo, a Europa Clipper project scientist at NASA’s Jet Propulsion Laboratory (JPL), put it:

“There now seem to be too many lines of evidence to dismiss plumes at Europa. This result makes the plumes seem to be much more real and, for me, is a tipping point. These are no longer uncertain blips on a faraway image.”

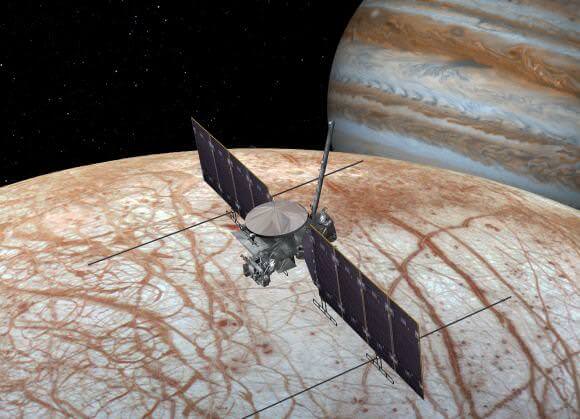

The findings are certainly good news for the Europa Clipper mission, which is expected to make the journey to Jupiter between 2022 and 2025. When the probe arrives in the Jovian system, it will establish an orbit around Jupiter and conduct rapid, low-altitude flybys of Europa. If plume activity is indeed occurring on the moon’s surface, Europa Clipper will sample the frozen liquid and dust particles for signs of life.

“If plumes exist, and we can directly sample what’s coming from the interior of Europa, then we can more easily get at whether Europa has the ingredients for life,” Pappalardo said. “That’s what the mission is after. That’s the big picture.”

At present, the mission team is evaluating potential orbital paths for Europa Clipper. With this new research in hand, the team will choose a path that takes the space probe over the plume locations, putting it in an ideal position to search them for signs of life. If all goes as planned, Europa Clipper could be the first of several probes that finally prove there is life beyond Earth.
