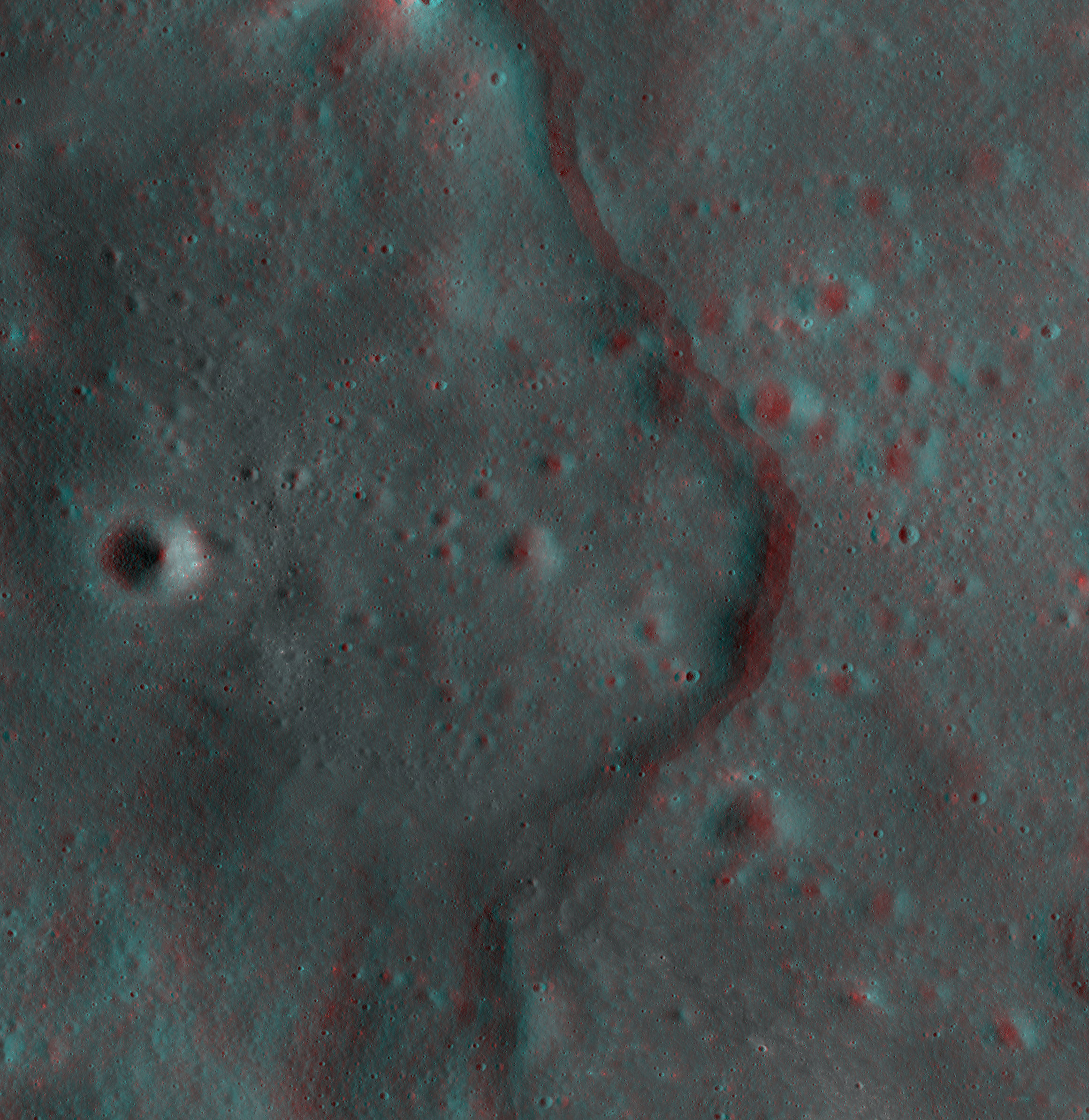

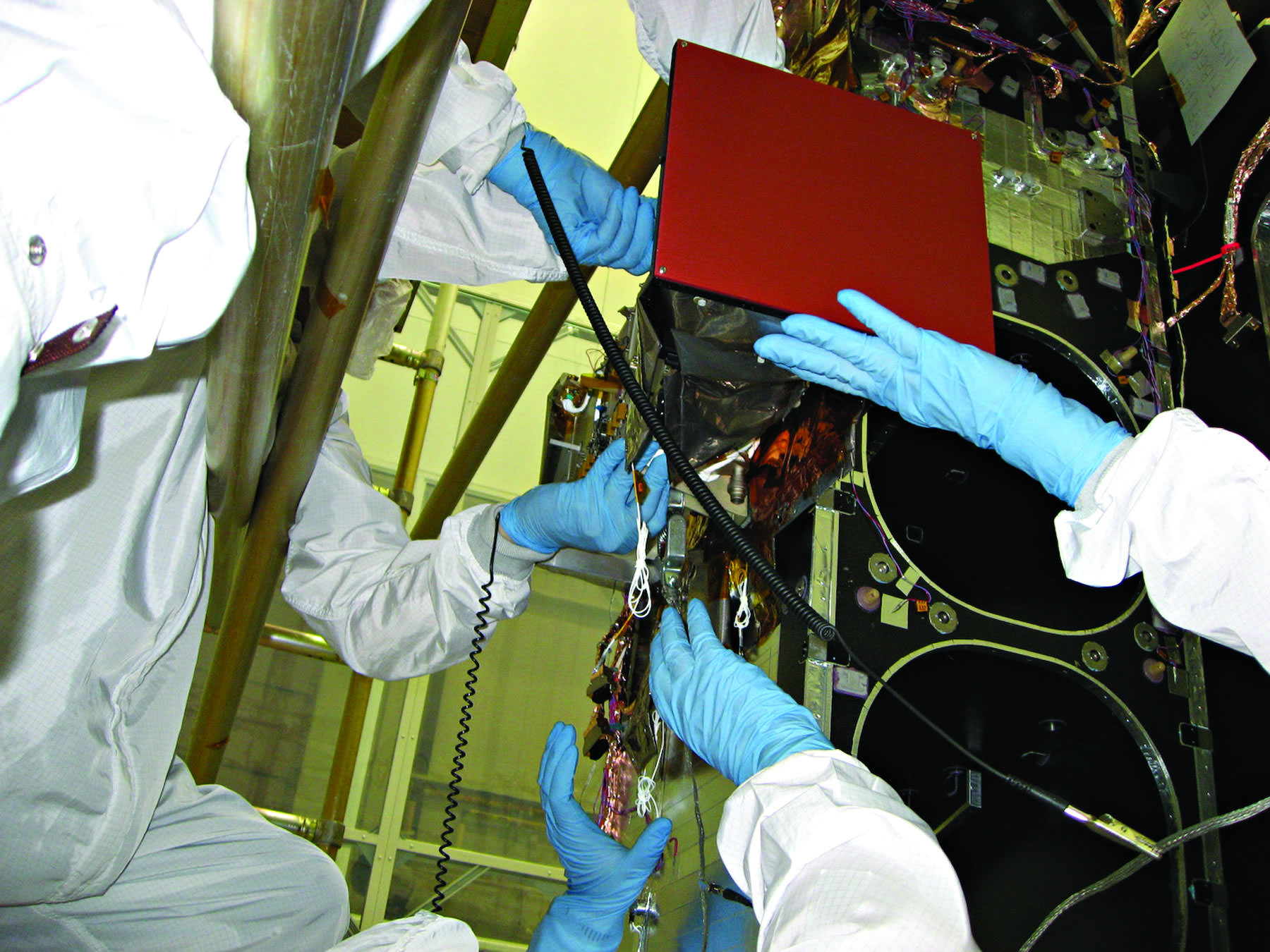

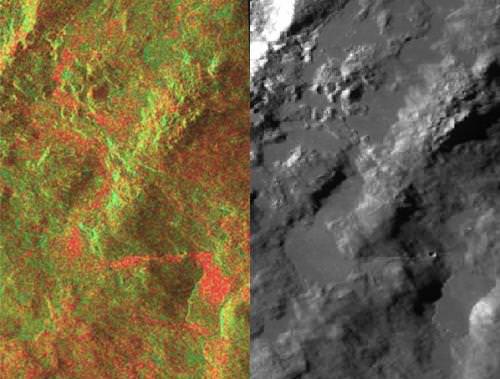

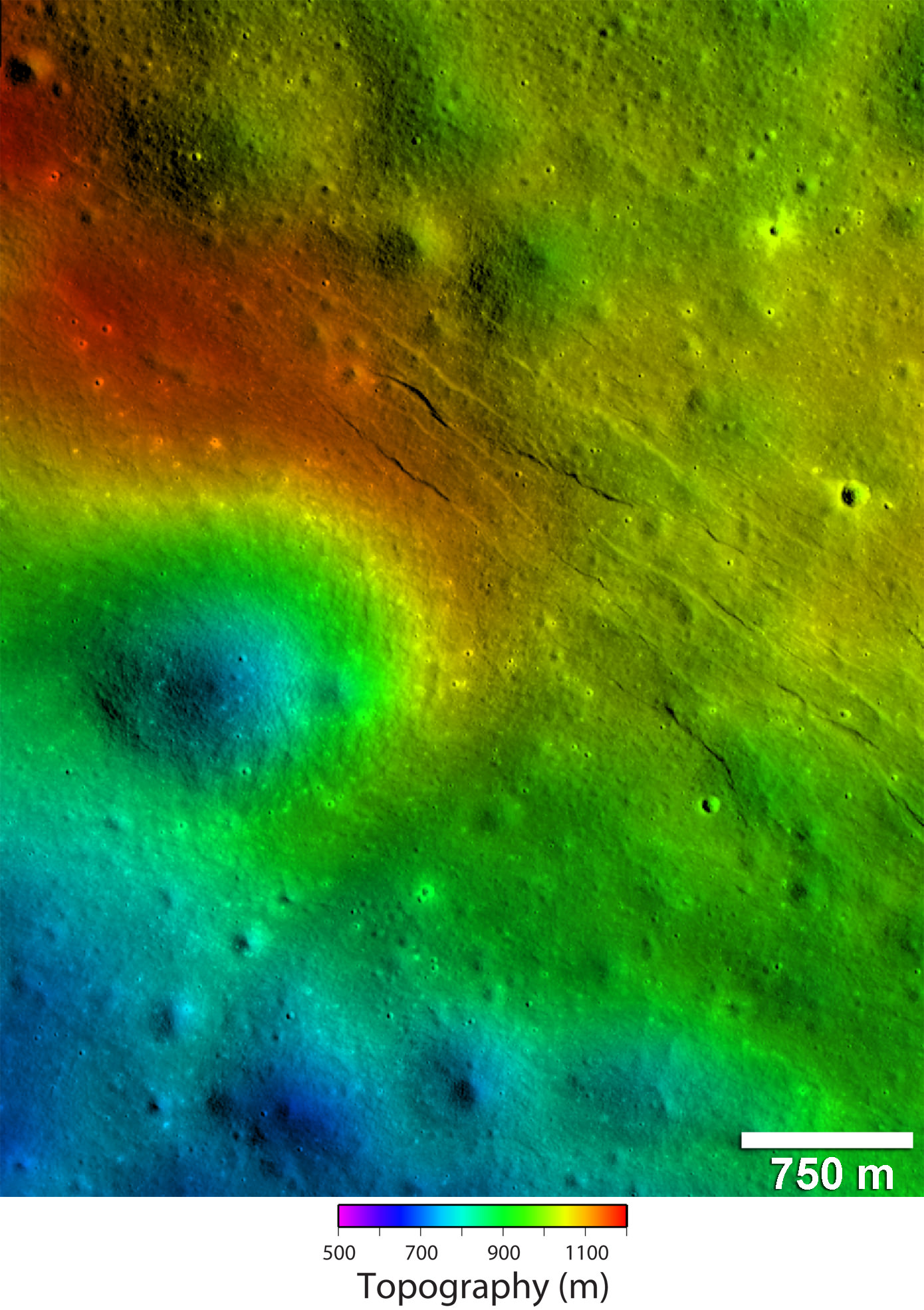

Korolev lobate scarp on the Moon, in 3-D. Lobate scarps, a type of cliff, are found mostly in the lunar highlands and are relatively small and young. Credit: NASA/GSFC/Arizona State University.

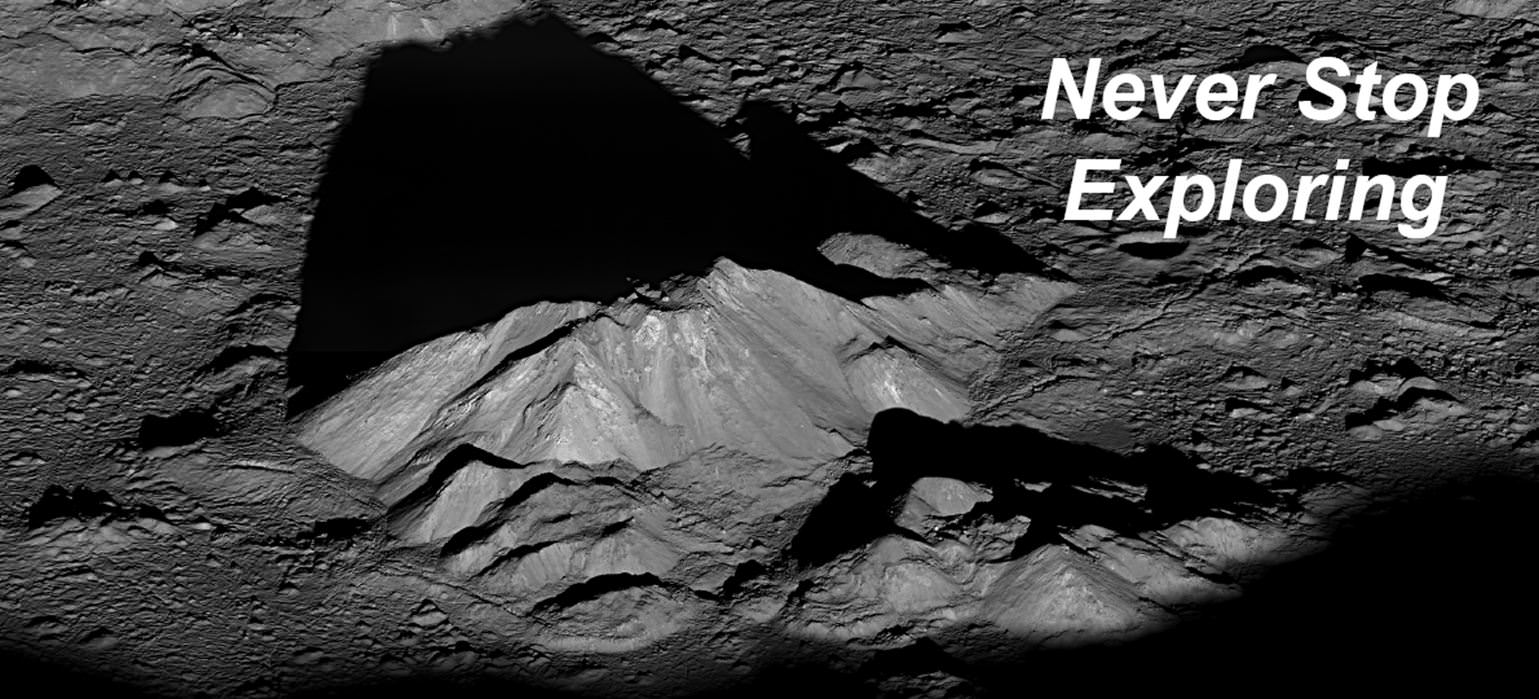

Who doesn’t love 3-D images, especially of objects in space? But creating them can be time-consuming for scientists, especially from orbiting spacecraft like the Lunar Reconnaissance Orbiter, which takes images from just one angle at a time. Usually, it is “amateur” enthusiasts who take the time to find and combine images from different orbital passes to create rich, 3-D views.

But now, scientists at the University of Arizona and Arizona State University have developed a new automatic “brain”: a processing system that aligns and adjusts images from LRO and combines them into images that can be viewed using standard red-cyan 3D glasses.
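To give a feel for the final combining step, here is a minimal sketch of how a red-cyan image can be assembled from two grayscale views that are already aligned. It is an illustration only, not the team's pipeline: the function name `make_anaglyph` and the tiny synthetic images are assumptions made for this example, and the hard part of the real system (aligning and adjusting images from different orbits) is skipped entirely.

```python
def make_anaglyph(left, right):
    """Combine two aligned grayscale images into red-cyan RGB pixels.

    Each image is a list of rows of 0-255 brightness values (a hypothetical,
    simplified format). The left view feeds the red channel; the right view
    feeds green and blue, which together appear cyan.
    """
    same_height = len(left) == len(right)
    if not same_height or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("both images must have the same dimensions")
    return [
        [(l, r, r) for l, r in zip(left_row, right_row)]
        for left_row, right_row in zip(left, right)
    ]


# Synthetic 2x5 pair: the same bright feature appears one pixel further
# right in the second view, mimicking the shift between two viewing angles.
left = [[0, 0, 255, 0, 0], [0, 0, 255, 0, 0]]
right = [[0, 0, 0, 255, 0], [0, 0, 0, 255, 0]]

anaglyph = make_anaglyph(left, right)
print(anaglyph[0][2])  # pixel seen only in the left view: pure red
print(anaglyph[0][3])  # pixel seen only in the right view: cyan
```

Through red-cyan glasses each eye sees only one of the two views, and the brain reads the small horizontal offset between them as depth.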

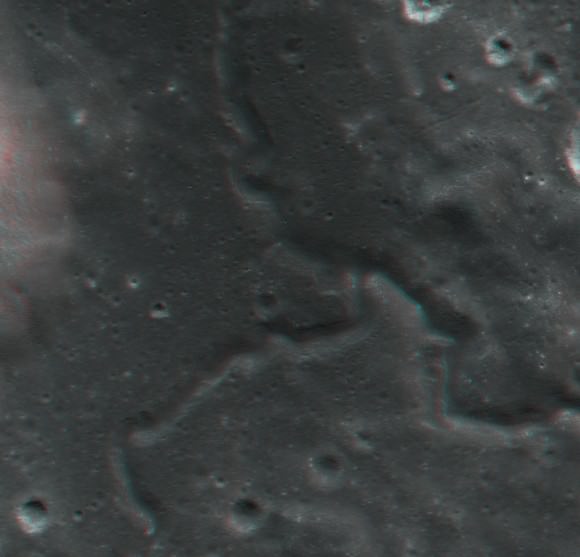

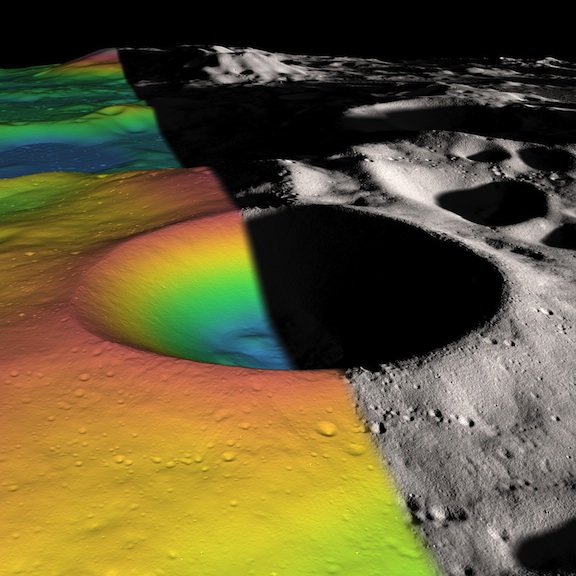

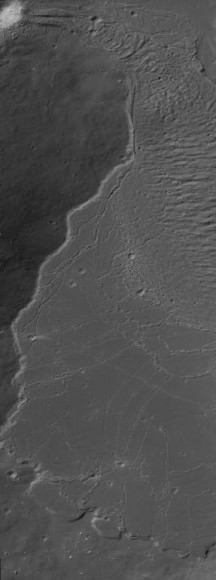

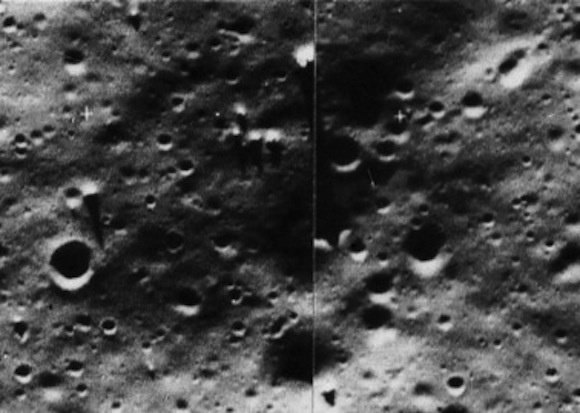

Alpes Sinuous Rille, an ancient channel formed as massive eruptions of very fluid lava poured across the surface of the Moon. Credit: NASA/GSFC/Arizona State University

Humans see in three dimensions because our eyes are set slightly apart and view the world from two different angles at once. Our brain then interprets the two images and combines them into a single three-dimensional view.

It’s fairly easy to create 3-D views from the Mars rovers like Curiosity and Opportunity, because they have mast cameras and navigation cameras which operate in pairs to provide stereo views of the Martian surface.

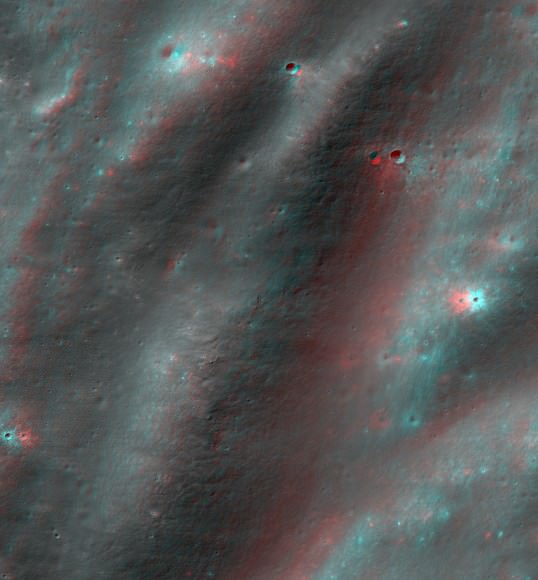

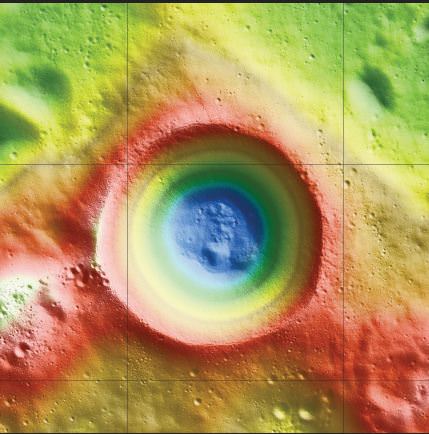

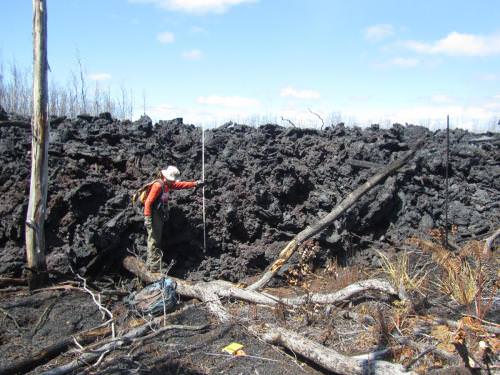

Ancient radial scars of ejecta extend out from the Orientale basin for hundreds of kilometers and consist of aligned craters and massive dune-like forms. They formed as streamers of lunar rock were thrown out by the Orientale impact and crashed back to the surface. Credit: NASA/GSFC/Arizona State University

But LRO orbits high above the Moon’s surface and can see from only one angle at a time. However, images taken on different orbits, from different angles, can be combined to reconstruct a view in three dimensions.

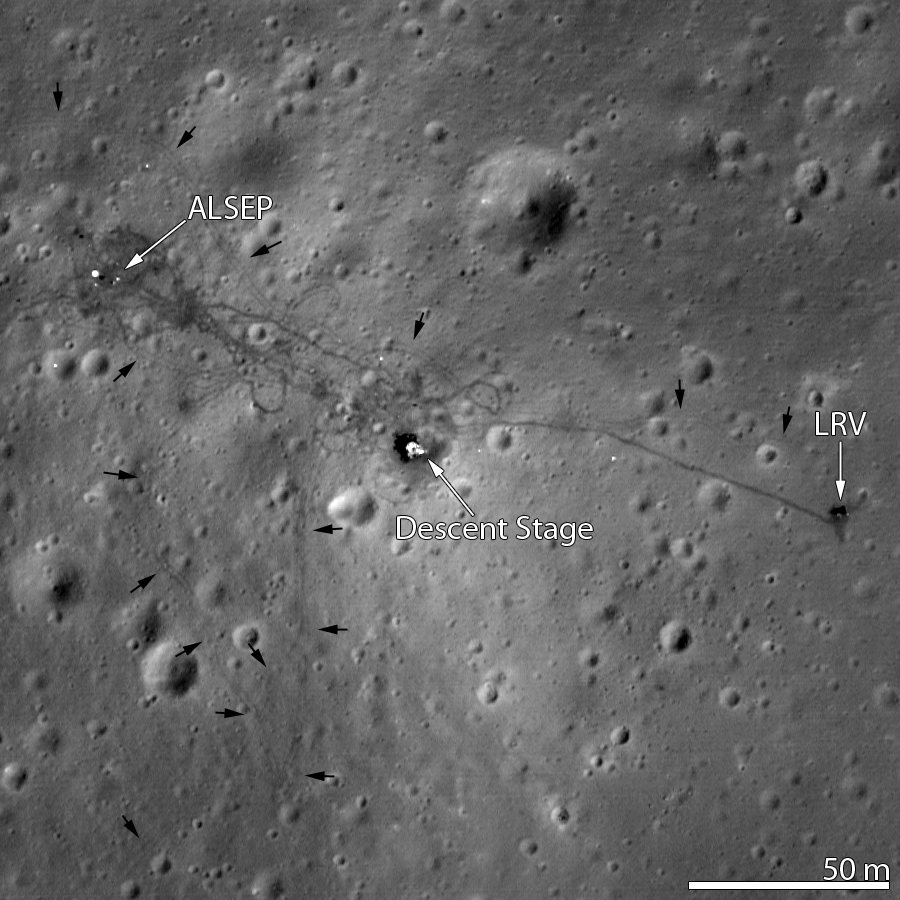

And this new system can automatically combine the disparate shots. The images here are a sample of what the team has created so far.

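This ‘brain’ is provided by a new initiative, presented by team member Sarah Mattson (University of Arizona) to the European Planetary Science Congress on 25 September. The team have developed an automatic processing system that aligns and adjusts images from LRO and combines them into images that can be viewed using red-cyan 3D glasses. This type of image is known as an anaglyph.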

“Anaglyphs are used to better understand the 3D structure of the lunar surface,” said Sarah Mattson, an LRO team member from the University of Arizona. “This visualization is extremely helpful to scientists in understanding the sequence and structures on the surface of the Moon in a qualitative way. LROC NAC anaglyphs will also make detailed images of the surface of the Moon accessible in 3D to the general public.”

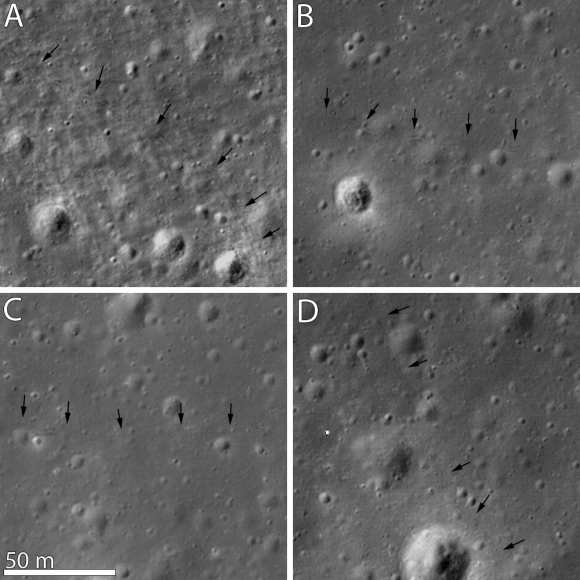

The Lunar Reconnaissance Orbiter Camera – Narrow Angle Camera (LROC NAC) has acquired hundreds of stereo pairs of the lunar surface, and is acquiring more as the mission progresses. The LROC NAC anaglyphs make lunar features such as craters, volcanic flows, lava tubes and tectonic features jump out in 3D. The anaglyphs will be released through the LROC website as they become available.

Mattson presented the new system at the European Planetary Science Congress on September 25.